This repository contains the infrastructure-as-code implementation and pipelines needed to provision the infrastructure that hosts OSDU on Azure.

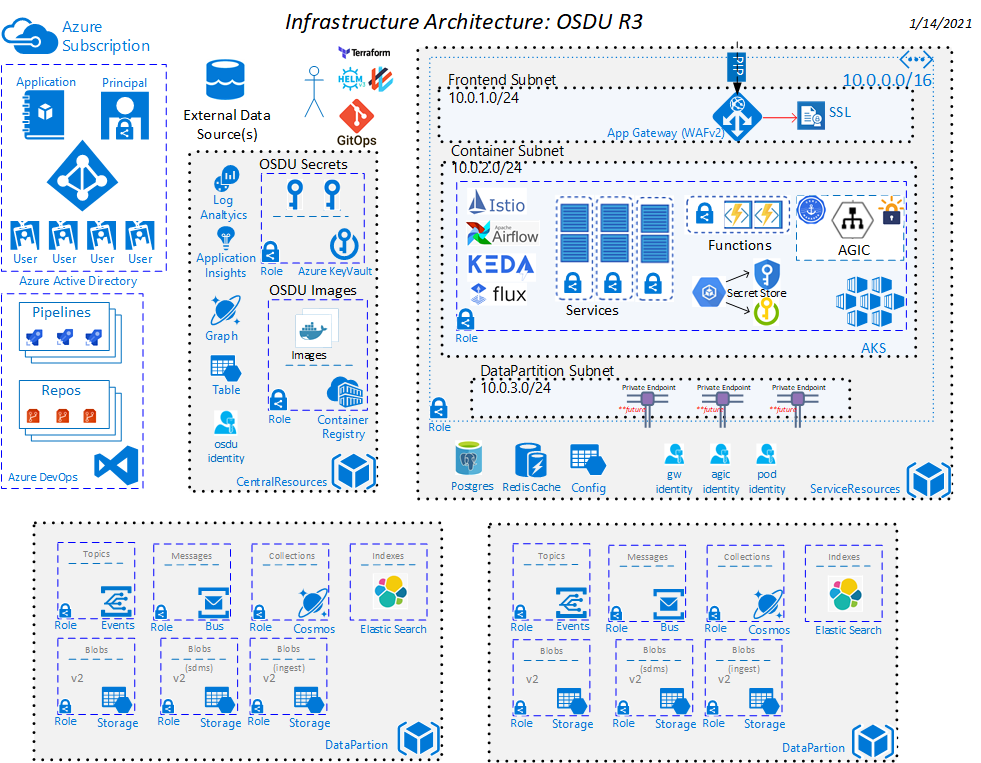

The osdu - R3 MVP Architecture solution template is intended to provision Managed Kubernetes resources like AKS and other core OSDU cloud managed services like Cosmos, Blob Storage and Keyvault.

Azure environment cost ballpark estimate. This is subject to change and is driven from the resource pricing tiers configured when the template is deployed.

- Azure Subscription

- Terraform and Go are locally installed.

- Requires the use of direnv.

- Install the required common tools (kubectl, helm, and terraform).

This document assumes one is running a current version of Ubuntu. Windows users can install the Ubuntu Terminal from the Microsoft Store. The Ubuntu Terminal enables Linux command-line utilities, including bash, ssh, and git that will be useful for the following deployment. Note: You will need the Windows Subsystem for Linux installed to use the Ubuntu Terminal on Windows.

Currently the versions in use are Terraform 0.14.4 and Go 1.12.14.

Note: Installing Terraform and Go with a Terraform Version Manager and a Go Version Manager is recommended.
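Before continuing, it may help to confirm that the prerequisite tools are on the PATH. The following is a minimal sketch, not part of the repository: the helper name missing_tools is hypothetical, and the tool list is taken from the prerequisites above.

```shell
# Print the name of each tool that is not found on PATH.
# missing_tools is a hypothetical helper, not something shipped in this repo.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

# Tool list taken from the prerequisites above.
missing=$(missing_tools terraform go direnv kubectl helm)
if [ -n "$missing" ]; then
  echo "Not found on PATH:" $missing
else
  echo "All prerequisite tools were found."
fi
```

This only checks that each command exists; it does not verify the installed versions.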

For information specific to your operating system, see the Azure CLI install guide. You can also use the single command install if running on a Unix based machine.

curl -sL https://aka.ms/InstallAzureCLIDeb | sudo bash

# Login to Azure CLI and ensure subscription is set to desired subscription

az login

az account set --subscription <your_subscription>

Role Documentation: Working with ADO organizations requires certain roles for the user. To perform these activities, a user must be able to create an ADO project in an organization and have administrator-level access to the project created.

Configure an Azure DevOps project in your organization called osdu-mvp and set the CLI to use the organization and project by default.

export ADO_ORGANIZATION=<organization_name>

export ADO_PROJECT=osdu-mvp

# Ensure the CLI extension is added

az extension add --name azure-devops

# Setup a Project Space in your organization

az devops project create --name $ADO_PROJECT --organization https://dev.azure.com/$ADO_ORGANIZATION/

# Configure the CLI Defaults to work with the organization and project

az devops configure --defaults organization=https://dev.azure.com/$ADO_ORGANIZATION project=$ADO_PROJECT

It is recommended to work with this repository in a WSL Ubuntu directory structure.

git clone git@community.opengroup.org:osdu/platform/deployment-and-operations/infra-azure-provisioning.git

cd infra-azure-provisioning

Create an empty git repository with a name that clearly signals that the repo is used for the Flux manifests, for example k8-gitops-manifests.

Flux requires that the git repository have at least one commit. Initialize the repo with an empty commit.

export ADO_REPO=k8-gitops-manifests

# Initialize a Git Repository

(mkdir k8-gitops-manifests \
  && cd k8-gitops-manifests \
  && git init \
  && git commit --allow-empty -m "Initializing the Flux Manifest Repository")
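Because Flux needs at least one commit in the repository, an optional check before pushing can confirm that the empty commit was created. This is a minimal sketch; the helper name has_commit is hypothetical, and the directory name is the one used above.

```shell
# Succeeds only if the repository at the given path has at least one commit.
# has_commit is a hypothetical helper used for illustration.
has_commit() {
  git -C "$1" rev-parse --verify --quiet HEAD >/dev/null 2>&1
}

if has_commit k8-gitops-manifests; then
  echo "k8-gitops-manifests has an initial commit."
else
  echo "k8-gitops-manifests has no commits yet (or does not exist)."
fi
```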

# Create an ADO Repo

az repos create --name $ADO_REPO

export GIT_REPO=git@ssh.dev.azure.com:v3/${ADO_ORGANIZATION}/${ADO_PROJECT}/k8-gitops-manifests

# Push the Git Repository

(cd k8-gitops-manifests \
  && git remote add origin $GIT_REPO \
  && git push -u origin --all)

For automated pipelines to work with this repository, the following permissions must be set in the ADO project under All Repositories/Permissions for the user osdu-mvp Build Service.

- Create Branch: Allow

- Contribute: Allow

- Contribute to Pull requests: Allow

Role Documentation: Provisioning Common Resources requires owner access to the subscription. However, the AD Service Principals that are created require an AD Admin to grant approval consent on them.

The common_prepare.sh script is a helper that sets up some of the common items the infrastructure needs.

- Ensure you are logged into the azure cli with the desired subscription set.

- Ensure you have the access to run az ad commands.

# Execute Script

export UNIQUE=demo

./infra/scripts/common_prepare.sh $(az account show --query id -otsv) $UNIQUE

Integration tests require 2 Azure AD user accounts, a tenant user and a guest user, to be set up for integration testing. This activity needs to be performed by someone who has access as an AD User Admin.

- ad-guest-email (ie: integration.test@email.com)

- ad-guest-oid (OID of the user)

- ad-user-email (ie: integration.test@{tenant}.onmicrosoft.com)

- ad-user-oid (OID of the user)

USER_EMAIL=""

USER_EMAIL_OID=""

GUEST_EMAIL=""

GUEST_EMAIL_OID=""

az keyvault secret set --vault-name $COMMON_VAULT --name "ad-user-email" --value $USER_EMAIL

az keyvault secret set --vault-name $COMMON_VAULT --name "ad-user-oid" --value $USER_EMAIL_OID

az keyvault secret set --vault-name $COMMON_VAULT --name "ad-guest-email" --value $GUEST_EMAIL

az keyvault secret set --vault-name $COMMON_VAULT --name "ad-guest-oid" --value $GUEST_EMAIL_OID

Istio configuration sets up a dashboard that requires admin credentials. Automation pipelines use settings from the common keyvault to apply the Istio dashboard default credentials.

ISTIO_USERNAME=""

ISTIO_PASSWORD=""

az keyvault secret set --vault-name $COMMON_VAULT --name "istio-username" --value $(echo $ISTIO_USERNAME |base64)

az keyvault secret set --vault-name $COMMON_VAULT --name "istio-password" --value $(echo $ISTIO_PASSWORD |base64)

Local Script Output Resources

The script creates some local files to be used.

- .envrc_{UNIQUE} -- This is a copy of the required environment variables for the common components.

- .envrc -- This file is used directly by direnv and requires direnv allow to be run to access variables.

- ~/.ssh/osdu_{UNIQUE}/azure-aks-gitops-ssh-key -- SSH key used by flux.

- ~/.ssh/osdu_{UNIQUE}/azure-aks-gitops-key.pub -- SSH Public Key used by flux.

- ~/.ssh/osdu_{UNIQUE}/azure-aks-node-ssh-key -- SSH Key used by AKS

- ~/.ssh/osdu_{UNIQUE}/azure-aks-node-ssh-key.pub -- SSH Public Key used by AKS

- ~/.ssh/osdu_{UNIQUE}/azure-aks-node-ssh-key.passphrase -- SSH Key Passphrase used by AKS
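To confirm that the key files listed above were written, a check along these lines could be used. It is a sketch under assumptions: the helper name expected_key_files is hypothetical, the file names are copied from the list above, and UNIQUE falls back to the demo value used earlier.

```shell
# Print the key file paths that the list above says are created for a given UNIQUE value.
# expected_key_files is a hypothetical helper used for illustration.
expected_key_files() {
  dir="$HOME/.ssh/osdu_$1"
  for f in azure-aks-gitops-ssh-key azure-aks-gitops-key.pub \
           azure-aks-node-ssh-key azure-aks-node-ssh-key.pub \
           azure-aks-node-ssh-key.passphrase; do
    echo "$dir/$f"
  done
}

# Report any expected file that is not present on disk.
expected_key_files "${UNIQUE:-demo}" | while read -r path; do
  [ -f "$path" ] || echo "missing: $path"
done
```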

Ensure environment variables are loaded

direnv allow
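After direnv has loaded the file, a quick check can confirm the variables used by later commands are set. This sketch assumes COMMON_VAULT is among the variables exported by .envrc, which the commands in this document imply but do not state; the helper name require_env is hypothetical.

```shell
# Return non-zero and report each named variable that is unset or empty.
# require_env is a hypothetical helper; names passed in are trusted literals.
require_env() {
  missing=0
  for name in "$@"; do
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Assumption: COMMON_VAULT is exported by .envrc.
require_env COMMON_VAULT || echo "Run 'direnv allow' in the repository directory and retry."
```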

Installed Azure Resources

- Resource Group

- Storage Account

- Key Vault

- A principal to be used by Terraform to create all resources for an OSDU Environment.

- A principal required by an OSDU environment deployment.

- An AD application to be leveraged that defines and controls access to the OSDU Environment for AD Identity.

- An AD application to be used for negative integration testing

- Assign "Azure Storage" "user_impersonation" api permission to the ad application

Removal would require deletion of all AD elements named osdu-mvp-{UNIQUE}-*, as well as unlocking and deleting the resource group.

The infrastructure requires a bring-your-own Elasticsearch instance running version 7.x (ie: 7.11.1) with a valid https endpoint, and the access information must be stored in the Common KeyVault. The recommended approach is to use the Elastic Cloud Managed Service from the Marketplace.

Note: Elastic Cloud Managed Service requires a Credit Card to be associated to the subscription for billing purposes.
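Since the endpoint must be a valid https URL, it can be worth checking the value before it is stored. The following is a minimal sketch: the helper name is_https_endpoint and the example URL are hypothetical, and the check only inspects the URL scheme, not whether the endpoint is reachable.

```shell
# Succeeds only if the argument starts with https:// followed by at least one character.
# is_https_endpoint is a hypothetical helper used for illustration.
is_https_endpoint() {
  case "$1" in
    https://?*) return 0 ;;
    *) return 1 ;;
  esac
}

# Placeholder value for illustration; substitute your own endpoint.
EXAMPLE_ENDPOINT="https://example.invalid:9243"
if is_https_endpoint "$EXAMPLE_ENDPOINT"; then
  echo "endpoint uses https"
else
  echo "endpoint must start with https://"
fi
```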

ES_ENDPOINT=""

ES_USERNAME=""

ES_PASSWORD=""

az keyvault secret set --vault-name $COMMON_VAULT --name "elastic-endpoint-dp1-${UNIQUE}" --value $ES_ENDPOINT

az keyvault secret set --vault-name $COMMON_VAULT --name "elastic-username-dp1-${UNIQUE}" --value $ES_USERNAME

az keyvault secret set --vault-name $COMMON_VAULT --name "elastic-password-dp1-${UNIQUE}" --value $ES_PASSWORD

cat >> .envrc << EOF

# https://cloud.elastic.co

# ------------------------------------------------------------------------------------------------------

export TF_VAR_elasticsearch_endpoint="$ES_ENDPOINT"

export TF_VAR_elasticsearch_username="$ES_USERNAME"

export TF_VAR_elasticsearch_password="$ES_PASSWORD"

EOF

cp .envrc .envrc_${UNIQUE}

The public key of the azure-aks-gitops-ssh-key created previously needs to be added as a deploy key in your Azure DevOps project. Follow these steps to add your public SSH key to your ADO environment.

# Retrieve the public key

az keyvault secret show \
  --id https://$COMMON_VAULT.vault.azure.net/secrets/azure-aks-gitops-ssh-key-pub \
  --query value \
  -otsv

There are 2 methods that can be chosen to perform installation at this point in time.

- Manual Installation -- Typically used when the desire is to manually make modifications to the environment and have full control over all updates and deployments.

- Pipeline Installation -- Typically used when the need is to only access the Data Platform but allow for automatic upgrades of infrastructure and services.

Manual Installation (Preferred)

This typically takes about 2 hours to complete.

- Install the Infrastructure following directions here.

- Setup DNS to point to the deployed infrastructure following directions here.

- Upload the Configuration Data following directions here.

- Deploy the application helm charts following the directions here.

- Upload the Test Data (Entitlements) following directions here.

- Register your partition with the Data Partition API by following the instructions here to configure your IDE to make authenticated requests to your OSDU instance and send the API request located here (createPartition).

- Initialize tenant provisioning by calling the POST {{ENTITLEMENTS_HOST}}/tenant-provisioning API found here.

- Load Service Data following directions here.

Automated Pipeline Installation

This typically takes about 3 hours to complete.

- Setup Code Mirroring following directions here.

- Setup Infrastructure Automation following directions here.

- Setup DNS to point to the deployed infrastructure following directions here.

- Upload the Configuration Data following directions here.

- Upload the Test Data (Entitlements) following directions here.

- Setup Service Automation following directions here.

- Setup Airflow DNS to point to the deployed Airflow in the data partition following directions here.

Steps to load TNO Data

AKS Upgrade Steps

AKS supports upgrading only one minor version at a time, i.e. 1.20 to 1.21. To check the compatible upgrade versions, use the following command:

az aks get-versions --location centralus --output table

Data Migration for Entitlements from Milestone 4(v0.7.0) or lower, to Milestone 5(v0.8.0) or higher

Milestone 5(v0.8.0) introduced a breaking change for Entitlements, which requires migrating data using the script here. The script should be run whenever you update an OSDU installation from a version lower than Milestone 5(v0.8.0) to Milestone 5(v0.8.0) or higher.

Migration scripts for Notification from Milestone 7(v0.10.0) or lower, to Milestone 8(v0.11.0) or higher

KEDA upgrade steps from Milestone 7(v0.10.0) or lower, to Milestone 8(v0.11.0) or higher (Not Mandatory)

Follow the steps in the link to enable policy based authorization.

- To onboard new services follow the process located here.

Backup is enabled by default. To set the backup policies, use the script here. The script should be run whenever you bring up a Resource Group in your deployment.