Amir Bar, Xin Wang, Vadim Kantorov, Colorado J Reed, Roei Herzig, Gal Chechik, Anna Rohrbach, Trevor Darrell, Amir Globerson

This repository is the implementation of DETReg.

- COCO training code and eval - DONE

- Pascal VOC training code and eval- TODO

- Pretrained models - TODO

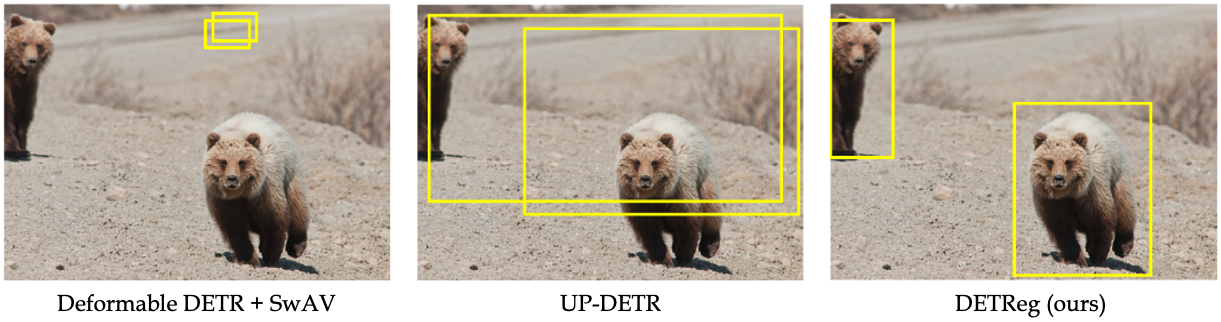

DETReg is an unsupervised pretraining approach for object DEtection with TRansformers using Region priors. Motivated by the two tasks underlying object detection: localization and categorization, we combine two complementary signals for self-supervision. For an object localization signal, we use pseudo ground truth object bounding boxes from an off-the-shelf unsupervised region proposal method, Selective Search, which does not require training data and can detect objects at a high recall rate and very low precision. The categorization signal comes from an object embedding loss that encourages invariant object representations, from which the object category can be inferred. We show how to combine these two signals to train the Deformable DETR detection architecture from large amounts of unlabeled data. DETReg improves the performance over competitive baselines and previous self-supervised methods on standard benchmarks like MS COCO and PASCAL VOC. DETReg also outperforms previous supervised and unsupervised baseline approaches on low-data regime when trained with only 1%, 2%, 5%, and 10% of the labeled data on MS COCO.

-

Linux, CUDA>=9.2, GCC>=5.4

-

Python>=3.7

We recommend you to use Anaconda to create a conda environment:

conda create -n detreg python=3.7 pip

Then, activate the environment:

conda activate detreg

Installation: (change cudatoolkit to your cuda version. For detailed pytorch installation instructions click here)

conda install pytorch==1.8.0 torchvision==0.9.0 torchaudio==0.8.0 cudatoolkit=10.2 -c pytorch

-

Other requirements

pip install -r requirements.txt

cd ./models/ops

sh ./make.sh

# unit test (should see all checking is True)

python test.pyPlease download COCO 2017 dataset and ImageNet and organize them as following:

code_root/

└── data/

├── ilsvrc/

├── train/

└── val/

└── MSCoco/

├── train2017/

├── val2017/

└── annotations/

├── instances_train2017.json

└── instances_val2017.json

Note that in this work we used the ImageNet100 dataset, which is x10 smaller than ImageNet. To create ImageNet100 run the following command:

mkdir -p data/ilsvrc100/train

mkdir -p data/ilsvrc100/val

while read line; do ln -s <code_root>/data/ilsvrc/train/$line <code_root>/data/ilsvrc100/train/$line; done < <code_root>/datasets/category.txt

while read line; do ln -s <code_root>/data/ilsvrc/val/$line <code_root>/data/ilsvrc100/val/$line; done < <code_root>/datasets/category.txtThis should results with the following structure:

code_root/

└── data/

├── ilsvrc/

├── train/

└── val/

├── ilsvrc100/

├── train/

└── val/

└── MSCoco/

├── train2017/

├── val2017/

└── annotations/

├── instances_train2017.json

└── instances_val2017.json

To create selective search boxes for ImageNet100 on a single machine, run the following command (set num_processes):

python -m datasets.cache_ss --dataset imagenet100 --part 0 --num_m 1 --num_p <num_processes_to_use> To speed up the creation of boxes, change the arguments accordingly and run the following command on each different machine:

python -m datasets.cache_ss --dataset imagenet100 --part <machine_number> --num_m <num_machines> --num_p <num_processes_to_use> The cached boxes are saved in the following structure:

code_root/

└── cache/

└── ilsvrc/

The command for pretraining DETReg on 8 GPUs on ImageNet100 is as following:

GPUS_PER_NODE=8 ./tools/run_dist_launch.sh 8 ./configs/DETReg_top30_in100.sh --batch_size 24 --num_workers 8Training takes around 1.5 days with 8 NVIDIA V100 GPUs.

After pretraining, a checkpoint is saved in exps/DETReg_top30_in100/checkpoint.pth. To fine tune it over different coco settings use the following commands:

Fine tuning on full COCO (should take 2 days with 8 NVIDIA V100 GPUs):

GPUS_PER_NODE=8 ./tools/run_dist_launch.sh 8 ./configs/DETReg_fine_tune_full_coco.shFor smaller subsets which trains faster, you can use smaller number of gpus (e.g 4 with batch size 2)/ Fine tuning on 1%

GPUS_PER_NODE=4 ./tools/run_dist_launch.sh 4 ./configs/DETReg_fine_tune_1pct_coco.sh --batch_size 2Fine tuning on 2%

GPUS_PER_NODE=4 ./tools/run_dist_launch.sh 4 ./configs/DETReg_fine_tune_2pct_coco.sh --batch_size 2Fine tuning on 5%

GPUS_PER_NODE=4 ./tools/run_dist_launch.sh 4 ./configs/DETReg_fine_tune_5pct_coco.sh --batch_size 2Fine tuning on 10%

GPUS_PER_NODE=4 ./tools/run_dist_launch.sh 4 ./configs/DETReg_fine_tune_10pct_coco.sh --batch_size 2To evaluate a finetuned model, use the following command from the project basedir:

./configs/<config file>.sh --resume exps/<config file>/checkpoint.pth --evalIf you found this code helpful, feel free to cite our work:

@misc{bar2021detreg,

title={DETReg: Unsupervised Pretraining with Region Priors for Object Detection},

author={Amir Bar and Xin Wang and Vadim Kantorov and Colorado J Reed and Roei Herzig and Gal Chechik and Anna Rohrbach and Trevor Darrell and Amir Globerson},

year={2021},

eprint={2106.04550},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

If you found DETReg useful, consider checking out these related works as well: ReSim, SwAV, DETR, UP-DETR, and Deformable DETR.

DETReg builds on previous works code base such as Deformable DETR and UP-DETR. If you found DETReg useful please consider citing these works as well.