Neural Point Cloud Rendering via Multi-Plane Projection (CVPR 2020)

Peng Dai*, Yinda Zhang*, Zhuwen Li*, Shuaicheng Liu, Bing Zeng.

Paper, Project page, Video

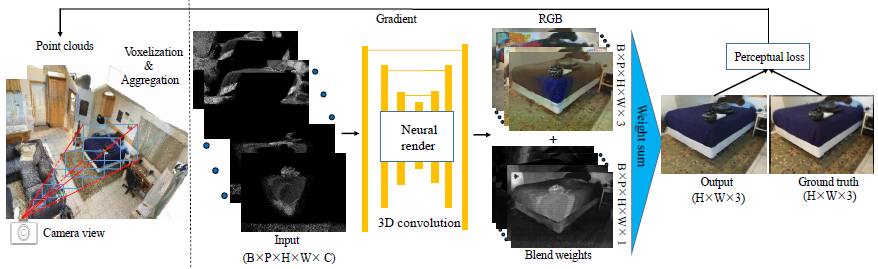

Our method consists of two parts: multi-plane-based voxelization (left) and multi-plane rendering (right). In the first part, the point cloud is re-projected into the camera coordinate system to form a frustum region, and voxelization and aggregation operations produce a multi-plane 3D representation. This representation is concatenated with the normalized view direction and sent to the render network. In the second part, the concatenated input is fed into a 3D neural render network that predicts a 4-channel output (RGB + blend weight) for each plane, and the final image is generated by blending all planes. Training is supervised by a perceptual loss, and both the network parameters and the point cloud features are optimized by gradient descent.
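The final blending step can be illustrated with a small NumPy sketch. It is not the repository's TensorFlow code: it assumes the network output is laid out as (planes, height, width, 4) with the blend weight in the last channel, and that the raw weights are normalized with a softmax across planes. Both choices are assumptions made for illustration.

```python
import numpy as np


def blend_planes(plane_outputs):
    """Blend per-plane predictions into one image (illustrative sketch).

    plane_outputs: array of shape (D, H, W, 4), one RGB + blend-weight
    prediction per depth plane; channel 3 holds the raw blend weight.
    Returns an (H, W, 3) image.
    """
    rgb = plane_outputs[..., :3]                  # (D, H, W, 3)
    logits = plane_outputs[..., 3:4]              # (D, H, W, 1)
    # Assumption: raw weights are normalized with a softmax across planes.
    logits = logits - logits.max(axis=0, keepdims=True)  # numerical stability
    weights = np.exp(logits)
    weights /= weights.sum(axis=0, keepdims=True)
    return (weights * rgb).sum(axis=0)            # weighted sum over planes


# Usage: 32 planes (the default plane count) on a tiny 4x5 image.
rng = np.random.default_rng(0)
image = blend_planes(rng.normal(size=(32, 4, 5, 4)))
print(image.shape)  # (4, 5, 3)
```

Because the weights sum to one at every pixel, each output pixel is a convex combination of the per-plane colors, and a plane with a dominant weight effectively "wins" that pixel.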

TensorFlow 1.10.0

Python 3.6

OpenCV

Download the datasets (i.e. ScanNet and Matterport3D), including RGB-D images and camera parameters, into the corresponding 'data/...' folders.

Download 'imagenet-vgg-verydeep-19.mat' into 'VGG_Model/'.

Several pre-processing steps are required before training. The pre-processed results are stored in 'pre_processing_results/[Matterport3D or ScanNet]/[scene_name]/'.

cd pre_processing/

Generate the point cloud file ('point_clouds.ply') from the registered RGB-D images by running

python generate_pointclouds_[ScanNet or Matterport].py

Before running it, specify the scene in 'generate_pointclouds_[ScanNet or Matterport].py' (e.g. set "scene = 'scene0010_00'" for ScanNet).
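Generating a point cloud from registered RGB-D frames amounts to back-projecting every pixel with valid depth through the camera intrinsics and then transforming it by the camera pose. The NumPy sketch below shows this for a single frame. It is a hypothetical helper, not the repository's script: the function name, the pinhole intrinsics layout, the 4x4 camera-to-world pose, and the default `depth_scale=1000.0` (depth stored in millimetres) are all assumptions.

```python
import numpy as np


def depth_to_world_points(depth, rgb, K, cam_to_world, depth_scale=1000.0):
    """Back-project one registered RGB-D frame into a colored world-space cloud.

    Hypothetical helper. depth: (H, W) raw depth map; depth_scale converts it
    to metres (assumed millimetres). rgb: (H, W, 3) image aligned with depth.
    K: (3, 3) pinhole intrinsics. cam_to_world: (4, 4) camera pose.
    Returns (N, 3) points and (N, 3) colors for pixels with valid depth.
    """
    h, w = depth.shape
    v, u = np.mgrid[0:h, 0:w]                     # pixel row / column grids
    z = depth.astype(np.float64) / depth_scale    # depth in metres
    valid = z > 0                                 # zero marks missing depth
    fx, fy, cx, cy = K[0, 0], K[1, 1], K[0, 2], K[1, 2]
    x = (u - cx) * z / fx                         # pinhole back-projection
    y = (v - cy) * z / fy
    cam_pts = np.stack(
        [x[valid], y[valid], z[valid], np.ones(valid.sum())], axis=1)
    world_pts = (cam_to_world @ cam_pts.T).T[:, :3]  # camera -> world
    return world_pts, rgb[valid]


# Usage: a 2x2 frame with one missing depth value and a pose shifted 5 m along z.
depth = np.array([[1000, 0], [2000, 1000]])
rgb = np.arange(12).reshape(2, 2, 3)
pose = np.eye(4)
pose[2, 3] = 5.0
pts, cols = depth_to_world_points(depth, rgb, np.eye(3), pose)
print(pts)
```

Points from all frames of a scene would then be concatenated and written out as a '.ply' file.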

The generated point cloud is then simplified by running

python pointclouds_simplification.py

In 'pointclouds_simplification.py', specify the dataset and scene whose 'point_clouds.ply' should be simplified (e.g. set "scene = 'ScanNet/scene0010_00'"). The simplified point cloud is saved as 'point_clouds_simplified.ply'.
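One common way to simplify a dense point cloud is voxel-grid downsampling: points are bucketed into cubes of a fixed edge length and each occupied cube is replaced by the average of its points. The sketch below illustrates that idea with NumPy. It is only an assumed stand-in, since the README does not say which simplification method 'pointclouds_simplification.py' uses, and the `voxel_size` value is hypothetical.

```python
import numpy as np


def voxel_downsample(points, colors, voxel_size=0.01):
    """Keep one averaged point per occupied voxel (illustrative stand-in).

    points, colors: (N, 3) arrays. voxel_size: cube edge length in the same
    units as points (hypothetical default of 1 cm).
    """
    keys = np.floor(points / voxel_size).astype(np.int64)  # integer voxel coords
    _, inverse, counts = np.unique(
        keys, axis=0, return_inverse=True, return_counts=True)
    inverse = inverse.reshape(-1)  # shape differs across some NumPy versions
    n = counts.shape[0]
    pts_sum = np.zeros((n, 3))
    col_sum = np.zeros((n, 3))
    np.add.at(pts_sum, inverse, points)   # accumulate per-voxel sums
    np.add.at(col_sum, inverse, colors)
    return pts_sum / counts[:, None], col_sum / counts[:, None]


# Usage: the first two points share a 1 cm voxel and are merged.
points = np.array([[0.0, 0.0, 0.0], [0.001, 0.0, 0.0], [0.5, 0.5, 0.5]])
colors = np.array([[0.0, 0.0, 0.0], [2.0, 2.0, 2.0], [9.0, 9.0, 9.0]])
simplified_pts, simplified_cols = voxel_downsample(points, colors)
print(len(simplified_pts))  # 2
```

Averaging (rather than keeping an arbitrary representative point) keeps the simplified cloud centered on the original surface.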

To save training time, the point clouds are voxelized and aggregated in advance by running

python voxelization_aggregation_[ScanNet or Matterport].py

This pre-computes the voxelization and aggregation information for each camera and saves it in 'reproject_results_32/' and 'weight_32/', respectively (32 planes by default). As before, specify the scene in 'voxelization_aggregation_[ScanNet or Matterport].py' (e.g. set "scene = 'scene0010_00'" for ScanNet).
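The per-camera voxelization can be sketched as follows: points are transformed into the camera frame, projected to pixels, and assigned to one of the depth planes of the view frustum. Points that fall into the same (plane, row, column) voxel are then aggregated with weights. This NumPy sketch is hypothetical: the function name, the `near`/`far` bounds, uniform plane spacing in depth, and equal aggregation weights (a plain average) are assumptions, not the repository's exact scheme.

```python
import numpy as np


def multiplane_voxelize(points, K, world_to_cam, height, width,
                        num_planes=32, near=0.1, far=10.0):
    """Assign points to (plane, row, col) voxels of a camera frustum (sketch).

    Assumptions: planes are spaced uniformly in depth between `near` and
    `far`, and points sharing a voxel get equal aggregation weights.
    Returns voxel indices (M, 3), weights (M,), and indices of kept points.
    """
    pts_h = np.concatenate([points, np.ones((len(points), 1))], axis=1)
    cam = (world_to_cam @ pts_h.T).T[:, :3]        # camera coordinates
    z = cam[:, 2]
    in_depth = (z > near) & (z < far)
    uvw = (K @ cam.T).T                            # homogeneous pixel coords
    with np.errstate(divide='ignore', invalid='ignore'):
        u = np.floor(uvw[:, 0] / z)
        v = np.floor(uvw[:, 1] / z)
    in_image = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    keep = np.nonzero(in_depth & in_image)[0]      # points inside the frustum
    plane = np.floor((z[keep] - near) / (far - near) * num_planes).astype(np.int64)
    plane = np.clip(plane, 0, num_planes - 1)
    idx = np.stack(
        [plane, v[keep].astype(np.int64), u[keep].astype(np.int64)], axis=1)
    # Equal weights within each voxel, so aggregated features are averages.
    _, inverse, counts = np.unique(
        idx, axis=0, return_inverse=True, return_counts=True)
    weights = 1.0 / counts[inverse.reshape(-1)]
    return idx, weights, keep


# Usage: the first two points share a voxel, the last two leave the frustum.
K = np.array([[1.0, 0.0, 2.0], [0.0, 1.0, 2.0], [0.0, 0.0, 1.0]])
pts = np.array([[0.0, 0.0, 1.0], [0.1, 0.1, 1.0], [0.0, 0.0, 5.0],
                [0.0, 0.0, 20.0], [100.0, 0.0, 1.0]])
idx, weights, keep = multiplane_voxelize(pts, K, np.eye(4), height=4, width=4)
print(idx, weights, keep)
```

Because this step depends only on the point positions and camera poses, not on the learned descriptors, it can be computed once per camera and cached, which is why it is run before training.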

To train the model, just run python npcr_ScanNet.py for ScanNet and python npcr_Matterport3D.py for Matterport3D.

Set 'is_training=True' and provide the paths of the training files (i.e. RGB images, camera parameters, the simplified point cloud file, and the pre-processed aggregation and voxelization information) by specifying the scene name in 'npcr_[ScanNet or Matterport3D].py' (e.g. set "scene = 'scene0010_00'" for ScanNet).

The trained model (i.e. checkpoint files) and optimized point descriptors (i.e. 'descriptor.mat') will be saved in '[ScanNet or Matterport3D]npcr[scene_name]/'.

To test the model, also run python npcr_ScanNet.py for ScanNet and python npcr_Matterport3D.py for Matterport3D.

Set 'is_training=False' and provide the paths of the test files (i.e. checkpoint files, optimized point descriptors, camera parameters, the simplified point cloud file, and the pre-processed aggregation and voxelization information) by specifying the scene name in 'npcr_[ScanNet or Matterport3D].py' (e.g. set "scene = 'scene0010_00'" for ScanNet).

The test results will be saved in '[ScanNet or Matterport3D]npcr[scene_name]/Test_Result/'.

The point cloud files and pre-trained models can be downloaded here. Please follow the licenses of ScanNet and Matterport3D.

If you have questions, you can email me (daipengwa@gmail.com).

If you use our code or method in your work, please cite the following:

@inproceedings{dai2020neural,
  title={Neural point cloud rendering via multi-plane projection},
  author={Dai, Peng and Zhang, Yinda and Li, Zhuwen and Liu, Shuaicheng and Zeng, Bing},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={7830--7839},
  year={2020}
}