TiKV is an open-source, distributed, and transactional key-value database. Unlike other traditional NoSQL systems, TiKV not only provides classical key-value APIs, but also transactional APIs with ACID compliance. Built in Rust and powered by Raft, TiKV was originally created to complement TiDB, a distributed HTAP database compatible with the MySQL protocol.

The design of TiKV ('Ti' stands for titanium) is inspired by several distributed systems from Google, such as BigTable, Spanner, and Percolator, and by recent academic work such as the Raft consensus algorithm.

If you're interested in contributing to TiKV, or want to build it from source, see CONTRIBUTING.md.

TiKV is a graduated project of the Cloud Native Computing Foundation (CNCF). If you are an organization that wants to help shape the evolution of technologies that are container-packaged, dynamically-scheduled and microservices-oriented, consider joining the CNCF. For details about who's involved and how TiKV plays a role, read the CNCF announcement.

With the implementation of the Raft consensus algorithm in Rust and consensus state stored in RocksDB, TiKV guarantees data consistency. Placement Driver (PD), which is introduced to implement auto-sharding, enables automatic data migration. The transaction model is similar to Google's Percolator with some performance improvements. TiKV also provides snapshot isolation (SI), snapshot isolation with lock (SQL: SELECT ... FOR UPDATE), and externally consistent reads and writes in distributed transactions.
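To make the snapshot isolation guarantee above more concrete, here is a minimal single-process sketch in Rust. It is not TiKV's implementation: it leaves out Percolator's locks and two-phase commit, Raft replication, and RocksDB, and the names `MvccStore`, `alloc_ts`, `get`, and `commit` are hypothetical. It only illustrates the semantics: a reader sees the newest version committed at or before its start timestamp, and a writer aborts if another transaction committed to the same key after that start timestamp.

```rust
use std::collections::{BTreeMap, HashMap};

/// Toy multi-version store: key -> (commit timestamp -> value).
/// Hypothetical type for illustration only.
#[derive(Default)]
struct MvccStore {
    versions: HashMap<String, BTreeMap<u64, String>>,
    next_ts: u64,
}

impl MvccStore {
    /// Hand out a strictly increasing timestamp
    /// (a stand-in for a central timestamp allocator).
    fn alloc_ts(&mut self) -> u64 {
        self.next_ts += 1;
        self.next_ts
    }

    /// Snapshot read: the newest version committed at or before `start_ts`.
    fn get(&self, key: &str, start_ts: u64) -> Option<&String> {
        self.versions
            .get(key)?
            .range(..=start_ts)
            .next_back()
            .map(|(_, value)| value)
    }

    /// Commit buffered writes. Fails if any written key already has a
    /// version committed after `start_ts` (first committer wins).
    fn commit(&mut self, start_ts: u64, writes: &[(&str, &str)]) -> Result<u64, String> {
        for (key, _) in writes {
            if let Some(history) = self.versions.get(*key) {
                if let Some(&latest) = history.keys().next_back() {
                    if latest > start_ts {
                        return Err(format!("write conflict on {}", key));
                    }
                }
            }
        }
        let commit_ts = self.alloc_ts();
        for (key, value) in writes {
            self.versions
                .entry(key.to_string())
                .or_default()
                .insert(commit_ts, value.to_string());
        }
        Ok(commit_ts)
    }
}
```

For example, if two transactions take their start timestamps before either of them writes key `k`, the first to call `commit` succeeds and the second receives a write-conflict error, while a reader using the older timestamp keeps seeing the old value.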

TiKV has the following key features:

- Geo-Replication: TiKV uses Raft and the Placement Driver to support Geo-Replication.

- Horizontal scalability: With PD and carefully designed Raft groups, TiKV excels in horizontal scalability and can easily scale to 100+ TB of data.

- Consistent distributed transactions: Similar to Google's Spanner, TiKV supports externally consistent distributed transactions.

- Coprocessor support: Similar to HBase, TiKV implements a coprocessor framework to support distributed computing.

- Cooperates with TiDB: Thanks to internal optimization, TiKV and TiDB work together as a compelling database solution with high horizontal scalability, externally consistent transactions, and support for both RDBMS and NoSQL design patterns.
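Several of the features above rest on Raft groups. As a rough illustration of why the replica count matters when replicas are spread across data centers, the sketch below computes Raft's majority quorum. The function names are hypothetical and are not part of TiKV; the only fact assumed is that Raft treats a write as committed once a majority of the group's replicas have acknowledged it.

```rust
/// Smallest number of replicas that forms a Raft majority.
fn majority(replicas: usize) -> usize {
    replicas / 2 + 1
}

/// How many replicas may be unavailable while the group can still commit.
fn tolerated_failures(replicas: usize) -> usize {
    replicas.saturating_sub(majority(replicas))
}

/// Whether a write is committed, given the number of acknowledgements
/// received from each data center (hypothetical input shape).
fn is_committed(acks_per_dc: &[usize], replicas: usize) -> bool {
    acks_per_dc.iter().sum::<usize>() >= majority(replicas)
}
```

With five replicas spread over three data centers, `majority(5)` is 3, so losing any two replicas still leaves the group able to commit.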

See Governance.

For instructions on deployment, configuration, and maintenance of TiKV, see the TiKV documentation on our website. For more details on the concepts and designs behind TiKV, see Deep Dive TiKV.

Note:

We have migrated our documentation from TiKV's wiki page to the official website. The original wiki page is discontinued. If you have any suggestions or issues regarding the documentation, offer your feedback here.

You can view the list of TiKV Adopters.

You can see the TiKV Roadmap.

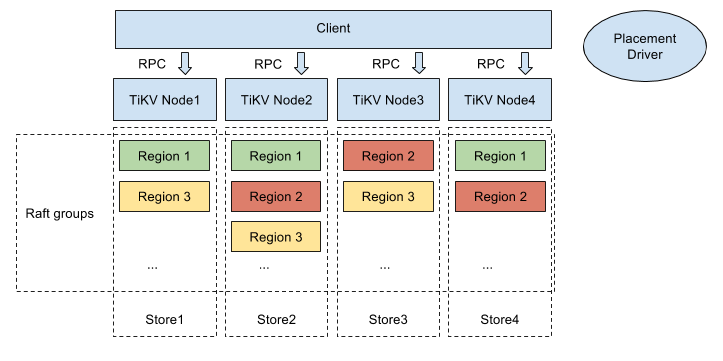

- Placement Driver: PD is the cluster manager of TiKV, which periodically checks replication constraints to balance load and data automatically.

- Store: Each Store contains a RocksDB instance, which stores data on the local disk.

- Region: Region is the basic unit of Key-Value data movement. Each Region is replicated to multiple Nodes. These multiple replicas form a Raft group.

- Node: A physical node in the cluster. Within each node, there are one or more Stores. Within each Store, there are many Regions.

When a node starts, the metadata of the Node, Store, and Region is recorded in PD. The status of each Region and Store is reported to PD regularly.
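The relationship between Nodes, Stores, Regions, and PD described above can be sketched as a small data model. This is an illustrative toy, not TiKV's or PD's actual types or API: `Region`, `Store`, `PlacementDriver`, and every method name here are hypothetical. It also assumes that a Region covers a key range with an inclusive start and an exclusive end, and that an empty end key means "no upper bound".

```rust
use std::collections::BTreeMap;
use std::ops::Bound;

/// A contiguous key range replicated across several Stores (hypothetical shape).
#[derive(Debug, Clone, PartialEq)]
struct Region {
    id: u64,
    start_key: Vec<u8>,  // inclusive
    end_key: Vec<u8>,    // exclusive; empty is assumed to mean "no upper bound"
    store_ids: Vec<u64>, // Stores holding a replica; together they form one Raft group
}

/// A Store lives on exactly one Node.
#[derive(Debug, Clone, PartialEq)]
struct Store {
    id: u64,
    node_id: u64,
}

/// Toy stand-in for the metadata that PD records.
#[derive(Default)]
struct PlacementDriver {
    stores: BTreeMap<u64, Store>,
    regions: BTreeMap<Vec<u8>, Region>, // indexed by start_key
}

impl PlacementDriver {
    /// Record (or refresh) a Store's metadata.
    fn report_store(&mut self, store: Store) {
        self.stores.insert(store.id, store);
    }

    /// Record (or refresh) a Region's metadata.
    fn report_region(&mut self, region: Region) {
        self.regions.insert(region.start_key.clone(), region);
    }

    /// Find the Region whose key range contains `key`.
    fn locate(&self, key: &[u8]) -> Option<&Region> {
        // Last Region whose start_key is <= key.
        let bounds: (Bound<&[u8]>, Bound<&[u8]>) = (Bound::Unbounded, Bound::Included(key));
        let (_, region) = self.regions.range::<[u8], _>(bounds).next_back()?;
        if region.end_key.is_empty() || key < region.end_key.as_slice() {
            Some(region)
        } else {
            None
        }
    }

    /// Nodes that host a replica of the Region containing `key`.
    fn nodes_for(&self, key: &[u8]) -> Vec<u64> {
        match self.locate(key) {
            Some(region) => region
                .store_ids
                .iter()
                .filter_map(|id| self.stores.get(id).map(|s| s.node_id))
                .collect(),
            None => Vec::new(),
        }
    }
}
```

Indexing Regions by start key in an ordered map keeps the lookup to a single range query, which is one plausible way a client could route a key to the right Raft group.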

TiKV was originally a component of TiDB. To run TiKV, you must build and run it with PD, which manages the TiKV cluster. You can use TiKV together with TiDB or on its own.

We provide multiple deployment methods, but we recommend the Ansible deployment for production environments. The TiKV documentation is available on TiKV's website.

- You can use tidb-docker-compose to quickly test TiKV and TiDB on a single machine. This is the easiest way. For other ways, see TiDB documentation.

- Try TiKV separately:

- Deploy TiKV Using Docker Stack: To quickly test TiKV separately without TiDB on a single machine

- Deploy TiKV Using Docker: To deploy a multi-node TiKV testing cluster using Docker

- Deploy TiKV Using Binary Files: To deploy a TiKV cluster using binary files on a single node or on multiple nodes

For the production environment, use TiDB Ansible to deploy the cluster.

Currently, the interfaces to TiKV are the TiDB Go client and the TiSpark Java client.

These are the clients for TiKV:

If you want to try the Go client, see Go Client.

The TiKV team meets on the 4th Wednesday of every month (unless otherwise specified) at 7:00 p.m. PST (Time zone converter).

Quick links:

A third-party security audit was performed by Cure53. See the full report here.

To report a security vulnerability, please send an email to the TiKV-security group.

See Security for the process and policy followed by the TiKV project.

Communication within the TiKV community abides by the TiKV Code of Conduct. Here is an excerpt:

In the interest of fostering an open and welcoming environment, we as contributors and maintainers pledge to making participation in our project and our community a harassment-free experience for everyone, regardless of age, body size, disability, ethnicity, sex characteristics, gender identity and expression, level of experience, education, socio-economic status, nationality, personal appearance, race, religion, or sexual identity and orientation.

- Blog

- Post questions or help answer them on Stack Overflow

Join the TiKV community on Slack - Sign up and join channels on TiKV topics that interest you.

The TiKV community is also available on WeChat. If you want to join our WeChat group, send a request email to zhangyanqing@pingcap.com with the following personal information:

- WeChat ID (Required)

- A contribution you've made to TiKV, such as a PR (Required)

- Other basic information

We will invite you right away.

TiKV is under the Apache 2.0 license. See the LICENSE file for details.

- Thanks to etcd for providing some great open source tools.

- Thanks to RocksDB for their powerful storage engine.

- Thanks to rust-clippy. We love this great project.