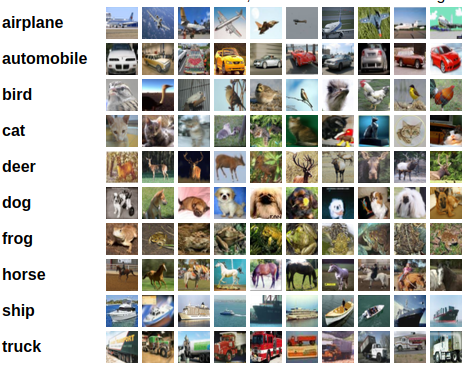

This repository contains implementations of several CNN architectures for CIFAR-10.

All of the models are implemented with Keras and TensorFlow.

(I may add a Torch/PyTorch version if I have time.)

Requirements:

- Python (3.5.2)

- Keras (2.1.2)

- tensorflow-gpu (1.4.1)

Implemented architectures:

- The first CNN model: LeNet

- Network in Network

- Vgg19 Network

- Residual Network

- Wide Residual Network

- ResNeXt

- DenseNet

- SENet

There are also some documents and tutorials in doc and issues/3.

Feel free to use them if you need them. 😄

| network | dropout | preprocess | GPU | params | training time | accuracy(%) |
|---|---|---|---|---|---|---|
| Lecun-Network | - | meanstd | GTX980TI | 62k | 30 min | 76.27 |
| Network-in-Network | 0.5 | meanstd | GTX1060 | 0.96M | 1 h 30 min | 91.25 |
| Network-in-Network_bn | 0.5 | meanstd | GTX980TI | 0.97M | 2 h 20 min | 91.75 |
| Vgg19-Network | 0.5 | meanstd | GTX980TI | 39M | 4 hours | 93.53 |
| Residual-Network110 | - | meanstd | GTX980TI | 1.7M | 8 h 58 min | 94.10 |
| Wide-resnet 16x8 | - | meanstd | GTX1060 | 11.3M | 11 h 32 min | 95.14 |
| DenseNet-100x12 | - | meanstd | GTX980TI | 0.85M | 30 h 40 min | 95.15 |
| ResNeXt-4x64d | - | meanstd | GTX1080TI | 20M | 22 h 50 min | 95.51 |
| SENet(ResNeXt-4x64d) | - | meanstd | GTX1080 | 20M | - | - |
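The `meanstd` entry in the preprocess column is not spelled out here. A minimal sketch, assuming it means per-channel standardization with statistics computed on the training set only, could look like the following. The function name `meanstd_normalize` and the random stand-in data are hypothetical and only serve the illustration.

```python
import numpy as np


def meanstd_normalize(x_train, x_test):
    """Standardize images per channel using training-set statistics.

    Assumes arrays of shape (N, height, width, channels).
    """
    x_train = x_train.astype("float32")
    x_test = x_test.astype("float32")
    # One mean and one standard deviation per colour channel.
    mean = x_train.mean(axis=(0, 1, 2))
    std = x_train.std(axis=(0, 1, 2))
    # The test set reuses the training statistics.
    return (x_train - mean) / std, (x_test - mean) / std


# Random stand-in data with the CIFAR-10 image shape (not the real dataset).
rng = np.random.RandomState(0)
fake_train = rng.randint(0, 256, size=(8, 32, 32, 3)).astype("uint8")
fake_test = rng.randint(0, 256, size=(4, 32, 32, 3)).astype("uint8")
train_n, test_n = meanstd_normalize(fake_train, fake_test)
```

After this step each channel of the training set has approximately zero mean and unit variance, while the test set is only approximately standardized because it reuses the training statistics.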

I have since fixed some bugs and retrained all of the following models on a GTX1080TI.

In particular:

- Change the batch size according to your GPU's memory.

- Modifying the learning rate schedule may improve the accuracy!

| network | GPU | params | batch size | epoch | training time | accuracy(%) |
|---|---|---|---|---|---|---|
| Lecun-Network | GTX1080TI | 62k | 128 | 200 | 30 min | 76.25 |
| Network-in-Network | GTX1080TI | 0.97M | 128 | 200 | 1 h 40 min | 91.63 |
| Vgg19-Network | GTX1080TI | 39M | 128 | 200 | 1 h 53 min | 93.53 |
| Residual-Network20 | GTX1080TI | 0.27M | 128 | 200 | 44 min | 91.82 |
| Residual-Network32 | GTX1080TI | 0.47M | 128 | 200 | 1 h 7 min | 92.68 |
| Residual-Network50 | GTX1080TI | 1.7M | 128 | 200 | 1 h 42 min | 93.18 |
| Residual-Network110 | GTX1080TI | 1.7M | 128 | 200 | 3 h 38 min | 93.93 |
| Wide-resnet 16x8 | GTX1080TI | 11.3M | 128 | 200 | 4 h 55 min | 95.13 |
| DenseNet-100x12 | GTX1080TI | 0.85M | 64 | 250 | 17 h 20 min | 94.91 |
| DenseNet-100x24 | GTX1080TI | 3.3M | 64 | 250 | 22 h 27 min | 95.30 |
| DenseNet-160x24 | 1080 x 2 | 7.5M | 64 | 250 | 50 h 20 min | 95.90 |
| ResNeXt-4x64d | GTX1080TI | 20M | 120 | 250 | 21 h 3 min | 95.19 |
| SENet(ResNeXt-4x64d) | GTX1080TI | 20M | 120 | 250 | 21 h 57 min | 95.60 |
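To make the params column concrete, here is a rough sketch that counts the weights of a LeNet-style network by hand. It assumes the classic layout (two 5x5 valid convolutions with 6 and 16 filters, each followed by 2x2 pooling, then dense layers of 120, 84 and 10 units) on 32x32x3 inputs; the model in this repository may differ in details, and the helper names are hypothetical.

```python
def conv_params(kernel, in_channels, out_channels):
    """Weights plus biases of a square convolution layer."""
    return kernel * kernel * in_channels * out_channels + out_channels


def dense_params(in_units, out_units):
    """Weights plus biases of a fully connected layer."""
    return in_units * out_units + out_units


def lenet_params(input_size=32, input_channels=3, num_classes=10):
    """Count parameters of the assumed LeNet-style layout."""
    size = input_size
    total = conv_params(5, input_channels, 6)
    size = (size - 4) // 2      # 5x5 valid conv, then 2x2 pooling: 32 -> 28 -> 14
    total += conv_params(5, 6, 16)
    size = (size - 4) // 2      # 14 -> 10 -> 5
    flat = size * size * 16     # flattened feature vector: 5 * 5 * 16 = 400
    total += dense_params(flat, 120)
    total += dense_params(120, 84)
    total += dense_params(84, num_classes)
    return total


print(lenet_params())  # 62006
```

Under these assumptions the count comes to 62,006, which is in line with the 62k listed for Lecun-Network.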

Different learning rate schedules may give different training/testing accuracy!

The original paper starts with a learning rate of 0.1 and divides it by 10 at epochs 81 and 122 (200 epochs in total).

I ran some experiments with other schedules; see ResNet_CIFAR for more details.
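The step schedule described above can be sketched as a small Python function. This is only an illustration, not necessarily the code used in this repository: the name `make_step_schedule` is hypothetical, and it assumes epochs are counted from 0, so the first drop happens when the epoch index reaches 81.

```python
def make_step_schedule(base_lr=0.1, milestones=(81, 122), factor=0.1):
    """Build a function that maps an epoch index to a learning rate.

    The rate starts at base_lr and is multiplied by factor once for every
    milestone that the (0-indexed, assumed) epoch has reached.
    """
    def schedule(epoch):
        passed = sum(1 for m in milestones if epoch >= m)
        return base_lr * factor ** passed

    return schedule


# Schedule from the paper: 0.1, then /10 at epoch 81 and again at epoch 122.
paper_schedule = make_step_schedule()
for epoch in (0, 80, 81, 121, 122, 199):
    print(epoch, round(paper_schedule(epoch), 6))

# Other milestone pairs, such as (100, 150), only change the arguments.
late_schedule = make_step_schedule(milestones=(100, 150))
```

Because the returned function takes a single epoch argument, it should be usable with `keras.callbacks.LearningRateScheduler`, which calls the schedule at the start of each epoch.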

| network | start learning rate | learning rate decay | epoch | batch size | accuracy(%) |
|---|---|---|---|---|---|
| Residual-Network20 | 0.1 | [81,122] | 200 | 128 | 91.82 |
| Residual-Network32 | 0.1 | [81,122] | 200 | 128 | 92.68 |
| Residual-Network50 | 0.1 | [81,122] | 200 | 128 | 93.18 |
| Residual-Network110 | 0.1 | [81,122] | 200 | 128 | 93.93 |
| - | 0.1 | - | - | - | - |
| Residual-Network20 | 0.1 | [100,150] | 200 | 128 | 92.02 |
| Residual-Network32 | 0.1 | [100,150] | 200 | 128 | 92.53 |
| Residual-Network50 | 0.1 | [100,150] | 200 | 128 | 93.25 |
| Residual-Network110 | 0.1 | [100,150] | 200 | 128 | 93.61 |
| - | 0.1 | - | - | - | - |
| Residual-Network20 | 0.1 | [150,225] | 300 | 128 | 91.95 |
| Residual-Network32 | 0.1 | [150,225] | 300 | 128 | 93.07 |
| Residual-Network50 | 0.1 | [150,225] | 300 | 128 | 93.12 |
| Residual-Network110 | 0.1 | [150,225] | 300 | 128 | 94.13 |

Since I don't have enough machines to train the larger networks, I only trained the smallest network described in the paper. You can see the results in liuzhuang13/DenseNet and prlz77/ResNeXt.pytorch.

Please feel free to contact me if you have any questions! 😸