⭐ Star us on GitHub — it helps!!

PyTorch implementation for Interpretable Explanations of Black Boxes by Meaningful Perturbation

You will need a machine with a GPU and CUDA installed.

Then prepare the runtime environment:

    pip install -r requirements.txt

This code is based on the ImageNet dataset.
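Once the requirements are installed, a quick check like the one below can confirm that PyTorch sees the GPU. This is only a sketch: the helper name `cuda_status` and its return strings are made up for illustration and are not part of this repository.

```python
import importlib.util


def cuda_status():
    """Report whether PyTorch is importable and whether it can see a CUDA device.

    Hypothetical helper for illustration; not part of this repository.
    """
    # Avoid an ImportError if the requirements have not been installed yet.
    if importlib.util.find_spec("torch") is None:
        return "torch-missing"
    import torch

    # torch.cuda.is_available() is True only when a usable CUDA device is found.
    return "cuda" if torch.cuda.is_available() else "cpu-only"


print(cuda_status())
```

If this prints anything other than `cuda`, check the GPU driver and CUDA installation before running `main.py`.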

    python main.py --model_path=vgg19 --img_path=examples/catdog.png

Arguments:

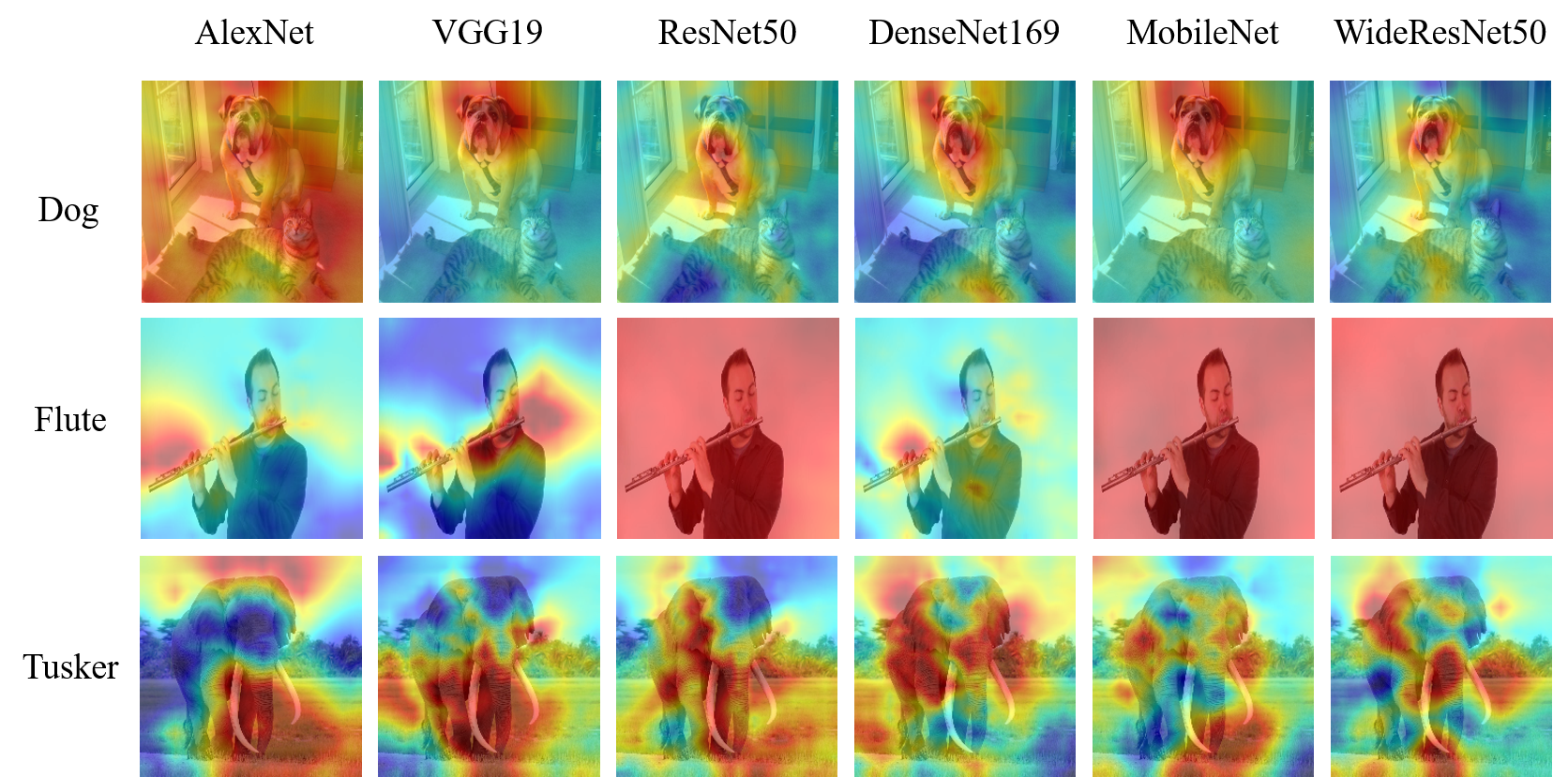

- model_path - Choose a pretrained model from torchvision.models or a saved model (.pt)
  - Examples of available models: ['alexnet', 'vgg19', 'resnet50', 'densenet169', 'mobilenet_v2', 'wide_resnet50_2', ...]
- img_path - Image path
- perturb - Perturbation method (blur, noise)
- tv_coeff - Coefficient of the total variation (TV) term
- tv_beta - TV beta value
- l1_coeff - Coefficient of the L1 regularization term
- factor - Upsampling factor
- lr - Learning rate
- iter - Number of iterations
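To show how these arguments could be wired up on the command line, here is a minimal `argparse` sketch. It is not the repository's actual parser: the function name `build_parser`, the argument types, and the decision to leave the numeric options without defaults are assumptions. Only the option names and the two example values (`vgg19`, `examples/catdog.png`) come from this README.

```python
import argparse


def build_parser():
    """Hypothetical parser mirroring the argument names listed in this README."""
    parser = argparse.ArgumentParser(description="Meaningful perturbation (sketch)")
    # Defaults below are taken from the example command in this README.
    parser.add_argument("--model_path", type=str, default="vgg19",
                        help="torchvision.models name or path to a saved .pt model")
    parser.add_argument("--img_path", type=str, default="examples/catdog.png",
                        help="path to the input image")
    parser.add_argument("--perturb", choices=["blur", "noise"],
                        help="perturbation method")
    # Types are assumed; the real defaults live in main.py and are not shown here,
    # so these options stay None unless they are passed explicitly.
    parser.add_argument("--tv_coeff", type=float, help="coefficient of the TV term")
    parser.add_argument("--tv_beta", type=float, help="TV beta value")
    parser.add_argument("--l1_coeff", type=float, help="coefficient of the L1 term")
    parser.add_argument("--factor", type=int, help="upsampling factor")
    parser.add_argument("--lr", type=float, help="learning rate")
    parser.add_argument("--iter", type=int, help="number of iterations")
    return parser


# Parse an explicit list instead of sys.argv so the sketch runs anywhere.
args = build_parser().parse_args(
    ["--model_path=resnet50", "--perturb=noise", "--lr=0.1", "--iter=10"]
)
print(args.model_path, args.perturb, args.lr, args.iter)
```

Restricting `--perturb` with `choices` means a typo such as `--perturb=blurr` is rejected at parse time instead of failing later during optimization.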

If you want to use a custom model whose saved file has type 'OrderedDict', you should add code that loads the model object.
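For context, a file saved from `model.state_dict()` holds only an `OrderedDict` of weights, so the network class has to be instantiated before those weights can be loaded into it. The sketch below illustrates that check. The names `resolve_model` and `DummyNetwork` are hypothetical and not taken from utils.py, and `DummyNetwork` is a stand-in for a `torch.nn.Module` so that the example runs without PyTorch.

```python
from collections import OrderedDict


def resolve_model(loaded, build_model):
    """Return a usable model from whatever the checkpoint file contained.

    `loaded` is the object read from the .pt file (in real use, the result of
    torch.load); `build_model` is a zero-argument callable that creates the network.
    """
    if isinstance(loaded, OrderedDict):
        # Only the weights were saved: build the network, then load them into it.
        model = build_model()
        model.load_state_dict(loaded)
        return model
    # Otherwise assume the whole model object was saved and use it directly.
    return loaded


class DummyNetwork:
    """Stand-in for a torch.nn.Module, used only to keep this sketch self-contained."""

    def __init__(self):
        self.weights = {}

    def load_state_dict(self, state):
        self.weights = dict(state)


state = OrderedDict([("layer.weight", [0.1, 0.2])])
model = resolve_model(state, DummyNetwork)
print(type(model).__name__, list(model.weights))
```

With a real network, `build_model` would be your own class (for example `yourNetwork`), and `loaded` would come from reading the `.pt` file.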

Search for the 'load model' function in utils.py and add code such as:

    from yourNetwork import yourNetwork
    model = yourNetwork()

✅ Check my blog!! Here