Simulated Cognitive Experiment Test Suite

There are several established collections of cognition tests, such as the Primate Cognition Test Battery, that are used to assess aspects of intelligence in animals (e.g. crows and monkeys) and in humans. These experiments help determine, for example, an agent's understanding of object permanence and causality. Although these experiments are very common in cognitive science, they have not yet been applied to machine learning models and algorithms. This is mostly because, so far, they have been run manually: an experimenter physically moves objects in front of the test agent and records each interaction as a data point.

The goal of the Simulated Cognitive Experiment Test Suite is to allow these experiments to be deployed for machine learning research, and at scale. We therefore recreate these experiments in 3D in a randomized and programmatic fashion to allow for the creation of rich, large datasets.

This repository contains the implementation of these experiments built using AI2-THOR and Unity 3D.

🤖️ Implemented simulations:

Before attempting to reproduce these simulations, make sure you have completed the basic setup described under Installation.

Gravity Bias

This experiment tests an agent's ability to recognize the role gravity plays in how objects move. A number of rewards are dropped through an opaque tube into one of three receptacles, and the agent has to infer which receptacle the rewards land in.
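The following toy Python sketch illustrates the inference. It is not taken from the repository. It assumes each tube is described only by the column where rewards enter and the receptacle where it ends; both are zero-based and use a hypothetical encoding. A gravity-biased agent predicts the receptacle directly below the entry point, while the correct prediction follows the tube.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tube:
    """Hypothetical tube model: rewards enter above one receptacle and exit into another."""

    entry: int   # zero-based column where rewards are dropped into the tube
    outlet: int  # zero-based receptacle where the tube ends


def gravity_biased_guess(tube: Tube) -> int:
    """Naive prediction: rewards fall straight down from where they were dropped."""
    return tube.entry


def correct_receptacle(tube: Tube) -> int:
    """Correct prediction: rewards follow the opaque tube to its outlet."""
    return tube.outlet


# A slanted tube: rewards are dropped above receptacle 0 but the tube ends in receptacle 2.
slanted = Tube(entry=0, outlet=2)
print(gravity_biased_guess(slanted), correct_receptacle(slanted))  # 0 2
```

The informative trials are those where the entry and outlet differ, since only there do the two predictions disagree.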

Unlike some other experiments, this experiment was built using Unity3D instead of AI2-THOR. To set up the experiment, you need to:

- Download the Gravity Bias Unity build from the following link here.

- Unzip the GravityBias.zip file into the cognitive_battery_benchmark/experiments/dataset_generation/utils folder.

cd experiments/dataset_generation

python gravity_bias_example.py


Shape causality

In this experiment, the agent has to infer that the reward is under the object that is raised above the table, since that object is raised because it is hiding a reward underneath. The idea is to test whether the agent can infer a causal relationship between an object's shape and the reward's position.

cd experiments/dataset_generation

python shape_example.py


Addition numbers

In this experiment, the agent has to infer which plate (left or right) contains more of the rewards that were transferred from the center plate.
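The ground-truth label for this task reduces to counting transfers. The sketch below is a minimal illustration, not the repository's code. It assumes a hypothetical encoding in which each transferred reward is recorded as "left" or "right", with optional starting counts on the side plates.

```python
from typing import List


def addition_label(moves: List[str], left: int = 0, right: int = 0) -> str:
    """Return which side plate ends up with more rewards.

    moves: hypothetical encoding, one entry ("left" or "right") per reward
           transferred from the center plate.
    left, right: rewards already on each side plate (assumed 0 by default).
    """
    for side in moves:
        if side == "left":
            left += 1
        elif side == "right":
            right += 1
        else:
            raise ValueError(f"unknown side: {side!r}")
    if left == right:
        raise ValueError("tie: neither plate has more rewards")
    return "left" if left > right else "right"


print(addition_label(["left", "right", "left"]))  # left
```

Ties raise an error here because the task as described asks for the plate with more rewards; how the actual experiment avoids or handles ties is not stated.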

cd experiments/dataset_generation

python addition_numbers_example.py


Relative numbers

This is the simplest experiment whereby the agent has to decide which plate has more rewards.

cd experiments/dataset_generation

python relative_numbers_example.py

Simple swap

This is based on the well-known shell game: a reward is placed in or under one bowl, and the bowls are then swapped around. From the motion of the bowls, the agent has to infer which bowl the reward ended up in.
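The reasoning the agent must perform can be sketched as tracking a single index through a sequence of swaps. This is a minimal illustration, not the repository's implementation. The swap encoding (pairs of positions that exchange bowls) is a hypothetical choice.

```python
from typing import List, Tuple


def track_reward(start: int, swaps: List[Tuple[int, int]]) -> int:
    """Follow the baited bowl through a sequence of swaps.

    start: zero-based position of the bowl hiding the reward.
    swaps: hypothetical encoding; each pair (a, b) means the bowls at
           positions a and b exchange places.
    Returns the zero-based final position of the baited bowl.
    """
    pos = start
    for a, b in swaps:
        if pos == a:
            pos = b
        elif pos == b:
            pos = a
        # Otherwise the baited bowl was not involved in this swap.
    return pos


print(track_reward(0, [(0, 1), (1, 2)]))  # 2
```

Only the baited bowl's position needs to be tracked, so each swap costs constant time. The zero-based result matches the label convention described under Saving Images.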

cd experiments/dataset_generation

python simple_swap_example.py


Rotation

In this experiment, similar to the simple swap experiment, the agent has to infer where the reward ends up. Instead of being swapped, the receptacles are moved by rotation.
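Assuming the receptacles sit at evenly spaced slots on a rotating platform (a hypothetical layout; the actual scene may differ), tracking the reward reduces to modular arithmetic. Every receptacle moves together, so the baited one shifts by the same number of slots. A minimal sketch:

```python
from typing import List


def track_rotation(start: int, n_slots: int, rotations: List[int]) -> int:
    """Track the baited receptacle on a hypothetical turntable with n_slots evenly spaced positions.

    rotations: signed number of slot steps per rotation (positive = one direction,
               negative = the other); a rotation of 360 / n_slots degrees is one step.
    Returns the zero-based final slot of the baited receptacle.
    """
    pos = start
    for steps in rotations:
        # All receptacles rotate together; Python's % keeps the index in
        # range even when steps is negative.
        pos = (pos + steps) % n_slots
    return pos


print(track_rotation(0, 3, [1, 1, -1]))  # 1
```

Unlike a swap, a rotation moves every receptacle at once, so no receptacle stays put unless the rotation is a multiple of a full turn.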

cd experiments/dataset_generation

python rotation_example.py


💻 Installation

Clone this repo

git clone https://github.com/d-val/cognitive_battery_benchmark

- Download our customized build from the following link here

- Unzip the downloaded thor-OSXIntel64-local.zip file into the cognitive_battery_benchmark/experiments/dataset_generation/utils folder.

Python 3.7 or 3.8 set-up:

Select one of the below installation options (pip or conda).

With pip:

pip install -r setup/requirements.txt

With conda:

conda env create -f setup/environment.yml

conda activate cognitive-battery-benchmark

🕹️ Running simulation

Minimal example to test the AI2-THOR installation:

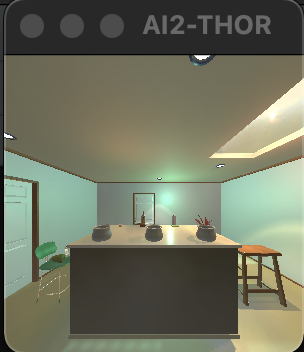

Most of our simulated environments are built on top of the excellent AI2-THOR interactable framework for embodied AI agents.

The pip or conda install above should also have installed AI2-THOR. You can verify that everything is working correctly by running the following minimal example:

python ai2-thor-minimal-example.py

This will download some AI2-THOR Unity3D libraries, which can take a while since they are large (~0.5 GB). You may see output like the following:

ai2thor/controller.py:1132: UserWarning: Build for the most recent commit: 47bafe1ca0e8012d29befc11c2639584f8f10d52 is not available. Using commit build 5c1b4d6c3121d17161935a36baaf0b8ac00378e7

warnings.warn(

thor-OSXIntel64-5c1b4d6c3121d17161935a36baaf0b8ac00378e7.zip: [||||||||||||||||||||||||||||||||||||||| 42% 1.7 MiB/s] of 521.MB

Once the download completes, a Unity simulator window will open:

This is followed by the terminal output below:

success! <ai2thor.server.Event at 0x7fadd0b87250

.metadata["lastAction"] = RotateRight

.metadata["lastActionSuccess"] = True

.metadata["errorMessage"] = ""

.metadata["actionReturn"] = None

>

dict_keys(['objects', 'isSceneAtRest', 'agent', 'heldObjectPose', 'arm', 'fov', 'cameraPosition', 'cameraOrthSize', 'thirdPartyCameras', 'collided', 'collidedObjects', 'inventoryObjects', 'sceneName', 'lastAction', 'errorMessage', 'errorCode', 'lastActionSuccess', 'screenWidth', 'screenHeight', 'agentId', 'colors', 'colorBounds', 'flatSurfacesOnGrid', 'distances', 'normals', 'isOpenableGrid', 'segmentedObjectIds', 'objectIdsInBox', 'actionIntReturn', 'actionFloatReturn', 'actionStringsReturn', 'actionFloatsReturn', 'actionVector3sReturn', 'visibleRange', 'currentTime', 'sceneBounds', 'updateCount', 'fixedUpdateCount', 'actionReturn'])

Running Human Cognitive Battery Experiments

To run the experiments described above, run the commands listed under each experiment in a terminal.

For instance, for the SimpleSwap example, run the code below:

cd experiments/dataset_generation

python simple_swap_example.py

If successful, a simulator window will pop up and the experiment will run. The output for the SimpleSwap example is shown below:

💾 Saving Images

To save images of a simulation, make sure the last line of the example script is uncommented (it is by default). For instance, in experiments/dataset_generation/simple_swap_example.py the last line is SimpleSwapExperiment.save_frames_to_folder('output').

This saves the experiment inside experiments/dataset_generation/output as a human_readable/ set of frames with an accompanying target label (in the swap experiment, the zero-based index of the pot the reward ended up in), a machine_readable/ pickle file containing all frames and metadata, and a video, experiment_video.mp4.
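To consume these outputs programmatically, a sketch along the following lines may help. It assumes a hypothetical layout in which the given folder directly contains human_readable/, machine_readable/ and experiment_video.mp4. It makes no assumptions about the file names inside the two subfolders or about the structure of the pickled object. Both of these should be checked against an actual output folder.

```python
import pickle
from pathlib import Path
from typing import Any, Dict


def load_experiment_output(output_dir: str) -> Dict[str, Any]:
    """Gather the saved artifacts from one experiment output folder.

    Assumes (hypothetically) that output_dir directly contains human_readable/,
    machine_readable/ and experiment_video.mp4.
    """
    root = Path(output_dir)
    human_files = sorted(p for p in (root / "human_readable").iterdir() if p.is_file())
    pickle_files = sorted(p for p in (root / "machine_readable").iterdir() if p.is_file())

    machine_data = None
    if pickle_files:
        # Only unpickle files you generated yourself: unpickling can execute code.
        with pickle_files[0].open("rb") as f:
            machine_data = pickle.load(f)

    video = root / "experiment_video.mp4"
    return {
        "human_readable_files": human_files,
        "machine_readable": machine_data,
        "video": video if video.exists() else None,
    }
```

The loader returns the unpickled object as-is rather than guessing its keys, since the format of the frames and metadata is not documented here.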

📝 Technical definitions and implementation summary:

Each simulated experiment is an instance of the Experiment class defined in experiments/dataset_generation/utils/experiment.py.
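The general pattern, a shared base class plus a driver that runs each experiment for a number of seeded iterations, can be sketched as follows. Everything here is a hypothetical simplification for illustration and not the repository's actual interface. This includes the constructor, run, label, ToySwap and run_all. Only the "Running Experiment: ... | N Iterations" log line mirrors the output format of run_all_experiments.py.

```python
import random
from abc import ABC, abstractmethod
from typing import Dict, List, Type


class Experiment(ABC):
    """Hypothetical stand-in for the base class; see utils/experiment.py for the real one."""

    def __init__(self, seed: int) -> None:
        self.rng = random.Random(seed)  # per-trial seed makes each trial reproducible
        self.label = -1

    @abstractmethod
    def run(self) -> None:
        """Simulate one trial and set self.label."""


class ToySwap(Experiment):
    """Hypothetical three-bowl shell game with no rendering."""

    def run(self) -> None:
        pos = self.rng.randrange(3)  # bowl hiding the reward
        for _ in range(3):
            a, b = self.rng.sample(range(3), 2)  # two distinct bowls trade places
            if pos == a:
                pos = b
            elif pos == b:
                pos = a
        self.label = pos


def run_all(experiments: Dict[str, Type[Experiment]], iterations: int) -> Dict[str, List[int]]:
    """Run every registered experiment class for the given number of seeded trials."""
    labels: Dict[str, List[int]] = {}
    for name, cls in experiments.items():
        print(f"Running Experiment: {name} | {iterations} Iterations")
        labels[name] = []
        for seed in range(iterations):
            trial = cls(seed=seed)
            trial.run()
            labels[name].append(trial.label)
    return labels


print(run_all({"ToySwap": ToySwap}, iterations=2))
```

Seeding each trial by its iteration index keeps a generated dataset reproducible, which matters when the experiments are regenerated at scale.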

To run all experiments as a single job:

cd experiments/dataset_generation

python run_all_experiments.py

If all goes well, this will output:

Running Experiment: AdditionNumbers | 1 Iterations

100%|█████████████████████████| 1/1 [00:30<00:00, 30.95s/it]

Running Experiment: RelativeNumbers | 1 Iterations

100%|█████████████████████████| 1/1 [00:04<00:00, 4.01s/it]

Running Experiment: Rotation | 1 Iterations

100%|█████████████████████████| 1/1 [00:06<00:00, 6.34s/it]

Running Experiment: Shape | 1 Iterations

100%|█████████████████████████| 1/1 [00:09<00:00, 9.99s/it]

Running Experiment: SimpleSwap | 1 Iterations

100%|█████████████████████████| 1/1 [00:12<00:00, 12.46s/it]

Running Experiment: GravityBias | 1 Iterations

100%|████████████████████████████████████████████████████████| 1/1 [00:22<00:00, 22.57s/it]

The created datasets will be stored in experiments/dataset_generation/output.

Here is a link to a zipped folder as an example of the expected output.

Running headless

If you plan to generate a dataset on a headless Linux instance, run:

python setup/linux_setup.py

Then make sure to use the _linux renderer config; the rest of the dataset generation is the same.

🚨 Issues & Debugging

- When running on OS X, you might get a prompt saying that thor-OSXIntel64-local is not verified. Follow the steps below to allow the file to run.