Official implementation of Scale Adaptive Network (SAN) as described in Learning to Learn Parameterized Classification Networks for Scalable Input Images (ECCV'20) by Duo Li, Anbang Yao and Qifeng Chen on the ILSVRC 2012 benchmark.

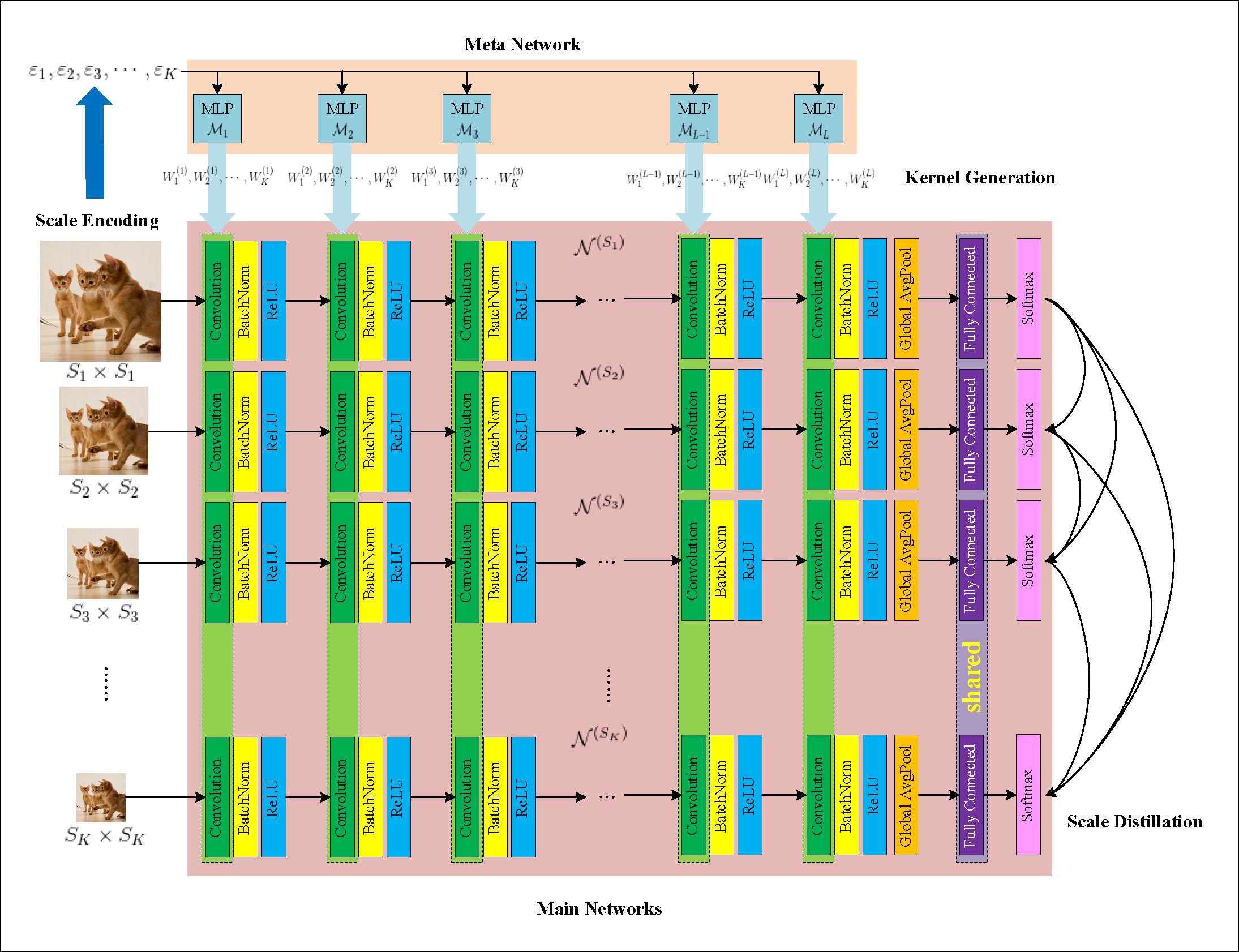

We present a meta-learning framework that dynamically parameterizes the main network conditioned on its input resolution at runtime, enabling efficient and flexible inference at arbitrarily switchable input resolutions.

- PyTorch 1.0+

- NVIDIA-DALI (in development, not recommended)

Download the ImageNet dataset and move validation images to labeled subfolders. To do this, you can use the following script: https://raw.githubusercontent.com/soumith/imagenetloader.torch/master/valprep.sh
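The linked script appears to be a long list of `mkdir` and `mv` commands, one per validation image. As a rough Python equivalent, the sketch below moves each image into a folder named after its class. The function name `sort_validation_images` and the `labels` mapping (image file name to class folder name) are hypothetical; you would have to supply the mapping yourself, for example by parsing it out of `valprep.sh`.

```python
import shutil
from pathlib import Path


def sort_validation_images(val_dir, labels):
    """Move each image in val_dir into a subfolder named after its class.

    labels maps an image file name to its class folder name (hypothetical
    input; the real mapping comes from the ImageNet validation ground truth).
    Returns the number of files moved.
    """
    val_dir = Path(val_dir)
    moved = 0
    for filename, class_name in labels.items():
        source = val_dir / filename
        if not source.is_file():
            continue  # skip names that are missing or already sorted
        target_dir = val_dir / class_name
        target_dir.mkdir(exist_ok=True)
        shutil.move(str(source), str(target_dir / filename))
        moved += 1
    return moved
```

Files that are not found directly under `val_dir` are skipped, so running the function a second time on an already sorted folder moves nothing.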

| Resolution | Top-1 Acc. (%) | Download |
|---|---|---|
| 224x224 | 70.974 | Google Drive |
| 192x192 | 69.754 | Google Drive |
| 160x160 | 68.482 | Google Drive |
| 128x128 | 66.360 | Google Drive |
| 96x96 | 62.560 | Google Drive |

| Resolution | Top-1 Acc. (%) | Download |
|---|---|---|
| 224x224 | 77.150 | Google Drive |
| 192x192 | 76.406 | Google Drive |
| 160x160 | 75.312 | Google Drive |
| 128x128 | 73.526 | Google Drive |
| 96x96 | 70.610 | Google Drive |

Please visit my repository mobilenetv2.pytorch.

| Architecture | Download |
|---|---|
| ResNet-18 | Google Drive |
| ResNet-50 | Google Drive |
| MobileNetV2 | Google Drive |

To train ResNet-18/50 (`--sizes` defaults to 224, 192, 160, 128, 96):

    python imagenet.py \
        -a meta_resnet18/50 \
        -d <path-to-ILSVRC2012-data> \
        --epochs 120 \
        --lr-decay cos \
        -c <path-to-save-checkpoints> \
        --sizes <list-of-input-resolutions> \
        -j <num-workers> \
        --kd

To train MobileNetV2 (same `--sizes` default):

    python imagenet.py \
        -a meta_mobilenetv2 \
        -d <path-to-ILSVRC2012-data> \
        --epochs 150 \
        --lr-decay cos \
        --lr 0.05 \
        --wd 4e-5 \
        -c <path-to-save-checkpoints> \
        --sizes <list-of-input-resolutions> \
        -j <num-workers> \
        --kd

To evaluate a checkpoint:

    python imagenet.py \
        -a <arch> \
        -d <path-to-ILSVRC2012-data> \
        --resume <checkpoint-file> \
        --sizes <list-of-input-resolutions> \
        -e \
        -j <num-workers>

Arguments are:

- `checkpoint-file`: a previously downloaded checkpoint file (download links are listed in the tables above).
- `list-of-input-resolutions`: test resolutions using different privatized BNs.

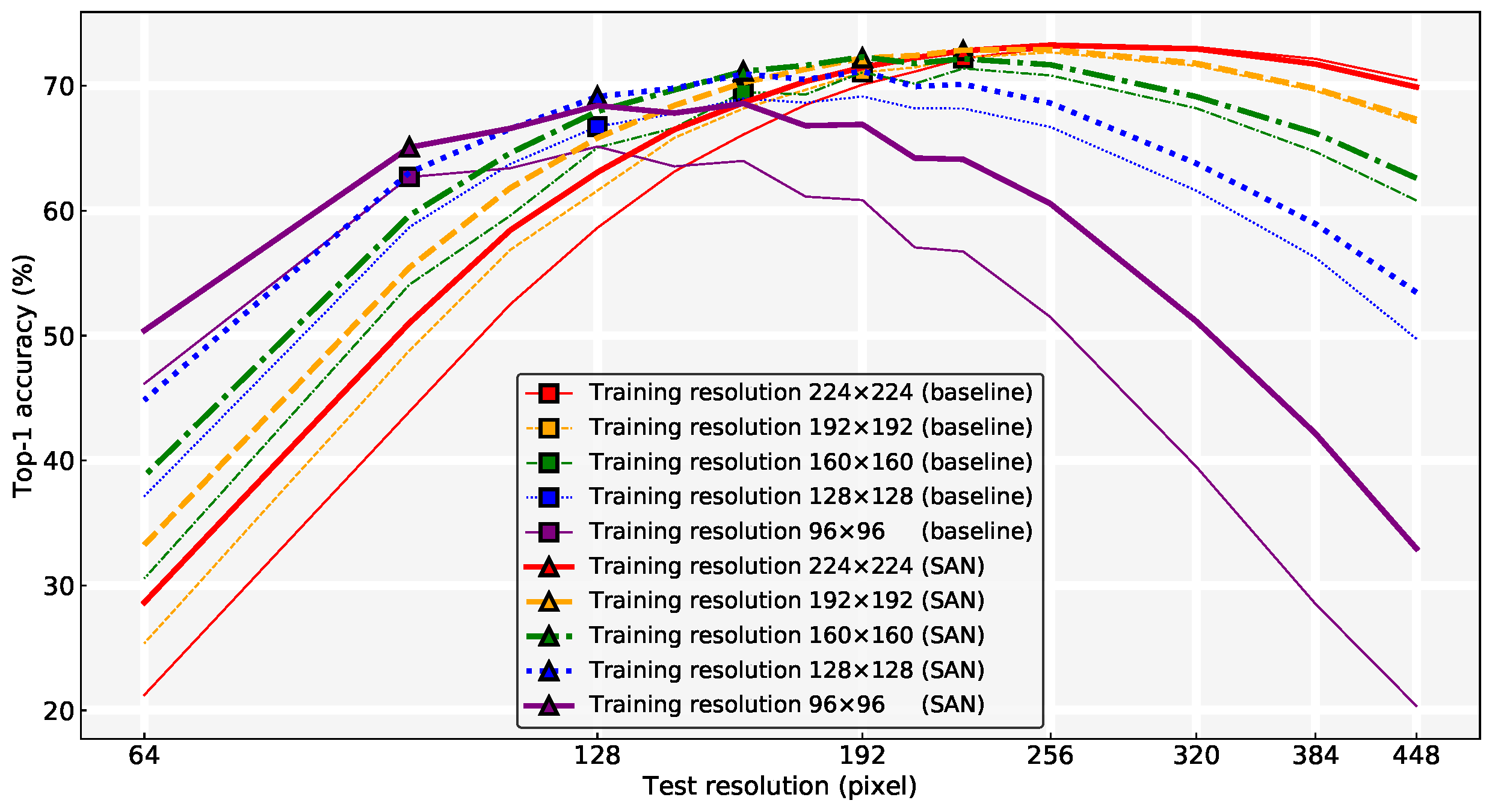

The evaluation above reproduces Table 1 in the main paper and Table 5 in the supplementary materials.
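When several checkpoints have to be evaluated, it can be convenient to assemble the evaluation invocation programmatically. The sketch below builds the argument list from the flags shown in this README. `build_eval_command` is a hypothetical helper, the default of 4 workers and the paths in the usage lines are placeholders, and the code assumes that `--sizes` accepts space-separated integers, which should be checked against the argument parser in `imagenet.py`.

```python
import shlex


def build_eval_command(arch, data_dir, checkpoint, sizes, workers=4):
    """Return the argument list for evaluating one checkpoint.

    Assumption: --sizes accepts space-separated integers; check the argument
    parser in imagenet.py before relying on this.
    """
    command = ["python", "imagenet.py", "-a", arch, "-d", data_dir,
               "--resume", checkpoint, "--sizes"]
    command += [str(size) for size in sizes]
    command += ["-e", "-j", str(workers)]
    return command


if __name__ == "__main__":
    # Placeholder paths; replace them with your own data and checkpoint.
    args = build_eval_command("meta_mobilenetv2", "/data/ILSVRC2012",
                              "checkpoint.pth.tar", [224, 160, 96])
    print(shlex.join(args))
```

The returned list can be passed directly to `subprocess.run`, which avoids shell quoting issues with paths that contain spaces.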

Manually set the scale encoding here, which gives the left panel of Table 2 in the main paper.

Uncomment this line in the main script to enable post-hoc BN calibration, which gives the middle panel of Table 2 in the main paper.
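Post-hoc BN calibration generally means re-estimating the running mean and variance of each batch normalization layer for a given input resolution while all learned weights stay frozen. The sketch below illustrates only that statistic, for a single channel, using a cumulative average over batches. `RunningStats` is a hypothetical helper written for this illustration; it is not the code path enabled by the line mentioned above.

```python
from statistics import fmean, variance


class RunningStats:
    """Cumulative average of per-batch mean and variance for one channel."""

    def __init__(self):
        self.num_batches = 0
        self.mean = 0.0
        self.var = 1.0

    def update(self, batch):
        """Fold one batch of activations (at least two values) into the estimate."""
        self.num_batches += 1
        weight = 1.0 / self.num_batches
        # With weight 1/n, each update keeps the plain average over all batches.
        self.mean += weight * (fmean(batch) - self.mean)
        self.var += weight * (variance(batch) - self.var)


stats = RunningStats()
for batch in ([1.0, 3.0], [5.0, 7.0]):
    stats.update(batch)
print(stats.mean, stats.var)
```

In PyTorch, a comparable cumulative average is what `torch.nn.BatchNorm2d` computes when `momentum=None` and the module is in training mode, so calibration typically amounts to resetting the running statistics and forwarding some training images at the target resolution.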

Manually set the scale encoding here and its corresponding shift here, then uncomment this line so that it replaces the line above it, which gives Table 6 in the supplementary materials.

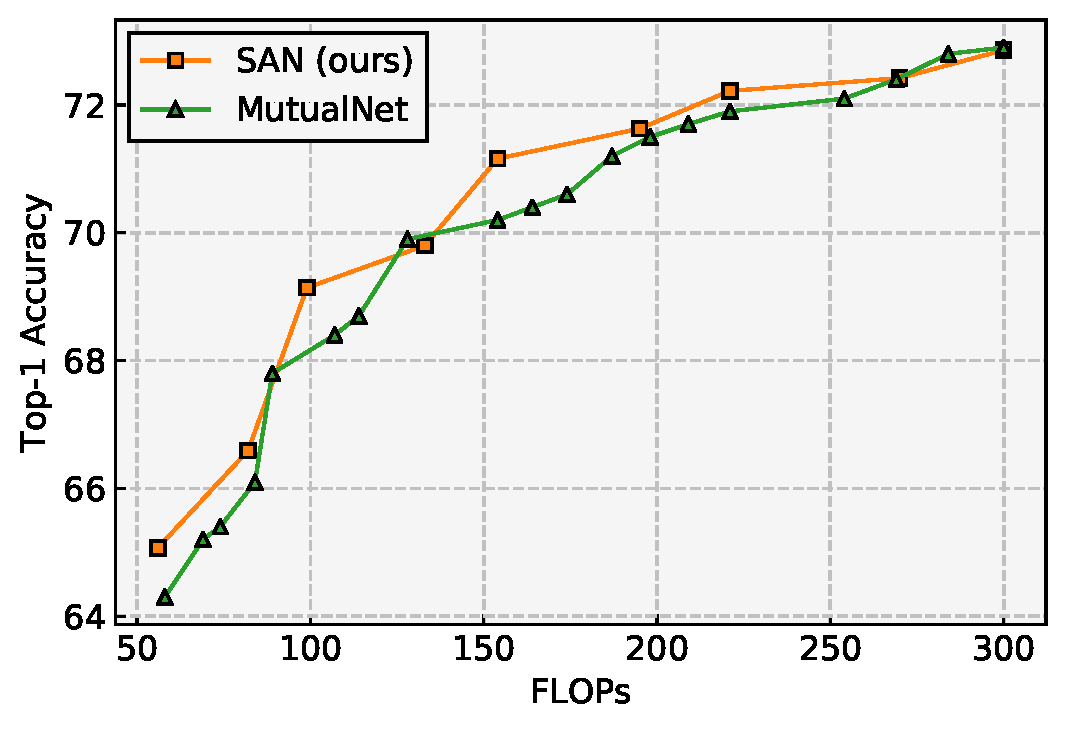

MutualNet: Adaptive ConvNet via Mutual Learning from Network Width and Resolution was accepted to ECCV 2020 as an oral presentation. MutualNet and SAN are concurrent works with a similar motivation: switchable resolution during inference. We provide a comparison of top-1 validation accuracy on ImageNet at the same FLOPs, based on the common MobileNetV2 backbone.

Note that MutualNet is trained on the resolution range {224, 192, 160, 128} with 4 network widths, while SAN is trained on the resolution range {224, 192, 160, 128, 96} without tuning the network width, so the configurations differ in this comparison.
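To get a feel for how much compute each input resolution saves, the following back-of-the-envelope sketch estimates FLOPs relative to 224x224. It assumes the cost is dominated by convolutions, whose work grows with the number of spatial positions, so the estimate is simply the squared ratio of side lengths. `relative_flops` is a hypothetical helper and the numbers are approximations, not figures from the paper.

```python
def relative_flops(resolution, reference=224):
    """Approximate compute relative to the reference resolution.

    Assumes cost is dominated by convolutions, whose work grows with the
    number of spatial positions, i.e. with the square of the side length.
    """
    return (resolution / reference) ** 2


for size in (224, 192, 160, 128, 96):
    print(f"{size}x{size}: {relative_flops(size):.2f}x")
```

Under this assumption, halving the side length cuts the compute to roughly a quarter.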

If you find our work useful in your research, please consider citing:

    @InProceedings{Li_2020_ECCV,
      author = {Li, Duo and Yao, Anbang and Chen, Qifeng},
      title = {Learning to Learn Parameterized Classification Networks for Scalable Input Images},
      booktitle = {The European Conference on Computer Vision (ECCV)},
      month = {August},
      year = {2020}
    }