Self-Attention GAN

Meta overview

This repository provides a PyTorch implementation of SAGAN. Both wgan-gp and wgan-hinge losses are available. Note, however, that wgan-gp is not compatible with spectral normalization, for reasons that are not yet clear. To use wgan-gp, remove all spectral normalization from the model.
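The hinge option is passed as --adv_loss hinge in the training commands. As a reference point, here is a minimal NumPy sketch of the hinge adversarial loss as formulated in the SAGAN paper. It operates on raw, unbounded discriminator scores. The function names d_hinge_loss and g_hinge_loss are hypothetical, and this is not the repository's PyTorch code.

```python
import numpy as np


def d_hinge_loss(d_real: np.ndarray, d_fake: np.ndarray) -> float:
    """Discriminator hinge loss: mean(relu(1 - D(x))) + mean(relu(1 + D(G(z))))."""
    real_term = np.maximum(0.0, 1.0 - d_real).mean()  # penalize real scores below +1
    fake_term = np.maximum(0.0, 1.0 + d_fake).mean()  # penalize fake scores above -1
    return float(real_term + fake_term)


def g_hinge_loss(d_fake: np.ndarray) -> float:
    """Generator hinge loss: -mean(D(G(z))), i.e. push fake scores upward."""
    return float(-d_fake.mean())


# Raw discriminator scores for a small batch of real and generated images.
d_real = np.array([1.5, 0.2, -0.3])
d_fake = np.array([-2.0, 0.5, -0.5])
print(d_hinge_loss(d_real, d_fake))
print(g_hinge_loss(d_fake))
```

The discriminator term becomes zero once real scores exceed 1 and fake scores fall below -1. As a result, samples that are already confidently classified stop contributing gradient.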

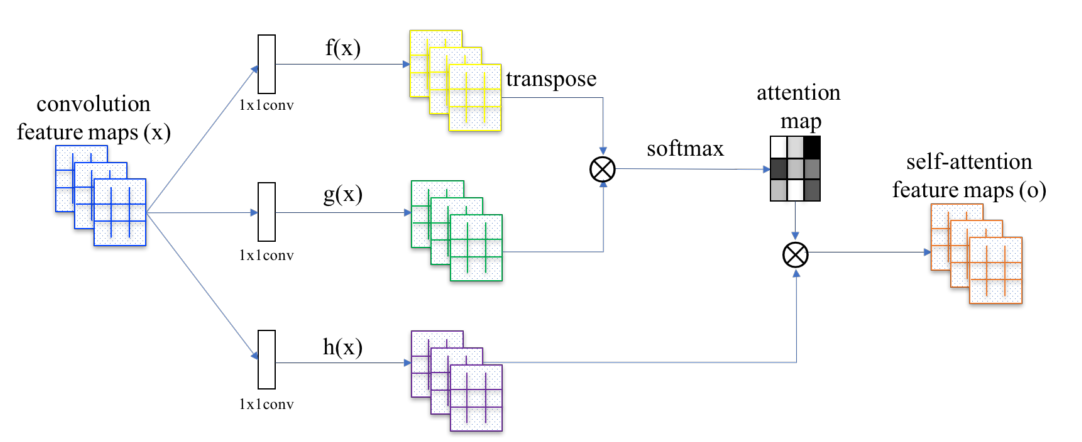

Self-attention is applied to the two later layers of both the discriminator and the generator.
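The self-attention layer can be sketched as follows. This is a simplified NumPy illustration of the formulation in the SAGAN paper. It uses 1x1 projections f, g and h, written as matrix products over channels, together with a softmax attention map over spatial positions. A learnable scale γ, initialized to 0, weights the residual connection. The sketch handles a single unbatched feature map and is not this repository's PyTorch module. The names self_attention, w_f, w_g and w_h are hypothetical.

```python
import numpy as np


def softmax(s: np.ndarray, axis: int) -> np.ndarray:
    s = s - s.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(s)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(x, w_f, w_g, w_h, gamma):
    """Self-attention over a feature map x of shape (C, H, W).

    Returns the output feature map (C, H, W) and the attention map beta of
    shape (N, N), N = H * W, where beta[j, i] is the weight position j puts on i.
    """
    c, h, w = x.shape
    flat = x.reshape(c, h * w)        # (C, N): one column per spatial position
    f = w_f @ flat                    # (C_bar, N) projection f(x)
    g = w_g @ flat                    # (C_bar, N) projection g(x)
    v = w_h @ flat                    # (C, N) projection h(x)
    scores = f.T @ g                  # scores[i, j] = f(x_i) . g(x_j)
    beta = softmax(scores, axis=0).T  # normalize over i, then store as beta[j, i]
    o = v @ beta.T                    # o[:, j] = sum_i beta[j, i] * h(x_i)
    y = gamma * o + flat              # residual connection scaled by gamma
    return y.reshape(c, h, w), beta


rng = np.random.default_rng(0)
C, H, W = 8, 4, 4
C_BAR = C // 8  # the paper reduces f and g to C/8 channels
x = rng.standard_normal((C, H, W))
w_f = rng.standard_normal((C_BAR, C))
w_g = rng.standard_normal((C_BAR, C))
w_h = rng.standard_normal((C, C))

y, beta = self_attention(x, w_f, w_g, w_h, gamma=0.0)
print(y.shape, beta.shape)  # (8, 4, 4) (16, 16)
```

Because γ starts at 0, the layer initially passes features through unchanged. The network can then gradually learn how much non-local context to mix in.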

Prerequisites

Usage

1. Clone the repository

$ git clone https://github.com/heykeetae/Self-Attention-GAN.git

$ cd Self-Attention-GAN

2. Install datasets (CelebA or LSUN)

$ bash download.sh CelebA

or

$ bash download.sh LSUN

3. Train

(i) Train

$ python main.py --batch_size 64 --imsize 64 --dataset celeb --adv_loss hinge --version sagan_celeb

or

$ python main.py --batch_size 64 --imsize 64 --dataset lsun --adv_loss hinge --version sagan_lsun

4. Enjoy the results

$ cd samples/sagan_celeb

or

$ cd samples/sagan_lsun

Samples generated every 100 iterations are saved in these directories. The sampling rate can be controlled with --sample_step (e.g., --sample_step 100).
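The flags used above can be sketched with a hypothetical argparse parser, along with a rough model of how --sample_step determines when samples are written and where they go. Only the flag names come from the commands in this README. The defaults, the choices lists, and the helpers sample_steps and sample_dir are assumptions for illustration. They do not reproduce the repository's own option parsing or file naming.

```python
import argparse
import os
from typing import List


def build_parser() -> argparse.ArgumentParser:
    # Hypothetical parser mirroring only the flags shown in this README;
    # defaults and choices are illustrative assumptions, not the repo's values.
    p = argparse.ArgumentParser(description="SAGAN training options (sketch)")
    p.add_argument("--batch_size", type=int, default=64)
    p.add_argument("--imsize", type=int, default=64)
    p.add_argument("--dataset", choices=["celeb", "lsun"], default="celeb")
    p.add_argument("--adv_loss", choices=["hinge", "wgan-gp"], default="hinge")
    p.add_argument("--version", default="sagan_celeb")
    p.add_argument("--sample_step", type=int, default=100)
    return p


def sample_steps(total_steps: int, sample_step: int) -> List[int]:
    """1-based iterations at which a sample would be written (assumed schedule)."""
    return [s for s in range(1, total_steps + 1) if s % sample_step == 0]


def sample_dir(version: str) -> str:
    """Directory holding samples for a run, e.g. samples/sagan_lsun."""
    return os.path.join("samples", version)


args = build_parser().parse_args(
    ["--dataset", "lsun", "--adv_loss", "hinge", "--version", "sagan_lsun", "--sample_step", "100"]
)
print(sample_dir(args.version))
print(sample_steps(350, args.sample_step))  # [100, 200, 300]
```

Restricting --dataset and --adv_loss with choices makes argparse reject typos at startup instead of failing partway through training.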