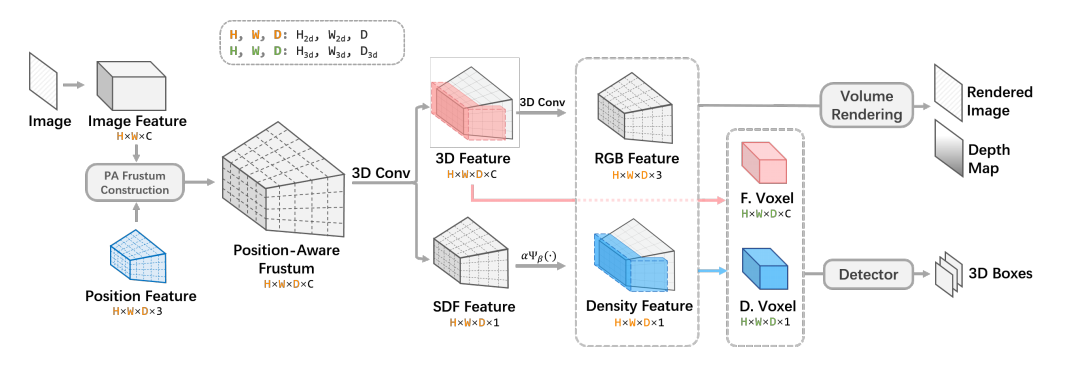

This is the implementation of MonoNeRD: NeRF-like Representations for Monocular 3D Object Detection (ICCV 2023), by Junkai Xu, Liang Peng, Haoran Cheng, Hao Li, Wei Qian, Ke Li, Wenxiao Wang and Deng Cai. It also offers a simple way to get 3D geometry and occupancy from monocular images.

- [2023-8-21] Paper is released on arxiv!

- [2023-8-1] Code is released.

- [2023-7-14] MonoNeRD is accepted at ICCV 2023!! Code is coming soon.

- [2023-2-20] Demo release.

All code for training and evaluation was tested in the following environment:

- Linux (tested on Ubuntu 18.04)

- Python 3.8

- PyTorch 1.8.1

- Torchvision 0.9.1

- CUDA 11.1.1

- spconv 1.2.1 (commit f22dd9)
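To compare an existing environment against the versions listed above, the installed package metadata can be queried without importing the packages. The following is a minimal sketch and not part of this repository: `EXPECTED`, `installed_version` and `check_environment` are illustrative names, and the distribution names (`torch`, `torchvision`, `spconv`) are assumed to match how the packages were installed.

```python
import sys
from importlib import metadata

# Versions listed in this README; the dict name and layout are illustrative.
EXPECTED = {"torch": "1.8.1", "torchvision": "0.9.1", "spconv": "1.2.1"}


def installed_version(dist_name):
    """Return the installed version string of a distribution, or None if absent."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


def check_environment(expected=EXPECTED):
    """Map each package to (expected, found, ok) without importing the packages."""
    report = {}
    for name, want in expected.items():
        found = installed_version(name)
        # Local build tags such as "1.8.1+cu111" still count as a match.
        ok = found is not None and found.split("+")[0] == want
        report[name] = (want, found, ok)
    return report


if __name__ == "__main__":
    print("python", ".".join(map(str, sys.version_info[:3])), "(README: 3.8)")
    for name, (want, found, ok) in check_environment().items():
        status = "ok" if ok else "MISMATCH"
        print(f"{name}: expected {want}, found {found} -> {status}")
```

A mismatch does not necessarily mean the code will fail; it only means the combination differs from the one tested here.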

a. Clone this repository.

git clone https://github.com/cskkxjk/MonoNeRD.git

b. Install the dependent libraries as follows:

- Install the dependent python libraries:

pip install -r requirements.txt

- Install the SparseConv library; we use the implementation from [spconv].

git clone https://github.com/traveller59/spconv

cd spconv

git reset --hard f22dd9

git submodule update --init --recursive

python setup.py bdist_wheel

pip install ./dist/spconv-1.2.1-cp38-cp38m-linux_x86_64.whl

cd ..

- Install modified mmdetection from [mmdetection_kitti]:

git clone https://github.com/xy-guo/mmdetection_kitti

cd mmdetection_kitti

python setup.py develop

cd ..

c. Install this library by running the following command from the MonoNeRD root directory:

python setup.py develop

For KITTI, dataset configs are located within configs/stereo/dataset_configs, and the model configs are located within configs/stereo/kitti_models.

For Waymo, dataset configs are located within configs/mono/dataset_configs, and the model configs are located within configs/mono/waymo_models.

- Please download the official KITTI 3D object detection dataset and organize the downloaded files as follows (the road planes are provided by OpenPCDet [road plane], which are optional for training LiDAR models):

- Our implementation uses a KITTI-formatted version of the Waymo Open Dataset, as in [CaDDN]; please refer to [Waymo-KITTI Converter] for details.

MonoNeRD_PATH

├── data

│ ├── kitti

│ │ │── ImageSets

│ │ │── training

│ │ │ ├──calib & velodyne & label_2 & image_2 & image_3

│ │ │── testing

│ │ │ ├──calib & velodyne & image_2

│ ├── waymo

│ │ │── ImageSets

│ │ │── training

│ │ │ ├──calib & velodyne & label_2 & image_2 ...

│ │ │── validation

│ │ │ ├──calib & velodyne & label_2 & image_2 ...

├── configs

├── mononerd

├── tools
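Before generating the data infos, it can help to confirm that the folders in the tree above are actually in place. The sketch below is an illustration only and is not shipped with this repository: `REQUIRED` and `missing_dirs` are hypothetical names, and the sub-folders hidden behind `...` in the Waymo entries are deliberately not checked.

```python
from pathlib import Path

# Sub-folders taken from the directory tree above; "..." entries are left out.
REQUIRED = {
    "kitti/training": ["calib", "velodyne", "label_2", "image_2", "image_3"],
    "kitti/testing": ["calib", "velodyne", "image_2"],
    "waymo/training": ["calib", "velodyne", "label_2", "image_2"],
    "waymo/validation": ["calib", "velodyne", "label_2", "image_2"],
}


def missing_dirs(data_root, datasets=("kitti", "waymo")):
    """Return the expected directories that do not exist under data_root."""
    root = Path(data_root)
    missing = []
    # Every dataset needs its ImageSets folder with the split files.
    for dataset in datasets:
        if not (root / dataset / "ImageSets").is_dir():
            missing.append(f"{dataset}/ImageSets")
    # Then check the per-split sub-folders of the selected datasets.
    for split, subdirs in REQUIRED.items():
        if split.split("/")[0] not in datasets:
            continue
        for sub in subdirs:
            if not (root / split / sub).is_dir():
                missing.append(f"{split}/{sub}")
    return missing


if __name__ == "__main__":
    for path in missing_dirs("./data"):
        print("missing:", path)
```

Symlinked directories pass the check as long as the link target exists, so the same function works for both copied and linked datasets.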

- Alternatively, you can symlink your existing KITTI and Waymo dataset directories:

YOUR_KITTI_DATA_PATH=~/data/kitti_object

ln -s $YOUR_KITTI_DATA_PATH/ImageSets/ ./data/kitti/

ln -s $YOUR_KITTI_DATA_PATH/training/ ./data/kitti/

ln -s $YOUR_KITTI_DATA_PATH/testing/ ./data/kitti/

YOUR_WAYMO_DATA_PATH=~/data/waymo

ln -s $YOUR_WAYMO_DATA_PATH/ImageSets/ ./data/waymo/

ln -s $YOUR_WAYMO_DATA_PATH/training/ ./data/waymo/

ln -s $YOUR_WAYMO_DATA_PATH/validation/ ./data/waymo/
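The linking step can also be scripted so that it can be rerun safely. This is a hedged sketch rather than a tool from this repository: `link_dataset` is a hypothetical helper, and the source paths in the usage note are the example paths from the commands above.

```python
import os
from pathlib import Path


def link_dataset(src_root, dst_root, subdirs):
    """Symlink each sub-directory of src_root into dst_root, skipping existing entries."""
    src_root = Path(src_root).expanduser().resolve()
    dst_root = Path(dst_root)
    dst_root.mkdir(parents=True, exist_ok=True)
    created = []
    for name in subdirs:
        src, dst = src_root / name, dst_root / name
        if not src.is_dir():
            raise FileNotFoundError(f"source directory not found: {src}")
        if dst.exists() or dst.is_symlink():
            continue  # leave existing data or links untouched
        os.symlink(src, dst, target_is_directory=True)
        created.append(dst)
    return created
```

For the layout above, this would be called as `link_dataset("~/data/kitti_object", "./data/kitti", ["ImageSets", "training", "testing"])` and `link_dataset("~/data/waymo", "./data/waymo", ["ImageSets", "training", "validation"])`.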

- Generate the data infos by running the following command:

python -m mononerd.datasets.kitti.lidar_kitti_dataset create_kitti_infos

python -m mononerd.datasets.kitti.lidar_kitti_dataset create_gt_database_only

python -m mononerd.datasets.waymo.lidar_waymo_dataset creat_waymo_infos

python -m mononerd.datasets.waymo.lidar_waymo_dataset create_gt_database_only

- Train with multiple GPUs:

./scripts/dist_train.sh ${NUM_GPUS} 'exp_name' ./configs/stereo/kitti_models/mononerd.yaml

- To test with multiple GPUs:

./scripts/dist_test_ckpt.sh ${NUM_GPUS} ./configs/stereo/kitti_models/mononerd.yaml ./ckpt/pretrained_mononerd.pth

The results below report BEV / 3D detection performance for the Car class on the KITTI val set.

- All models are trained with 4 NVIDIA 3080Ti GPUs and are available for download.

- The training time is measured with 4 NVIDIA 3080Ti GPUs and PyTorch 1.8.1.

| | Training Time | Easy@R40 | Moderate@R40 | Hard@R40 | download |
|---|---|---|---|---|---|
| mononerd | ~13 hours | 29.03 / 20.64 | 22.03 / 15.44 | 19.41 / 13.99 | Google-drive / Baidu Netdisk |
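Each result cell in the table holds two numbers in the order BEV / 3D. As a small illustration of how to read them, the sketch below splits the cells of the mononerd row; `split_bev_3d` and `ROW` are illustrative names, and the values are copied from the table rather than computed.

```python
def split_bev_3d(cell):
    """Split a 'BEV / 3D' table cell into two floats."""
    bev, det3d = (float(part) for part in cell.split("/"))
    return bev, det3d


# Values copied from the mononerd row of the table above.
ROW = {
    "Easy": "29.03 / 20.64",
    "Moderate": "22.03 / 15.44",
    "Hard": "19.41 / 13.99",
}

if __name__ == "__main__":
    for level, cell in ROW.items():
        bev, det3d = split_bev_3d(cell)
        print(f"{level}@R40: BEV {bev:.2f}, 3D {det3d:.2f}")
```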

@inproceedings{xu2023mononerd,

title={Mononerd: Nerf-like representations for monocular 3d object detection},

author={Xu, Junkai and Peng, Liang and Cheng, Haoran and Li, Hao and Qian, Wei and Li, Ke and Wang, Wenxiao and Cai, Deng},

booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},

pages={6814--6824},

year={2023}

}

This project benefits from the following codebases. Thanks for their great work!