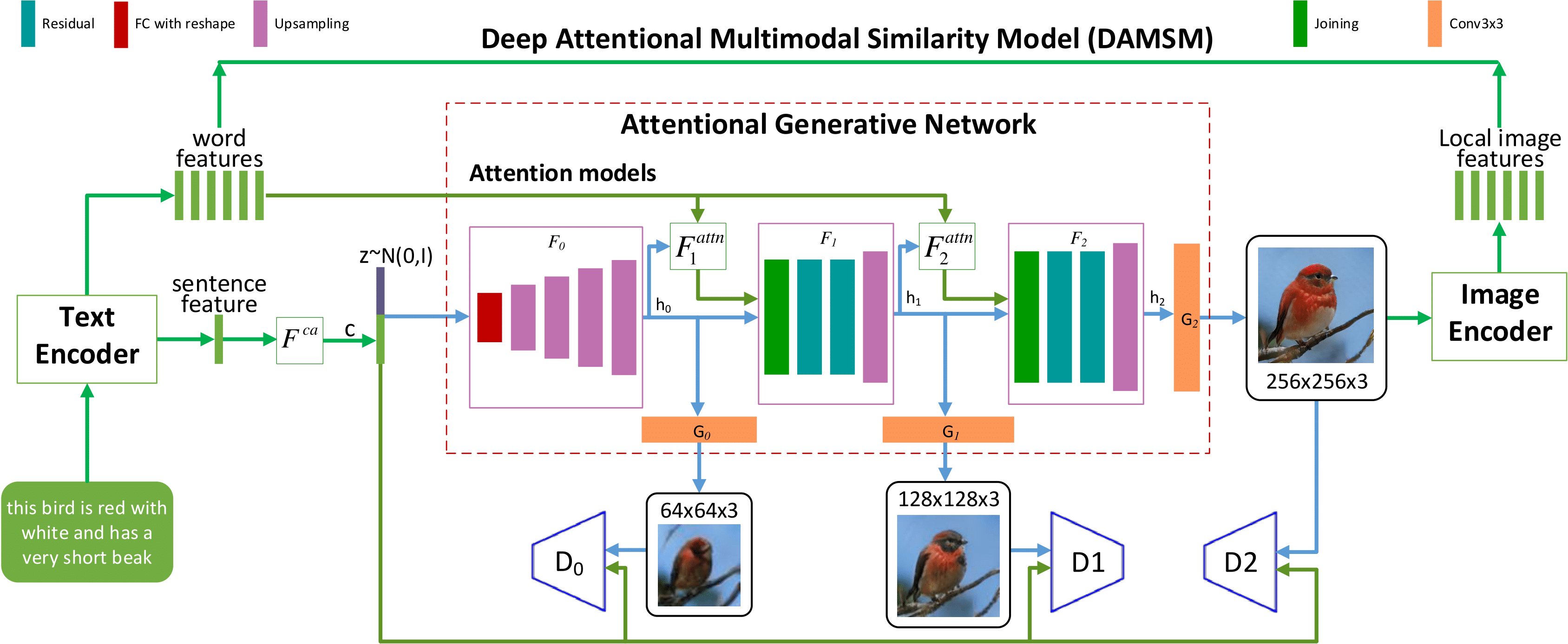

PyTorch implementation for reproducing AttnGAN results in the paper AttnGAN: Fine-Grained Text to Image Generation with Attentional Generative Adversarial Networks by Tao Xu, Pengchuan Zhang, Qiuyuan Huang, Han Zhang, Zhe Gan, Xiaolei Huang, Xiaodong He. (This work was performed when Tao was an intern with Microsoft Research).

python 3.5

PyTorch

In addition, please add the project folder to PYTHONPATH and pip install the following packages:

- `python-dateutil`
- `easydict`
- `pandas`
- `torchfile`
- `nltk`
- `scikit-image`
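Before running anything, it can help to confirm that the packages above are importable and that the project folder is on PYTHONPATH. The sketch below is a minimal helper and not part of the repository: `missing_packages` and `project_on_path` are hypothetical names, and the only package knowledge it encodes is the pip-name-to-module mapping (for example, `python-dateutil` is imported as `dateutil` and `scikit-image` as `skimage`).

```python
import importlib.util
import os

# pip package name -> module name it is imported under
REQUIRED = {
    "python-dateutil": "dateutil",
    "easydict": "easydict",
    "pandas": "pandas",
    "torchfile": "torchfile",
    "nltk": "nltk",
    "scikit-image": "skimage",
}


def missing_packages(required=REQUIRED):
    """Return the pip names of packages whose top-level module cannot be found."""
    return [pip_name for pip_name, module in required.items()
            if importlib.util.find_spec(module) is None]


def project_on_path(project_dir):
    """True if project_dir is listed in the PYTHONPATH environment variable."""
    target = os.path.abspath(project_dir)
    entries = os.environ.get("PYTHONPATH", "").split(os.pathsep)
    return any(e and os.path.abspath(e) == target for e in entries)


if __name__ == "__main__":
    print("missing:", missing_packages() or "none")
    print("project on PYTHONPATH:", project_on_path(os.getcwd()))
```

Run it from the project folder; anything listed as missing can then be installed with pip.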

Data

- Download our preprocessed metadata for birds and coco and save them to `data/`
- Download the birds image data. Extract them to `data/birds/`
- Download the coco dataset and extract the images to `data/coco/`
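A quick check that the folders from the Data steps exist can save a failed training run. This is a minimal sketch; `missing_dirs` is a hypothetical helper, and it only verifies that the directories are present, not that the right files were extracted into them.

```python
from pathlib import Path

# Directories the Data steps ask you to populate, relative to the project folder.
EXPECTED_DIRS = ["data", "data/birds", "data/coco"]


def missing_dirs(root="."):
    """Return the expected data directories that do not exist under root."""
    base = Path(root)
    return [d for d in EXPECTED_DIRS if not (base / d).is_dir()]


if __name__ == "__main__":
    print("missing:", missing_dirs() or "none")
```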

Training

- Pre-train DAMSM models:
  - For bird dataset: `python pretrain_DAMSM.py --cfg cfg/DAMSM/bird.yml --gpu 0`
  - For coco dataset: `python pretrain_DAMSM.py --cfg cfg/DAMSM/coco.yml --gpu 1`
- Train AttnGAN models:
  - For bird dataset: `python main.py --cfg cfg/bird_attn2.yml --gpu 2`
  - For coco dataset: `python main.py --cfg cfg/coco_attn2.yml --gpu 3`
- `*.yml` files are example configuration files for training/evaluating our models.
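The two training stages run in order: DAMSM pre-training first, then AttnGAN training, which needs a trained DAMSM encoder. The sketch below chains the commands listed above for one dataset using `subprocess`. It is a hypothetical convenience wrapper, not part of the repository: the config paths are copied from the list above, the GPU id is a single parameter instead of the per-command ids shown there, and whether the AttnGAN stage picks up your freshly pre-trained encoder depends on the paths set in the `.yml` files, so check them first. Relative paths resolve against the current working directory, as with the commands above.

```python
import subprocess

# Config files as listed in the Training steps.
DAMSM_CFG = {"bird": "cfg/DAMSM/bird.yml", "coco": "cfg/DAMSM/coco.yml"}
ATTNGAN_CFG = {"bird": "cfg/bird_attn2.yml", "coco": "cfg/coco_attn2.yml"}


def training_commands(dataset, gpu=0):
    """Return the two commands for one dataset: DAMSM pre-training, then AttnGAN."""
    return [
        ["python", "pretrain_DAMSM.py", "--cfg", DAMSM_CFG[dataset], "--gpu", str(gpu)],
        ["python", "main.py", "--cfg", ATTNGAN_CFG[dataset], "--gpu", str(gpu)],
    ]


def run_training(dataset, gpu=0, dry_run=True):
    """Print each command and, unless dry_run is set, execute it."""
    for cmd in training_commands(dataset, gpu):
        print(" ".join(cmd))
        if not dry_run:
            # check=True raises CalledProcessError, so AttnGAN training
            # does not start if DAMSM pre-training failed.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run_training("bird", gpu=0, dry_run=True)
```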

Pretrained Model

- DAMSM for bird. Download and save it to `DAMSMencoders/`
- DAMSM for coco. Download and save it to `DAMSMencoders/`
- AttnGAN for bird. Download and save it to `models/`
- AttnGAN for coco. Download and save it to `models/`
- AttnDCGAN for bird. Download and save it to `models/`
  - This is a variant of AttnGAN which applies the proposed attention mechanisms to the DCGAN framework.

Sampling

- Run `python main.py --cfg cfg/eval_bird.yml --gpu 1` to generate examples from captions in files listed in `./data/birds/example_filenames.txt`. Results are saved to `DAMSMencoders/`.
- For sampling, be sure to set `TRAIN.FLAG` and `B_VALIDATION` to `False`. To execute the model on a CPU, set the `--gpu` parameter to a negative value. The file `example_filenames.txt` should contain a list of files, each with one caption per line. After execution, AttnGAN will generate 3 image files (with different qualities) and 2 attention maps.
- Change the `eval_*.yml` files to generate images from other pre-trained models.
- Input your own sentences in "./data/birds/example_captions.txt" if you want to generate images from customized sentences.
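To sample from your own captions, you need a caption file (one caption per line) and an entry for it in `example_filenames.txt`. The sketch below writes both. `add_caption_file` is a hypothetical helper, and it assumes that entries in `example_filenames.txt` are file names relative to the data directory without the `.txt` extension, as with the bundled `example_captions` entry; verify this against your copy of the code before relying on it. `TRAIN.FLAG` and `B_VALIDATION` still need to be `False` in the eval config.

```python
from pathlib import Path


def add_caption_file(data_dir, name, captions, list_file="example_filenames.txt"):
    """Write captions to <data_dir>/<name>.txt and register name in list_file.

    Assumption: list_file holds one name per line, relative to data_dir and
    without the .txt extension. Returns the names now listed in list_file.
    """
    data_dir = Path(data_dir)
    data_dir.mkdir(parents=True, exist_ok=True)
    # One caption per line; blank captions are dropped.
    cleaned = [c.strip() for c in captions if c.strip()]
    (data_dir / f"{name}.txt").write_text("\n".join(cleaned) + "\n", encoding="utf-8")
    # Append the file name to the list of files to sample from, avoiding duplicates.
    listing = data_dir / list_file
    names = []
    if listing.exists():
        names = [n.strip() for n in listing.read_text(encoding="utf-8").splitlines() if n.strip()]
    if name not in names:
        names.append(name)
    listing.write_text("\n".join(names) + "\n", encoding="utf-8")
    return names


if __name__ == "__main__":
    add_caption_file("data/birds", "my_captions",
                     ["this bird has a red crown and a short black beak"])
```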

Validation

- To generate images for all captions in the validation dataset, change `B_VALIDATION` to `True` in the `eval_*.yml` file, and then run `python main.py --cfg cfg/eval_bird.yml --gpu 1`
- We compute inception score for models trained on birds using StackGAN-inception-model.
- We compute inception score for models trained on coco using improved-gan/inception_score.
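For reference, the inception score is IS = exp(E_x[KL(p(y|x) ‖ p(y))]), where p(y|x) is a pretrained classifier's class distribution for a generated image and p(y) is its average over the generated set. The sketch below computes it from a matrix of softmax outputs, averaging over splits as is common practice. It only illustrates the formula: the reported numbers come from the StackGAN-inception-model and improved-gan code named above, and producing `probs` (running the classifier on the generated images) is assumed to happen elsewhere.

```python
import numpy as np


def inception_score(probs, splits=10, eps=1e-12):
    """Inception score from an (N, C) array of class probabilities p(y|x).

    Returns (mean, std) over `splits` equal-sized chunks; use splits <= N.
    """
    probs = np.asarray(probs, dtype=np.float64)
    scores = []
    for part in np.array_split(probs, splits):
        p_y = part.mean(axis=0, keepdims=True)                 # marginal p(y) over the chunk
        kl = part * (np.log(part + eps) - np.log(p_y + eps))   # elementwise KL terms
        scores.append(np.exp(kl.sum(axis=1).mean()))           # exp(E_x[KL(p(y|x) || p(y))])
    return float(np.mean(scores)), float(np.std(scores))
```

Confident, evenly spread predictions over C classes give a score close to C, while identical predictions for every image give 1.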

Examples generated by AttnGAN [Blog]

| bird example | coco example |
|---|---|

Evaluation code embedded into a callable containerized API is included in the eval\ folder.

For a given bird attribute in `attributes.txt`, we can use InterfaceGAN to obtain a direction for latent code manipulation that makes generated images more positive or more negative for that attribute.

To obtain the direction as a numpy array, InterfaceGAN needs a set of latent codes and their corresponding attribute values. The following files support that process:

- `batch_generate_birds.py` generates bird images using random latent codes. The latent codes are stored in `noise_vectors_array.npy`, and image information, including file location, is saved in the `metadata_file.csv` file.
- `organize_image_folder.py` will organise images in the Caltech-UCSD Birds dataset into train and validation folders for a specific attribute from `attributes.txt`. This is needed for training a feature predictor for that attribute.
- `train_feature_predictor.py` will train a transfer-learning-based feature predictor, using the folder organised via `organize_image_folder.py` as data input. Model state will be stored in the `feature_predictor.pt` file.
- `batch_predict_feature.py` will predict the value of a feature using the model trained with `train_feature_predictor.py`, over images generated using the `noise_vectors_array.npy` latent codes. Feature values will be stored in the `predictions.npy` numpy array.
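The pipeline above only works if row i of `noise_vectors_array.npy` and entry i of `predictions.npy` describe the same generated image. The sketch below shows that contract: sample standard-normal latent codes and check that there is exactly one score per code, reshaped to a column. Two assumptions are labelled here and in the code: `Z_DIM = 100` stands in for the generator's noise size (read the real value from your config), and the (N, dim) codes with (N, 1) scores layout reflects our understanding of what InterfaceGAN's boundary training expects. `sample_latent_codes` and `check_alignment` are hypothetical names.

```python
import numpy as np

Z_DIM = 100  # assumption: generator noise size; use the value from your config


def sample_latent_codes(n, z_dim=Z_DIM, seed=0):
    """Draw n standard-normal latent codes, shape (n, z_dim)."""
    rng = np.random.default_rng(seed)
    return rng.standard_normal((n, z_dim)).astype(np.float32)


def check_alignment(latent_codes, predictions):
    """Return (codes, scores) with scores reshaped to (N, 1).

    Raises ValueError unless there is exactly one score per 2-D latent code row
    (assumed layout for InterfaceGAN's boundary training).
    """
    latent_codes = np.asarray(latent_codes)
    scores = np.asarray(predictions, dtype=np.float64).reshape(-1, 1)
    if latent_codes.ndim != 2 or latent_codes.shape[0] != scores.shape[0]:
        raise ValueError(f"{latent_codes.shape} latent codes vs {scores.shape} scores")
    return latent_codes, scores
```

In practice the codes would be saved with `np.save("noise_vectors_array.npy", codes)` before generation, and `check_alignment(np.load("noise_vectors_array.npy"), np.load("predictions.npy"))` run before boundary training.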

We can later feed `noise_vectors_array.npy` and `predictions.npy` to the `train_boundary.py` module of InterfaceGAN to obtain the direction for attribute manipulation.
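InterfaceGAN finds this direction by fitting a linear SVM that separates the highest-scoring latent codes from the lowest-scoring ones; the unit normal of the separating hyperplane is the boundary. To show the idea without extra dependencies, the sketch below swaps in a simpler estimator: the normalised difference between the mean latent code of the top and bottom `ratio` fraction of samples. It is not InterfaceGAN's method, and `mean_difference_direction` is a hypothetical name; use `train_boundary.py` for real results.

```python
import numpy as np


def mean_difference_direction(latent_codes, scores, ratio=0.02):
    """Unit direction from low-scoring to high-scoring latent codes, shape (1, dim).

    Simplified stand-in for an SVM boundary: difference of the mean latent code
    of the top-`ratio` and bottom-`ratio` samples ranked by score.
    """
    z = np.asarray(latent_codes, dtype=np.float64)
    s = np.asarray(scores, dtype=np.float64).ravel()
    k = max(1, int(len(s) * ratio))
    order = np.argsort(s)                    # ascending by predicted attribute value
    low, high = z[order[:k]], z[order[-k:]]
    direction = high.mean(axis=0) - low.mean(axis=0)
    return (direction / np.linalg.norm(direction)).reshape(1, -1)
```

On synthetic data where the attribute depends only on the first latent coordinate, the returned direction points almost entirely along that coordinate.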

Once we have the boundary as a numpy array, we can use the AttnGAN/code/main.py file for image generation and interpolation.

Use the attnganw/config.py to configure the interpolation parameters.
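Interpolation along a boundary amounts to moving a latent code z along the unit normal n: z' = z + a·n for a range of step sizes a. The sketch below produces such a sequence of codes, which would then be fed as noise to the generator with the same caption. The parameter names `start`, `end` and `steps` are hypothetical and are not the ones defined in `attnganw/config.py`; InterfaceGAN's own interpolation helper may also offset the range by the code's current distance to the boundary, which this sketch does not do.

```python
import numpy as np


def interpolate_along_boundary(z, boundary, start=-3.0, end=3.0, steps=7):
    """Return `steps` latent codes z + a * n for a evenly spaced in [start, end].

    z: latent code of shape (dim,) or (1, dim); boundary: direction of shape (1, dim).
    Result has shape (steps, dim).
    """
    z = np.asarray(z, dtype=np.float64).reshape(1, -1)
    n = np.asarray(boundary, dtype=np.float64).reshape(1, -1)
    n = n / np.linalg.norm(n)                  # step sizes are measured in units of n
    alphas = np.linspace(start, end, steps).reshape(-1, 1)
    return z + alphas * n
```

Negative steps push the image toward the negative side of the attribute and positive steps toward the positive side; larger magnitudes may drift away from realistic samples.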

If you find AttnGAN useful in your research, please consider citing:

@inproceedings{Tao18attngan,
author = {Tao Xu and Pengchuan Zhang and Qiuyuan Huang and Han Zhang and Zhe Gan and Xiaolei Huang and Xiaodong He},
title = {AttnGAN: Fine-Grained Text to Image Generation with Attentional Generative Adversarial Networks},
Year = {2018},
booktitle = {{CVPR}}
}

Reference