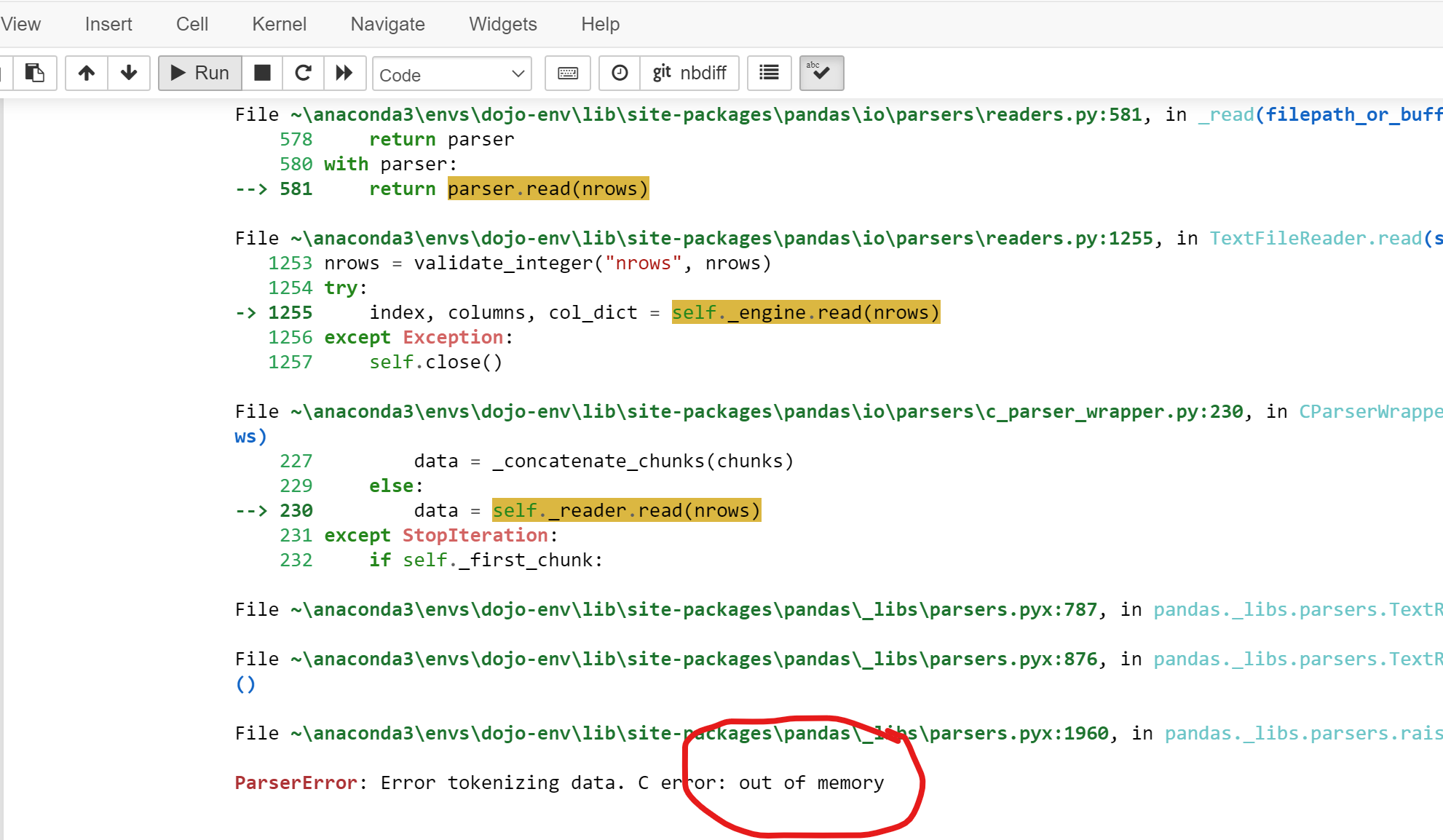

- Your machine runs out of RAM (memory) when loading in a large file.

Instead of loading the entire dataframe using pd.read_csv, we can instead create a special TextFileReader object, which will allow us to read in our dataframe in chunks.

- Use the chunksize argument for

pd.read_csvto create a TextFileReader.- chunksize is the number of rows to load at once.

- We will use 100,000 rows in our examples.

df_reader = pd.read_csv(basics_url, sep='\t', low_memory=False, chunksize=100_000 )

df_reader<pandas.io.parsers.readers.TextFileReader at 0x2ed437f40>

- Use the .get_chunk() method to extract the first chunk of rows.

temp_df = df_reader.get_chunk()-

Figure out your entire workflow for that file using just temp_df chunk, and save to disk.

-

Now combine the workflow into 1 large loop through the entire textfilereader.

-

Use glob to easily combine all chunk csvs into 1 final.

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

import os

os.makedirs('Data',exist_ok=True)## title basics

basics_url = 'https://datasets.imdbws.com/title.basics.tsv.gz'

df_reader = pd.read_csv(basics_url, sep='\t',

low_memory=False, chunksize=100_000)

df_reader<pandas.io.parsers.readers.TextFileReader at 0x106670e80>

- We now get a TextFileReader instead of a DataFrame.

- The TextFileReader is designed to return one chunk at a time from the source file as a dataframe using the

reader.get_chunk()method.- It keep tracks of its position in the original file using the

._currowattribute.

- It keep tracks of its position in the original file using the

## the first row # of the next chunk is stored under ._currow

df_reader._currow0

- We haven't loaded any chunks yet so currow should indeed be 0.

## get the first df chunk from the reader

temp_df = df_reader.get_chunk()

temp_df.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

| tconst | titleType | primaryTitle | originalTitle | isAdult | startYear | endYear | runtimeMinutes | genres | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | tt0000001 | short | Carmencita | Carmencita | 0 | 1894 | \N | 1 | Documentary,Short |

| 1 | tt0000002 | short | Le clown et ses chiens | Le clown et ses chiens | 0 | 1892 | \N | 5 | Animation,Short |

| 2 | tt0000003 | short | Pauvre Pierrot | Pauvre Pierrot | 0 | 1892 | \N | 4 | Animation,Comedy,Romance |

| 3 | tt0000004 | short | Un bon bock | Un bon bock | 0 | 1892 | \N | 12 | Animation,Short |

| 4 | tt0000005 | short | Blacksmith Scene | Blacksmith Scene | 0 | 1893 | \N | 1 | Comedy,Short |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 99995 | tt0102317 | movie | Little Noises | Little Noises | 0 | 1991 | \N | 73 | Comedy,Drama |

| 99996 | tt0102318 | tvMovie | A Little Piece of Heaven | A Little Piece of Heaven | 0 | 1991 | \N | 110 | Crime,Drama,Family |

| 99997 | tt0102319 | movie | A Little Stiff | A Little Stiff | 0 | 1991 | \N | 86 | Comedy |

| 99998 | tt0102320 | short | A Little Vicious | A Little Vicious | 0 | 1991 | \N | 30 | Documentary,Short |

| 99999 | tt0102321 | movie | Liao zhai: Hua nong yue | Liao zhai: Hua nong yue | 0 | 1991 | \N | 93 | \N |

100000 rows × 9 columns

- We should now have an updated currrow that reflects we have already grabbed rows 0 through 99_999.

- Therefore the currow should be 100_000

## checking the updated ._currow

df_reader._currow100000

- Now, figure out the filtering steps you need to apply to the temp df.

## Replace "\N" with np.nan

temp_df.replace({'\\N':np.nan},inplace=True)

## Eliminate movies that are null for runtimeMinute, genres, and startYear

temp_df = temp_df.dropna(subset=['runtimeMinutes','genres','startYear'])Note: there are additional required filtering steps for the assignment that should should be included here in your own notebook.

### Convert startyear to numeric for slicing

temp_df['startYear'] = temp_df['startYear'].astype(float).copy()

## keep startYear 2000-2022

temp_df = temp_df[(temp_df['startYear']>=2000)&(temp_df['startYear']<2022)]

temp_df/var/folders/rf/vw4r41jd7vd95x1w0dth7v9h0000gp/T/ipykernel_3151/2128585443.py:2: SettingWithCopyWarning:

A value is trying to be set on a copy of a slice from a DataFrame.

Try using .loc[row_indexer,col_indexer] = value instead

See the caveats in the documentation: https://pandas.pydata.org/pandas-docs/stable/user_guide/indexing.html#returning-a-view-versus-a-copy

temp_df['startYear'] = temp_df['startYear'].astype(float).copy()

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

| tconst | titleType | primaryTitle | originalTitle | isAdult | startYear | endYear | runtimeMinutes | genres | |

|---|---|---|---|---|---|---|---|---|---|

| 13079 | tt0013274 | movie | Istoriya grazhdanskoy voyny | Istoriya grazhdanskoy voyny | 0 | 2021.0 | NaN | 133 | Documentary |

| 33790 | tt0034413 | short | Youth Gets a Break | Youth Gets a Break | 0 | 2001.0 | NaN | 20 | Short |

| 34790 | tt0035423 | movie | Kate & Leopold | Kate & Leopold | 0 | 2001.0 | NaN | 118 | Comedy,Fantasy,Romance |

| 39532 | tt0040241 | short | Color Rhapsodie | Color Rhapsodie | 0 | 2021.0 | NaN | 6 | Short |

| 43536 | tt0044326 | short | Abstronic | Abstronic | 0 | 2021.0 | NaN | 6 | Short |

| 44078 | tt0044879 | short | Mandala | Mandala | 0 | 2021.0 | NaN | 3 | Short |

| 55738 | tt0056840 | short | Aufsätze | Aufsätze | 0 | 2021.0 | NaN | 10 | Short |

| 59185 | tt0060366 | short | A Embalagem de Vidro | A Embalagem de Vidro | 0 | 2020.0 | NaN | 11 | Documentary,Short |

| 61089 | tt0062336 | movie | The Tango of the Widower and Its Distorting Mi... | El Tango del Viudo y Su Espejo Deformante | 0 | 2020.0 | NaN | 70 | Drama |

| 63720 | tt0065047 | short | The Sun's Gonna Shine | The Sun's Gonna Shine | 0 | 2014.0 | NaN | 10 | Documentary,Music,Short |

| 65857 | tt0067230 | short | I Miss Sonia Henie | I Miss Sonia Henie | 0 | 2009.0 | NaN | 20 | Comedy,Short |

| 66247 | tt0067626 | short | Before the Rally | Przed rajdem | 0 | 2006.0 | NaN | 16 | Documentary,Short |

| 66304 | tt0067683 | movie | Workers '71: Nothing About Us Without Us | Robotnicy 1971 - Nic o nas bez nas | 0 | 2006.0 | NaN | 47 | Documentary |

| 67531 | tt0068943 | short | Between Wroclaw and Zielona Góra | Miedzy Wroclawiem a Zielona Góra | 0 | 2010.0 | NaN | 11 | Documentary,Short |

| 67635 | tt0069049 | movie | The Other Side of the Wind | The Other Side of the Wind | 0 | 2018.0 | NaN | 122 | Drama |

| 70534 | tt0072043 | short | X-Ray | Przeswietlenie | 0 | 2006.0 | NaN | 13 | Documentary,Short |

| 77929 | tt0079644 | movie | November 1828 | November 1828 | 0 | 2001.0 | NaN | 140 | Drama,War |

| 86766 | tt0088751 | movie | The Naked Monster | The Naked Monster | 0 | 2005.0 | NaN | 100 | Comedy,Horror,Sci-Fi |

| 87078 | tt0089067 | movie | El día de los albañiles 2 | El día de los albañiles 2 | 0 | 2001.0 | NaN | 90 | Comedy |

| 87435 | tt0089435 | short | Kokoa | Kokoa | 0 | 2019.0 | NaN | 13 | Animation,Short |

| 90881 | tt0092960 | movie | En tres y dos | En tres y dos | 0 | 2004.0 | NaN | 102 | Drama |

| 92731 | tt0094859 | movie | Chief Zabu | Chief Zabu | 0 | 2016.0 | NaN | 74 | Comedy |

| 93902 | tt0096056 | movie | Crime and Punishment | Crime and Punishment | 0 | 2002.0 | NaN | 126 | Drama |

| 95113 | tt0097304 | movie | Everything's for You | Everything's for You | 0 | 2009.0 | NaN | 58 | Documentary |

| 98005 | tt0100275 | movie | The Wandering Soap Opera | La Telenovela Errante | 0 | 2017.0 | NaN | 80 | Comedy,Drama,Fantasy |

- Now, save the filtered dataframe to disk, using the chunk # in the filename.

## Programatically saving an fname using the chunk #

chunk_num=1

fname= f'Data/title_basics_chunk_{chunk_num:03d}.csv.gz'

fname'Data/title_basics_chunk_001.csv.gz'

-

Tip: if we use the ":03d" format code when inserting the chunk number using an f-string, it will add 2 leading 0's, so the first file will be numbered 001 instead of 1. This will be helpful when viewing the files in your file explorer or on GitHub.

-

Now, let's save the temp_df to disk, using the filename based on the chunk_num.

- Make sure to increase the value of chunk_num by 1 after saving the file.

## Save temp_df to disk using the fname.

temp_df.to_csv(fname, compression='gzip')

## incrementing chunk_num by 1 for the next file.

chunk_num+=1- While we usually add "index=False" when we save a dataframe to disk, we did not do that above.

- This means that the index will be saved as an additional column, which will show up as "Unnamed: 0" when we load in the csv again.

- This index will allow us to know which row # each movie was in the original file.

pd.read_csv(fname).dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

| Unnamed: 0 | tconst | titleType | primaryTitle | originalTitle | isAdult | startYear | endYear | runtimeMinutes | genres | |

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 13079 | tt0013274 | movie | Istoriya grazhdanskoy voyny | Istoriya grazhdanskoy voyny | 0 | 2021.0 | NaN | 133 | Documentary |

| 1 | 33790 | tt0034413 | short | Youth Gets a Break | Youth Gets a Break | 0 | 2001.0 | NaN | 20 | Short |

| 2 | 34790 | tt0035423 | movie | Kate & Leopold | Kate & Leopold | 0 | 2001.0 | NaN | 118 | Comedy,Fantasy,Romance |

| 3 | 39532 | tt0040241 | short | Color Rhapsodie | Color Rhapsodie | 0 | 2021.0 | NaN | 6 | Short |

| 4 | 43536 | tt0044326 | short | Abstronic | Abstronic | 0 | 2021.0 | NaN | 6 | Short |

| 5 | 44078 | tt0044879 | short | Mandala | Mandala | 0 | 2021.0 | NaN | 3 | Short |

| 6 | 55738 | tt0056840 | short | Aufsätze | Aufsätze | 0 | 2021.0 | NaN | 10 | Short |

| 7 | 59185 | tt0060366 | short | A Embalagem de Vidro | A Embalagem de Vidro | 0 | 2020.0 | NaN | 11 | Documentary,Short |

| 8 | 61089 | tt0062336 | movie | The Tango of the Widower and Its Distorting Mi... | El Tango del Viudo y Su Espejo Deformante | 0 | 2020.0 | NaN | 70 | Drama |

| 9 | 63720 | tt0065047 | short | The Sun's Gonna Shine | The Sun's Gonna Shine | 0 | 2014.0 | NaN | 10 | Documentary,Music,Short |

| 10 | 65857 | tt0067230 | short | I Miss Sonia Henie | I Miss Sonia Henie | 0 | 2009.0 | NaN | 20 | Comedy,Short |

| 11 | 66247 | tt0067626 | short | Before the Rally | Przed rajdem | 0 | 2006.0 | NaN | 16 | Documentary,Short |

| 12 | 66304 | tt0067683 | movie | Workers '71: Nothing About Us Without Us | Robotnicy 1971 - Nic o nas bez nas | 0 | 2006.0 | NaN | 47 | Documentary |

| 13 | 67531 | tt0068943 | short | Between Wroclaw and Zielona Góra | Miedzy Wroclawiem a Zielona Góra | 0 | 2010.0 | NaN | 11 | Documentary,Short |

| 14 | 67635 | tt0069049 | movie | The Other Side of the Wind | The Other Side of the Wind | 0 | 2018.0 | NaN | 122 | Drama |

| 15 | 70534 | tt0072043 | short | X-Ray | Przeswietlenie | 0 | 2006.0 | NaN | 13 | Documentary,Short |

| 16 | 77929 | tt0079644 | movie | November 1828 | November 1828 | 0 | 2001.0 | NaN | 140 | Drama,War |

| 17 | 86766 | tt0088751 | movie | The Naked Monster | The Naked Monster | 0 | 2005.0 | NaN | 100 | Comedy,Horror,Sci-Fi |

| 18 | 87078 | tt0089067 | movie | El día de los albañiles 2 | El día de los albañiles 2 | 0 | 2001.0 | NaN | 90 | Comedy |

| 19 | 87435 | tt0089435 | short | Kokoa | Kokoa | 0 | 2019.0 | NaN | 13 | Animation,Short |

| 20 | 90881 | tt0092960 | movie | En tres y dos | En tres y dos | 0 | 2004.0 | NaN | 102 | Drama |

| 21 | 92731 | tt0094859 | movie | Chief Zabu | Chief Zabu | 0 | 2016.0 | NaN | 74 | Comedy |

| 22 | 93902 | tt0096056 | movie | Crime and Punishment | Crime and Punishment | 0 | 2002.0 | NaN | 126 | Drama |

| 23 | 95113 | tt0097304 | movie | Everything's for You | Everything's for You | 0 | 2009.0 | NaN | 58 | Documentary |

| 24 | 98005 | tt0100275 | movie | The Wandering Soap Opera | La Telenovela Errante | 0 | 2017.0 | NaN | 80 | Comedy,Drama,Fantasy |

- If we add "

index_col=0to read_csv then it will use this unnamed column as our index, which is the ideal solution.

pd.read_csv(fname, index_col=0).dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

| tconst | titleType | primaryTitle | originalTitle | isAdult | startYear | endYear | runtimeMinutes | genres | |

|---|---|---|---|---|---|---|---|---|---|

| 13079 | tt0013274 | movie | Istoriya grazhdanskoy voyny | Istoriya grazhdanskoy voyny | 0 | 2021.0 | NaN | 133 | Documentary |

| 33790 | tt0034413 | short | Youth Gets a Break | Youth Gets a Break | 0 | 2001.0 | NaN | 20 | Short |

| 34790 | tt0035423 | movie | Kate & Leopold | Kate & Leopold | 0 | 2001.0 | NaN | 118 | Comedy,Fantasy,Romance |

| 39532 | tt0040241 | short | Color Rhapsodie | Color Rhapsodie | 0 | 2021.0 | NaN | 6 | Short |

| 43536 | tt0044326 | short | Abstronic | Abstronic | 0 | 2021.0 | NaN | 6 | Short |

| 44078 | tt0044879 | short | Mandala | Mandala | 0 | 2021.0 | NaN | 3 | Short |

| 55738 | tt0056840 | short | Aufsätze | Aufsätze | 0 | 2021.0 | NaN | 10 | Short |

| 59185 | tt0060366 | short | A Embalagem de Vidro | A Embalagem de Vidro | 0 | 2020.0 | NaN | 11 | Documentary,Short |

| 61089 | tt0062336 | movie | The Tango of the Widower and Its Distorting Mi... | El Tango del Viudo y Su Espejo Deformante | 0 | 2020.0 | NaN | 70 | Drama |

| 63720 | tt0065047 | short | The Sun's Gonna Shine | The Sun's Gonna Shine | 0 | 2014.0 | NaN | 10 | Documentary,Music,Short |

| 65857 | tt0067230 | short | I Miss Sonia Henie | I Miss Sonia Henie | 0 | 2009.0 | NaN | 20 | Comedy,Short |

| 66247 | tt0067626 | short | Before the Rally | Przed rajdem | 0 | 2006.0 | NaN | 16 | Documentary,Short |

| 66304 | tt0067683 | movie | Workers '71: Nothing About Us Without Us | Robotnicy 1971 - Nic o nas bez nas | 0 | 2006.0 | NaN | 47 | Documentary |

| 67531 | tt0068943 | short | Between Wroclaw and Zielona Góra | Miedzy Wroclawiem a Zielona Góra | 0 | 2010.0 | NaN | 11 | Documentary,Short |

| 67635 | tt0069049 | movie | The Other Side of the Wind | The Other Side of the Wind | 0 | 2018.0 | NaN | 122 | Drama |

| 70534 | tt0072043 | short | X-Ray | Przeswietlenie | 0 | 2006.0 | NaN | 13 | Documentary,Short |

| 77929 | tt0079644 | movie | November 1828 | November 1828 | 0 | 2001.0 | NaN | 140 | Drama,War |

| 86766 | tt0088751 | movie | The Naked Monster | The Naked Monster | 0 | 2005.0 | NaN | 100 | Comedy,Horror,Sci-Fi |

| 87078 | tt0089067 | movie | El día de los albañiles 2 | El día de los albañiles 2 | 0 | 2001.0 | NaN | 90 | Comedy |

| 87435 | tt0089435 | short | Kokoa | Kokoa | 0 | 2019.0 | NaN | 13 | Animation,Short |

| 90881 | tt0092960 | movie | En tres y dos | En tres y dos | 0 | 2004.0 | NaN | 102 | Drama |

| 92731 | tt0094859 | movie | Chief Zabu | Chief Zabu | 0 | 2016.0 | NaN | 74 | Comedy |

| 93902 | tt0096056 | movie | Crime and Punishment | Crime and Punishment | 0 | 2002.0 | NaN | 126 | Drama |

| 95113 | tt0097304 | movie | Everything's for You | Everything's for You | 0 | 2009.0 | NaN | 58 | Documentary |

| 98005 | tt0100275 | movie | The Wandering Soap Opera | La Telenovela Errante | 0 | 2017.0 | NaN | 80 | Comedy,Drama,Fantasy |

-

Since the TextFileReader is an iterator, we can loop through the df_reader itself to get the temp_df.

-

We will be re-creating the initial temp_df above as part of our final loop.

-

We will declare our chunk_num=1 before we start our loop.

# title basics

basics_url = 'https://datasets.imdbws.com/title.basics.tsv.gz'

chunk_num = 1

df_reader = pd.read_csv(basics_url, sep='\t',

low_memory=False, chunksize=100_000)for temp_df in df_reader:

#### COMBINED WORKFLOW FROM ABOVE

## Replace "\N" with np.nan

temp_df.replace({'\\N':np.nan},inplace=True)

## Eliminate movies that are null for runtimeMinute, genres, and startYear

temp_df = temp_df.dropna(subset=['runtimeMinutes','genres','startYear'])

## NOTE: THERE ARE ADDITIONAL REQUIRED FILTERING STEPS FOR THE PROJECT NOT SHOWN HERE

### Convert startyear to numeric for slicing

## convert numeric features

temp_df['startYear'] = temp_df['startYear'].astype(float)

## keep startYear 2000-2022

temp_df = temp_df[(temp_df['startYear']>=2000)&(temp_df['startYear']<2022)]

### Saving chunk to disk

fname= f'Data/title_basics_chunk_{chunk_num:03d}.csv.gz'

temp_df.to_csv(fname, compression='gzip')

print(f"- Saved {fname}")

chunk_num+=1

df_reader.close()- Saved Data/title_basics_chunk_001.csv.gz

- Saved Data/title_basics_chunk_002.csv.gz

- Saved Data/title_basics_chunk_003.csv.gz

- Saved Data/title_basics_chunk_004.csv.gz

- Saved Data/title_basics_chunk_005.csv.gz

- Saved Data/title_basics_chunk_006.csv.gz

- Saved Data/title_basics_chunk_007.csv.gz

- Saved Data/title_basics_chunk_008.csv.gz

- Saved Data/title_basics_chunk_009.csv.gz

- Saved Data/title_basics_chunk_010.csv.gz

- Saved Data/title_basics_chunk_011.csv.gz

- Saved Data/title_basics_chunk_012.csv.gz

- Saved Data/title_basics_chunk_013.csv.gz

- Saved Data/title_basics_chunk_014.csv.gz

- Saved Data/title_basics_chunk_015.csv.gz

- Saved Data/title_basics_chunk_016.csv.gz

- Saved Data/title_basics_chunk_017.csv.gz

- Saved Data/title_basics_chunk_018.csv.gz

- Saved Data/title_basics_chunk_019.csv.gz

- Saved Data/title_basics_chunk_020.csv.gz

- Saved Data/title_basics_chunk_021.csv.gz

- Saved Data/title_basics_chunk_022.csv.gz

- Saved Data/title_basics_chunk_023.csv.gz

- Saved Data/title_basics_chunk_024.csv.gz

- Saved Data/title_basics_chunk_025.csv.gz

- Saved Data/title_basics_chunk_026.csv.gz

- Saved Data/title_basics_chunk_027.csv.gz

- Saved Data/title_basics_chunk_028.csv.gz

- Saved Data/title_basics_chunk_029.csv.gz

- Saved Data/title_basics_chunk_030.csv.gz

- Saved Data/title_basics_chunk_031.csv.gz

- Saved Data/title_basics_chunk_032.csv.gz

- Saved Data/title_basics_chunk_033.csv.gz

- Saved Data/title_basics_chunk_034.csv.gz

- Saved Data/title_basics_chunk_035.csv.gz

- Saved Data/title_basics_chunk_036.csv.gz

- Saved Data/title_basics_chunk_037.csv.gz

- Saved Data/title_basics_chunk_038.csv.gz

- Saved Data/title_basics_chunk_039.csv.gz

- Saved Data/title_basics_chunk_040.csv.gz

- Saved Data/title_basics_chunk_041.csv.gz

- Saved Data/title_basics_chunk_042.csv.gz

- Saved Data/title_basics_chunk_043.csv.gz

- Saved Data/title_basics_chunk_044.csv.gz

- Saved Data/title_basics_chunk_045.csv.gz

- Saved Data/title_basics_chunk_046.csv.gz

- Saved Data/title_basics_chunk_047.csv.gz

- Saved Data/title_basics_chunk_048.csv.gz

- Saved Data/title_basics_chunk_049.csv.gz

- Saved Data/title_basics_chunk_050.csv.gz

- Saved Data/title_basics_chunk_051.csv.gz

- Saved Data/title_basics_chunk_052.csv.gz

- Saved Data/title_basics_chunk_053.csv.gz

- Saved Data/title_basics_chunk_054.csv.gz

- Saved Data/title_basics_chunk_055.csv.gz

- Saved Data/title_basics_chunk_056.csv.gz

- Saved Data/title_basics_chunk_057.csv.gz

- Saved Data/title_basics_chunk_058.csv.gz

- Saved Data/title_basics_chunk_059.csv.gz

- Saved Data/title_basics_chunk_060.csv.gz

- Saved Data/title_basics_chunk_061.csv.gz

- Saved Data/title_basics_chunk_062.csv.gz

- Saved Data/title_basics_chunk_063.csv.gz

- Saved Data/title_basics_chunk_064.csv.gz

- Saved Data/title_basics_chunk_065.csv.gz

- Saved Data/title_basics_chunk_066.csv.gz

- Saved Data/title_basics_chunk_067.csv.gz

- Saved Data/title_basics_chunk_068.csv.gz

- Saved Data/title_basics_chunk_069.csv.gz

- Saved Data/title_basics_chunk_070.csv.gz

- Saved Data/title_basics_chunk_071.csv.gz

- Saved Data/title_basics_chunk_072.csv.gz

- Saved Data/title_basics_chunk_073.csv.gz

- Saved Data/title_basics_chunk_074.csv.gz

- Saved Data/title_basics_chunk_075.csv.gz

- Saved Data/title_basics_chunk_076.csv.gz

- Saved Data/title_basics_chunk_077.csv.gz

- Saved Data/title_basics_chunk_078.csv.gz

- Saved Data/title_basics_chunk_079.csv.gz

- Saved Data/title_basics_chunk_080.csv.gz

- Saved Data/title_basics_chunk_081.csv.gz

- Saved Data/title_basics_chunk_082.csv.gz

- Saved Data/title_basics_chunk_083.csv.gz

- Saved Data/title_basics_chunk_084.csv.gz

- Saved Data/title_basics_chunk_085.csv.gz

- Saved Data/title_basics_chunk_086.csv.gz

- Saved Data/title_basics_chunk_087.csv.gz

- Saved Data/title_basics_chunk_088.csv.gz

- Saved Data/title_basics_chunk_089.csv.gz

- Saved Data/title_basics_chunk_090.csv.gz

- Saved Data/title_basics_chunk_091.csv.gz

- Saved Data/title_basics_chunk_092.csv.gz

- Now that we have saved the individual filtered files, we can combine them back into 1 final file.

-

Python has a module called glob that has a very helpful function for finding all file paths that match a specific criterion.

-

Glob takes a filepath/query and will find every filename that matches the pattern provided.

- We use asterisks as wildcards in our query.

-

In this case, we want to load in the all of the saved title basics chunk files.

- If we use

"Data/title_basics_chunk*.csv.gz"as our search query, it will find all files that match the text, where*represents any number of other characters.

- If we use

-

We then run

glob.glob(q)and save the returned list.

import glob

q = "Data/title_basics_chunk*.csv.gz"

chunked_files = glob.glob(q)

# Showing the first 5

chunked_files[:5]['Data/title_basics_chunk_069.csv.gz',

'Data/title_basics_chunk_014.csv.gz',

'Data/title_basics_chunk_077.csv.gz',

'Data/title_basics_chunk_006.csv.gz',

'Data/title_basics_chunk_065.csv.gz']

- Note: if we want the list sorted alphabetically, we can use the "sorted" function from python.

import glob

q = "Data/title_basics_chunk*.csv.gz"

chunked_files = sorted(glob.glob(q))

# Showing the first 5

chunked_files[:5]['Data/title_basics_chunk_001.csv.gz',

'Data/title_basics_chunk_002.csv.gz',

'Data/title_basics_chunk_003.csv.gz',

'Data/title_basics_chunk_004.csv.gz',

'Data/title_basics_chunk_005.csv.gz']

- Now that we have a list of all of the files we want to load in and concatenate, we can use a for loop or list comprehension to do so!

## Loading all files as df and appending to a list

df_list = []

for file in chunked_files:

temp_df = pd.read_csv(file, index_col=0)

df_list.append(temp_df)

## Concatenating the list of dfs into 1 combined

df_combined = pd.concat(df_list)

df_combined.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

| tconst | titleType | primaryTitle | originalTitle | isAdult | startYear | endYear | runtimeMinutes | genres | |

|---|---|---|---|---|---|---|---|---|---|

| 13079 | tt0013274 | movie | Istoriya grazhdanskoy voyny | Istoriya grazhdanskoy voyny | 0 | 2021.0 | NaN | 133 | Documentary |

| 33790 | tt0034413 | short | Youth Gets a Break | Youth Gets a Break | 0 | 2001.0 | NaN | 20 | Short |

| 34790 | tt0035423 | movie | Kate & Leopold | Kate & Leopold | 0 | 2001.0 | NaN | 118 | Comedy,Fantasy,Romance |

| 39532 | tt0040241 | short | Color Rhapsodie | Color Rhapsodie | 0 | 2021.0 | NaN | 6 | Short |

| 43536 | tt0044326 | short | Abstronic | Abstronic | 0 | 2021.0 | NaN | 6 | Short |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 9163345 | tt9916754 | movie | Chico Albuquerque - Revelações | Chico Albuquerque - Revelações | 0 | 2013.0 | NaN | 49 | Documentary |

| 9163351 | tt9916766 | tvEpisode | Episode #10.15 | Episode #10.15 | 0 | 2019.0 | NaN | 43 | Family,Game-Show,Reality-TV |

| 9163386 | tt9916840 | tvEpisode | Horrid Henry's Comic Caper | Horrid Henry's Comic Caper | 0 | 2014.0 | NaN | 11 | Adventure,Animation,Comedy |

| 9163393 | tt9916856 | short | The Wind | The Wind | 0 | 2015.0 | NaN | 27 | Short |

| 9163394 | tt9916880 | tvEpisode | Horrid Henry Knows It All | Horrid Henry Knows It All | 0 | 2014.0 | NaN | 10 | Adventure,Animation,Comedy |

1703471 rows × 9 columns

## Loading and Concatenating the list of dfs with 1 line

df_combined = pd.concat([pd.read_csv(file, index_col=0) for file in chunked_files])

df_combined.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

| tconst | titleType | primaryTitle | originalTitle | isAdult | startYear | endYear | runtimeMinutes | genres | |

|---|---|---|---|---|---|---|---|---|---|

| 13079 | tt0013274 | movie | Istoriya grazhdanskoy voyny | Istoriya grazhdanskoy voyny | 0 | 2021.0 | NaN | 133 | Documentary |

| 33790 | tt0034413 | short | Youth Gets a Break | Youth Gets a Break | 0 | 2001.0 | NaN | 20 | Short |

| 34790 | tt0035423 | movie | Kate & Leopold | Kate & Leopold | 0 | 2001.0 | NaN | 118 | Comedy,Fantasy,Romance |

| 39532 | tt0040241 | short | Color Rhapsodie | Color Rhapsodie | 0 | 2021.0 | NaN | 6 | Short |

| 43536 | tt0044326 | short | Abstronic | Abstronic | 0 | 2021.0 | NaN | 6 | Short |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 9163345 | tt9916754 | movie | Chico Albuquerque - Revelações | Chico Albuquerque - Revelações | 0 | 2013.0 | NaN | 49 | Documentary |

| 9163351 | tt9916766 | tvEpisode | Episode #10.15 | Episode #10.15 | 0 | 2019.0 | NaN | 43 | Family,Game-Show,Reality-TV |

| 9163386 | tt9916840 | tvEpisode | Horrid Henry's Comic Caper | Horrid Henry's Comic Caper | 0 | 2014.0 | NaN | 11 | Adventure,Animation,Comedy |

| 9163393 | tt9916856 | short | The Wind | The Wind | 0 | 2015.0 | NaN | 27 | Short |

| 9163394 | tt9916880 | tvEpisode | Horrid Henry Knows It All | Horrid Henry Knows It All | 0 | 2014.0 | NaN | 10 | Adventure,Animation,Comedy |

1703471 rows × 9 columns

- And now we can save this single dataframe as the final combined file we will use going forward.

## Saving the final combined dataframe

final_fname ='Data/title_basics_combined.csv.gz'

df_combined.to_csv(final_fname, compression='gzip', index=False)df_combined = pd.read_csv(final_fname)

df_combined.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

| tconst | titleType | primaryTitle | originalTitle | isAdult | startYear | endYear | runtimeMinutes | genres | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | tt0013274 | movie | Istoriya grazhdanskoy voyny | Istoriya grazhdanskoy voyny | 0 | 2021.0 | NaN | 133 | Documentary |

| 1 | tt0034413 | short | Youth Gets a Break | Youth Gets a Break | 0 | 2001.0 | NaN | 20 | Short |

| 2 | tt0035423 | movie | Kate & Leopold | Kate & Leopold | 0 | 2001.0 | NaN | 118 | Comedy,Fantasy,Romance |

| 3 | tt0040241 | short | Color Rhapsodie | Color Rhapsodie | 0 | 2021.0 | NaN | 6 | Short |

| 4 | tt0044326 | short | Abstronic | Abstronic | 0 | 2021.0 | NaN | 6 | Short |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 1703466 | tt9916754 | movie | Chico Albuquerque - Revelações | Chico Albuquerque - Revelações | 0 | 2013.0 | NaN | 49 | Documentary |

| 1703467 | tt9916766 | tvEpisode | Episode #10.15 | Episode #10.15 | 0 | 2019.0 | NaN | 43 | Family,Game-Show,Reality-TV |

| 1703468 | tt9916840 | tvEpisode | Horrid Henry's Comic Caper | Horrid Henry's Comic Caper | 0 | 2014.0 | NaN | 11 | Adventure,Animation,Comedy |

| 1703469 | tt9916856 | short | The Wind | The Wind | 0 | 2015.0 | NaN | 27 | Short |

| 1703470 | tt9916880 | tvEpisode | Horrid Henry Knows It All | Horrid Henry Knows It All | 0 | 2014.0 | NaN | 10 | Adventure,Animation,Comedy |

1703471 rows × 9 columns

- Bonus functions for getting the size of dataframes and files

import os

def get_memory_usage(df,units='mb'):

"""returns memory size of dataframe in requested units"""

memory = df.memory_usage().sum()

if units.lower()=='mb':

denom = 1e6

elif units.lower()=='gb':

denom = 1e9

else:

raise Exception('Units must be either "mb" or "gb"')

val = memory/denom

print(f"- Total Memory Usage = {val} {units.upper()}")

get_memory_usage(df_combined)- Total Memory Usage = 122.65004 MB

def get_filesize(fname, units='mb'):

"""Get size of file at given path in MB or GB"""

if units.lower()=='mb':

denom = 1e6

elif units.lower()=='gb':

denom = 1e9

else:

raise Exception('Units must be either "mb" or "gb"')

import os

size = os.path.getsize(fname)

val = size/denom

print(f"- {fname} is {val} {units.upper()} on disk.")get_filesize(final_fname)- Data/title_basics_combined.csv.gz is 37.488613 MB on disk.