The roachinfo effort is designed to gather quarterly usage, sizing and performance metrics from self-hosted clusters. This will help us to better understand the customer's journey and help them to be successful.

It is not always possible to get customers to run extensive data collection on all clusters. The Cockroach Enterprise Architect (CEA) often doesn’t have access to all layers of the organization, or it is too tedious to run infrastructure and SQL level collection across every cluster in an organization. To make this journey as easy as possible for the customer, the roachinfo program has been broken into two levels: roachinfo light and roachinfo deep-dive.

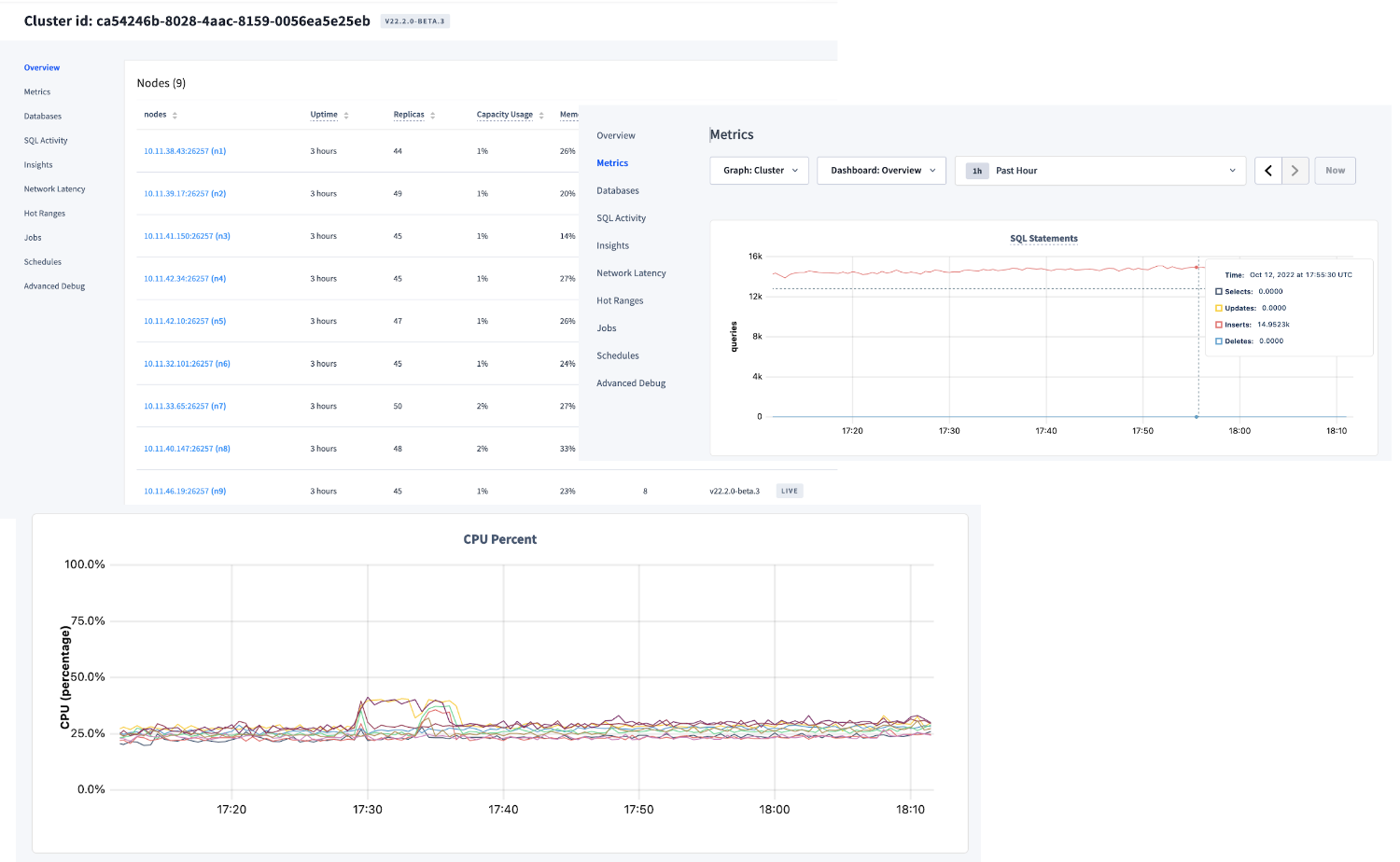

The roachinfo light level runs a simple SQL script and uses access to the DB console to gather usage and consumption data for the customer's clusters. The idea is to gather as much data as possible via the roachinfo-light.sh script, then have the CEA walk through some screenshots regarding usage. This data is then recorded for internal use at Cockroach Labs.

The four peak values (CPU%, Memory%, IOPS, and QPS) are meant to be gathered from the DB console by the customer and the CEA together. This ensures the customer works with the CEA to gather usage information from their clusters.

    ubuntu@ip-10-11-35-10:~$ ./roachinfo-light.sh
    Gathinging Cluster Information on Sun Oct 30 17:42:14 UTC 2022....
    Please enter some observations from DB console..
    CPU% peak observation : 70
    Memory% peak observation : 85
    IOPS peak observation : 1000
    QPS peak observation : 3000
    Summary of Cluster Statistics via SQL...
    Sample Date : 20221030
    ClusterID : e7a5bf31-5f0e-4a56-9626-44e566b63514
    Organization : Cockroach Labs - Production Testing
    Version : v22.2.0-alpha.3-1366-g0a11b74e23
    Build : CockroachDB CCL v22.2.0-alpha.3-1366-g0a11b74e23 (x86_64-pc-linux-gnu, built 2022/10/20 23:39:26, go1.19.1)
    Platform : linux amd64
    Total Nodes : 9
    vCPU total : 72
    Total Disk (GB) : 622
    Largest Table(GB) : 323
    Changefeeds : 0
    CPU% peak observation : 70
    Memory% peak observation : 85
    IOPS peak observation : 1000
    QPS peak observation : 3000
    ... Send Sample File to Cockroach Enterprise Architect : 20221030_e7a5bf31-5f0e-4a56-9626-44e566b63514.sql

The SQL file created is then uploaded into the cluster_usage table on the usage-selfhosted Cockroach Cloud cluster. The goal is to make this an automated process, but for the initial phase, the SQL files for each cluster are simply posted to the #usage_selfhosted Slack channel, where they are discovered and loaded.
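The sample file name in the output above appears to combine the sample date and the cluster ID. As a minimal sketch, the helpers below reproduce that naming pattern and sanity-check a manually entered percentage before it is recorded. Both function names are hypothetical and are not taken from roachinfo-light.sh, which may work differently.

```shell
# Hypothetical helpers; not part of the actual roachinfo-light.sh script.

# sample_file_name SAMPLE_DATE CLUSTER_ID
# Prints "<SAMPLE_DATE>_<CLUSTER_ID>.sql", the pattern seen in the sample output.
sample_file_name() {
  printf '%s_%s.sql\n' "$1" "$2"
}

# valid_pct VALUE
# Succeeds only if VALUE is a whole number from 0 to 100.
valid_pct() {
  case "$1" in
    ''|*[!0-9]*) return 1 ;;   # empty, or contains a non-digit character
  esac
  [ "$1" -le 100 ]
}

sample_file_name 20221030 e7a5bf31-5f0e-4a56-9626-44e566b63514
valid_pct 85 && echo "Memory% observation accepted"
```

A check like `valid_pct` would only apply to the CPU% and Memory% observations; IOPS and QPS are absolute counts and have no fixed upper bound.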

    CREATE TABLE cluster_usage (
        sample_date TIMESTAMPTZ NOT NULL,
        cluster_id UUID NOT NULL,
        organization TEXT,
        version TEXT,
        build TEXT,
        platform TEXT,
        node_count INT,
        vcpu_count INT,
        disk_gb INT,
        largest_table_gb INT,
        changefeeds INT,
        cpu_pct INT,
        mem_pct INT,
        iops_pct INT,
        qps INT,
        PRIMARY KEY (sample_date, cluster_id)
    );

The usage data will be accessible via CC for analysis by internal teams at Cockroach Labs.
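Once samples are loaded, an analysis could start from the most recent sample per cluster. The sketch below wraps such a query in a shell function so it can be piped to a SQL client. The function name and the `USAGE_DB_URL` variable are hypothetical, and the column selection is only an illustration based on the cluster_usage definition above.

```shell
# latest_usage_query
# Prints a query that returns the newest sample for each cluster.
# DISTINCT ON keeps the first row per cluster_id under the given ordering.
latest_usage_query() {
  cat <<'SQL'
SELECT DISTINCT ON (cluster_id)
       cluster_id, organization, sample_date, version,
       node_count, vcpu_count, disk_gb, cpu_pct, mem_pct, qps
FROM cluster_usage
ORDER BY cluster_id, sample_date DESC;
SQL
}

# Example use (USAGE_DB_URL is an assumed connection string):
#   latest_usage_query | cockroach sql --url "$USAGE_DB_URL"
```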

In addition to the information gathered with the roachinfo-light.sh script, the roachinfo deep-dive level digs further into usage patterns and cluster health. To do this, roachinfo-light.sh is run as before, and a “tsdump” and a “debug zip” are also gathered and submitted via a support ticket. This ensures that the customer is trained on these procedures and allows us to analyze usage patterns and cluster health in more depth.

To gather a debug zip, the customer should follow the standard procedures described in their own runbooks. The debug zip does not have to include all the log files; those from the last 24 hours are sufficient. See the example below and refer to the debug zip documentation for more details.

    date '+%Y-%m-%d %H:%M'
    2022-10-12 17:12
    cockroach debug zip ./debug.zip --redact-logs --files-from='2022-10-11 17:12'

Once the debug zip is gathered, the customer will open a support ticket and attach the debug zip file. The TSE team will review the debug zip for anomalies with their standard tooling and report back to the customer and CEA.
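In the debug zip example above, the `--files-from` value is 24 hours before the time printed by `date`. A minimal sketch of computing that value instead of typing it by hand follows. It assumes GNU `date` (the `-d` option; BSD and macOS `date` use different flags), and the function name `files_from_ts` is hypothetical.

```shell
# files_from_ts [REFERENCE]
# Prints the time 24 hours before REFERENCE (default: now) in the
# 'YYYY-MM-DD HH:MM' form used with --files-from.
# Assumes GNU date; goes through epoch seconds to avoid parsing surprises.
files_from_ts() {
  ref_epoch=$(date -d "${1:-now}" +%s) || return 1
  date -d "@$((ref_epoch - 86400))" '+%Y-%m-%d %H:%M'
}

# Print the command for review rather than running it.
echo "cockroach debug zip ./debug.zip --redact-logs --files-from='$(files_from_ts)'"
```

Printing the command first lets the customer confirm the time window against their own runbook before collecting anything.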

The tsdump command extracts the time series data, which is then piped to gzip and stored as a compressed file.

    cockroach debug tsdump --host localhost --format csv --insecure \
      --from '2022-10-12 00:00' --to '2022-10-13 00:00' | gzip > tsdump.csv.gz

Once this data is collected, the customer will also upload it to the support ticket created for the debug zip. The CEA can analyze the data and upload it to oggy, which displays time series data gathered from customers.
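Because the tsdump output is piped into gzip, the pipeline reports gzip's exit status, so a failed dump can still leave behind a valid but empty archive. As a sketch, a quick check before uploading might look like the following. The function name `check_tsdump` is hypothetical, and the check only counts lines without inspecting the CSV contents.

```shell
# check_tsdump FILE
# Succeeds and prints the number of lines if FILE is a readable,
# non-empty gzip archive; fails with a message on stderr otherwise.
check_tsdump() {
  if ! gzip -t "$1" 2>/dev/null; then
    echo "$1 is missing or is not a valid gzip file" >&2
    return 1
  fi
  # Arithmetic expansion strips any padding that wc adds on some systems.
  lines=$(( $(gzip -dc "$1" | wc -l) ))
  if [ "$lines" -eq 0 ]; then
    echo "$1 contains no data" >&2
    return 1
  fi
  echo "$lines"
}

# Example use:
#   check_tsdump tsdump.csv.gz && echo "ready to attach to the support ticket"
```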

Once the debug zip and tsdump have been analyzed, the CEA can schedule a follow-up session to discuss the findings.