Learning Object-Language Alignments for Open-Vocabulary Object Detection

Chuang Lin, Peize Sun, Yi Jiang, Ping Luo, Lizhen Qu, Gholamreza Haffari, Zehuan Yuan, Jianfei Cai

ICLR 2023 (https://arxiv.org/abs/2211.14843)

We are excited to announce that our paper was accepted to ICLR 2023! 🥳🥳🥳


- Linux or macOS with Python ≥ 3.7

- PyTorch ≥ 1.9 and a matching torchvision. Install them together following pytorch.org to make sure their versions match. Note: please check that the PyTorch version matches the one required by Detectron2.

- Detectron2: follow the Detectron2 installation instructions.
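The minimum versions listed above can be checked before installing anything else. The following is a minimal sketch, not part of VLDet: `parse_version` and `meets_minimum` are hypothetical helper names, the parser keeps only the leading numeric components (so build tags such as `+cu111` are ignored), and `torch` is imported only if it is already installed.

```python
import re
import sys
from typing import Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn a version string such as '1.9.0+cu111' into (1, 9, 0).

    Simplification: the local build tag after '+' is dropped and parsing
    stops at the first component without a leading number.
    """
    core = version.split("+")[0]
    parts = []
    for piece in core.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def meets_minimum(version: str, minimum: str) -> bool:
    """Return True if `version` is at least `minimum` (numeric tuple comparison)."""
    return parse_version(version) >= parse_version(minimum)


if __name__ == "__main__":
    py = ".".join(str(n) for n in sys.version_info[:3])
    print(f"Python {py}: {'OK' if meets_minimum(py, '3.7') else 'too old (need >= 3.7)'}")
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed")
    else:
        ok = meets_minimum(str(torch.__version__), "1.9")
        print(f"PyTorch {torch.__version__}: {'OK' if ok else 'too old (need >= 1.9)'}")
        print(f"CUDA available: {torch.cuda.is_available()}")
```

Comparing integer tuples rather than raw strings avoids the classic pitfall where "1.10" would sort before "1.9".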

```bash
conda create --name VLDet python=3.7 -y
conda activate VLDet
conda install pytorch torchvision torchaudio cudatoolkit=11.1 -c pytorch-lts -c nvidia

# under your working directory
git clone https://github.com/clin1223/VLDet.git
cd VLDet
cd detectron2
pip install -e .
cd ..
pip install -r requirements.txt
```

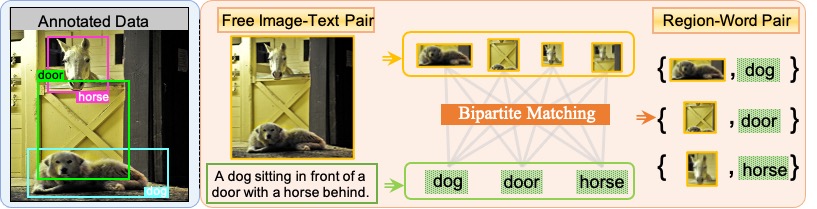

- Directly learns an open-vocabulary object detector from image-text pairs by formulating the task as a bipartite matching problem.

- Achieves state-of-the-art results on open-vocabulary LVIS and open-vocabulary COCO.

- Scales and extends the novel-object vocabulary easily.
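To make the bipartite-matching idea more concrete, here is a toy sketch that assigns each caption word to a distinct region proposal so that the total region-word similarity is maximized. It is an illustration under stated assumptions, not the VLDet implementation: the similarity scores are made up, `match_words_to_regions` is a hypothetical name, and the search is brute force over permutations, which is only practical for tiny inputs (a real system would use a Hungarian-algorithm solver such as SciPy's `scipy.optimize.linear_sum_assignment`).

```python
from itertools import permutations
from typing import Dict, List, Sequence, Tuple


def match_words_to_regions(
    words: Sequence[str],
    similarity: List[List[float]],
) -> Dict[str, int]:
    """Assign every word to a distinct region, maximizing total similarity.

    similarity[i][j] is an (assumed) score between word i and region j.
    Requires len(words) <= number of regions. Brute force, toy sizes only.
    """
    num_words = len(words)
    num_regions = len(similarity[0]) if similarity else 0
    if num_words > num_regions:
        raise ValueError("need at least as many regions as words")

    best_score = float("-inf")
    best_assignment: Tuple[int, ...] = ()
    # Each permutation picks an ordered set of distinct regions, one per word.
    for regions in permutations(range(num_regions), num_words):
        score = sum(similarity[i][r] for i, r in enumerate(regions))
        if score > best_score:
            best_score, best_assignment = score, regions
    return {word: region for word, region in zip(words, best_assignment)}


if __name__ == "__main__":
    words = ["dog", "frisbee"]
    similarity = [
        [0.9, 0.8, 0.1],   # "dog" vs. regions 0, 1, 2
        [0.85, 0.1, 0.2],  # "frisbee" vs. regions 0, 1, 2
    ]
    print(match_words_to_regions(words, similarity))  # {'dog': 1, 'frisbee': 0}
```

The example shows why a joint assignment matters: greedily giving "dog" its best region (0) would leave "frisbee" with a poor match (total 1.1 at best), while the one-to-one matching picks regions 1 and 0 for a total of 1.65.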

Please prepare the datasets first.

The VLDet models are fine-tuned from the corresponding Box-Supervised models (specified by MODEL.WEIGHTS in the config files). Please train or download the Box-Supervised models and place them under VLDet_ROOT/models/ before training the VLDet models.
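Since training starts from the checkpoint named by MODEL.WEIGHTS, a missing Box-Supervised file is an easy mistake to make. Below is a hypothetical pre-flight check, not part of VLDet, sketched under two assumptions: the config has already been loaded into a plain nested dict (for example with PyYAML's `yaml.safe_load`), and relative paths are resolved against the repository root you launch training from. It does not follow `_BASE_` inheritance, so a weights entry defined only in a base config is reported as missing.

```python
from pathlib import Path
from typing import Any, Mapping, Optional


def get_model_weights(cfg: Mapping[str, Any]) -> Optional[str]:
    """Return cfg['MODEL']['WEIGHTS'] if present and non-empty, else None.

    Assumes `cfg` is a plain nested dict; `_BASE_` files are NOT merged.
    """
    model = cfg.get("MODEL") or {}
    weights = model.get("WEIGHTS")
    return weights or None


def weights_available(cfg: Mapping[str, Any], root: Path) -> bool:
    """Check that a local MODEL.WEIGHTS file exists under `root`.

    Remote references such as 'https://...' or 'detectron2://...' are
    treated as available, since they are fetched at load time.
    """
    weights = get_model_weights(cfg)
    if weights is None:
        return False
    if "://" in weights:
        return True
    path = Path(weights)
    if not path.is_absolute():
        path = root / path
    return path.is_file()


if __name__ == "__main__":
    # Hypothetical config fragment; the file name is a placeholder.
    cfg = {"MODEL": {"WEIGHTS": "models/box_supervised.pth"}}
    print(weights_available(cfg, Path(".")))
```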

To train a model, run

```bash
python train_net.py --num-gpus 8 --config-file /path/to/config/name.yaml
```

To evaluate a trained or pretrained model, run

```bash
python train_net.py --num-gpus 8 --config-file /path/to/config/name.yaml --eval-only MODEL.WEIGHTS /path/to/weight.pth
```
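The training and evaluation commands differ only by `--eval-only` and the trailing `KEY VALUE` pair. Assuming `train_net.py` uses Detectron2's `default_argument_parser` (as Detectron2-based projects such as Detic do), such trailing pairs are collected into `opts` and merged into the config as overrides. The sketch below assembles either command as an argument list for scripting; `build_command` is a hypothetical helper and the paths are placeholders.

```python
import shlex
from typing import Dict, List, Optional


def build_command(
    config_file: str,
    num_gpus: int = 8,
    eval_only: bool = False,
    overrides: Optional[Dict[str, str]] = None,
) -> List[str]:
    """Assemble the train_net.py argument list used for training or evaluation.

    Overrides are appended as flat KEY VALUE pairs (e.g. MODEL.WEIGHTS path),
    which a Detectron2-style parser collects into its `opts` list.
    """
    cmd = [
        "python", "train_net.py",
        "--num-gpus", str(num_gpus),
        "--config-file", config_file,
    ]
    if eval_only:
        cmd.append("--eval-only")
    for key, value in (overrides or {}).items():
        cmd.extend([key, value])
    return cmd


if __name__ == "__main__":
    # Placeholder paths; replace them with a real config and checkpoint.
    cmd = build_command(
        "/path/to/config/name.yaml",
        eval_only=True,
        overrides={"MODEL.WEIGHTS": "/path/to/weight.pth"},
    )
    # shlex.quote keeps the printed line shell-safe (works on Python 3.7).
    print(" ".join(shlex.quote(part) for part in cmd))
```

Run as a script, it prints the evaluation command for the placeholder paths; passing the list to `subprocess.run(cmd, check=True)` would launch it without going through a shell.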

Download the trained network weights here.

| OV_COCO | box mAP50 | box mAP50_novel |
|---|---|---|
| config_RN50 | 45.8 | 32.0 |

| OV_LVIS | mask mAP_all | mask mAP_novel |
|---|---|---|
| config_RN50 | 30.1 | 21.7 |
| config_Swin-B | 38.1 | 26.3 |

If you find this project useful for your research, please use the following BibTeX entry.

```bibtex
@article{VLDet,
  title={Learning Object-Language Alignments for Open-Vocabulary Object Detection},
  author={Lin, Chuang and Sun, Peize and Jiang, Yi and Luo, Ping and Qu, Lizhen and Haffari, Gholamreza and Yuan, Zehuan and Cai, Jianfei},
  journal={arXiv preprint arXiv:2211.14843},
  year={2022}
}
```

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

This repository is built on top of Detectron2, Detic, RegionCLIP, and OVR-CNN. We thank the authors of these projects for their hard work.