Data.World

SLACK CHANNEL: TBD

Project Description:

This repo is a shareable staging area for wrangling data before transfer to an open data portal (e.g., https://data.world). It stores raw data and wrangling scripts. Citizen Labs members can push raw data into the repo, and authorized members can write scripts to prepare that data for transfer to an open data portal.

With some effort, we can create a repository of data and scripts to facilitate future data wrangling and help keep our open data manageable.

Table of Contents

- Upload Quick Start

- Definitions

- Applications

- Raw-data

- Clean-data

- Scripts

- API

- Installation

- Data Deployment

- Data Processing

- Expectations

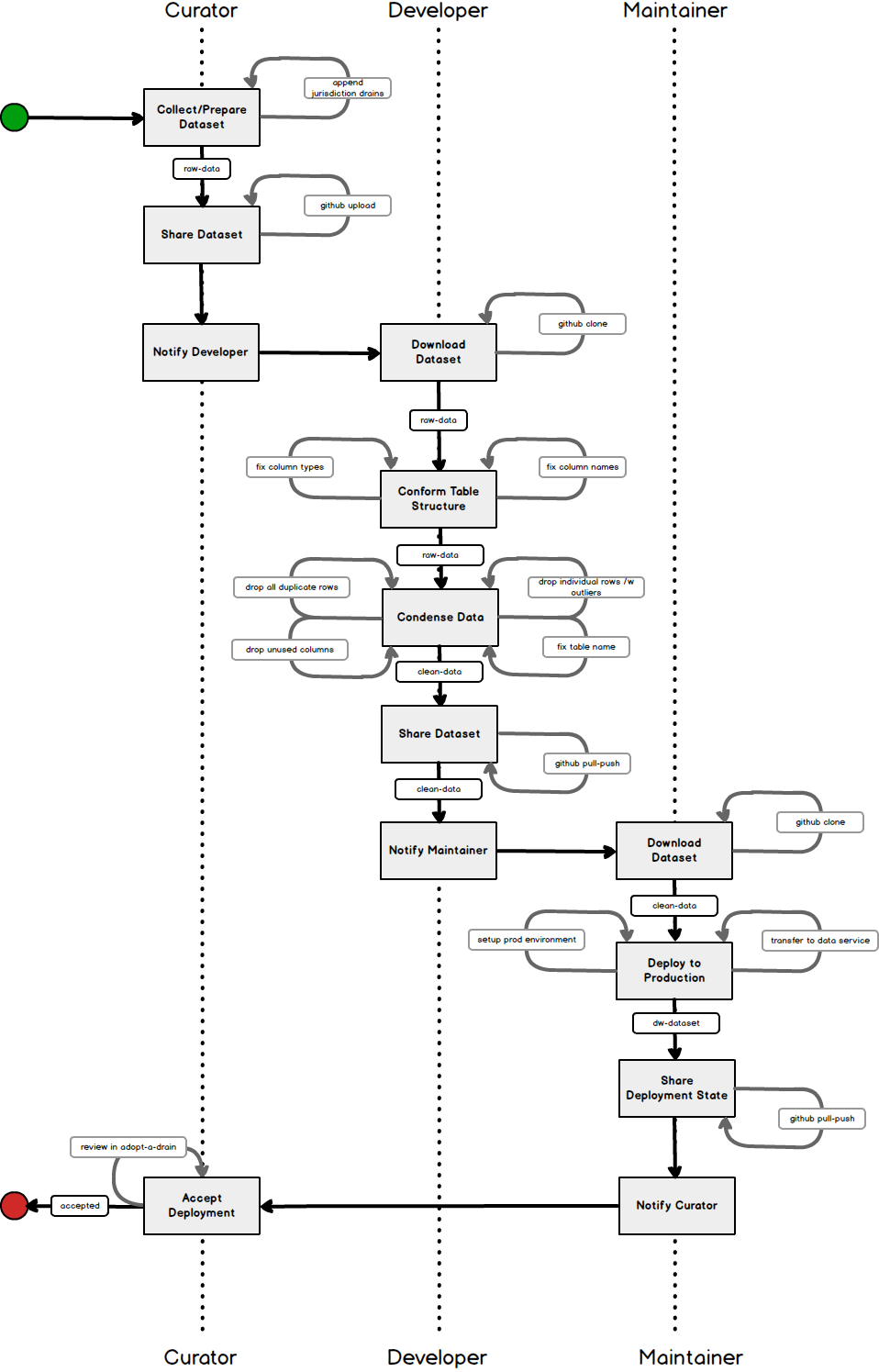

- Process

Diagrams

Quick Start

- Curator Process, starts in raw-data folder

- Developer Process

- Maintainer Process

Definitions

- Comma Separated Values (CSV). CSV is a method of formatting values in a text file.

- GitHub is a hosting service for Git repositories. We use it to version files.

- Open Data

- An Open Data Portal is a technology that facilitates sharing datasets via an application programming interface (API).

Citizen Labs Members

Members and areas of responsibility.

| Name | Role | Raw-data | Clean-data | Scripts | Open Data |
|---|---|---|---|---|---|
| Cara D | Curator | X | | | |
| Eileen B | Curator | X | | | |
| James W | Developer | X | X | | |
| Jace B | Maintainer | X | X | | |

Member Roles & Responsibilities

Declares the duties of members.

| Curator | Developer | Maintainer |
|---|---|---|
| Curates dataset(s) | Writes/Updates scripts | Puts data into production |
| Loads raw dataset to GitHub | Tests dataset load | Removes data from production |
| Creates GIT pull request | Maintains GIT scripts folder | |
| Signoff on Prod dataset load | Maintains clean-data folder | Maintains the GIT master branch |
| Creates clean-data set | Maintains the Prod Environment | |
| Maintains Dev Environment | | |
| Creates GIT pull request | | |
| Adds raw-data sub-folders | | |

Member Provisioning

| Role | CL Membership | GHF Account | DDW Account | CLDW Account | Jupyter Notebook |
|---|---|---|---|---|---|
| Curator | X | X | | | |
| Developer | X | X | X | X | |
| Maintainer | X | X | X | X | |

- GHF Account is GitHub Free Account

- CL Membership is Citizen Labs Membership

- DDW Account is Development Data.World Account. This is the Developer's personal account.

- CLDW Account is Citizen Labs Data.World Account

- X is a link to more info or install instructions

Applications & Datasets

For every dataset in the citizenlabs/data.world repo, there exists at least one application. For instance: the Grand River Drains data (gr_drains.csv) is used by the "Adopt a Drain" application.

| Application | Dataset |

|---|---|

| Adopt a Drain | gr_drains |

Raw-data

Raw data (raw-data) is the original data provided by a client (aka, curator).

Raw data is expected to be text organized in rows and columns, with values separated by commas (CSV). The first row must list the column names.
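A Curator or Developer may want to check a file against these expectations before committing it. The following is a minimal sketch using only the Python standard library; the function name `check_raw_csv` and the sample column names are hypothetical and not part of this repo.

```python
import csv
import io


def check_raw_csv(text):
    """Return a list of problems found in raw CSV text (empty list means OK)."""
    rows = list(csv.reader(io.StringIO(text)))
    if not rows:
        return ["file is empty"]
    problems = []
    header = rows[0]
    # The first row must name every column.
    if any(not name.strip() for name in header):
        problems.append("header row has a blank column name")
    if len(set(header)) != len(header):
        problems.append("header row has duplicate column names")
    # Every data row should have as many values as the header has names.
    for row_no, row in enumerate(rows[1:], start=2):
        if len(row) != len(header):
            problems.append(
                f"row {row_no} has {len(row)} values, expected {len(header)}"
            )
    return problems


# Hypothetical sample: the second data row is missing a value.
sample = "drain_id,lat,lon\n1,42.96,-85.67\n2,42.97\n"
print(check_raw_csv(sample))
```

Row numbers count the header as row 1, so the short row in the sample is reported as row 3.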

The Curator is responsible for putting raw data into the raw-data folder.

More info about providing raw-data can be found here.

Clean-data

Raw data doesn't always conform to the expectations of an application.

Cleaning includes renaming columns, enforcing types, formatting dates, and removing duplicate rows and outliers.
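As an illustration of those cleaning steps, here is a minimal standard-library sketch. The column names, the `RENAME` mapping, the US-style date format, and the `max_depth_ft` outlier threshold are all assumptions made up for the example; real scripts in this repo may use other tools and rules.

```python
import csv
import io
from datetime import datetime

# Hypothetical mapping from raw column names to the names an application expects.
RENAME = {"Drain ID": "drain_id", "Installed": "installed_on", "Depth (ft)": "depth_ft"}


def clean_rows(raw_text, max_depth_ft=100.0):
    """Rename columns, enforce types, normalize dates, drop duplicates and outliers."""
    cleaned, seen = [], set()
    for record in csv.DictReader(io.StringIO(raw_text)):
        if None in record or None in record.values():
            continue  # malformed row: too many or too few values
        row = {RENAME.get(name, name): value.strip() for name, value in record.items()}
        try:
            row["drain_id"] = int(row["drain_id"])    # enforce integer ids
            row["depth_ft"] = float(row["depth_ft"])  # enforce numeric depth
            # Reformat MM/DD/YYYY dates as ISO 8601 (YYYY-MM-DD).
            parsed = datetime.strptime(row["installed_on"], "%m/%d/%Y")
            row["installed_on"] = parsed.date().isoformat()
        except ValueError:
            continue  # skip rows whose values cannot be coerced
        if not 0 <= row["depth_ft"] <= max_depth_ft:
            continue  # drop outliers
        key = tuple(row.items())
        if key in seen:
            continue  # drop duplicate rows
        seen.add(key)
        cleaned.append(row)
    return cleaned


# Hypothetical raw data: one good row, a duplicate, an outlier, and a bad date.
sample = (
    "Drain ID,Installed,Depth (ft)\n"
    "1,03/15/2017,4.5\n"
    "1,03/15/2017,4.5\n"
    "2,07/04/2018,9999\n"
    "3,not a date,3.0\n"
)
print(clean_rows(sample))
```

Only the first row survives in this sample. Silently skipping bad rows keeps the sketch short; a real script would more likely log or report them so the Curator can correct the source.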

Clean data is stored in the clean-data folder.

The Developer is responsible for cleaning raw data.

More info about clean-data can be found here.

Scripts

Scripts encapsulate the steps that process raw-data into clean-data and finally move the data to https://data.world. Each dataset in the raw-data folder has a script that controls how it is cleaned and moved into the test or production environment.

- We use Python, in the form of Jupyter Notebooks, to build, document, and run our scripts.

- The Developer is responsible for writing and testing scripts to clean the raw data.

- The Maintainer is responsible for running scripts that create or refresh data used by applications.

More info about scripts can be found here.
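The overall shape of such a script (read raw-data, write clean-data, then hand the result to the test or production environment) can be sketched as follows. This is an illustration only: `run_script`, `tidy`, and the injected `upload` callable are hypothetical names, and the actual upload to https://data.world is deliberately left out.

```python
import csv
import tempfile
from pathlib import Path


def run_script(raw_path, clean_path, clean_row, upload, environment="test"):
    """Clean one raw CSV, write the clean copy, then hand it to an uploader."""
    if environment not in ("test", "prod"):
        raise ValueError(f"unknown environment: {environment!r}")
    with open(raw_path, newline="") as src:
        rows = [clean_row(row) for row in csv.DictReader(src)]
    rows = [row for row in rows if row is not None]  # None means "drop this row"
    if not rows:
        raise ValueError("no rows survived cleaning")
    Path(clean_path).parent.mkdir(parents=True, exist_ok=True)
    with open(clean_path, "w", newline="") as dst:
        writer = csv.DictWriter(dst, fieldnames=list(rows[0]))
        writer.writeheader()
        writer.writerows(rows)
    # The uploader is passed in so the same script can target test or prod,
    # and so it can be exercised without network access.
    upload(clean_path, environment)
    return len(rows)


def tidy(row):
    """Hypothetical cleaner: trim names and drop rows without one."""
    name = row["Name"].strip()
    return {"drain_id": int(row["Drain ID"]), "name": name} if name else None


# Demo in a temporary directory with a stand-in uploader that only records calls.
calls = []
with tempfile.TemporaryDirectory() as tmp:
    raw_file = Path(tmp, "raw-data", "gr_drains.csv")
    raw_file.parent.mkdir()
    raw_file.write_text("Drain ID,Name\n1, Fulton St \n2,\n")
    clean_file = Path(tmp, "clean-data", "gr_drains.csv")
    count = run_script(
        raw_file, clean_file, tidy,
        upload=lambda path, env: calls.append((Path(path).name, env)),
    )
    clean_text = clean_file.read_text()
print(count, calls)
```

Passing the uploader as a parameter is one way to let a Developer test against a personal account while a Maintainer runs the same script against production.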

API

Each dataset has an Application Programming Interface (API).

APIs are a common point through which to share data and services.

Once a dataset is loaded into the https://data.world portal, an API is automatically generated.

More information on using a https://data.world API can be found here.
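As a rough illustration of what an application-side call might look like, the sketch below builds (but does not send) an HTTP request for a SQL query against a dataset. The base URL and `/sql/{owner}/{id}` path, the `Accept` header, and the `citizenlabs`/`gr-drains` owner and dataset id are assumptions for the example and should be confirmed against the data.world API documentation; `YOUR_TOKEN` is a placeholder.

```python
import urllib.parse
import urllib.request

# Assumed base URL; confirm against the data.world API documentation.
API_ROOT = "https://api.data.world/v0"


def build_sql_request(owner, dataset_id, query, token):
    """Build (but do not send) a request that runs a SQL query on a dataset."""
    params = urllib.parse.urlencode({"query": query})
    url = f"{API_ROOT}/sql/{owner}/{dataset_id}?{params}"
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {token}", "Accept": "text/csv"},
    )


# Hypothetical owner and dataset id; the token is a placeholder.
request = build_sql_request(
    "citizenlabs", "gr-drains", "SELECT * FROM gr_drains LIMIT 5", "YOUR_TOKEN"
)
print(request.full_url)
```

Sending the request with `urllib.request.urlopen(request)` would require a valid API token and network access.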

Installation

There is no public or production installation of the scripts. Developers and Maintainers each install the scripts locally on their own computers. Running the scripts requires Jupyter Notebook.

Details for local installation can be found here.

Data Deployment

Data is deployed to the production environment using scripts. The Maintainer is responsible for data deployment.

Details for deployment are found here.

Process

Data Flow

The path data takes through the process.

| Actor (local) | Action | Data | Location |
|---|---|---|---|
| Curator | push (>) | raw-data | GitHub |
| Developer | pull (<) | raw-data | GitHub |
| Developer | push (>) | clean-data | GitHub |
| Developer | push (>) | test-data | Data.World.Test |
| Maintainer | pull (<) | clean-data | GitHub |
| Maintainer | push (>) | open-data | Data.World.Prod |
| App(s) | pull (<) | open-data | Data.World.Prod |

Process Overview

This is the best-case scenario, with no failures. Use it as a guide to completing the process successfully.