This repository contains the code and data used in the following paper:

"Weakly-supervised Visual Instrument-playing Action Detection in Videos" by Jen-Yu Liu, Yi-Hsuan Yang, and Shyh-Kang Jeng

It was submitted to a journal and is currently under review. The preprint version can be found here: arXiv

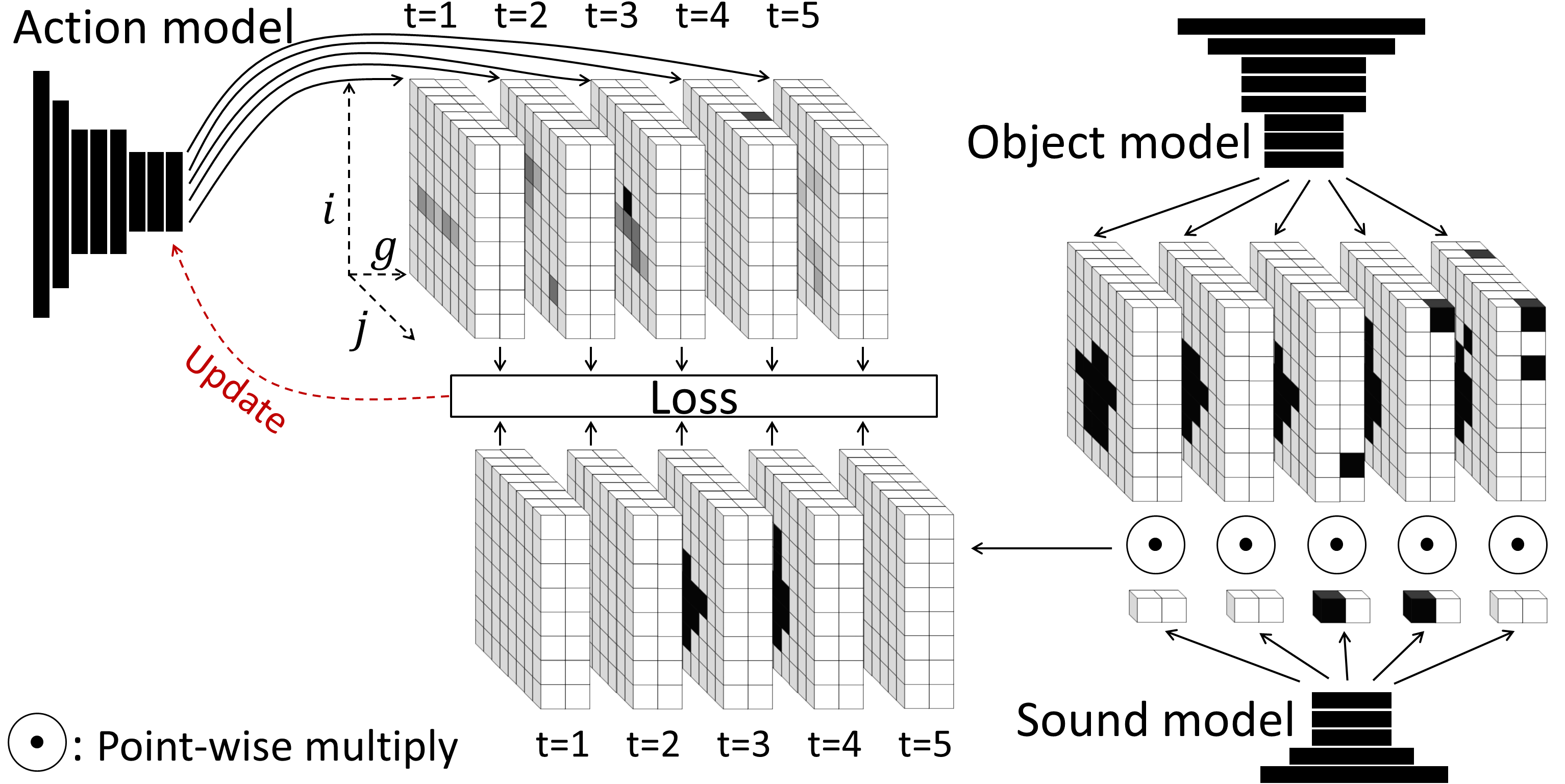

In this work, we detect instrument-playing actions in videos both temporally and spatially; that is, we want to know when and where the playing actions occur.

The main difficulty is the lack of training data with detailed action locations. We deal with this problem by using two auxiliary models: a sound model and an object model. The sound model predicts the temporal locations of instrument sounds and provides temporal supervision. The object model predicts the spatial locations of the instrument objects and provides spatial supervision.
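To make the idea of combining the two auxiliary models concrete, here is a minimal illustrative sketch. It is not the procedure from the paper. It assumes the sound model gives one probability per frame and the object model gives one spatial map per frame, and it multiplies the two so that a location scores high only when the instrument is both heard and seen. The function name, the data shapes, and the product rule itself are assumptions made for illustration.

```python
def combine_sound_and_object(sound_probs, object_maps):
    """Scale each frame's object map by that frame's sound probability.

    sound_probs: list of floats, one per frame (hypothetical sound-model output).
    object_maps: list of 2-D lists of floats, one map per frame
                 (hypothetical object-model output).
    Returns a list of 2-D lists with the same shape as object_maps.
    """
    if len(sound_probs) != len(object_maps):
        raise ValueError("need one sound probability per frame")
    return [
        [[p * value for value in row] for row in frame_map]
        for p, frame_map in zip(sound_probs, object_maps)
    ]


# Two frames: the instrument is visible in both, but audible only in the second.
sound = [0.0, 1.0]
objects = [
    [[0.5, 1.0], [0.0, 0.0]],
    [[0.5, 1.0], [0.0, 0.0]],
]
target = combine_sound_and_object(sound, objects)
```

In this toy input, the first frame's target is zero everywhere because no sound is predicted, while the second frame keeps the object map unchanged.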

| Instrument | |
|---|---|
| Flute |  |
| Violin |  |
| Piano |  |
| Saxophone |  |

| Instrument | |
|---|---|
| Violin |  |
| Cello |  |
| Flute |  |

http://mac.citi.sinica.edu.tw/~liu/videos_instrument_playing_detection_web.zip

python setup.py install

We manually annotated the playing actions in clips from 135 videos (15 for each instrument). In total, 5,400 frames are annotated.

data/action_annotations/

http://mac.citi.sinica.edu.tw/~liu/data/InstrumentPlayingDetection/MedleyDB.zip

This file includes the features and annotations converted from the original timestamps for the evaluation in this work. The original files are from http://medleydb.weebly.com/

Load into a Python dict with `torch.load`.
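A minimal sketch of loading and inspecting such a file is shown below. `load_params` and `describe` are hypothetical helper names, not part of this repository, and the layout of the loaded dict is not documented here, so the sketch only lists keys with their shapes or types.

```python
def load_params(path):
    """Load a saved file with torch.load (requires PyTorch)."""
    import torch  # imported lazily so describe() works without PyTorch

    # map_location="cpu" lets a file saved on a GPU load on a CPU-only machine.
    return torch.load(path, map_location="cpu")


def describe(params):
    """Return {key: shape or type name} for a quick look at a loaded dict."""
    summary = {}
    for key, value in params.items():
        shape = getattr(value, "shape", None)
        summary[key] = tuple(shape) if shape is not None else type(value).__name__
    return summary


# Example usage (assumes the file has already been downloaded):
# params = load_params("pretrained_models/params.SOT0503.torch")
# print(describe(params))
```

Recent PyTorch releases default to `weights_only=True` in `torch.load`. If an older file fails to load for that reason, passing `weights_only=False` may help, but only do so for files you trust.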

FCN trained with AudioSet

FCN trained with YouTube8M, pretrained with VGG_CNN_M_2048 model

Download: http://mac.citi.sinica.edu.tw/~liu/data/InstrumentPlayingDetection/models/object/params.torch

FCN trained with YouTube8M

Download:

Video tag as target (VT): http://mac.citi.sinica.edu.tw/~liu/data/InstrumentPlayingDetection/models/action/params.VT.torch

Sound*Object as target (SOT0503): http://mac.citi.sinica.edu.tw/~liu/data/InstrumentPlayingDetection/models/action/params.SOT0503.torch

scripts/AudioSet/test.FCN.merged_tags.multilogmelspec.py

scripts/YouTube8M/compute_predictions.fragment.dense_optical_flow.no_resize.py

scripts/YouTube8M/extract_image.fragment.no_padding.py

scripts/YouTube8M/test.action.temporal.py

scripts/YouTube8M/test.action.spatial.py

wget -P pretrained_models http://mac.citi.sinica.edu.tw/~liu/data/InstrumentPlayingDetection/models/action/params.SOT0503.torch

cd scripts

python download_videos.py

python compute_predictions_for_sample_videos.fragment.dense_optical_flow.no_resize.py
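If `wget` is not available, the pretrained models can also be fetched from Python. The sketch below uses only the standard library. The URLs are the download links listed in this README; the dictionary keys and function names are hypothetical and chosen for illustration.

```python
import os
import urllib.request

BASE_URL = "http://mac.citi.sinica.edu.tw/~liu/data/InstrumentPlayingDetection/models"

# Relative paths taken from the download links in this README.
# The keys are illustrative labels, not names used by the repository.
MODEL_PATHS = {
    "object": "object/params.torch",
    "action_VT": "action/params.VT.torch",
    "action_SOT0503": "action/params.SOT0503.torch",
}


def model_url(name):
    """Build the full download URL for a model label."""
    return f"{BASE_URL}/{MODEL_PATHS[name]}"


def local_path(name, out_dir="pretrained_models"):
    """Return where the file is stored locally (same layout as `wget -P`)."""
    return os.path.join(out_dir, os.path.basename(MODEL_PATHS[name]))


def download_model(name, out_dir="pretrained_models"):
    """Download a model file unless it already exists; return its local path."""
    os.makedirs(out_dir, exist_ok=True)
    dest = local_path(name, out_dir)
    if not os.path.exists(dest):
        urllib.request.urlretrieve(model_url(name), dest)
    return dest


# Example usage (performs a network download):
# download_model("action_SOT0503")
```

Calling `download_model("action_SOT0503")` stores the file at `pretrained_models/params.SOT0503.torch`, the same location the `wget -P pretrained_models` command above uses.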