The purpose of this project is to demonstrate various skills associated with data engineering projects, in particular developing ETL pipelines with Airflow, storing data, and defining efficient data models such as a star schema.

For this project I build a pipeline using data from the Madrid Public Libraries provided by the Madrid OpenData Portal, focusing only on book loans. For test purposes only the data for 2018 is used, but data for other years could easily be added.

The main tools used in this project are Airflow, pandas and Postgres.

Because we are dealing with MARCXML data, the pymarc library is also used.

These selected tools are enough for the scope of this project, which is to obtain the number of books on loan in Madrid during 2018 (this could be extended by adding more datasets).

This data could be used to answer questions such as:

- Which were the most requested books during a period of time

- Which author is the most read

- Which library lent the most books

- Which Madrid district lent the most books

- Other book-related questions

The two main sources used in this project are:

- Library Catalog (Bibliotecas Públicas de Madrid. Catálogo): which provides the whole catalog of items held in all the Public Libraries of Madrid.

- Historical active Loans (Bibliotecas Públicas de Madrid. Préstamos Activos): which provides the history of all loans from 2014 until the current year.

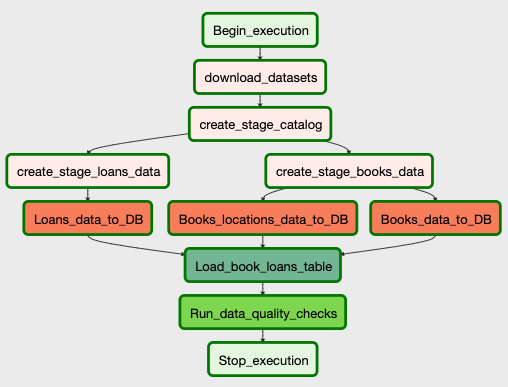

The pipeline is executed using Airflow. Here is the diagram of the pipeline:

The first step of the pipeline is to download all the datasets from the repository. For this I'm using an Airflow PythonOperator and the wget library. The data is stored in the datasets folder.
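
The download step could be sketched roughly as follows. This is a minimal sketch under assumptions, not the project's actual task: the function name `download_datasets`, the `fetch` parameter and the shape of the `urls` mapping are hypothetical. The default fetcher calls `wget.download(url, out=path)` from the `wget` package, imported lazily so the function can also be exercised with a stand-in fetcher.

```python
import os


def _wget_fetch(url, path):
    """Default fetcher: download one URL with the wget package."""
    import wget  # imported lazily; only needed for real downloads

    wget.download(url, out=path)


def download_datasets(urls, dest_dir="datasets", fetch=_wget_fetch):
    """Download every URL into dest_dir and return the local file paths.

    `urls` maps a local file name to its source URL (hypothetical layout).
    """
    os.makedirs(dest_dir, exist_ok=True)
    paths = []
    for filename, url in urls.items():
        path = os.path.join(dest_dir, filename)
        if not os.path.exists(path):  # skip files that were already downloaded
            fetch(url, path)
        paths.append(path)
    return paths
```

In a DAG, a function like this would be passed as `python_callable` to a `PythonOperator`, with the URL mapping supplied through `op_kwargs`.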

The loan records are stored in CSV format, so this data can be used directly with no processing.

The catalog dataset is provided in MARCXML format, so it needs to be converted before the data can be used. For this task I'm using the pymarc library, which can convert MARC into JSON. Within the scope of this project only the data for books is extracted. To keep only those items, the script filters the records on some specific MARC fields (as stated in the documentation provided by the dataset repository). Finally, only those records are stored in a JSON file, which is used in the next step.
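
The filter-and-convert idea can be illustrated with a small sketch. Note the swaps: it uses the standard library's `xml.etree.ElementTree` instead of pymarc, so that it runs without extra dependencies, and it assumes a book is a record whose leader has `a` at position 6 and `m` at position 7. That rule is a general MARC 21 convention used here as an assumption; the fields the project actually filters on come from the dataset documentation. The sample records and helper names are made up.

```python
import json
import xml.etree.ElementTree as ET

MARC_URI = "http://www.loc.gov/MARC21/slim"  # standard MARCXML namespace
NS = {"marc": MARC_URI}


def subfield(record, tag, code):
    """Return the first value of subfield `code` in datafield `tag`, or None."""
    node = record.find(
        f"marc:datafield[@tag='{tag}']/marc:subfield[@code='{code}']", NS
    )
    return node.text.strip() if node is not None and node.text else None


def is_book(record):
    """Assumed rule: leader/06 is 'a' (language material), leader/07 is 'm'."""
    leader = record.findtext("marc:leader", default="", namespaces=NS)
    return len(leader) > 7 and leader[6] == "a" and leader[7] == "m"


def books_to_json(xml_text):
    """Keep only book records and serialise a few of their fields as JSON."""
    root = ET.fromstring(xml_text)
    books = [
        {
            "title": subfield(rec, "245", "a"),
            "author": subfield(rec, "100", "a"),
            "publisher": subfield(rec, "260", "b"),
            "isbn": subfield(rec, "020", "a"),
        }
        for rec in root.iter(f"{{{MARC_URI}}}record")
        if is_book(rec)
    ]
    return json.dumps(books, ensure_ascii=False)


# Made-up two-record sample: one book and one non-book item.
SAMPLE = """<collection xmlns="http://www.loc.gov/MARC21/slim">
  <record>
    <leader>00000nam a2200000 a 4500</leader>
    <datafield tag="100" ind1="1" ind2=" "><subfield code="a">Cervantes, Miguel de</subfield></datafield>
    <datafield tag="245" ind1="1" ind2="0"><subfield code="a">Don Quijote</subfield></datafield>
  </record>
  <record>
    <leader>00000ngm a2200000 a 4500</leader>
    <datafield tag="245" ind1="0" ind2="0"><subfield code="a">Some film</subfield></datafield>
  </record>
</collection>"""

books = json.loads(books_to_json(SAMPLE))
```

Only the first sample record passes the assumed leader check, so `books` holds a single entry; fields missing from a record come back as `None`.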

Once the data is downloaded and processed we need to obtain the data to be used for both fact and dimension tables.

For this process I'm using pandas to read the catalog dataset and the loans dataset. From the catalog we can obtain the data for the books and their locations in the libraries. Each library can hold many copies of each book, and each copy is identified by a unique code (prbarc).

In this same step we also get the data for each loan made in each library. Because this data covers loans of every type of item, we also need to filter on the attribute prcocs, which identifies the type of item; in our case this value should be LIB.
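
A minimal pandas sketch of this filter is shown below. The column names (prbarc, prcolp, prfpre, prcocs) come from the dataset; the sample rows, the comma separator and the function name `filter_book_loans` are assumptions for illustration only.

```python
import io

import pandas as pd

# Made-up rows; only the column names come from the dataset description.
SAMPLE_CSV = """prbarc,prcolp,prfpre,prcocs
1001,ADULT,2018-01-15,LIB
1002,CHILD,2018-01-16,DVD
1003,ADULT,2018-02-01,LIB
"""


def filter_book_loans(csv_source):
    """Read the loans CSV and keep only the loans whose item type is LIB."""
    loans = pd.read_csv(csv_source, parse_dates=["prfpre"])
    book_loans = loans[loans["prcocs"] == "LIB"]
    # Keep only the columns used by the loans table.
    return book_loans[["prbarc", "prcolp", "prfpre"]].reset_index(drop=True)


book_loans = filter_book_loans(io.StringIO(SAMPLE_CSV))
```

The same function accepts a file path instead of the in-memory sample, since `pd.read_csv` takes either.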

At this point all the data we need is in CSV files, and it has to be exported into the database. The first step is to load the fact tables:

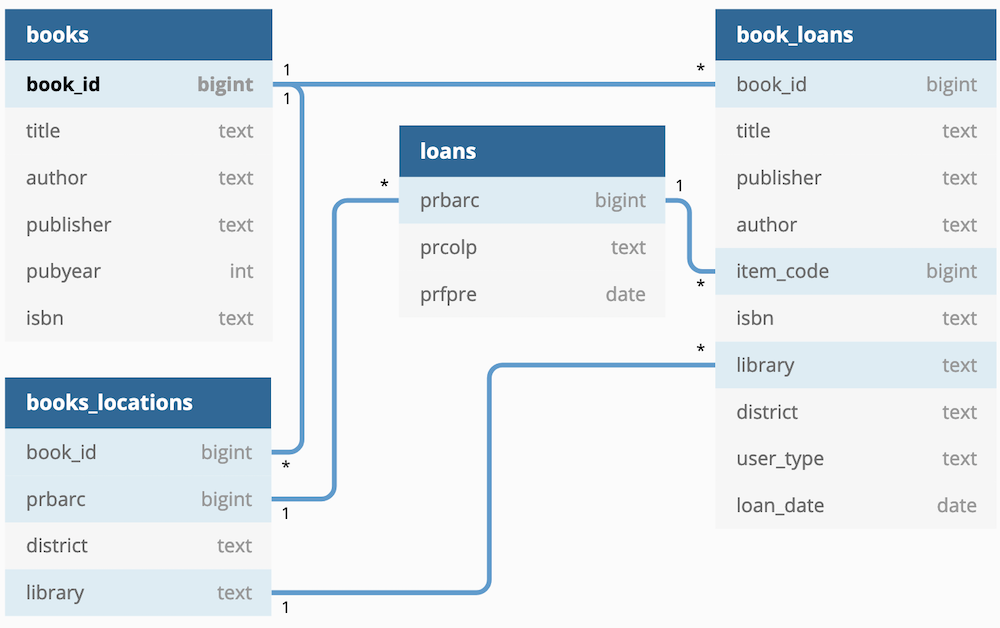

    CREATE TABLE books (
        book_id bigint PRIMARY KEY,
        title text,
        author text,
        publisher text,
        pubyear integer,
        isbn text
    );

    CREATE TABLE loans (
        prbarc bigint,
        prcolp text,
        prfpre date
    );

    CREATE TABLE books_locations (
        book_id bigint,
        district text,
        library text,
        prbarc bigint
    );

After the data is loaded into these tables, we are ready to read it back and store it in the dimensional table:

    CREATE TABLE book_loans (
        book_id bigint,
        title text,
        publisher text,
        author text,
        item_code bigint,
        isbn text,
        library text,
        district text,
        user_type text,
        loan_date date
    );
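
One way to populate book_loans from the three tables above is a pair of joins, sketched here. Several things are assumptions: SQLite (in memory) stands in for Postgres so the example runs anywhere, the sample rows are made up, and the column mapping (item_code from prbarc, user_type from prcolp, loan_date from prfpre) is inferred from the matching column types rather than taken from the project's SQL.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for the Postgres connection
conn.executescript("""
CREATE TABLE books (book_id INTEGER PRIMARY KEY, title TEXT, author TEXT,
                    publisher TEXT, pubyear INTEGER, isbn TEXT);
CREATE TABLE loans (prbarc INTEGER, prcolp TEXT, prfpre TEXT);
CREATE TABLE books_locations (book_id INTEGER, district TEXT, library TEXT,
                              prbarc INTEGER);
CREATE TABLE book_loans (book_id INTEGER, title TEXT, publisher TEXT,
                         author TEXT, item_code INTEGER, isbn TEXT,
                         library TEXT, district TEXT, user_type TEXT,
                         loan_date TEXT);
""")

# Made-up rows for illustration only.
conn.execute(
    "INSERT INTO books VALUES (1, 'Don Quijote', 'Cervantes', 'Pub', 2005, '000')"
)
conn.execute("INSERT INTO books_locations VALUES (1, 'Centro', 'Library A', 1001)")
conn.executemany(
    "INSERT INTO loans VALUES (?, ?, ?)",
    [(1001, "ADULT", "2018-01-15"), (9999, "ADULT", "2018-01-16")],
)

# Match each loan to its item (prbarc), then each item to its book (book_id).
conn.execute("""
INSERT INTO book_loans
SELECT b.book_id, b.title, b.publisher, b.author, l.prbarc, b.isbn,
       bl.library, bl.district, l.prcolp, l.prfpre
FROM loans AS l
JOIN books_locations AS bl ON bl.prbarc = l.prbarc
JOIN books AS b ON b.book_id = bl.book_id
""")
conn.commit()

rows = conn.execute(
    "SELECT title, item_code, library, loan_date FROM book_loans"
).fetchall()
```

Because these are inner joins, a loan whose item code has no entry in books_locations (the second sample loan) is left out of book_loans.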

Here is an ERD of the database structure:

The database used in this project is Postgres, but it should also work with Redshift.

First you need to complete the following steps:

- Install the required libraries:

      pip install -r requirements.txt

- Create the tables:

      psql -U $DB_USER -h $DB_HOST -W $DB < create_tables.sql

- Configure a Postgres connection in Airflow. The default connection name used in this project is capstone_db.

- Start the Airflow scheduler and webserver, then open the Admin page to trigger the DAG.

Here are some possible scenarios and how they can be addressed:

- The data was increased by 100x: In this case one recommended solution is to run a Spark cluster on Amazon EMR to hold the staging data, so that more data can be processed faster. Using Redshift is also recommended, so the ETL would read data from Spark and store it in a Redshift cluster. The Airflow DAG can handle these changes just by adding connections to the AWS infrastructure instead of using the local environment.

- The pipelines would be run on a daily basis by 7 am every day: Airflow can schedule the pipeline to run at any time, so in this case we could configure the DAG to be triggered at 7 am. It makes more sense for this pipeline to run monthly, though, because the Open Data portal updates the datasets each month with the newly obtained data.

- The database needed to be accessed by 100+ people: If we decided to use Redshift this wouldn't be a problem, because the AWS platform provides a highly scalable infrastructure that can be accessed by as many people as needed; we would just need to configure the Concurrency Scaling feature.
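
For the daily 7 am scenario above, the schedule could be expressed with a cron string, as in the sketch below. The DAG name is hypothetical, and the keyword `schedule_interval` is the name used by older Airflow releases (newer ones call it `schedule`), so treat the exact argument name as an assumption about the installed version.

```python
from datetime import datetime

# Cron expression: minute 0, hour 7, every day of every month.
DAILY_AT_7AM = "0 7 * * *"
# Airflow preset: once a month, at midnight on the first day of the month.
MONTHLY = "@monthly"

dag_kwargs = {
    "dag_id": "madrid_library_loans",  # hypothetical DAG name
    "start_date": datetime(2018, 1, 1),
    "schedule_interval": DAILY_AT_7AM,  # or MONTHLY, to follow the portal's updates
    "catchup": False,  # do not back-fill runs between start_date and today
}

# In the DAG file these would be passed on as: DAG(**dag_kwargs)
```

Switching to the monthly schedule is then a one-line change of the `schedule_interval` value.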

As mentioned at the start, this project only covers book loans for the year 2018. It could be extended to cover all the other types of items and to incorporate data from other years; the Open Data Portal of Madrid currently offers data from 2014 until the current date.

Incorporate more data related to each book using the ISBN field. With it we could obtain more information, such as price or reviews, which would add value to the processed data.

Add a visualisation tool to generate reports, graphs, etc., which would make it easier to obtain insights from the data. Here are the top 10 books on loan in 2018: