Code2Prompt is a powerful command-line tool that generates comprehensive prompts from codebases, designed to streamline interactions between developers and Large Language Models (LLMs) for code analysis, documentation, and improvement tasks.

- Why Code2Prompt?

- Features

- Installation

- Quick Start

- Usage

- Options

- Examples

- Templating System

- Integration with LLM CLI

- GitHub Actions Integration

- Configuration File

- Troubleshooting

- Contributing

- License

Code2Prompt is a powerful, open-source command-line tool that bridges the gap between your codebase and Large Language Models (LLMs). By converting your entire project into a comprehensive, AI-friendly prompt, Code2Prompt enables you to leverage the full potential of AI for code analysis, documentation, and improvement tasks.

- Holistic Codebase Representation: Generate a well-structured Markdown prompt that captures your entire project's essence.

- Intelligent Source Tree Generation: Create a clear, hierarchical view of your codebase structure.

- Customizable Prompt Templates: Tailor your output using Jinja2 templates to suit specific AI tasks.

- Smart Token Management: Count and optimize tokens to ensure compatibility with various LLM token limits.

- Gitignore Integration: Respect your project's .gitignore rules for accurate representation.

- Flexible File Handling: Filter and exclude files using powerful glob patterns.

- Clipboard Ready: Instantly copy generated prompts to your clipboard for quick AI interactions.

- Multiple Output Options: Save to file or display in the console.

- Enhanced Code Readability: Add line numbers to source code blocks for precise referencing.

- Contextual Understanding: Provide LLMs with a comprehensive view of your project for more accurate suggestions and analysis.

- Consistency Boost: Maintain coding style and conventions across your entire project.

- Efficient Refactoring: Enable better interdependency analysis and smarter refactoring recommendations.

- Improved Documentation: Generate contextually relevant documentation that truly reflects your codebase.

- Pattern Recognition: Help LLMs learn and apply your project-specific patterns and idioms.

Transform the way you interact with AI for software development. With Code2Prompt, harness the full power of your codebase in every AI conversation.

Ready to elevate your AI-assisted development? Let's dive in! 🏊‍♂️

Choose one of the following methods to install Code2Prompt:

Using pip:

pip install code2prompt

Using Poetry:

- Ensure you have Poetry installed:

  curl -sSL https://install.python-poetry.org | python3 -

- Install Code2Prompt:

  poetry add code2prompt

Using pipx:

pipx install code2prompt

- Generate a prompt from a single Python file:

  code2prompt --path /path/to/your/script.py

- Process an entire project directory and save the output:

  code2prompt --path /path/to/your/project --output project_summary.md

- Generate a prompt for multiple files, excluding tests:

  code2prompt --path /path/to/src --path /path/to/lib --exclude "*/tests/*" --output codebase_summary.md

The basic syntax for Code2Prompt is:

code2prompt --path /path/to/your/code [OPTIONS]

For multiple paths:

code2prompt --path /path/to/dir1 --path /path/to/file2.py [OPTIONS]

| Option | Short | Description |
|---|---|---|
| --path | -p | Path(s) to the directory or file to process (required, multiple allowed) |
| --output | -o | Name of the output Markdown file |
| --gitignore | -g | Path to the .gitignore file |
| --filter | -f | Comma-separated filter patterns to include files (e.g., ".py,.js") |
| --exclude | -e | Comma-separated patterns to exclude files (e.g., ".txt,.md") |
| --case-sensitive | | Perform case-sensitive pattern matching |
| --suppress-comments | -s | Strip comments from the code files |
| --line-number | -ln | Add line numbers to source code blocks |
| --no-codeblock | | Disable wrapping code inside markdown code blocks |
| --template | -t | Path to a Jinja2 template file for custom prompt generation |
| --tokens | | Display the token count of the generated prompt |
| --encoding | | Specify the tokenizer encoding to use (default: "cl100k_base") |
| --create-templates | | Create a templates directory with example templates |
| --version | -v | Show the version and exit |

The --filter and --exclude options allow you to specify patterns for files or directories that should be included in or excluded from processing, respectively.

--filter "PATTERN1,PATTERN2,..."

--exclude "PATTERN1,PATTERN2,..."

or

-f "PATTERN1,PATTERN2,..."

-e "PATTERN1,PATTERN2,..."

- Both options accept a comma-separated list of patterns.

- Patterns can include wildcards (*) and directory indicators (**).

- Matching is case-insensitive by default (use the --case-sensitive flag to change this behavior).

- --exclude patterns take precedence over --filter patterns.

- Include only Python files:

  --filter "**.py"

- Exclude all Markdown files:

  --exclude "**.md"

- Include specific file types in the src directory:

  --filter "src/**.{js,ts}"

- Exclude multiple file types and a specific directory:

  --exclude "**.log,**.tmp,**/node_modules/**"

- Include all files except those in 'test' directories:

  --filter "**" --exclude "**/test/**"

- Complex filtering (include JavaScript files, exclude minified and test files):

  --filter "**.js" --exclude "**.min.js,**test**.js"

- Include specific files across all directories:

  --filter "**/config.json,**/README.md"

- Exclude temporary files and directories:

  --exclude "**/.cache/**,**/tmp/**,**.tmp"

- Include source files but exclude build output:

  --filter "src/**/*.{js,ts}" --exclude "**/dist/**,**/build/**"

- Exclude version control and IDE-specific files:

  --exclude "**/.git/**,**/.vscode/**,**/.idea/**"

- Always use double quotes around patterns to prevent shell interpretation of special characters.

- Patterns are matched against the full path of each file, relative to the project root.

- The ** wildcard matches any number of directories.

- A single * matches any characters within a single directory or filename.

- Use commas to separate multiple patterns within the same option.

- Combine --filter and --exclude for fine-grained control over which files are processed.

- Start with broader patterns and refine as needed.

- Test your patterns on a small subset of your project first.

- Use the --case-sensitive flag if you need to distinguish between similarly named files with different cases.

- When working with complex projects, consider using a configuration file to manage your filter and exclude patterns.

By using the --filter and --exclude options effectively and safely (with proper quoting), you can precisely control which files are processed in your project, ensuring both accuracy and security in your command execution.
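The interaction between the two options can be sketched in a few lines of Python. This is an illustrative approximation, not Code2Prompt's actual implementation: the function names `split_patterns` and `should_process` are hypothetical, and it relies on the standard library's `fnmatch`, whose `*` also crosses directory separators and which does not understand brace patterns such as `{js,ts}`. It shows only the comma splitting, the optional case folding, and the rule that exclude patterns win over filter patterns.

```python
from fnmatch import fnmatchcase


def split_patterns(option_value):
    """Split a comma-separated option value into a list of patterns."""
    return [p.strip() for p in option_value.split(",") if p.strip()]


def should_process(path, filter_value="", exclude_value="", case_sensitive=False):
    """Decide whether `path` passes the filter and exclude patterns.

    Hypothetical helper: exclude patterns are checked first, so they take
    precedence over filter patterns. An empty filter includes everything.
    """
    includes = split_patterns(filter_value)
    excludes = split_patterns(exclude_value)

    if not case_sensitive:
        # Fold everything to lower case so matching ignores case.
        path = path.lower()
        includes = [p.lower() for p in includes]
        excludes = [p.lower() for p in excludes]

    if any(fnmatchcase(path, p) for p in excludes):
        return False
    if includes:
        return any(fnmatchcase(path, p) for p in includes)
    return True


if __name__ == "__main__":
    for candidate in ["src/app.py", "src/tests/test_app.py", "README.md"]:
        print(candidate, should_process(candidate, "**.py", "**/tests/**"))
```

Checking the exclude list first is what makes a file under a tests directory drop out even though it also matches the Python filter.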

-

Generate documentation for a Python library:

code2prompt --path /path/to/library --output library_docs.md --suppress-comments --line-number --filter "*.py" -

Prepare a codebase summary for a code review, focusing on JavaScript and TypeScript files:

code2prompt --path /path/to/project --filter "*.js,*.ts" --exclude "node_modules/*,dist/*" --template code_review.j2 --output code_review.md

-

Create input for an AI model to suggest improvements, focusing on a specific directory:

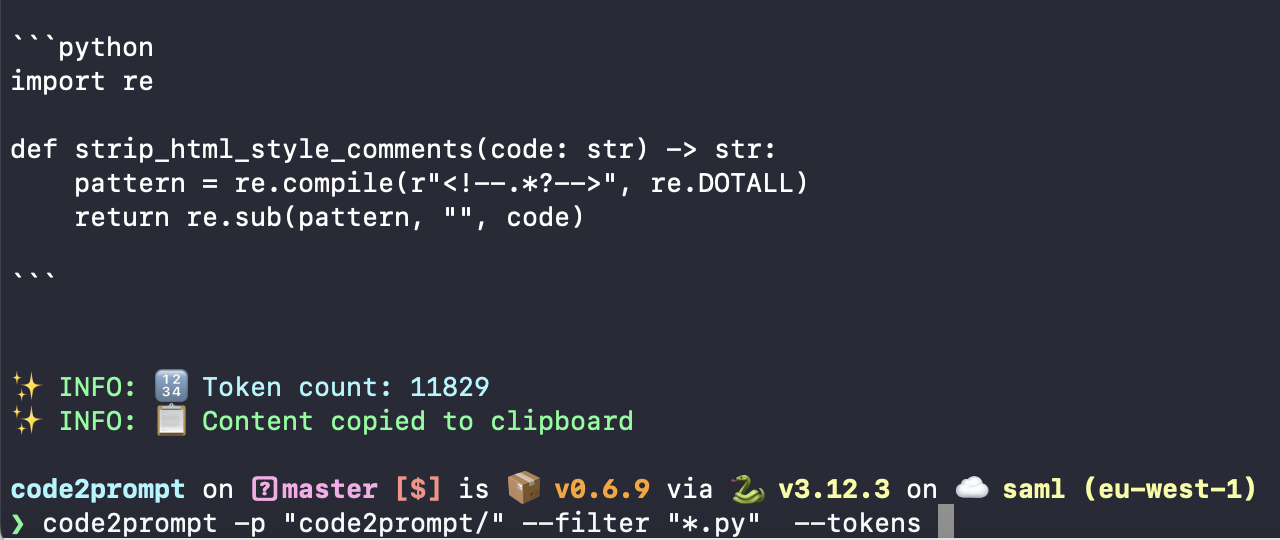

code2prompt --path /path/to/src/components --suppress-comments --tokens --encoding cl100k_base --output ai_input.md

-

Analyze comment density across a multi-language project:

code2prompt --path /path/to/project --template comment_density.j2 --output comment_analysis.md --filter "*.py,*.js,*.java" -

Generate a prompt for a specific set of files, adding line numbers:

code2prompt --path /path/to/important_file1.py --path /path/to/important_file2.js --line-number --output critical_files.md

Code2Prompt supports custom output formatting using Jinja2 templates. To use a custom template:

code2prompt --path /path/to/code --template /path/to/your/template.j2

Use the --create-templates option to generate example templates:

code2prompt --create-templates

This creates a templates directory with sample Jinja2 templates, including:

- default.j2: A general-purpose template

- analyze-code.j2: For detailed code analysis

- code-review.j2: For thorough code reviews

- create-readme.j2: To assist in generating README files

- improve-this-prompt.j2: For refining AI prompts

For full template documentation, see Documentation Templating.

Code2Prompt can be integrated with Simon Willison's llm CLI tool for enhanced code analysis.

Install both tools:

pip install code2prompt llm

- Generate a code summary and analyze it with an LLM:

  code2prompt --path /path/to/your/project | llm "Analyze this codebase and provide insights on its structure and potential improvements"

- Process a specific file and get refactoring suggestions:

  code2prompt --path /path/to/your/script.py | llm "Suggest refactoring improvements for this code"

For more advanced use cases, refer to the Integration with LLM CLI section in the full documentation.

You can integrate Code2Prompt into your GitHub Actions workflow. Here's an example:

    name: Code Analysis

    on: [push]

    jobs:
      analyze-code:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v4
          - name: Set up Python
            uses: actions/setup-python@v5
            with:
              python-version: '3.x'
          - name: Install dependencies
            run: |
              pip install code2prompt llm
          - name: Analyze codebase
            run: |
              code2prompt --path . | llm "Perform a comprehensive analysis of this codebase. Identify areas for improvement, potential bugs, and suggest optimizations." > analysis.md
          - name: Upload analysis
            uses: actions/upload-artifact@v4
            with:
              name: code-analysis
              path: analysis.md

Tokens are the basic units of text that language models process. They can be words, parts of words, or even punctuation marks. Different tokenizer encodings split text into tokens in different ways. Code2Prompt supports multiple encodings through its --encoding option, with "cl100k_base" as the default. This encoding, used by models like GPT-3.5 and GPT-4, handles code and technical content well. Other common encodings include "p50k_base" (used by Codex models and text-davinci-002/003) and "r50k_base" (used by earlier GPT-3 models such as davinci).

To count tokens in your generated prompt, use the --tokens flag:

code2prompt --path /your/project --tokens

For a specific encoding:

code2prompt --path /your/project --tokens --encoding p50k_base

Understanding token counts is crucial when working with AI models that have token limits, ensuring your prompts fit within the model's context window.
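As a rough illustration of why the count matters, the sketch below checks whether a prompt fits a context window while leaving room for the model's reply. It is not part of Code2Prompt: the helpers `count_tokens`, `fits_context`, and `whitespace_encode` are hypothetical, and the whitespace splitter is only a stand-in so the example runs with the standard library alone. Real encodings such as cl100k_base usually produce different counts.

```python
from typing import Callable, Sequence


def count_tokens(text: str, encode: Callable[[str], Sequence]) -> int:
    """Return the number of tokens that `encode` produces for `text`."""
    return len(encode(text))


def fits_context(text: str, encode: Callable[[str], Sequence],
                 context_limit: int, reserved_for_reply: int = 0) -> bool:
    """Check that the prompt plus a reserved reply budget fits the limit."""
    return count_tokens(text, encode) + reserved_for_reply <= context_limit


def whitespace_encode(text: str) -> list:
    """Stand-in tokenizer (assumption): one token per whitespace-separated chunk."""
    return text.split()


if __name__ == "__main__":
    prompt = "def add(a, b):\n    return a + b"
    print(count_tokens(prompt, whitespace_encode))
    print(fits_context(prompt, whitespace_encode, context_limit=10, reserved_for_reply=2))
```

With the tiktoken package installed, `tiktoken.get_encoding("cl100k_base").encode` could be passed as `encode` in place of the stand-in.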

Code2Prompt supports a .code2promptrc configuration file in JSON format for setting default options. Place this file in your project or home directory.

Example .code2promptrc:

    {
      "suppress_comments": true,
      "line_number": true,
      "encoding": "cl100k_base",
      "filter": "*.py,*.js",
      "exclude": "tests/*,docs/*"
    }
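One way to picture how such defaults might combine with command-line options is the sketch below. It is a guess at the general idea, not Code2Prompt's own loader: `load_rc` and `merge_options` are hypothetical names, and both the search order (first directory listed wins) and the rule that explicit command-line values override file defaults are assumptions.

```python
import json
from pathlib import Path


def load_rc(search_dirs, name=".code2promptrc"):
    """Return the settings from the first config file found, else an empty dict.

    Assumption: directories are searched in the order given, for example the
    project directory first and the home directory second.
    """
    for directory in search_dirs:
        candidate = Path(directory) / name
        if candidate.is_file():
            return json.loads(candidate.read_text(encoding="utf-8"))
    return {}


def merge_options(defaults, cli_options):
    """Overlay command-line options on file defaults.

    Assumption: a value of None means the option was not given on the
    command line, so the default from the file is kept.
    """
    merged = dict(defaults)
    merged.update({k: v for k, v in cli_options.items() if v is not None})
    return merged


if __name__ == "__main__":
    defaults = load_rc([Path.cwd(), Path.home()])
    print(merge_options(defaults, {"filter": "*.ts", "exclude": None}))
```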

- Issue: Code2Prompt is not recognizing my .gitignore file. Solution: Run Code2Prompt from the project root, or specify the .gitignore path with --gitignore.

- Issue: The generated output is too large for my AI model. Solution: Use --tokens to check the count, and refine the --filter or --exclude options.

- Issue: Some files are not being processed. Solution: Check for binary files or exclusion patterns. Use --case-sensitive if needed.

- Include system in template to promote re-usability of sub templates.

- Token counts for Anthropic models and other models such as Llama 3 or Mistral

- Cost estimates for the main LLM providers based on token count

- Integration with qllm (Quantalogic LLM)

Contributions to Code2Prompt are welcome! Please read our Contributing Guide for details on our code of conduct and the process for submitting pull requests.

Code2Prompt is released under the MIT License. See the LICENSE file for details.

⭐ If you find Code2Prompt useful, please give us a star on GitHub! It helps us reach more developers and improve the tool. ⭐

Made with ❤️ by Raphaël MANSUY