- 2024-1-13: 📃 We released a survey paper on using LLMs for NLG/text generation evaluation: "Leveraging Large Language Models for NLG Evaluation: A Survey". You are welcome to read and cite it. We look forward to your feedback and suggestions.

In the rapidly evolving domain of Natural Language Generation (NLG) evaluation, introducing Large Language Models (LLMs) has opened new avenues for assessing generated content quality, e.g., coherence, creativity, and context relevance. This survey aims to provide a thorough overview of leveraging LLMs for NLG evaluation, a burgeoning area that lacks a systematic analysis. We propose a coherent taxonomy for organizing existing LLM-based evaluation metrics, offering a structured framework to understand and compare these methods. Our detailed exploration includes critically assessing various LLM-based methodologies, as well as comparing their strengths and limitations in evaluating NLG outputs. By discussing unresolved challenges, including bias, robustness, domain-specificity, and unified evaluation, this survey seeks to offer insights to researchers and advocate for fairer and more advanced NLG evaluation techniques.

- Large Language Models Are State-of-the-Art Evaluators of Translation Quality [paper]

- LLM-Eval: Unified Multi-Dimensional Automatic Evaluation for Open-Domain Conversations with Large Language Models [paper]

- Evaluate What You Can't Evaluate: Unassessable Quality for Generated Response [paper]

- Is ChatGPT a Good NLG Evaluator? A Preliminary Study [paper]

- Multi-Dimensional Evaluation of Text Summarization with In-Context Learning [paper]

- BARTScore: Evaluating Generated Text as Text Generation [paper]

- GPTScore: Evaluate as You Desire [paper]

- Zero-shot Faithfulness Evaluation for Text Summarization with Foundation Language Model [paper]

- Large Language Models Are State-of-the-Art Evaluators of Translation Quality [paper]

- ChatGPT as a Factual Inconsistency Evaluator for Text Summarization [paper]

- Human-like Summarization Evaluation with ChatGPT [paper]

- Towards Better Evaluation of Instruction-Following: A Case-Study in Summarization [paper]

- Investigating Table-to-Text Generation Capabilities of LLMs in Real-World Information Seeking Scenarios [paper]

- Automatic Evaluation of Attribution by Large Language Models [paper]

- Exploring the Use of Large Language Models for Reference-Free Text Quality Evaluation: A Preliminary Empirical Study [paper]

- ChatGPT Outperforms Crowd-Workers for Text-Annotation Tasks [paper]

- Benchmarking Foundation Models with Language-Model-as-an-Examiner [paper]

- Is ChatGPT better than Human Annotators? Potential and Limitations of ChatGPT in Explaining Implicit Hate Speech [paper]

- Less is More for Long Document Summary Evaluation by LLMs [paper]

- Judging LLM-as-a-Judge with MT-Bench and Chatbot Arena [paper]

- Large Language Models Are State-of-the-Art Evaluators of Code Generation [paper]

- Evaluation Metrics in the Era of GPT-4: Reliably Evaluating Large Language Models on Sequence to Sequence Tasks [paper]

- Text Style Transfer Evaluation Using Large Language Models [paper]

- Calibrating LLM-Based Evaluator [paper]

- Can Large Language Models Be an Alternative to Human Evaluations? [paper]

- ChatGPT as a Factual Inconsistency Evaluator for Text Summarization [paper]

- Human-like Summarization Evaluation with ChatGPT [paper]

- Large Language Models are not Fair Evaluators [paper]

- Exploring ChatGPT's Ability to Rank Content: A Preliminary Study on Consistency with Human Preferences [paper]

- Judging LLM-as-a-Judge with MT-Bench and Chatbot Arena [paper]

- EvalLM: Interactive Evaluation of Large Language Model Prompts on User-Defined Criteria [paper]

- Benchmarking Foundation Models with Language-Model-as-an-Examiner [paper]

- Exploring the Use of Large Language Models for Reference-Free Text Quality Evaluation: A Preliminary Empirical Study [paper]

- Automated Evaluation of Personalized Text Generation using Large Language Models [paper]

- Large Language Models are Diverse Role-Players for Summarization Evaluation [paper]

- Wider and Deeper LLM Networks are Fairer LLM Evaluators [paper]

- ChatEval: Towards Better LLM-based Evaluators through Multi-Agent Debate [paper]

- PRD: Peer Rank and Discussion Improve Large Language Model based Evaluations [paper]

- Error Analysis Prompting Enables Human-Like Translation Evaluation in Large Language Models: A Case Study on ChatGPT [paper]

- G-Eval: NLG Evaluation using GPT-4 with Better Human Alignment [paper]

- FActScore: Fine-grained Atomic Evaluation of Factual Precision in Long Form Text Generation [paper]

- ALLURE: A Systematic Protocol for Auditing and Improving LLM-based Evaluation of Text using Iterative In-Context-Learning [paper]

- Not All Metrics Are Guilty: Improving NLG Evaluation with LLM Paraphrasing [paper]

- Automatic Machine Translation Evaluation in Many Languages via Zero-Shot Paraphrasing [paper]

- T5Score: Discriminative Fine-tuning of Generative Evaluation Metrics [paper]

- TrueTeacher: Learning Factual Consistency Evaluation with Large Language Models [paper]

- Learning Personalized Story Evaluation [paper]

- Automatic Evaluation of Attribution by Large Language Models [paper]

- Generative Judge for Evaluating Alignment [paper]

- Prometheus: Inducing Fine-grained Evaluation Capability in Language Models [paper]

- CritiqueLLM: Scaling LLM-as-Critic for Effective and Explainable Evaluation of Large Language Model Generation [paper]

- X-Eval: Generalizable Multi-aspect Text Evaluation via Augmented Instruction Tuning with Auxiliary Evaluation Aspects [paper]

- PandaLM: An Automatic Evaluation Benchmark for LLM Instruction Tuning Optimization [paper]

- Generative Judge for Evaluating Alignment [paper]

- Judging LLM-as-a-Judge with MT-Bench and Chatbot Arena [paper]

- Learning Personalized Story Evaluation [paper]

- Prometheus: Inducing Fine-grained Evaluation Capability in Language Models [paper]

- INSTRUCTSCORE: Towards Explainable Text Generation Evaluation with Automatic Feedback [paper]

- TIGERScore: Towards Building Explainable Metric for All Text Generation Tasks [paper]

- Experts, Errors, and Context: A Large-Scale Study of Human Evaluation for Machine Translation [paper]

- Results of the WMT20 Metrics Shared Task [paper]

- Results of the WMT21 Metrics Shared Task: Evaluating Metrics with Expert-based Human Evaluations on TED and News Domain [paper]

- Results of the WMT22 Metrics Shared Task: Stop Using BLEU -- Neural Metrics Are Better and More Robust [paper]

- Newsroom: A Dataset of 1.3 Million Summaries with Diverse Extractive Strategies [paper]

- SAMSum Corpus: A Human-annotated Dialogue Dataset for Abstractive Summarization [paper]

- Re-evaluating Evaluation in Text Summarization [paper]

- Asking and Answering Questions to Evaluate the Factual Consistency of Summaries [paper]

- Understanding Factuality in Abstractive Summarization with FRANK: A Benchmark for Factuality Metrics [paper]

- SummEval: Re-evaluating Summarization Evaluation [paper]

- SummaC: Re-Visiting NLI-based Models for Inconsistency Detection in Summarization [paper]

- RiSum: Towards Better Evaluation of Instruction-Following: A Case-Study in Summarization [paper]

- OpinSummEval: Revisiting Automated Evaluation for Opinion Summarization [paper]

- Unsupervised Evaluation of Interactive Dialog with DialoGPT [paper]

- Topical-Chat: Towards Knowledge-Grounded Open-Domain Conversations [paper]

- Personalizing Dialogue Agents: I have a dog, do you have pets too? [paper]

- Framing Image Description as a Ranking Task: Data, Models and Evaluation Metrics [paper]

- From Images to Sentences Through Scene Description Graphs Using Commonsense Reasoning and Knowledge [paper]

- CIDEr: Consensus-based Image Description Evaluation [paper]

- Learning to Evaluate Image Captioning [paper]

- Phrase-Based & Neural Unsupervised Machine Translation [paper]

- Semantically Conditioned LSTM-based Natural Language Generation for Spoken Dialogue Systems [paper]

- The 2020 Bilingual, Bi-Directional WebNLG+ Shared Task: Overview and Evaluation Results (WebNLG+ 2020) [paper]

- OpenMEVA: A Benchmark for Evaluating Open-Ended Story Generation Metrics [paper]

- StoryER: Automatic Story Evaluation via Ranking, Rating and Reasoning [paper]

- Learning Personalized Story Evaluation [paper]

- AlpacaEval: An Automatic Evaluator of Instruction-following Models [GitHub]

- Judging LLM-as-a-Judge with MT-Bench and Chatbot Arena [paper]

- Large Language Models are not Fair Evaluators [paper]

- Shepherd: A Critic for Language Model Generation [paper]

- Evaluating Large Language Models at Evaluating Instruction Following [paper]

- Wider and Deeper LLM Networks are Fairer LLM Evaluators [paper]

- Automatic Evaluation of Attribution by Large Language Models [paper]

- AlignBench: Benchmarking Chinese Alignment of Large Language Models [paper]

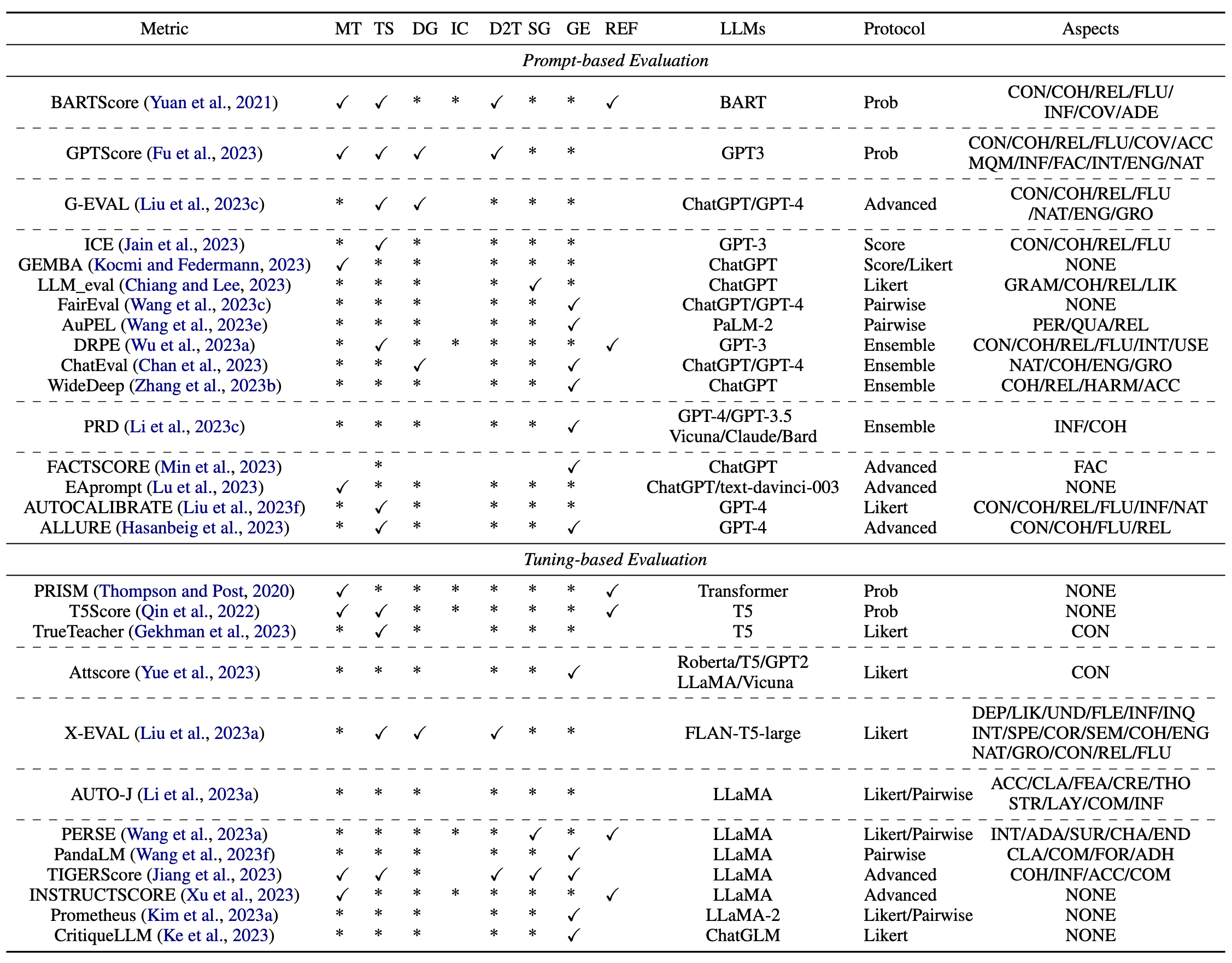

Automatic metrics proposed (✓) and adopted (*) for various NLG tasks. REF indicates that the method is source context-free. MT: Machine Translation, TS: Text Summarization, DG: Dialogue Generation, IC: Image Captioning, D2T: Data-to-Text, SG: Story Generation, GE: General Generation. We adopted the evaluation aspects for different tasks from Fu et al. (2023). Specifically, for each evaluation aspect, CON: consistency, COH: coherence, REL: relevance, FLU: fluency, INF: informativeness, COV: semantic coverage, ADE: adequacy, NAT: naturalness, ENG: engagement, GRO: groundedness, GRAM: grammaticality, LIK: likability, PER: personalization, QUA: quality, INT: interest, USE: usefulness, HARM: harmlessness, ACC: accuracy, FAC: factuality, ADA: adaptability, SUR: surprise, CHA: character, END: ending, FEA: feasibility, CRE: creativity, THO: thoroughness, STR: structure, LAY: layout, CLA: clarity, COM: comprehensiveness, FPR: formality, ADH: adherence, DEP: topic depth, UND: understandability, FLE: flexibility, INQ: inquisitiveness, SPE: specificity, COR: correctness, SEM: semantic appropriateness. NONE means that the method does not specify any aspects and gives an overall evaluation. A detailed explanation of each evaluation aspect can be found in Fu et al. (2023).

If you find this survey helpful, please cite our paper:

@misc{li2024leveraging,
      title={Leveraging Large Language Models for NLG Evaluation: A Survey},
      author={Zhen Li and Xiaohan Xu and Tao Shen and Can Xu and Jia-Chen Gu and Chongyang Tao},
      year={2024},
      eprint={2401.07103},
      archivePrefix={arXiv},
      primaryClass={cs.CL}
}