Split-Attention Network, A New ResNet Variant. It significantly boosts the performance of downstream models such as Mask R-CNN, Cascade R-CNN and DeepLabV3.

- Install this package; you only need to choose one of the options below.

# using github url

pip install git+https://github.com/zhanghang1989/ResNeSt

# using pypi

pip install resnest --pre| crop size | PyTorch | Gluon | |

|---|---|---|---|

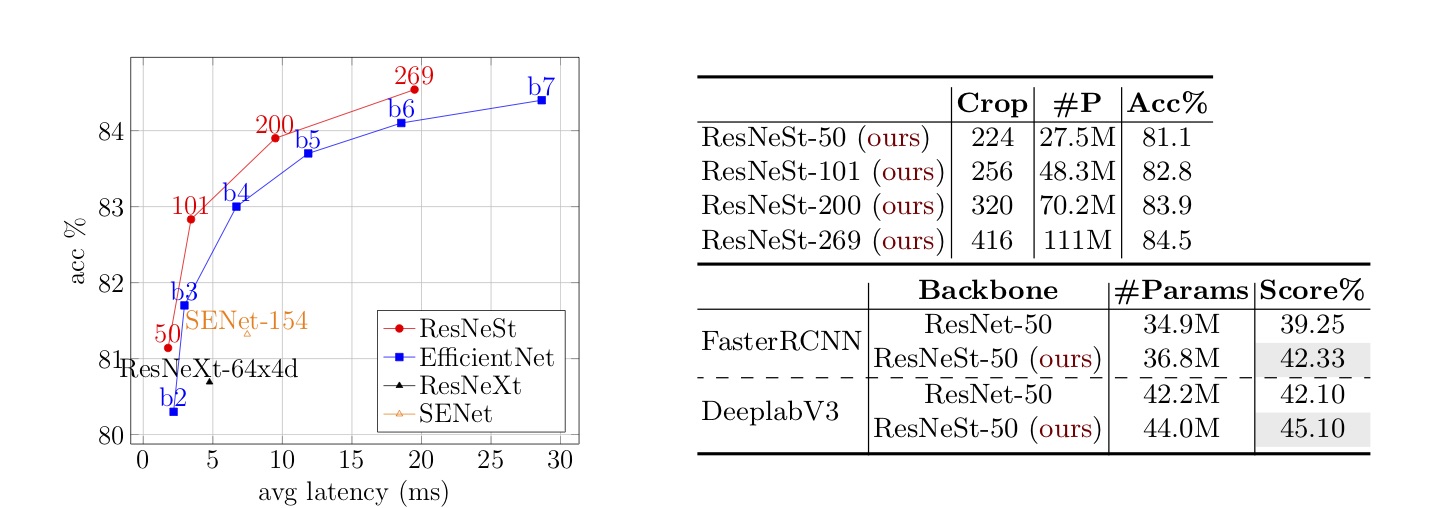

| ResNeSt-50 | 224 | 81.03 | 81.04 |

| ResNeSt-101 | 256 | 82.83 | 82.81 |

| ResNeSt-200 | 320 | 83.84 | 83.88 |

| ResNeSt-269 | 416 | 84.54 | 84.53 |
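Each pretrained model is evaluated at its own crop size, so the `--crop-size` flag passed to `verify.py` (shown later in this README for `resnest50`) has to match the model. The following is a minimal sketch of that pairing, not part of the package: `CROP_SIZES` and `verify_command` are hypothetical helper names, and the identifiers `resnest101`, `resnest200` and `resnest269` are assumed to follow the same naming pattern as `resnest50`.

```python
from typing import List

# Crop sizes copied from the table above. Only "resnest50" appears as a
# model identifier in this README; the other three names are assumptions.
CROP_SIZES = {
    "resnest50": 224,
    "resnest101": 256,
    "resnest200": 320,
    "resnest269": 416,
}


def verify_command(model: str) -> List[str]:
    """Build the argument list for verify.py (the command is not executed here)."""
    if model not in CROP_SIZES:
        raise KeyError(f"unknown model: {model!r}")
    return ["python", "verify.py", "--model", model,
            "--crop-size", str(CROP_SIZES[model])]


if __name__ == "__main__":
    # Print one verification command per model.
    for name in CROP_SIZES:
        print(" ".join(verify_command(name)))
```

Returning a list of arguments rather than a single string means the result can be handed to `subprocess.run` directly, without shell quoting.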

- 3rd party Tensorflow implementation is available at link.

- Extra ablation study models are available in link

- Load using Torch Hub

import torch

# get list of models

torch.hub.list('zhanghang1989/ResNeSt', force_reload=True)

# load pretrained models, using ResNeSt-50 as an example

net = torch.hub.load('zhanghang1989/ResNeSt', 'resnest50', pretrained=True)

- Load using python package

# using ResNeSt-50 as an example

from resnest.torch import resnest50

net = resnest50(pretrained=True)

- Load pretrained model:

# using ResNeSt-50 as an example

from resnest.gluon import resnest50

net = resnest50(pretrained=True)

Training code and pretrained models are released at our Detectron2 Fork.

| Method | Backbone | mAP% |
|---|---|---|
| Faster R-CNN | ResNet-50 | 39.25 |
| Faster R-CNN | ResNet-101 | 41.37 |
| Faster R-CNN | ResNeSt-50 (ours) | 42.33 |
| Faster R-CNN | ResNeSt-101 (ours) | 44.72 |
| Cascade R-CNN | ResNet-50 | 42.52 |
| Cascade R-CNN | ResNet-101 | 44.03 |
| Cascade R-CNN | ResNeSt-50 (ours) | 45.41 |
| Cascade R-CNN | ResNeSt-101 (ours) | 47.50 |
| Cascade R-CNN | ResNeSt-200 (ours) | 49.03 |
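To make the backbone comparison concrete, the sketch below computes the absolute mAP gain of ResNeSt over the ResNet baseline of the same depth, using only the numbers in the table above. The names `RESULTS` and `map_gain` are hypothetical and exist only for this illustration.

```python
# mAP% values copied from the object detection table above, stored as
# (ResNet baseline, ResNeSt) pairs keyed by detector and backbone depth.
RESULTS = {
    ("Faster R-CNN", 50): (39.25, 42.33),
    ("Faster R-CNN", 101): (41.37, 44.72),
    ("Cascade R-CNN", 50): (42.52, 45.41),
    ("Cascade R-CNN", 101): (44.03, 47.50),
}


def map_gain(method: str, depth: int) -> float:
    """Return the absolute mAP gain (percentage points) of ResNeSt over ResNet."""
    baseline, ours = RESULTS[(method, depth)]
    return round(ours - baseline, 2)


if __name__ == "__main__":
    for (method, depth) in RESULTS:
        print(f"{method}, depth {depth}: +{map_gain(method, depth):.2f} mAP")
```

For these four pairs the gain ranges from 2.89 to 3.47 mAP points. ResNeSt-200 is left out because the table has no ResNet-200 baseline to compare against.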

| Method | Backbone | bbox | mask |
|---|---|---|---|
| Mask R-CNN | ResNet-50 | 39.97 | 36.05 |
| Mask R-CNN | ResNet-101 | 41.78 | 37.51 |
| Mask R-CNN | ResNeSt-50 (ours) | 42.81 | 38.14 |
| Mask R-CNN | ResNeSt-101 (ours) | 45.75 | 40.65 |
| Cascade R-CNN | ResNet-50 | 43.06 | 37.19 |
| Cascade R-CNN | ResNet-101 | 44.79 | 38.52 |
| Cascade R-CNN | ResNeSt-50 (ours) | 46.19 | 39.55 |
| Cascade R-CNN | ResNeSt-101 (ours) | 48.30 | 41.56 |
| Cascade R-CNN | ResNeSt-200 (w/ tricks ours) | 50.54 | 44.21 |
| Cascade R-CNN | ResNeSt-200-dcn (w/ tricks ours) | 50.91 | 44.50 |
| Cascade R-CNN | ResNeSt-200-dcn (w/ tricks ours) | 53.30* | 47.10* |

All results are reported on the COCO-2017 validation dataset. Values marked with * show the multi-scale testing performance on test-dev2019.

| Backbone | bbox | mask | PQ |
|---|---|---|---|
| ResNeSt-200 | 51.00 | 43.68 | 47.90 |

- PyTorch models and training: Please visit PyTorch Encoding Toolkit.

- Training with Gluon: Please visit GluonCV Toolkit.

| Method | Backbone | pixAcc% | mIoU% |
|---|---|---|---|
| Deeplab-V3 | ResNet-50 | 80.39 | 42.1 |
| Deeplab-V3 | ResNet-101 | 81.11 | 44.14 |
| Deeplab-V3 | ResNeSt-50 (ours) | 81.17 | 45.12 |
| Deeplab-V3 | ResNeSt-101 (ours) | 82.07 | 46.91 |
| Deeplab-V3 | ResNeSt-200 (ours) | 82.45 | 48.36 |
| Deeplab-V3 | ResNeSt-269 (ours) | 82.62 | 47.60 |

| Method | Backbone | Split | w Mapillary | mIoU% |
|---|---|---|---|---|
| Deeplab-V3+ | ResNeSt-200 (ours) | Validation | no | 82.7 |
| Deeplab-V3+ | ResNeSt-200 (ours) | Validation | yes | 83.8 |
| Deeplab-V3+ | ResNeSt-200 (ours) | Test | yes | 83.3 |

Note: the inference speeds reported in the paper were measured using the Gluon implementation with RecordIO data.

Here we use the raw image data format for simplicity; please follow the GluonCV tutorial if you would like to use the RecordIO format.

cd scripts/dataset/

# assuming you have downloaded the dataset in the current folder

python prepare_imagenet.py --download-dir ./

# use resnest50 as an example

cd scripts/torch/

python verify.py --model resnest50 --crop-size 224

# use resnest50 as an example

cd scripts/gluon/

python verify.py --model resnest50 --crop-size 224

- Training with MXNet Gluon: Please visit Gluon folder.

- Training with PyTorch: Please visit PyTorch Encoding Toolkit (slightly worse than Gluon implementation).

For object detection and instance segmentation models, please visit our detectron2-ResNeSt fork.

- Training with PyTorch: Encoding Toolkit.

- Training with MXNet: GluonCV Toolkit.

ResNeSt: Split-Attention Networks [arXiv]

Hang Zhang, Chongruo Wu, Zhongyue Zhang, Yi Zhu, Zhi Zhang, Haibin Lin, Yue Sun, Tong He, Jonas Muller, R. Manmatha, Mu Li and Alex Smola

@article{zhang2020resnest,
  title={ResNeSt: Split-Attention Networks},
  author={Zhang, Hang and Wu, Chongruo and Zhang, Zhongyue and Zhu, Yi and Zhang, Zhi and Lin, Haibin and Sun, Yue and He, Tong and Muller, Jonas and Manmatha, R. and Li, Mu and Smola, Alexander},
  journal={arXiv preprint arXiv:2004.08955},
  year={2020}
}

- ResNeSt Backbone (Hang Zhang)

- Detectron Models (Chongruo Wu, Zhongyue Zhang)

- Semantic Segmentation (Yi Zhu)

- Distributed Training (Haibin Lin)