
This repository provides the official PyTorch implementation of DMA-Net from the following paper:

Deep mutual attention network for acoustic scene classification. Digital Signal Processing, 2022.

Wei Xie, Qianhua He, Zitong Yu, Yanxiong Li

South China University of Technology, Guangzhou, China

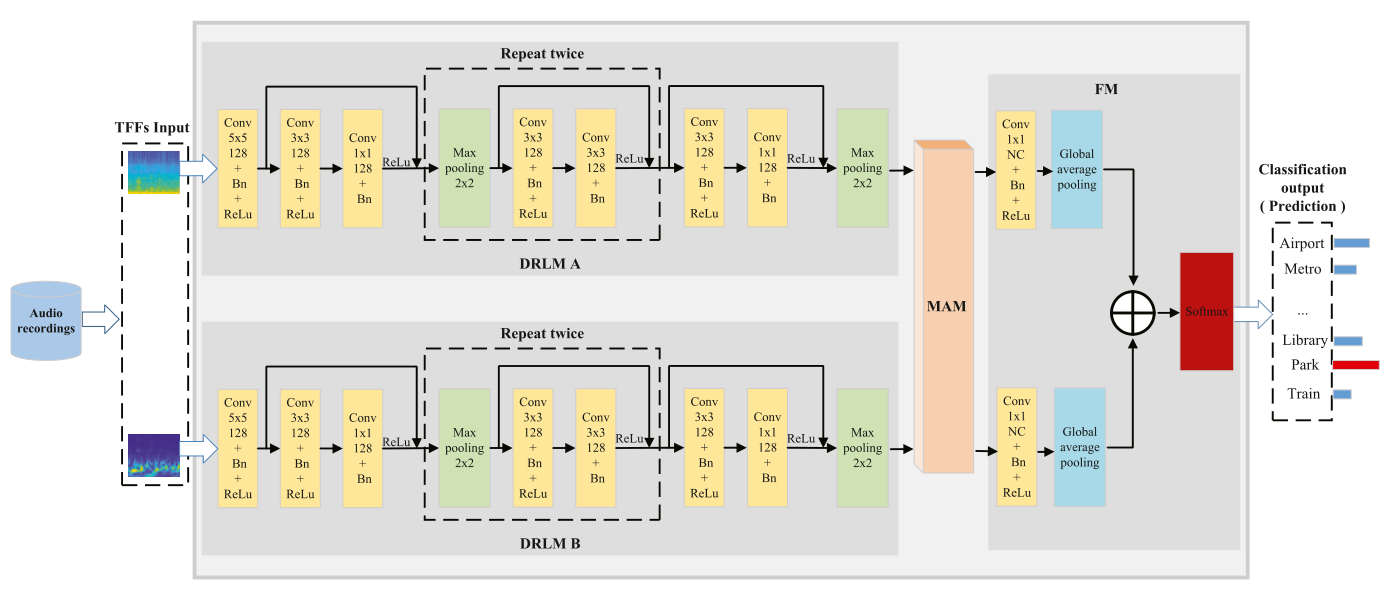

Figure 1: Schematic diagram of the proposed deep mutual attention network.

For simplicity, this repository provides instructions for processing the DCASE2018-1a dataset with both PyTorch and MATLAB. It can be adapted to other datasets by changing only the dataset path and label information.
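As a hypothetical sketch of what "dataset path and label information" might look like in practice, the Python snippet below bundles a dataset root with an ordered list of class labels and derives integer class ids. `DatasetConfig`, `DCASE2018_SCENES`, and the path are illustrative names, not the repository's actual configuration. The ten labels are the DCASE2018 Task 1 scene classes, but the repository may order or spell them differently.

```python
from dataclasses import dataclass
from pathlib import Path

# Hypothetical label list: the ten DCASE2018 Task 1 acoustic scene classes.
# The repository's own label file may use a different order or spelling.
DCASE2018_SCENES = (
    "airport", "bus", "metro", "metro_station", "park",
    "public_square", "shopping_mall", "street_pedestrian",
    "street_traffic", "tram",
)


@dataclass(frozen=True)
class DatasetConfig:
    """Hypothetical bundle of the per-dataset settings: data root and labels."""
    root: Path
    labels: tuple[str, ...]

    @property
    def num_classes(self) -> int:
        return len(self.labels)

    def label_to_index(self) -> dict[str, int]:
        # Assign each label a contiguous integer class id in list order.
        return {name: idx for idx, name in enumerate(self.labels)}


# Swapping in another dataset means changing only these two arguments.
cfg = DatasetConfig(root=Path("path/to/DCASE2018-1a"), labels=DCASE2018_SCENES)
print(cfg.num_classes)              # 10
print(cfg.label_to_index()["bus"])  # 1
```

Keeping the labels in one ordered tuple makes the label-to-index mapping deterministic, which matters when a trained model's output units must line up with class names.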

The dataset can be downloaded from here.

The dataset contains 10 acoustic scene classes recorded with devices A, B, and C. The data statistics are shown below:

| Subtask | Attributes | Dev. | Test |
|---|---|---|---|
| Subtask A | Binaural, 48 kHz, 24 bit | Device A: 8640 | 1200 |
| Subtask B | Mono, 44.1 kHz | Device A: 8640, Device B: 720, Device C: 720 | 2400 |
| Subtask C | - | Any data | 1200 |

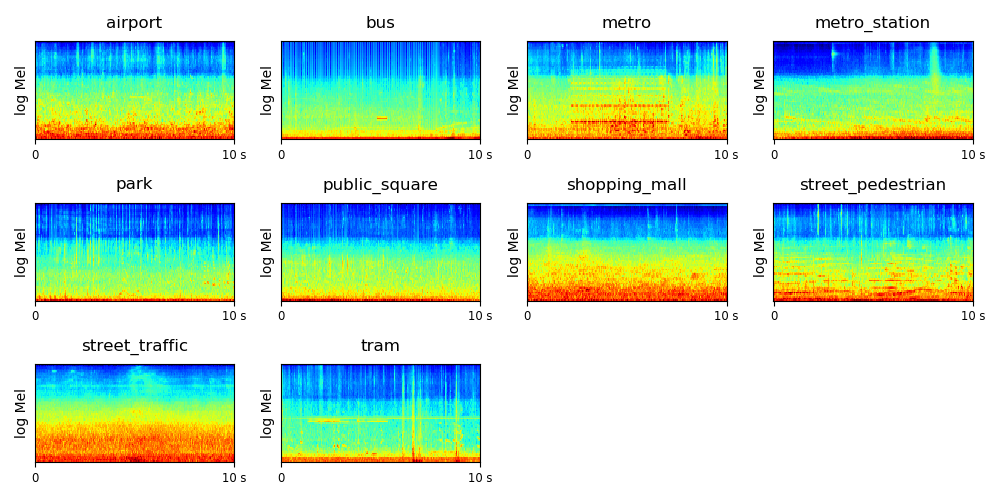

The log mel spectrograms of the scenes are shown above.
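For readers unfamiliar with the mel scale, the sketch below shows one common (HTK-style) mel conversion, how equally spaced mel band edges map back to Hz, and the log compression step applied to band energies. The feature extraction for this repository appears to be done in MATLAB and its parameters are not stated here; the 128 bands and the 24 kHz upper limit (the Nyquist frequency of the 48 kHz Subtask A audio) are assumptions for illustration only. The Slaney mel formula is another common variant and gives different band edges.

```python
import math


def hz_to_mel(f_hz: float) -> float:
    """HTK-style mel scale: m = 2595 * log10(1 + f / 700)."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


def mel_to_hz(m: float) -> float:
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_band_edges(n_mels: int, f_min: float, f_max: float) -> list[float]:
    """Return n_mels + 2 frequencies (Hz) equally spaced on the mel scale.

    Triangular filter k rises from edges[k], peaks at edges[k + 1],
    and falls back to zero at edges[k + 2].
    """
    m_lo, m_hi = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (m_hi - m_lo) / (n_mels + 1)
    return [mel_to_hz(m_lo + i * step) for i in range(n_mels + 2)]


def log_compress(band_energies: list[float], eps: float = 1e-10) -> list[float]:
    """Convert mel band energies to dB; eps avoids log10(0) for silent bands."""
    return [10.0 * math.log10(e + eps) for e in band_energies]


# Assumed, illustrative parameters: 128 bands up to 24 kHz (Nyquist at 48 kHz).
edges = mel_band_edges(n_mels=128, f_min=0.0, f_max=24000.0)
print(len(edges))  # 130
```

Because the bands are equally spaced in mel rather than in Hz, low frequencies get narrow filters and high frequencies get wide ones, which is why the spectrograms look compressed toward the top.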

Conda should be installed on the system.

- Install Anaconda or conda.
- Run the dependency installation script: `conda env create -f environment.yml`. This creates a conda environment named `DCASE` with all the dependencies.

Please follow the instructions here to prepare the TFFs of all the audio files.

`python matlab_feature_conversion.py --project_dir <path to DMA-Net>`

`python DatasetsManager.py --project_dir <path to DMA-Net>`

`python main.py --project_dir <path to DMA-Net>`
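The three commands above can also be chained from Python. The sketch below assumes each script sits in the repository root and accepts `--project_dir` exactly as shown; `build_commands` and `run_pipeline` are hypothetical helpers, not part of the repository.

```python
import subprocess
import sys
from pathlib import Path

# Script order taken from the README steps; each one takes --project_dir.
PIPELINE = (
    "matlab_feature_conversion.py",
    "DatasetsManager.py",
    "main.py",
)


def build_commands(project_dir: str, python: str = sys.executable) -> list[list[str]]:
    """Return one argv list per step, in the order they must run."""
    root = str(Path(project_dir))
    return [[python, script, "--project_dir", root] for script in PIPELINE]


def run_pipeline(project_dir: str) -> None:
    """Run every step in order; check=True raises CalledProcessError on failure.

    Assumption: the scripts live in the repository root, so it is used as cwd.
    """
    for argv in build_commands(project_dir):
        print("Running:", " ".join(argv))
        subprocess.run(argv, check=True, cwd=project_dir)
```

For example, `run_pipeline("/path/to/DMA-Net")` would run the three steps in order and stop at the first one that exits with a non-zero status. Passing an argv list instead of a shell string avoids quoting problems when the project path contains spaces.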

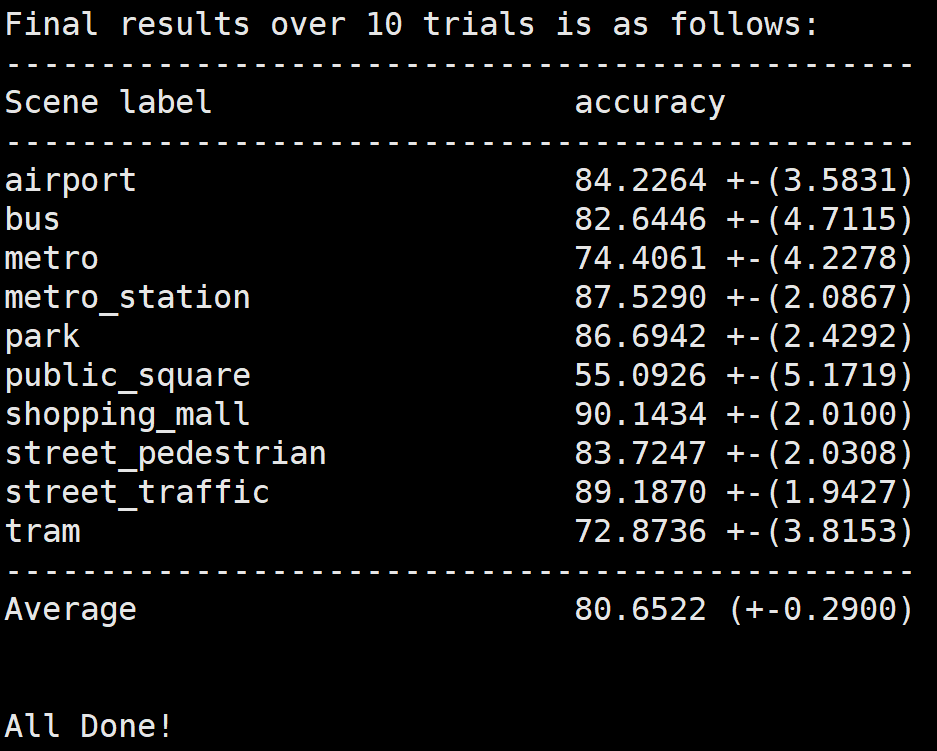

By default, the script runs 10 trials and aggregates the final results for statistical analysis.
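The README does not specify which statistics `main.py` reports across the 10 trials. A common choice is the mean and sample standard deviation of per-trial accuracy; the hypothetical `summarize_trials` and `format_summary` helpers below sketch that under this assumption, using only the standard library.

```python
import statistics


def summarize_trials(accuracies: list[float]) -> dict[str, float]:
    """Summarize per-trial accuracies as mean, sample std, min, and max.

    Assumption: one accuracy value in [0, 1] per trial (e.g. 10 values for 10 trials).
    """
    if len(accuracies) < 2:
        raise ValueError("need at least two trials to estimate a standard deviation")
    return {
        "mean": statistics.mean(accuracies),
        "std": statistics.stdev(accuracies),  # sample (n - 1) standard deviation
        "min": min(accuracies),
        "max": max(accuracies),
    }


def format_summary(summary: dict[str, float]) -> str:
    """Render as 'mean ± std' in percent, a common way to report repeated trials."""
    return f"{100 * summary['mean']:.2f} ± {100 * summary['std']:.2f} %"
```

The sample standard deviation (`statistics.stdev`) is used rather than the population version because the 10 trials are treated as a sample of possible training runs.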

You should obtain results similar to the following; exact values may vary with your software and hardware configuration.

final_results

Our project references code from the following repositories.

If you find this repository helpful, please consider citing:

    @article{xie2022deep,
      title={Deep mutual attention network for acoustic scene classification},
      author={Xie, Wei and He, Qianhua and Yu, Zitong and Li, Yanxiong},
      journal={Digital Signal Processing},
      volume={123},
      pages={103450},
      year={2022},
      publisher={Elsevier}
    }