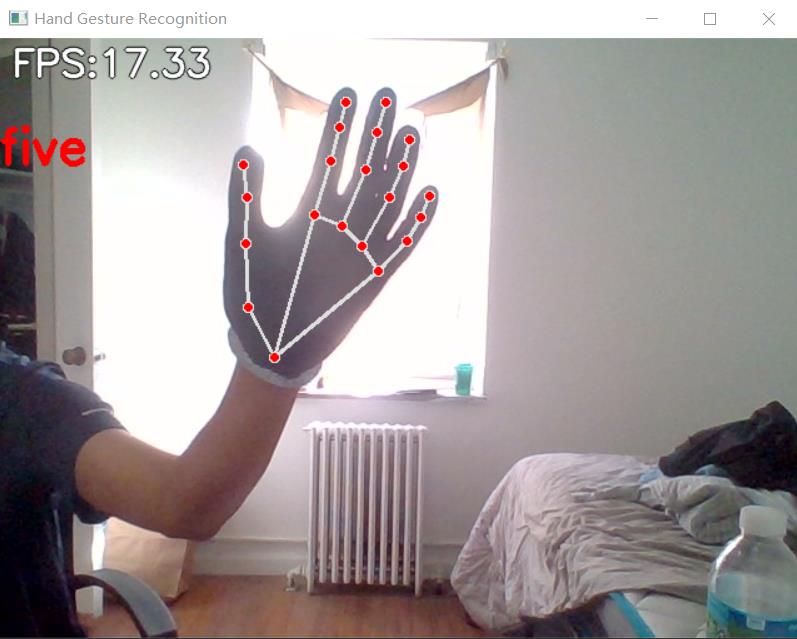

This project performs real-time, first-person-view hand gesture recognition. It recognizes both static gestures (e.g. thumb up) and dynamic hand gestures (e.g. checkmark, clockwise).

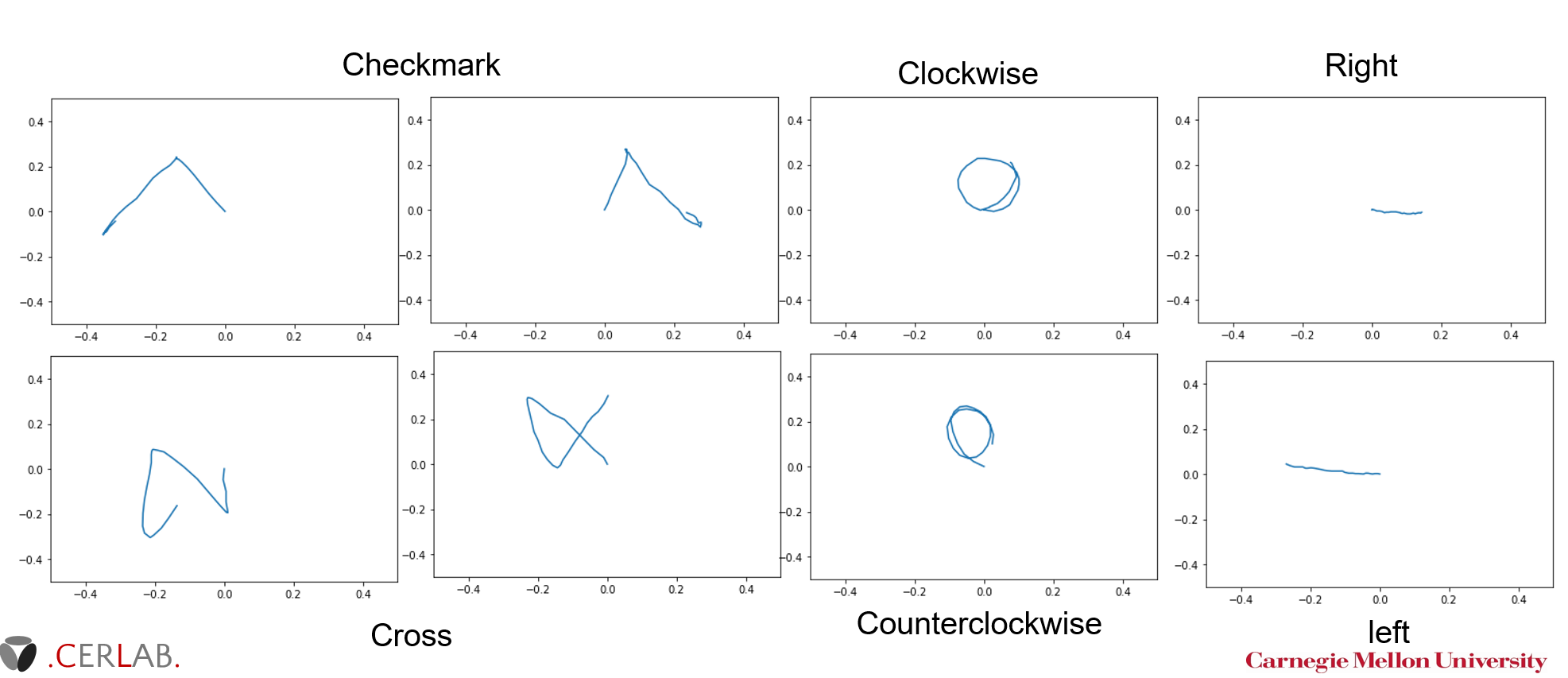

There are seven dynamic gesture classes. A dynamic gesture is one that requires multiple video frames to classify:

- clockwise

- counterclockwise

- checkmark

- cross

- right

- left

- none (other than the above six)

We show an example of the trajectories of the six dynamic gestures.
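Because a dynamic gesture spans several frames, the classifier needs a short history of fingertip positions rather than a single frame. The following is a minimal sketch of such a buffer. The class name `PointHistory`, the history length, and the (x, y) point format are illustrative assumptions, not the values used in this project.

```python
from collections import deque


class PointHistory:
    """Keep the most recent fingertip positions for a dynamic gesture.

    Hypothetical helper for illustration; the history length is an assumption.
    """

    def __init__(self, max_len=16):
        # A bounded deque silently drops the oldest point once it is full.
        self.points = deque(maxlen=max_len)

    def add(self, x, y):
        self.points.append((x, y))

    def is_full(self):
        return len(self.points) == self.points.maxlen

    def as_list(self):
        return list(self.points)


history = PointHistory(max_len=4)
for frame_index in range(6):
    # Stand-in for the fingertip position detected in each video frame.
    history.add(frame_index * 10, frame_index * 5)

print(history.is_full())
print(history.as_list())
```

Only the last four points survive in this run, which is the behavior a fixed-length trajectory input would need.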

You can customize your own gestures.

There are multiple static poses to recognize. Static poses differ from dynamic gestures in that each pose is classified from a single image.

- Number counting (1 - 9)

- Thumb up & thumb down

- Yeah

- Gun

- Point

- ...

To get a local copy up and running, follow these steps.

Python 3 is required. You are welcome to install the latest Python version.

Install the following Python libraries:

pip install tensorflow numpy mediapipe scikit-learn opencv-python pandas seaborn

mediapipe == 0.8.11

opencv-python == 4.6.0.66

We recommend installing CUDA to accelerate the training process. The versions of CUDA and cuDNN should match the TensorFlow version you installed.
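To confirm that the pinned versions above are the ones actually installed, a small check along these lines may help. This is a sketch using only the standard library; the helper name `check_pinned` is hypothetical and not part of this repository.

```python
from importlib import metadata

# Versions pinned in this README.
PINNED = {"mediapipe": "0.8.11", "opencv-python": "4.6.0.66"}


def check_pinned(pinned):
    """Return {package: (wanted, installed_or_None)} for each pinned package."""
    report = {}
    for name, wanted in pinned.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None  # the package is not installed in this environment
        report[name] = (wanted, installed)
    return report


for name, (wanted, installed) in check_pinned(PINNED).items():
    status = "ok" if installed == wanted else "mismatch"
    print(f"{name}: wanted {wanted}, found {installed} ({status})")
```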

Using your camera

python3 app.py

Using a recorded video

python3 app.py --video <path-to-video>

Using the glove preprocessor

python3 app.py --glove --lowA <glove's low bound of A in LAB space> --lowB <glove's low bound of B in LAB space> --highA <glove's high bound of A in LAB space> --highB <glove's high bound of B in LAB space>

When using the glove preprocessor, you can turn on the show_color_mask mode to display the current color mask.

python3 app.py --glove --show_color_mask --lowA <glove's low bound of A in LAB space> --lowB <glove's low bound of B in LAB space> --highA <glove's high bound of A in LAB space> --highB <glove's high bound of B in LAB space>

An example:

python3 app.py --glove --show_color_mask --lowA 130 --lowB 120 --highA 145 --highB 130
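For readers who want to see how these flags fit together, here is a hedged sketch of an `argparse` parser that accepts the options shown above. It is not the parser from app.py: the function name `build_parser`, the help strings, and the default bounds (copied from the example command) are assumptions.

```python
import argparse


def build_parser():
    """Hypothetical parser covering the flags shown in this README."""
    parser = argparse.ArgumentParser(description="Hand gesture recognition demo")
    parser.add_argument("--video", default=None,
                        help="path to a recorded video; the camera is used if omitted")
    parser.add_argument("--glove", action="store_true",
                        help="enable the glove preprocessor")
    parser.add_argument("--show_color_mask", action="store_true",
                        help="display the current color mask")
    # Bounds on the A and B channels in LAB space. The defaults reuse the
    # README example values and are an assumption, not the project's defaults.
    parser.add_argument("--lowA", type=int, default=130)
    parser.add_argument("--lowB", type=int, default=120)
    parser.add_argument("--highA", type=int, default=145)
    parser.add_argument("--highB", type=int, default=130)
    return parser


args = build_parser().parse_args(
    ["--glove", "--show_color_mask",
     "--lowA", "130", "--lowB", "120", "--highA", "145", "--highB", "130"]
)
print(args.glove, args.show_color_mask, args.lowA, args.highB, args.video)
```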

In the picture on the right, the white area is inside the mask. Tune lowA, lowB, highA, and highB so that the mask covers the whole glove. Including as few background pixels as possible also improves accuracy, but always make sure the whole glove is covered before you try to remove background. Experiment with the arguments until the system works well with your input.
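The thresholding behind such a mask can be sketched with NumPy alone. This is an illustration, not the code in OpenCVMaskGenerator.py: the function name `glove_mask` is hypothetical, and ignoring the L channel is an assumption based on the flags exposing only A and B bounds. In practice the LAB image would come from something like `cv2.cvtColor(frame, cv2.COLOR_BGR2LAB)`.

```python
import numpy as np


def glove_mask(lab_image, low_a, low_b, high_a, high_b):
    """Return a uint8 mask: 255 where A and B fall inside the bounds, else 0.

    lab_image is an H x W x 3 array in (L, A, B) channel order.
    The L channel is ignored here (an assumption for this sketch).
    """
    a = lab_image[..., 1]
    b = lab_image[..., 2]
    inside = (a >= low_a) & (a <= high_a) & (b >= low_b) & (b <= high_b)
    return inside.astype(np.uint8) * 255


# Tiny 2 x 2 synthetic LAB image: two glove-colored pixels, two background pixels.
lab = np.array(
    [[[100, 135, 125], [100, 150, 125]],
     [[100, 140, 128], [100, 135, 110]]],
    dtype=np.uint8,
)
mask = glove_mask(lab, low_a=130, low_b=120, high_a=145, high_b=130)
print(mask)
print("fraction of frame in mask:", (mask == 255).mean())
```

Watching the masked fraction while adjusting the bounds is one way to judge whether background pixels are leaking into the mask.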

.

├── README.md

├── app.py

├── conquer_cross_model.ipynb

├── images

│ └── ...

├── keypoint_classification_EN.ipynb

├── glove_utils

│ ├── __init__.py

│ ├── MediapipeDetector.py

│ ├── OpenCVGloveSolver.py

│ ├── OpenCVMaskGenerator.py

├── model

│ ├── __init__.py

│ ├── keypoint_classifier

│ │ ├── keypoint.csv

│ │ ├── keypoint_classifier.hdf5

│ │ ├── keypoint_classifier.py

│ │ ├── keypoint_classifier.tflite

│ │ └── keypoint_classifier_label.csv

│ ├── point_history_classifier

│ │ ├── datasets

│ │ │ ├── point_history.csv

│ │ │ ├── point_history_classifier_label.csv

│ │ │ ├── point_history_xinyang.csv

│ │ │ └── prediction_results.pkl

│ │ ├── point_history_classifier.py

│ │ ├── point_history_classifier_LSTM_ConquerCross.tflite

│ │ ├── point_history_classifier_LSTM_LR_random_10.tflite

│ │ ├── point_history_classifier_LSTM_LR_random_18.tflite

│ │ └── point_history_classifier_LSTM_LR_random_22.tflite

│ └── test.txt

├── utils

│ ├── __init__.py

│ ├── cleaningDataset.py

│ ├── cvfpscalc.py

│ ├── dataManipulation.py

│ ├── delete_polluted_data.py

│ └── hand_gesture_mediapipe.py

└── videos

└── ...

app.py is a sample program for inference. It can also collect training data (key points) for hand sign recognition and training data (index finger coordinate history) for finger gesture recognition.

keypoint_classification_EN.ipynb is a model training script for hand pose recognition.

conquer_cross_model.ipynb is a model training script for hand gesture recognition.

The glove_utils directory stores files related to the glove preprocessor and the MediaPipe detector.

The following files are stored.

- MediaPipe hand detector (MediapipeDetector.py)

- Mask generator based on the glove's color (OpenCVMaskGenerator.py)

- Glove preprocessor, which helps MediaPipe detect a gloved hand (OpenCVGloveSolver.py)

The model/keypoint_classifier directory stores files related to hand sign recognition.

The following files are stored.

- Training data (keypoint.csv)

- Trained model (keypoint_classifier.tflite)

- Label data (keypoint_classifier_label.csv)

- Inference module (keypoint_classifier.py)

The model/point_history_classifier directory stores files related to hand gesture recognition.

The following files are stored.

- Training data (./datasets/point_history.csv)

- Trained model (point_history_classifier_LSTM_LR_random_N.tflite), where N is a parameter that can be set during testing to pad multiple gestures to a maximum length of N.

- Label data (./datasets/point_history_classifier_label.csv)

- Inference module (point_history_classifier.py)
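The trained gesture models are tied to a maximum length N, so a trajectory generally has to be brought to exactly N points before it is fed to the model. The sketch below shows one way to do that. It is an assumption for illustration only: the function name `pad_history`, zero padding at the end, and keeping the most recent points when truncating may all differ from what this project does.

```python
def pad_history(points, n, pad_value=(0.0, 0.0)):
    """Pad or truncate a list of (x, y) points to exactly n entries.

    Truncation keeps the most recent n points; padding appends pad_value.
    Both choices are assumptions made for this sketch.
    """
    if n <= 0:
        raise ValueError("n must be a positive integer")
    trimmed = list(points)[-n:]
    return trimmed + [pad_value] * (n - len(trimmed))


short = [(0.1, 0.2), (0.3, 0.4)]
print(pad_history(short, 4))

long_track = [(float(i), float(i)) for i in range(6)]
print(pad_history(long_track, 4))
```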

The images directory stores all the images in this project.

The videos directory stores all the videos in this project.

The utils directory contains functions to clean the dataset, augment the dataset, and evaluate the system.

- First step of removing polluted data (delete_polluted_data.py): writes the polluted entries found in the raw dataset to a txt file.

- Dataset cleaning (cleaningDataset.py): deletes all the entries that were screened out.

- Data augmentation by rotating, translating, or scaling the original dataset (utils/dataManipulation.py).

- Mode change detection (hand_gesture_mediapipe.py): detects the transition between static hand pose mode and dynamic hand gesture mode.

- Adding nonsense data points (utils/dataManipulation.py).

- Randomly inserting and/or appending another data point before/after the current data point (utils/dataManipulation.py).

- Module for FPS measurement (cvfpscalc.py).
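The rotate/translate/scale augmentation listed above can be illustrated with a short standard-library sketch. It is not the code in dataManipulation.py: the function name `transform_trajectory`, rotating about the origin, and the order of operations (rotate, then scale, then translate) are assumptions.

```python
import math


def transform_trajectory(points, angle_deg=0.0, scale=1.0, shift=(0.0, 0.0)):
    """Rotate about the origin, then scale, then translate each (x, y) point."""
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    dx, dy = shift
    out = []
    for x, y in points:
        # Standard 2D rotation by theta (counterclockwise for positive angles
        # in a coordinate system whose y axis points up).
        rx = x * cos_t - y * sin_t
        ry = x * sin_t + y * cos_t
        out.append((rx * scale + dx, ry * scale + dy))
    return out


trajectory = [(1.0, 0.0), (0.0, 1.0)]
augmented = transform_trajectory(trajectory, angle_deg=90, scale=2.0, shift=(1.0, 1.0))
print([(round(x, 6), round(y, 6)) for x, y in augmented])
```

Applying several small random angles, scales, and shifts to each recorded trajectory would yield additional training samples with the same label.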

Yipeng Lin - yipengli@andrew.cmu.edu

Xinyang Chen - xinyangc@andrew.cmu.edu