EVA is an open-domain Chinese dialogue system containing the largest Chinese pre-trained dialogue model, with 2.8B parameters. The model is pre-trained on WDC-Dialogue, which includes 1.4B Chinese dialogue samples from different domains. Paper link: https://arxiv.org/abs/2108.01547.

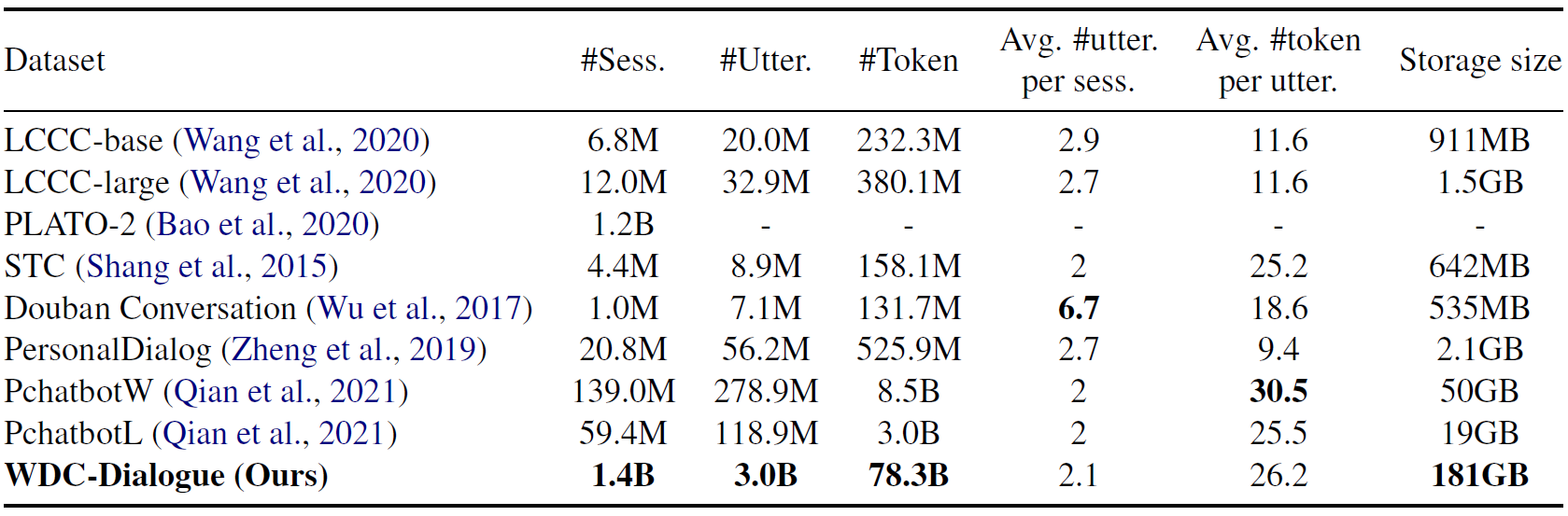

We construct a dataset named WDC-Dialogue from Chinese social media to train EVA. Specifically, conversations are gathered from various sources, and a rigorous data-cleaning pipeline is designed to ensure the quality of WDC-Dialogue. We mainly focus on three categories of textual interaction data: reposts on social media, comments/replies on various online forums, and online question-and-answer (Q&A) exchanges. Each round of these textual interactions is converted into a dialogue session using well-designed parsing rules. The following table shows statistics of the filtered WDC-Dialogue dataset and other Chinese dialogue datasets.

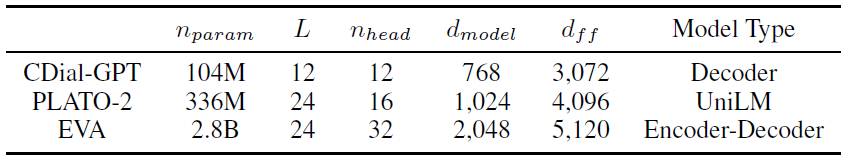
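The parsing rules themselves are not described here, but once a session exists it is commonly split into context-response training pairs. The sketch below assumes a session is simply an ordered list of utterance strings (for example a post followed by its reply chain); `session_to_pairs` and its `max_context_turns` parameter are hypothetical and do not reproduce the authors' pipeline.

```python
from typing import List, Optional, Tuple

# Assumption: a session is an ordered list of utterances, e.g. a post
# followed by its chain of replies. Not the WDC-Dialogue parsing rules.
Session = List[str]
Pair = Tuple[List[str], str]


def session_to_pairs(session: Session, max_context_turns: Optional[int] = None) -> List[Pair]:
    """Split one session into (context, response) pairs.

    Every utterance after the first becomes a response whose context is the
    preceding utterances, optionally truncated to the most recent
    `max_context_turns` turns (a hypothetical parameter).
    """
    if max_context_turns is not None and max_context_turns < 1:
        raise ValueError("max_context_turns must be at least 1")
    # Drop utterances that are empty after stripping whitespace.
    turns = [u.strip() for u in session if u.strip()]
    pairs: List[Pair] = []
    for i in range(1, len(turns)):
        context = turns[:i]
        if max_context_turns is not None:
            context = context[-max_context_turns:]
        pairs.append((context, turns[i]))
    return pairs
```

For a three-turn session such as `["今天天气真好", "是啊，适合出去玩", "去哪儿玩？"]`, this yields two pairs: the second utterance answering the first, and the third answering the first two.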

EVA is a Transformer-based dialogue model with a bi-directional encoder and a uni-directional decoder. The following table presents EVA's model details and compares it with previous large-scale Chinese pre-trained dialogue models.
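The difference between a bi-directional encoder and a uni-directional decoder comes down to their attention masks. The sketch below assumes a standard encoder-decoder Transformer in which the dialogue context is fed to the encoder and the response is generated by the decoder; the function names are hypothetical and not taken from `src/`. A value of 1 means "query position may attend to key position".

```python
from typing import List

# mask[i][j] == 1 means query position i may attend to key position j.
Mask = List[List[int]]


def encoder_self_attention_mask(src_len: int) -> Mask:
    """Bi-directional: every context token can see every other context token."""
    return [[1] * src_len for _ in range(src_len)]


def decoder_self_attention_mask(tgt_len: int) -> Mask:
    """Uni-directional (causal): response token i only sees tokens 0..i."""
    return [[1 if j <= i else 0 for j in range(tgt_len)] for i in range(tgt_len)]


def cross_attention_mask(tgt_len: int, src_len: int) -> Mask:
    """Each decoder position can attend to every encoder output position."""
    return [[1] * src_len for _ in range(tgt_len)]
```

For example, `decoder_self_attention_mask(3)` is the lower-triangular pattern `[[1, 0, 0], [1, 1, 0], [1, 1, 1]]`, which is what lets the decoder generate the response left to right while the encoder reads the whole context at once.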

The model can be downloaded from BAAI's repository. The downloaded folder should have the following structure:

```
eva/
├── 222500
│   └── mp_rank_00_model_states.pt
├── latest_checkpointed_iteration.txt
```
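Before launching inference, it can help to confirm that the download matches this layout. The sketch below assumes, as in Megatron-style checkpoints, that `latest_checkpointed_iteration.txt` contains the iteration number (here `222500`) naming the sub-folder that holds the weights; `resolve_checkpoint` is a hypothetical helper, not part of `src/`.

```python
from pathlib import Path


def resolve_checkpoint(ckpt_dir: Path, mp_rank: int = 0) -> Path:
    """Return the model-state file that the metadata file points to.

    Assumption: latest_checkpointed_iteration.txt holds the iteration number,
    and the weights live in <iteration>/mp_rank_XX_model_states.pt.
    """
    meta = ckpt_dir / "latest_checkpointed_iteration.txt"
    if not meta.is_file():
        raise FileNotFoundError(f"metadata file not found: {meta}")
    iteration = meta.read_text().strip()
    weights = ckpt_dir / iteration / f"mp_rank_{mp_rank:02d}_model_states.pt"
    if not weights.is_file():
        raise FileNotFoundError(f"model states not found: {weights}")
    return weights
```

Calling `resolve_checkpoint(Path("/path/to/eva"))` either returns the weights file or raises with the missing path, which is quicker to diagnose than a model silently starting from random initialization.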

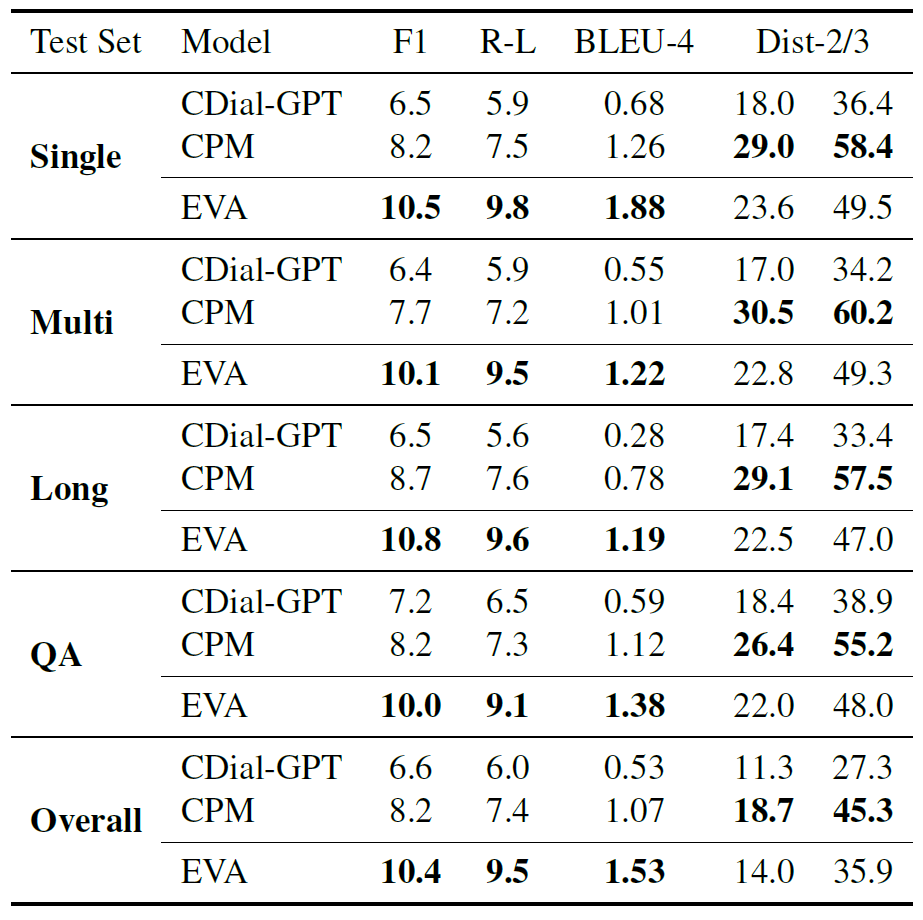

We compare EVA with Chinese pre-trained models including CDial-GPT and CPM. The automatic evaluation results, measured by uni-gram F1, ROUGE-L, BLEU-4, and distinct n-grams, are shown below:

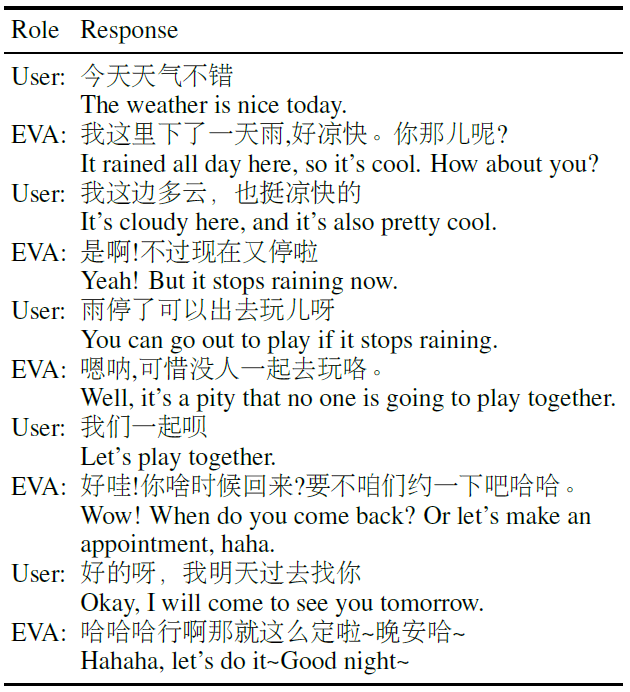
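Two of these metrics are simple enough to sketch directly. The code below is not the evaluation script behind the reported numbers: it assumes character-level tokenization (a common choice for Chinese) and computes uni-gram F1 from clipped token overlap and distinct-n as unique n-grams divided by total n-grams across all responses. ROUGE-L and BLEU-4 are omitted.

```python
from collections import Counter
from typing import List, Sequence


def tokenize(text: str) -> List[str]:
    # Assumption: character-level tokens with whitespace dropped; the official
    # evaluation may tokenize differently.
    return [ch for ch in text if not ch.isspace()]


def unigram_f1(hypothesis: str, reference: str) -> float:
    """Uni-gram F1 between a generated response and a reference response."""
    hyp, ref = tokenize(hypothesis), tokenize(reference)
    # Counter intersection clips each token's count to the smaller side.
    overlap = sum((Counter(hyp) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(hyp)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def distinct_n(responses: Sequence[str], n: int) -> float:
    """Distinct-n: unique n-grams divided by total n-grams over all responses."""
    if n < 1:
        raise ValueError("n must be at least 1")
    total = 0
    unique = set()
    for response in responses:
        tokens = tokenize(response)
        ngrams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
        total += len(ngrams)
        unique.update(ngrams)
    return len(unique) / total if total else 0.0
```

Distinct-n is computed over the whole set of generated responses, so it rewards diversity across the corpus rather than within a single reply, whereas uni-gram F1 is computed per response and then typically averaged.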

We also present an example of multi-turn generation results in the interactive human evaluation:

We provide the inference code for EVA in `src/`.

Inference requires only about 7000 MB of GPU memory, so a single GPU is generally enough. We provide two options for setting up the environment.

Option 1: install the dependencies manually. First install the required Python packages:

```bash
pip install -r requirements.txt
```

Then build and install NVIDIA Apex from source:

```bash
git clone https://github.com/NVIDIA/apex
cd apex
pip install -v --disable-pip-version-check --no-cache-dir --global-option="--cpp_ext" --global-option="--cuda_ext" ./
```

Finally, install DeepSpeed. The version we used is v0.3.9, which can be installed from its repository or with pip:

```bash
pip install deepspeed==0.3.9
```

Since DeepSpeed has some bugs, you need to make small modifications to the package; see TsinghuaAI/CPM-2-Finetune#11 for more information. Specifically, two lines of code in `deepspeed/runtime/zero/stage1.py` need to be changed. We provide the modified `stage1.py` in our repo, so you can simply replace `deepspeed/runtime/zero/stage1.py` with it.
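If you prefer to script the replacement rather than copy the file by hand, here is a minimal sketch. It assumes the patched `stage1.py` sits at the repository root (check where it actually lives in the repo) and that the installed package follows the `deepspeed/runtime/zero/stage1.py` layout named above; `find_deepspeed_root` and `replace_stage1` are hypothetical helpers, and the original file is kept as `stage1.py.orig`.

```python
import importlib.util
import shutil
from pathlib import Path


def find_deepspeed_root() -> Path:
    """Locate the installed deepspeed package directory without importing it."""
    spec = importlib.util.find_spec("deepspeed")
    if spec is None or spec.origin is None:
        raise ModuleNotFoundError("deepspeed is not installed")
    # spec.origin points at deepspeed/__init__.py
    return Path(spec.origin).parent


def replace_stage1(deepspeed_root: Path, patched_stage1: Path) -> Path:
    """Back up runtime/zero/stage1.py, then overwrite it with the patched copy."""
    target = deepspeed_root / "runtime" / "zero" / "stage1.py"
    if not target.is_file():
        raise FileNotFoundError(f"unexpected DeepSpeed layout, missing {target}")
    backup = target.with_name("stage1.py.orig")
    if not backup.exists():  # keep the pristine original across repeated runs
        shutil.copy2(target, backup)
    shutil.copy2(patched_stage1, target)
    return target
```

Usage, from the directory containing the patched file: `replace_stage1(find_deepspeed_root(), Path("stage1.py"))`. Restoring the backup undoes the change if you later need the unmodified package.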

Option 2: use Docker. Pull the image:

```bash
docker pull gyxthu17/eva:1.2
```

Since the environment is already configured in the Docker image, you do not need to set any environment variables. You may need to mount this directory into the container. For example, to mount it at `/mnt`, start the container with:

```bash
docker run -ti -v ${PWD}:/mnt gyxthu17/eva:1.2 /bin/bash
```

Before running the code, set `WORKING_DIR` in the inference script to the path of this EVA directory, and set `CKPT_PATH` to the path where the pre-trained weights are stored.
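Editing the two lines by hand is the simplest route. If you switch between machines or containers often, a small helper can rewrite them instead. This sketch assumes each variable is assigned on its own line in the form `NAME=value`; `set_script_vars` is hypothetical, and the paths in the usage line are placeholders.

```python
import re
from pathlib import Path


def set_script_vars(script: Path, **values: str) -> None:
    """Rewrite `NAME=value` assignment lines in a shell script in place.

    Assumption: each variable (e.g. WORKING_DIR, CKPT_PATH) is assigned at the
    start of its own line. Raises KeyError if an assignment is not found.
    """
    text = script.read_text()
    for name, value in values.items():
        pattern = re.compile(rf"^{re.escape(name)}=.*$", re.MULTILINE)
        if not pattern.search(text):
            raise KeyError(f"{name}= not found in {script}")
        # A function replacement avoids backslash escaping issues in `value`.
        text = pattern.sub(lambda _m: f'{name}="{value}"', text)
    script.write_text(text)
```

For example, inside the Docker container: `set_script_vars(Path("src/scripts/infer_enc_dec_interactive.sh"), WORKING_DIR="/mnt", CKPT_PATH="/mnt/eva")`.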

Then run the following commands:

```bash
cd src/
bash scripts/infer_enc_dec_interactive.sh
```

After running the command, first make sure the pre-trained weights are loaded. If they are, the log printed to stdout should contain messages like `successfully loaded /path-to-checkpoint/eva/mp_rank_01_model_states.pt`. Otherwise, the message `WARNING: could not find the metadata file /***/latest_checkpointed_iteration.txt will not load any checkpoints and will start from random` will be displayed. Note that even when the model loads successfully, you will see messages like `The following zero checkpoints paths are missing: ['/path-to-checkpoint/eva/200000/zero_pp_rank_0_mp_rank_00_optim_states.pt',...`, which mean the optimizer states are not loaded. This DOES NOT affect model inference, and you can safely ignore it.
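The check described above can be automated against a saved copy of the stdout. This minimal sketch matches only the message fragments quoted above, case-insensitively; `checkpoint_status` is a hypothetical helper, and the exact log wording may differ between versions.

```python
from typing import Iterable

# Fragments taken from the messages quoted above, lower-cased for matching.
SUCCESS = "successfully loaded"
NO_METADATA = "could not find the metadata file"
MISSING_OPTIM = "the following zero checkpoints paths are missing"


def checkpoint_status(log_lines: Iterable[str]) -> str:
    """Classify inference stdout as 'loaded', 'random-init', or 'unknown'."""
    loaded = False
    for line in log_lines:
        text = line.lower()
        if MISSING_OPTIM in text:
            continue  # optimizer states are not needed for inference
        if NO_METADATA in text:
            return "random-init"
        if SUCCESS in text:
            loaded = True
    return "loaded" if loaded else "unknown"
```

A result of `"random-init"` means `CKPT_PATH` most likely points at the wrong directory; `"unknown"` means neither message appeared and the log should be inspected by hand.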

If things go well, you will eventually enter an interactive interface. Have fun talking to EVA!

The pre-trained models aim to facilitate research on conversation generation. The model provided in this repository is trained on a large dataset collected from various sources. Although a rigorous cleaning and filtering process has been applied to both the data and the model output, there is no guarantee that all inappropriate content has been completely removed. Content generated by the model does not represent the authors' opinions. The decoding script provided in this repository is for research purposes only. We are not responsible for any content generated using our model.

```bibtex
@article{coai2021eva,
  title={EVA: An Open-Domain Chinese Dialogue System with Large-Scale Generative Pre-Training},
  author={Zhou, Hao and Ke, Pei and Zhang, Zheng and Gu, Yuxian and Zheng, Yinhe and Zheng, Chujie and Wang, Yida and Wu, Chen Henry and Sun, Hao and Yang, Xiaocong and Wen, Bosi and Zhu, Xiaoyan and Huang, Minlie and Tang, Jie},
  journal={arXiv preprint arXiv:2108.01547},
  year={2021}
}
```