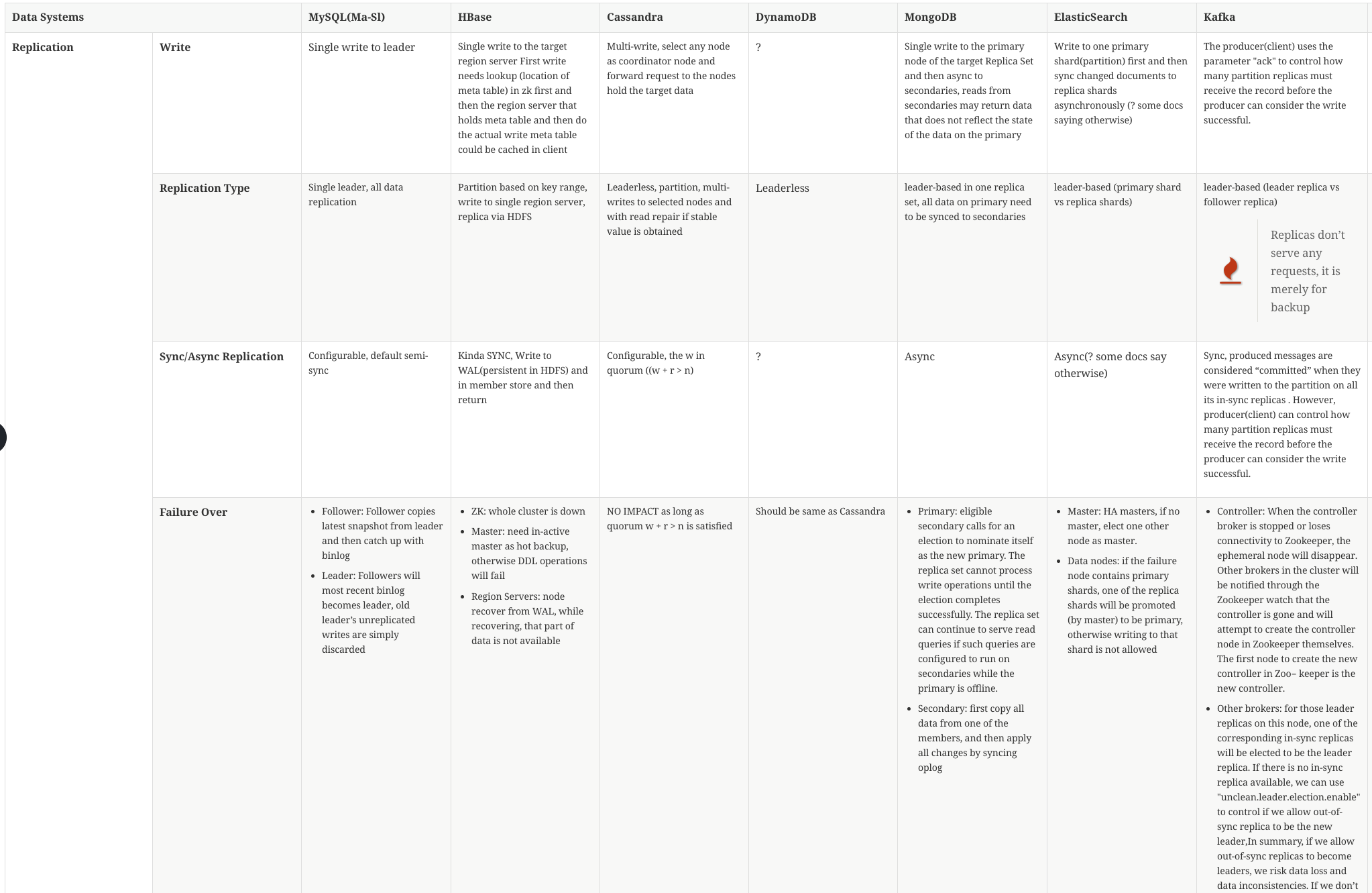

While reading the book "Designing Data-Intensive Applications", I tried to understand the essence of distributed data systems: which techniques are commonly applied in the data systems that we (as developers) interact with every day, and what their pros and cons are.

Although the book mentions some of those data systems along the way, it has no central place that categorizes the popular distributed data systems by their characteristics. That is why I decided to make such a table: it helps me memorize them, and it may be valuable to other developers who are interested in this area.

Caution: The comparison table is neither finished nor 100% correct; contributions are welcome!

| Data Systems | | MySQL (Ma-Sl) | HBase | Cassandra | DynamoDB | MongoDB | ElasticSearch | Kafka | RabbitMQ |
|---|---|---|---|---|---|---|---|---|---|
| Replication | Write | Single write to the leader | Single write to the target region server. The first write must look up the location of the meta table in ZooKeeper, then ask the region server that holds the meta table, and only then do the actual write; the meta table can be cached in the client | Multi-write: any node can be selected as the coordinator node, which forwards the request to the nodes that hold the target data | ? | Single write to the primary node of the target replica set, then async replication to the secondaries; reads from secondaries may return data that does not reflect the state of the data on the primary | Write to one primary shard (partition) first, then sync changed documents to the replica shards asynchronously (? some docs say otherwise) | The producer (client) uses the parameter "acks" to control how many partition replicas must receive the record before the producer can consider the write successful | ? |
| | Replication Type | Single leader, all data replicated | Partitioned by key range, write to a single region server, replicas via HDFS | Leaderless, partitioned, multi-write to selected nodes, with read repair if a stale value is obtained | Leaderless | Leader-based within one replica set; all data on the primary needs to be synced to the secondaries | Leader-based (primary shard vs replica shards) | Leader-based (leader replica vs follower replica) | Leader-based |
| | Sync/Async Replication | Configurable, default semi-sync | Effectively sync: the write goes to the WAL (persisted in HDFS) and to the MemStore, and then returns | Configurable via w in the quorum condition (w + r > n) | ? | Async | Async (? some docs say otherwise) | Sync: produced messages are considered “committed” when they have been written to the partition on all its in-sync replicas. However, the producer (client) can control how many partition replicas must receive the record before it considers the write successful | Y |
| | Failover | | | No impact as long as the quorum condition w + r > n is satisfied | Should be the same as Cassandra | | | | ? |
| | Multi-leader Replication Topology | Circular by default (Cluster version) | NA | Circular | ? | NA. A secondary (follower) chooses to sync the oplog from the primary or from another secondary based on ping time and the replication status of the other secondaries | NA. The leader (the node with the primary shard) forwards changed documents to the nodes with replicas | NA | Y |
| | Replication Logs | Originally statement-based; falls back to logical (row-based) if there is any nondeterminism in the statement | WAL | Commit log, just like the WAL in HBase; however, the write does not wait for the write to the in-memory store to finish | Y | Oplog; should be statement-based with transforms | No log: shards are copied initially, and then changed documents are forwarded to keep the primary shard and its replicas in sync | The topic is actually a partitioned log; brokers holding follower replicas receive messages from the brokers holding the corresponding leader replicas by log offset, just like a client consuming messages | Y |
| | Multi-Write Conflict Resolution | NA (all writes are sent to the leader) | NA (writes are region-based, no conflict) | LWW (last write wins) | Y | NA (writes are shard (partition) based, no conflict) | NA (writes are shard (partition) based, no conflict) | NA (writes are shard (partition) based, no conflict) | Y |
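
The quorum condition w + r > n that appears in the Cassandra column can be made concrete with a few lines of Python. This is only a sketch of the arithmetic, not Cassandra's implementation; the function names `quorum_overlaps` and `tolerated_failures` are hypothetical and chosen for illustration.

```python
def quorum_overlaps(n: int, w: int, r: int) -> bool:
    """Return True when every read quorum must share a node with every write quorum.

    n is the number of replicas, w the number of nodes that must acknowledge
    a write, and r the number of nodes queried on a read.
    """
    if not (1 <= w <= n and 1 <= r <= n):
        raise ValueError("w and r must each be between 1 and n")
    return w + r > n


def tolerated_failures(n: int, w: int, r: int) -> int:
    """Replicas that may be down while both reads and writes can still reach their quorum."""
    return n - max(w, r)


if __name__ == "__main__":
    # A common choice: n = 3, w = 2, r = 2.
    print(quorum_overlaps(3, 2, 2))     # True: 2 + 2 > 3
    print(quorum_overlaps(3, 1, 1))     # False: a read may miss the latest write
    print(tolerated_failures(3, 2, 2))  # 1
```

With n = 3 and w = r = 2, one node can fail without affecting reads or writes, which is the "no impact" case in the Failover row.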

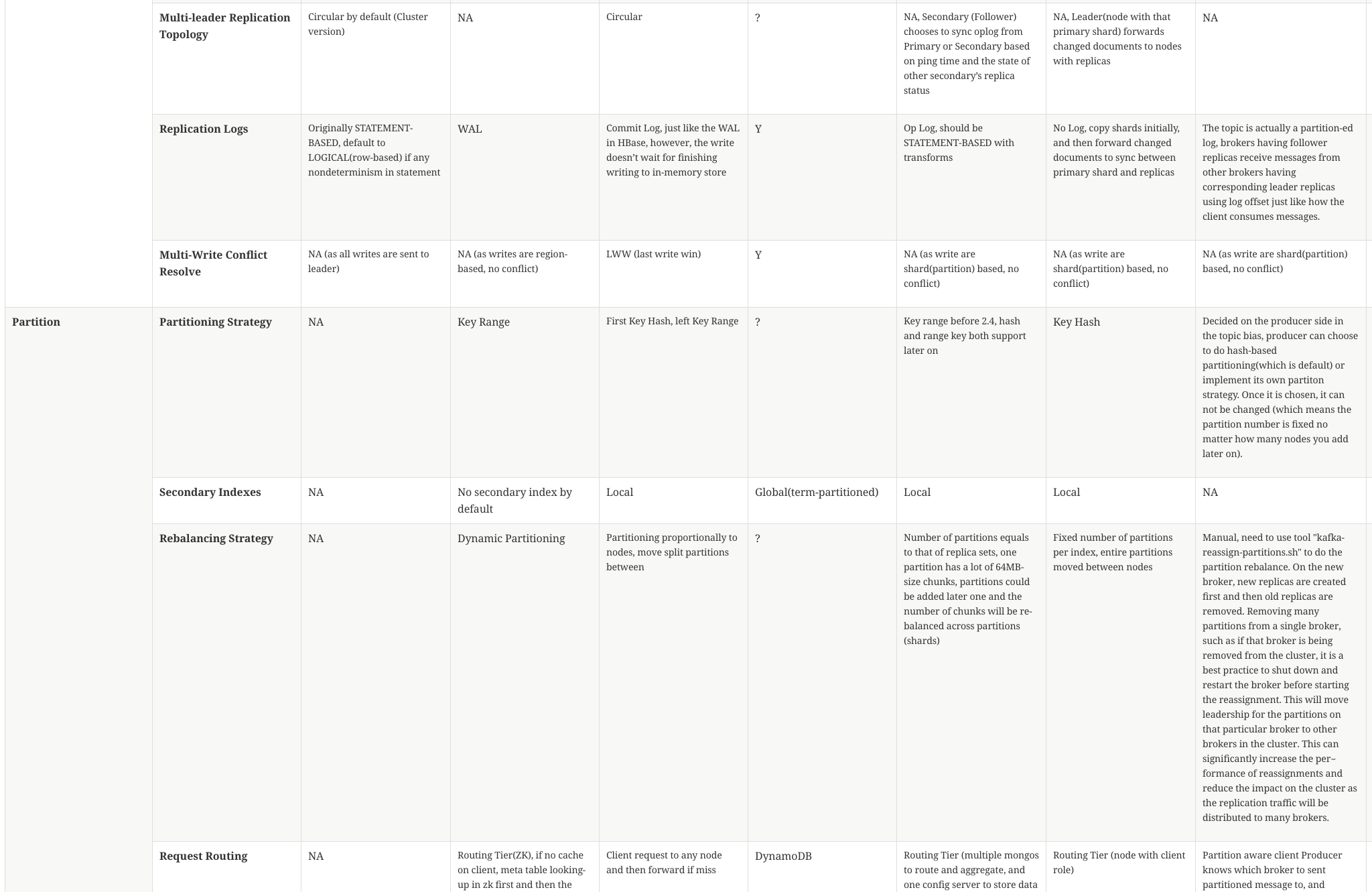
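
The LWW (last write wins) entry in the conflict resolution row can be illustrated with a small merge function. This is a minimal sketch under assumptions: the `Versioned` type, the integer timestamp, and the tie-break on the value are all made up for illustration and do not describe any particular database's internals.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Versioned:
    value: str
    timestamp: int  # assumed to be supplied by the writer, e.g. microseconds since epoch


def lww_merge(a: Versioned, b: Versioned) -> Versioned:
    """Keep the write with the larger timestamp.

    Ties are broken by comparing values (an assumption made here) so that
    every replica picks the same winner regardless of arrival order.
    """
    return max(a, b, key=lambda v: (v.timestamp, v.value))


if __name__ == "__main__":
    older = Versioned("cart=[book]", timestamp=100)
    newer = Versioned("cart=[pen]", timestamp=200)
    # Both merge orders converge on the same value; the older write is dropped.
    print(lww_merge(older, newer).value)
    print(lww_merge(newer, older).value)
```

The merge converges, but the concurrent write with the smaller timestamp is silently discarded, which is the usual trade-off of LWW.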

| Data Systems | | MySQL (Ma-Sl) | HBase | Cassandra | DynamoDB | MongoDB | ElasticSearch | Kafka | RabbitMQ |
|---|---|---|---|---|---|---|---|---|---|
| Partition | Partitioning Strategy | NA | Key range | Hash of the first key, key range on the remaining keys | ? | Key range before 2.4; both hash and range keys are supported later on | Key hash | Decided on the producer side on a per-topic basis: the producer can use hash-based partitioning (the default) or implement its own partitioning strategy. Once chosen, it cannot be changed (which means the number of partitions is fixed no matter how many nodes you add later on) | ? |
| | Secondary Indexes | NA | No secondary index by default | Local | Global (term-partitioned) | Local | Local | NA | ? |
| | Rebalancing Strategy | NA | Dynamic partitioning | Partitioning proportionally to nodes; split partitions are moved between nodes | ? | The number of partitions equals the number of replica sets; each partition holds many 64 MB chunks; partitions can be added later, and the chunks are then rebalanced across partitions (shards) | Fixed number of partitions per index; entire partitions are moved between nodes | Manual: the tool "kafka-reassign-partitions.sh" is needed to rebalance partitions. On the new broker, new replicas are created first and then the old replicas are removed. When removing many partitions from a single broker, for example because that broker is being removed from the cluster, it is a best practice to shut down and restart the broker before starting the reassignment. This moves leadership for the partitions on that broker to other brokers in the cluster, which can significantly increase the performance of reassignments and reduce the impact on the cluster, as the replication traffic is distributed to many brokers | RabbitMQ |
| | Request Routing | NA | Routing tier (ZK): if nothing is cached on the client, the meta table must be looked up in ZooKeeper first and then on the region server; the meta table can be cached in the client | The client sends the request to any node, which forwards it on a miss | DynamoDB | Routing tier (multiple mongos instances route and aggregate, and one config server stores the data location information, i.e. which partition holds what) | Routing tier (node with the client role) | Partition-aware client: the producer knows which broker to send a partitioned message to, and the consumer knows which partitions it is responsible for receiving messages from | RabbitMQ |
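
The key-hash strategy, and the reason a fixed partition count matters for it, can be sketched as follows. This is an illustrative assumption rather than any system's real partitioner: `zlib.crc32` stands in for whatever hash function a system actually uses, and the names `partition_for` and `moved_keys` are hypothetical.

```python
import zlib
from typing import Iterable, List


def partition_for(key: bytes, num_partitions: int) -> int:
    """Map a key to a partition by hashing it and taking the remainder."""
    if num_partitions <= 0:
        raise ValueError("num_partitions must be positive")
    # crc32 is deterministic across runs, unlike Python's built-in hash() for bytes.
    return zlib.crc32(key) % num_partitions


def moved_keys(keys: Iterable[bytes], old_n: int, new_n: int) -> List[bytes]:
    """Return the keys whose partition changes when the partition count changes."""
    return [k for k in keys if partition_for(k, old_n) != partition_for(k, new_n)]


if __name__ == "__main__":
    keys = [f"user-{i}".encode() for i in range(1000)]
    # The same key always lands on the same partition while the count is fixed.
    print(partition_for(b"user-42", 8) == partition_for(b"user-42", 8))
    # Changing the count remaps many keys, which breaks per-key ordering.
    print(len(moved_keys(keys, old_n=8, new_n=9)), "of", len(keys), "keys would move")
```

Because `hash(key) % n` depends on n, changing the number of partitions sends many existing keys to a different partition, which is why systems that partition this way tend to fix the partition count up front and move whole partitions between nodes instead.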

Note: The CAP theorem is widely misunderstood; please refer to "Please stop calling databases CP or AP" and "What is the CAP theorem?" for clarification. However, it is still useful to most people for understanding the characteristics of those data systems.

| MySQL | HBase | Cassandra | DynamoDB | MongoDB | ElasticSearch | Kafka | RabbitMQ |
|---|---|---|---|---|---|---|---|
| Master-Slave: AP, Cluster: CP (I suspect Master-Slave is only P: when the master goes down, data is not available for reads and writes during master election) | CP | AP, eventually C | AP, eventually C | P, not A (during failover election), not C (async replica sync) | P, not A (during the promotion of primary shards), not C (async replica sync) | CA (the author states CA; however, I think it is a CP system: the system can tolerate node failure, but during the failure a primary partition has to be elected, and in that period the data in that partition is not available to read) | RabbitMQ |

- Add more data systems: TiDB, ZooKeeper, etcd, Consul
- Add "Read behavior", "Dependencies", "Consensus Algorithm", and "Distributed Transaction" to the table

- Designing Data-Intensive Applications (https://dataintensive.net/)
- MongoDB: The Definitive Guide, 2nd Edition (http://shop.oreilly.com/product/0636920028031.do)
- The MongoDB 4.0 Manual (https://docs.mongodb.com/manual/)
- Elasticsearch: The Definitive Guide (https://www.elastic.co/guide/en/elasticsearch/guide/current/index.html)
- Elasticsearch Reference (https://www.elastic.co/guide/en/elasticsearch/reference/current/index.html)
- Cassandra: The Definitive Guide (http://shop.oreilly.com/product/0636920010852.do)
- Kafka: The Definitive Guide (http://shop.oreilly.com/product/0636920044123.do)
- RabbitMQ in Action (https://www.manning.com/books/rabbitmq-in-action)