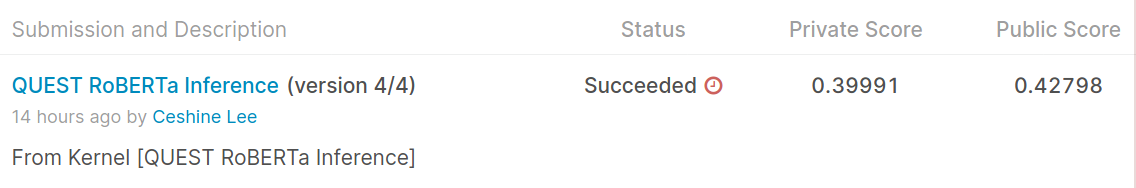

The 5-fold models can be trained in about an hour on a Colab TPU. Model performance after post-processing the predictions (to optimize the Spearman correlation with the target):

This score would place around 65th on the private leaderboard. The post-processing (which, unfortunately, I did not use during the competition) boosts the score by almost 0.03.
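As a rough illustration only, here is a minimal sketch of one common way to post-process for a Spearman-based score. It is not necessarily the post-processing behind the result above. It assumes the score is the mean of per-column Spearman correlations, that predictions lie in [0, 1], and that out-of-fold (OOF) predictions are available to tune on. The idea is to round each column's predictions to a coarse grid so that they form ties, as discrete targets do, and to keep a grid only when it improves that column's OOF correlation. The function names and the candidate grid sizes are hypothetical.

```python
import numpy as np


def average_ranks(x):
    """Rank values 1..n, giving tied values the average of their ranks."""
    x = np.asarray(x, dtype=float)
    order = np.argsort(x, kind="mergesort")
    sorted_x = x[order]
    ranks = np.empty(len(x), dtype=float)
    i = 0
    while i < len(x):
        j = i
        while j + 1 < len(x) and sorted_x[j + 1] == sorted_x[i]:
            j += 1
        ranks[order[i:j + 1]] = (i + j) / 2.0 + 1.0  # average of 1-based ranks i+1..j+1
        i = j + 1
    return ranks


def spearman(a, b):
    """Spearman correlation = Pearson correlation of the average ranks."""
    ra, rb = average_ranks(a), average_ranks(b)
    if ra.std() == 0 or rb.std() == 0:
        return float("nan")  # undefined when either input is constant
    return float(np.corrcoef(ra, rb)[0, 1])


def mean_columnwise_spearman(y_true, y_pred):
    """Average the per-column Spearman correlations, ignoring undefined columns."""
    scores = [spearman(y_true[:, c], y_pred[:, c]) for c in range(y_true.shape[1])]
    return float(np.nanmean(scores))


def fit_rounding(y_true, y_pred, candidate_levels=(3, 5, 10, 20, 50)):
    """Per column, pick the grid size (or None for no rounding) with the best OOF Spearman."""
    best = []
    for c in range(y_true.shape[1]):
        best_levels, best_score = None, spearman(y_true[:, c], y_pred[:, c])
        for levels in candidate_levels:
            rounded = np.round(y_pred[:, c] * levels) / levels
            score = spearman(y_true[:, c], rounded)
            # Comparisons with NaN are False, so a grid that collapses a column is never chosen.
            if score > best_score:
                best_levels, best_score = levels, score
        best.append(best_levels)
    return best


def apply_rounding(y_pred, levels_per_column):
    """Round each column to its chosen grid; columns with None are left untouched."""
    out = np.array(y_pred, dtype=float, copy=True)
    for c, levels in enumerate(levels_per_column):
        if levels is not None:
            out[:, c] = np.round(out[:, c] * levels) / levels
    return out
```

In this sketch the grid sizes would be fitted with `fit_rounding(oof_targets, oof_predictions)` and then applied to the test predictions with `apply_rounding`. Because the grids are selected on OOF predictions, they can overfit when a column has few positive examples.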

The notebook used to generate the above submission is available as a GitHub Gist and can be opened in Colab.

Run this command in both the project root directory and the tf-helper-bot subdirectory:

```bash
python setup.py sdist bdist_wheel
```

Then upload the .whl files from both dist directories to Google Cloud Storage.

Run this command, then upload the contents of cache/tfrecords to Google Cloud Storage:

```bash
python -m quest.prepare_tfrecords --model-name roberta-base -n-folds 5
```
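Both uploads (the wheels and the TFRecords) can also be scripted. Below is a minimal sketch of one possible approach. It assumes the google-cloud-storage Python package is installed and that credentials with write access to the bucket are configured. `collect_files`, `upload_dir`, the bucket name, and the `wheels`/`tfrecords` prefixes are all hypothetical. The project's own code may expect the files under different paths, so adjust the prefixes to match it.

```python
from pathlib import Path


def collect_files(local_dir, pattern="*"):
    """List (local path, POSIX-style relative name) pairs for files under local_dir.

    Searches recursively, so any subfolder layout is preserved in the names.
    """
    root = Path(local_dir)
    pairs = [(p, p.relative_to(root).as_posix()) for p in root.rglob(pattern) if p.is_file()]
    return sorted(pairs, key=lambda pair: pair[1])


def upload_dir(local_dir, bucket_name, prefix, pattern="*"):
    """Upload matching files to gs://<bucket_name>/<prefix>/<relative name>.

    Needs the google-cloud-storage package and Google Cloud credentials
    (e.g. application default credentials) with write access to the bucket.
    """
    # Imported inside the function so collect_files() works without the package.
    from google.cloud import storage

    bucket = storage.Client().bucket(bucket_name)
    uploaded = []
    for path, rel_name in collect_files(local_dir, pattern):
        blob_name = f"{prefix.rstrip('/')}/{rel_name}"
        bucket.blob(blob_name).upload_from_filename(str(path))
        uploaded.append(f"gs://{bucket_name}/{blob_name}")
    return uploaded


# Example usage (the bucket name and prefixes are placeholders):
# upload_dir("dist", "my-bucket", "wheels", pattern="*.whl")
# upload_dir("tf-helper-bot/dist", "my-bucket", "wheels", pattern="*.whl")
# upload_dir("cache/tfrecords", "my-bucket", "tfrecords")
```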

(Note: check requirements.txt for missing dependencies.)

Some of the TPU resources used in this project were generously sponsored by TensorFlow Research Cloud.