")}return r}function n(t,w,r){var x=h(t,r);var v=a(t);var y,s;if(v){y=d(v,x)}else{return}var q=c(t);if(q.length){s=document.createElement("pre");s.innerHTML=y.value;y.value=k(q,c(s),x)}y.value=i(y.value,w,r);var u=t.className;if(!u.match("(\\s|^)(language-)?"+v+"(\\s|$)")){u=u?(u+" "+v):v}if(/MSIE [678]/.test(navigator.userAgent)&&t.tagName=="CODE"&&t.parentNode.tagName=="PRE"){s=t.parentNode;var p=document.createElement("div");p.innerHTML="

"+y.value+"hljs.initHighlightingOnLoad(); </script>

This GitHub repository contains code and materials related to the article "Birds of a Feather Tweet Together. Bayesian Ideal Point Estimation Using Twitter Data," published in Political Analysis in 2015.

The original replication code can be found in the replication folder. See also Dataverse for the full replication materials, including data and output.

As an application of the method, in June 2015 I wrote a blog post on The Monkey Cage / Washington Post entitled "Who is the most conservative Republican candidate for president?" The replication code for the figure in the post is available in the primary folder.

Finally, this repository also contains an R package (tweetscores) with several functions to facilitate the application of this method in future research. The rest of this README file provides a tutorial showing how to use it.

To download data from Twitter’s API, you first need to create an authentication token by following these steps:

1 - Go to apps.twitter.com and sign in

2 - Click on “Create New App”

3 - Fill in the name, description, and website (it can be anything, even google.com), and make sure you leave ‘Callback URL’ empty

4 - Agree to user conditions

5 - Copy the consumer key and consumer secret and paste them below

install.packages("ROAuth")

library(ROAuth)

requestURL <- "https://api.twitter.com/oauth/request_token"

accessURL <- "https://api.twitter.com/oauth/access_token"

authURL <- "https://api.twitter.com/oauth/authorize"

consumerKey <- "XXXXXXXXXXXX"

consumerSecret <- "YYYYYYYYYYYYYYYYYYY"

my_oauth <- OAuthFactory$new(consumerKey=consumerKey, consumerSecret=consumerSecret,
    requestURL=requestURL, accessURL=accessURL, authURL=authURL)

6 - Run this line and go to the URL that appears on screen

my_oauth$handshake(cainfo = system.file("CurlSSL", "cacert.pem", package = "RCurl"))

7 - Copy the PIN number (6 digits) and paste it into the R console

8 - Change the working directory to the folder where you will save all your tokens

setwd("~/Dropbox/credentials/twitter")

9 - Now you can save the OAuth token for use in future sessions with R

save(my_oauth, file="my_oauth")

The following code will install the tweetscores package, as well as all other R packages necessary for the functions to run.

toInstall <- c("ggplot2", "scales", "R2WinBUGS", "devtools", "yaml", "httr", "RJSONIO")

install.packages(toInstall, repos = "http://cran.r-project.org")

library(devtools)

install_github("pablobarbera/twitter_ideology/pkg/tweetscores")

We can now estimate the ideology of any Twitter user in the US. The package includes pre-estimated ideology for political accounts and media outlets, so here we’re only replicating the second stage of the method: estimating a user’s ideology based on the accounts they follow.
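To make the second stage concrete, here is a rough sketch in base R. It is not the package's code. It assumes the spatial following model described in the article, in which the probability that a user follows an account declines with the squared ideological distance between them. The elite names, positions, and popularity values are all made up for illustration, and the other parameters of the full model are left out for simplicity.

```r
# Toy illustration only: every number below is invented, and this is not
# the package's implementation.
phi     <- c(elite_L1 = -0.8, elite_L2 = -1.5, elite_R1 = 1.2, elite_R2 = 0.6)  # hypothetical elite positions
alpha   <- c(elite_L1 =  1.0, elite_L2 =  0.2, elite_R1 = 0.8, elite_R2 = 0.5)  # hypothetical elite popularity
follows <- c(elite_L1 =  1,   elite_L2 =  1,   elite_R1 = 0,   elite_R2 = 0)    # 1 = the user follows the account

# Log-likelihood of a candidate user position theta: the probability of
# following an elite falls with the squared distance between theta and phi.
log_lik <- function(theta) {
  p <- plogis(alpha - (theta - phi)^2)
  sum(dbinom(follows, size = 1, prob = p, log = TRUE))
}

# One-dimensional search for the position that best explains the follows
theta_hat <- optimize(log_lik, interval = c(-5, 5), maximum = TRUE)$maximum
theta_hat  # negative here, because the user follows only the left-leaning toy accounts
```

The package functions used in the rest of this tutorial take care of this step with the pre-estimated elite parameters, so the toy values above are never needed in practice.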

# load package

library(tweetscores)

# downloading friends of a user

user <- "p_barbera"

friends <- getFriends(screen_name=user, oauth_folder="~/Dropbox/credentials/twitter")

## /Users/pablobarbera/Dropbox/credentials/twitter/oauth_token_32

## 15 API calls left

## 1065 friends. Next cursor: 0

## 14 API calls left

# estimate ideology with MCMC method

results <- estimateIdeology(user, friends)

## p_barbera follows 11 elites: nytimes maddow caitlindewey carr2n fivethirtyeight

## NickKristof nytgraphics nytimesbits NYTimeskrugman nytlabs thecaucus

## Chain 1

|=================================================================| 100%

## Chain 2

|=================================================================| 100%

Once we have this set of estimates, we can analyze them with a series of built-in functions.

# summarizing results

summary(results)## mean sd 2.5% 25% 50% 75% 97.5% Rhat n.eff

## beta -2.30 0.57 -3.37 -2.72 -2.25 -1.92 -1.26 1.02 200

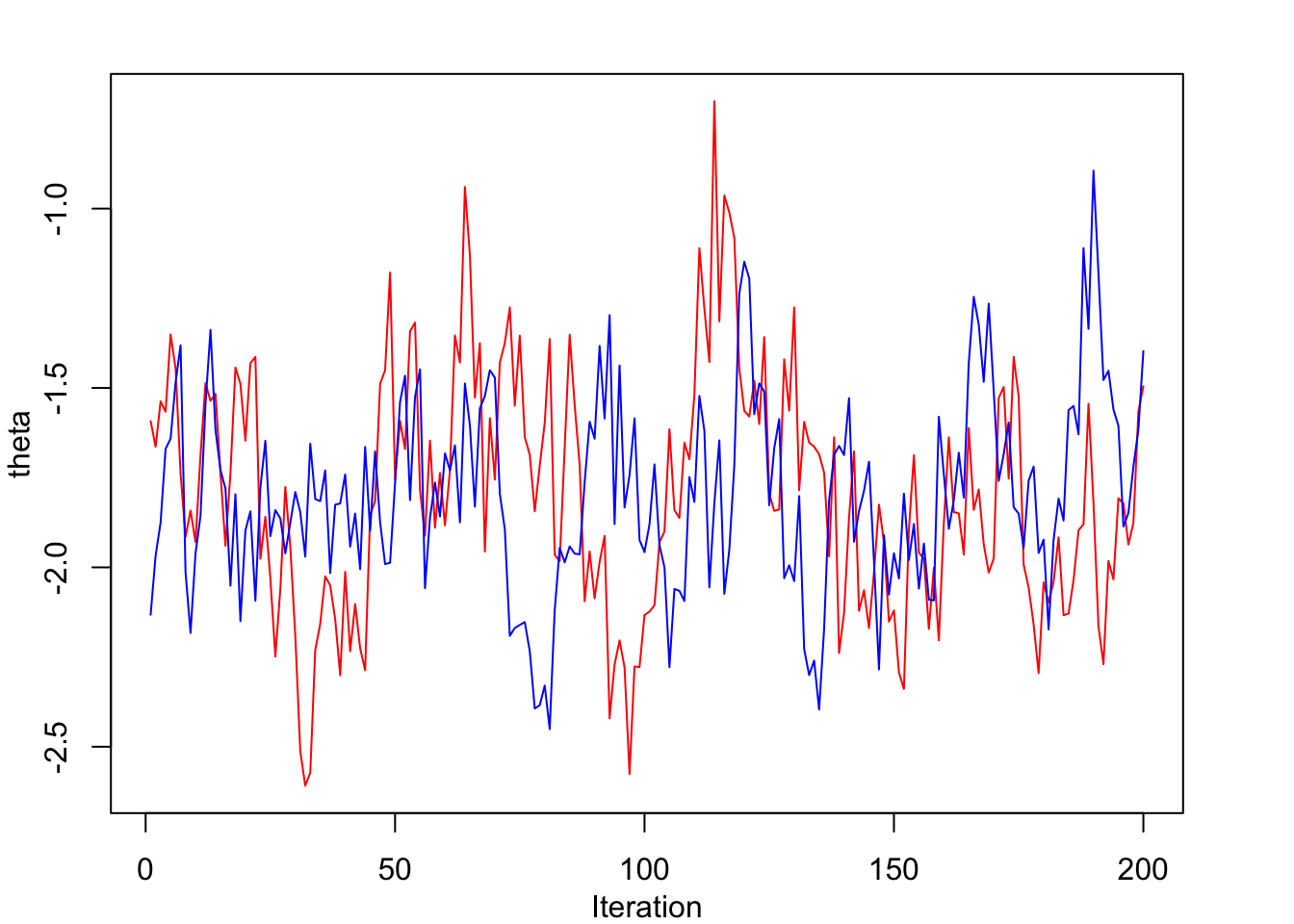

## theta -1.78 0.30 -2.28 -1.99 -1.82 -1.59 -1.11 1.00 200# assessing chain convergence using a trace plot

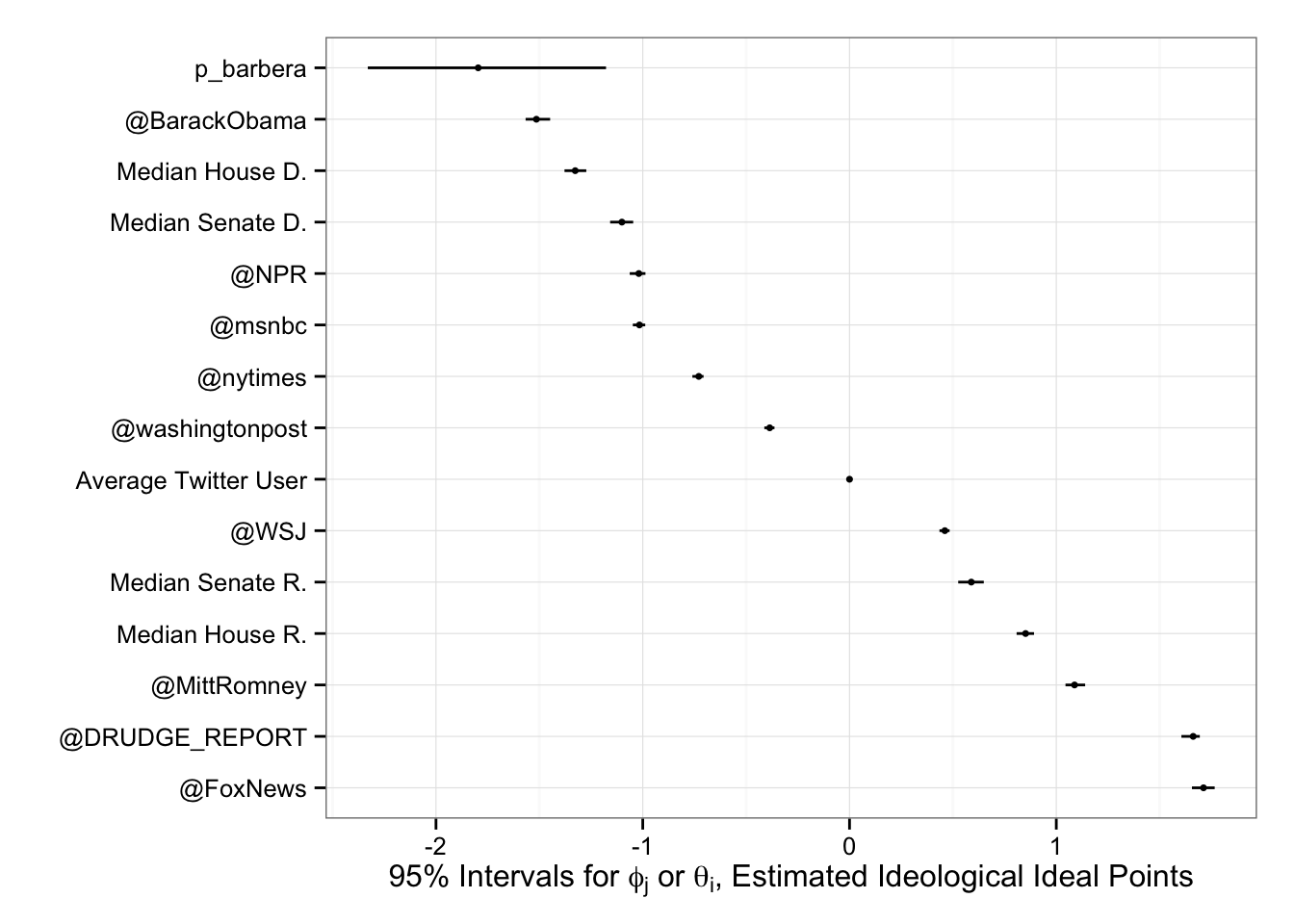

tracePlot(results, "theta")# comparing with other ideology estimates

plot(results)The previous function relies on a Metropolis-Hastings sampling algorithm to estimate ideology. However, we can also use Maximum Likelihood estimation to compute the distribution of the latent parameters. This method is much faster, since it’s not sampling from the posterior distribution of the parameters, but it will tend to give smaller standard errors. However, overall the results should be almost identical. (See here for the actual estimation functions for each of these two approaches.)
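The difference between the two routes can be sketched on a toy problem in base R. This is an illustration under stated assumptions, not the package's estimation code: the elite positions and popularity values are invented, the likelihood is a simple logit with quadratic distance, and the standard normal prior on theta is an assumption of this sketch.

```r
set.seed(123)

# Toy inputs (hypothetical values, not the package's pre-estimated parameters)
phi     <- c(-0.8, -1.5, 1.2, 0.6)   # elite positions
alpha   <- c( 1.0,  0.2, 0.8, 0.5)   # elite popularity intercepts
follows <- c(1, 1, 0, 0)             # 1 = the user follows that elite

# Log-likelihood of the user's position under a logit model with quadratic distance
log_lik <- function(theta) {
  p <- plogis(alpha - (theta - phi)^2)
  sum(dbinom(follows, size = 1, prob = p, log = TRUE))
}

# Log-posterior with a standard normal prior on theta (assumed for this sketch)
log_post <- function(theta) log_lik(theta) + dnorm(theta, log = TRUE)

# Route 1: random-walk Metropolis-Hastings, which samples from the posterior
n_iter  <- 5000
draws   <- numeric(n_iter)
current <- 0
for (i in seq_len(n_iter)) {
  proposal <- current + rnorm(1, sd = 0.5)
  # accept the proposal with probability min(1, posterior ratio)
  if (log(runif(1)) < log_post(proposal) - log_post(current)) current <- proposal
  draws[i] <- current
}
draws <- draws[-(1:1000)]  # discard burn-in

# Route 2: maximum likelihood, a single one-dimensional optimization
mle <- optimize(log_lik, interval = c(-5, 5), maximum = TRUE)$maximum

c(posterior_mean = mean(draws), posterior_sd = sd(draws), mle = mle)
```

The sampler evaluates the posterior thousands of times, while the optimizer needs only a handful of likelihood evaluations, which is where the speed difference comes from.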

# faster estimation using maximum likelihood

results <- estimateIdeology(user, friends, method="MLE")

## p_barbera follows 11 elites: nytimes maddow caitlindewey carr2n fivethirtyeight

## NickKristof nytgraphics nytimesbits NYTimeskrugman nytlabs thecaucus

summary(results)

## mean sd 2.5% 25% 50% 75% 97.5% Rhat n.eff

## beta -2.30 0.57 -3.37 -2.72 -2.25 -1.92 -1.26 1.02 200

## theta -1.78 0.30 -2.28 -1.99 -1.82 -1.59 -1.11 1.00 200

One limitation of the previous method is that users need to follow at least one political account. To partially overcome this problem, in a recently published article in Psychological Science we add a third stage to the model. In this stage we add accounts (not necessarily political) that are followed predominantly by liberal or by conservative users, under the assumption that other users who follow this same set of accounts are also likely to be liberal or conservative. To reduce computational costs, we rely on correspondence analysis to project all users onto the latent ideological space (see Supplementary Materials), and then we normalize all the estimates so that they follow a normal distribution with mean zero and standard deviation one. This package also includes a function that reproduces the last stage in the estimation, after all the additional accounts have been added:

# estimation using correspondence analysis

results <- estimateIdeology2(user, friends)

## p_barbera follows 22 elites: andersoncooper, billclinton, BreakingNews,

## cnnbrk, davidaxelrod, Gawker, HillaryClinton, maddow, MaddowBlog, mashable, mattyglesias,

## NateSilver538, NickKristof, nytimes, NYTimeskrugman, repjoecrowley, RonanFarrow,

## SCOTUSblog, StephenAtHome, TheDailyShow, TheEconomist, UniteBlue

results

## [1] -1.06158

The package also contains additional functions that I use in my research, which I’m providing here in case they are useful:

scrapeCongressData is a scraper of the list of Twitter accounts for Members of the US Congress from the unitedstates GitHub account.

getUsersBatch scrapes user information for more than 100 Twitter users from Twitter’s REST API.

getFollowers scrapes followers lists from Twitter’s REST API.

CA is a modified version of the ca function in the ca package (available on CRAN) that computes simple correspondence analysis with a much lower memory usage.

supplementaryColumns and supplementaryRows take additional columns of a follower matrix and project them to the latent ideological space using the parameters of an already-fitted correspondence analysis model.

getCreated returns the approximate date on which a Twitter account was created based on its Twitter ID. In combination with estimatePastFollowers and estimateDateBreaks, it can be used to infer past Twitter follower networks.
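For readers unfamiliar with correspondence analysis, the idea behind the third stage and the projection functions above can be sketched in base R. This is a minimal illustration, not the package's CA, supplementaryRows, or estimateIdeology2 code: the follower matrix is invented, only the first dimension is kept, and the final step is a plain z-score rather than the normalization used in the article.

```r
# Toy follower matrix: rows are users, columns are accounts (1 = follows).
# All values are invented for illustration.
N <- matrix(c(1, 1, 0, 0,
              1, 1, 1, 0,
              1, 0, 0, 0,
              0, 0, 1, 1,
              0, 1, 1, 1,
              0, 0, 0, 1),
            nrow = 6, byrow = TRUE,
            dimnames = list(paste0("user", 1:6), paste0("account", 1:4)))

P <- N / sum(N)   # correspondence matrix
r <- rowSums(P)   # row masses
m <- colSums(P)   # column masses

# Standardized residuals and their singular value decomposition
S   <- diag(1 / sqrt(r)) %*% (P - r %o% m) %*% diag(1 / sqrt(m))
dec <- svd(S)

# First dimension: column standard coordinates and row principal coordinates
col_std   <- dec$v[, 1] / sqrt(m)
row_coord <- dec$u[, 1] * dec$d[1] / sqrt(r)

# Standardize the user scores to mean zero and standard deviation one
z <- as.numeric(scale(row_coord))

# Project a supplementary user onto the fitted dimension:
# the user's row profile times the column standard coordinates
new_user  <- c(1, 1, 0, 0)   # same follows as user1, so it should land on user1
new_coord <- sum(new_user / sum(new_user) * col_std)
all.equal(unname(row_coord[1]), new_coord)
```

Projecting a new user only needs the column coordinates from the fitted model, so the decomposition does not have to be recomputed for each additional user. Note that the sign of the dimension returned by svd is arbitrary, so in practice it has to be oriented using accounts whose ideology is known.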