Code for CVPR 2019 paper:

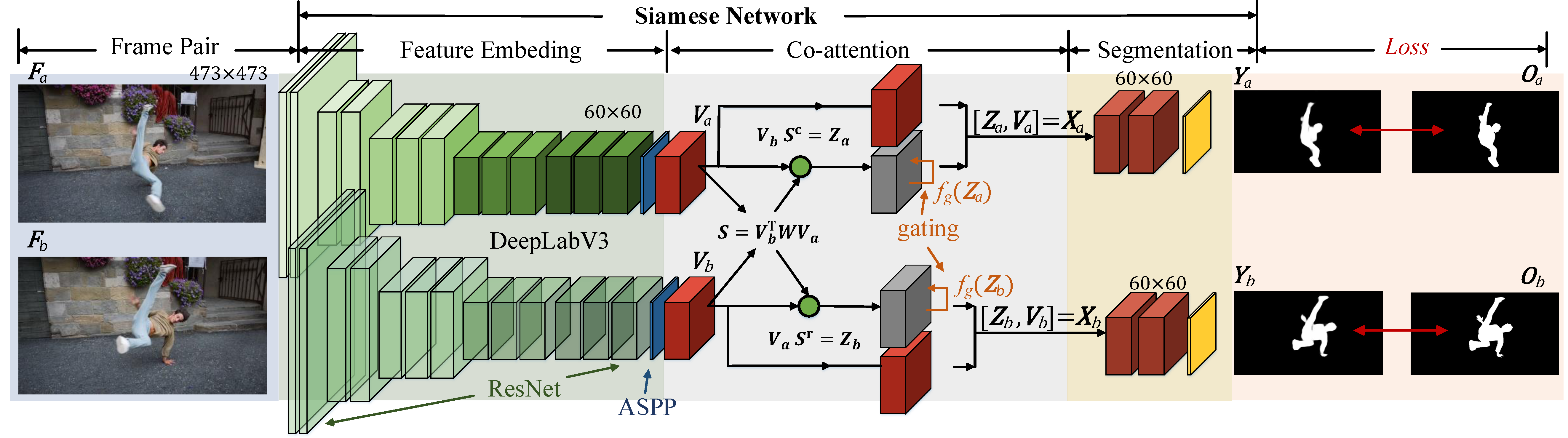

See More, Know More: Unsupervised Video Object Segmentation with Co-Attention Siamese Networks

Xiankai Lu, Wenguan Wang, Chao Ma, Jianbing Shen, Ling Shao, Fatih Porikli

🆕

Our group co-attention achieves a further performance gain (81.1 mean J on the DAVIS-16 dataset); the related code has also been released.

The pre-trained model, testing and training code:

- Install PyTorch (version 1.0.1).

- Download the pretrained model. In 'test_coattention_conf.py', change the DAVIS dataset path, pretrained model path and result path.

- Run command: python test_coattention_conf.py --dataset davis --gpus 0

- The CRF post-processing code comes from: https://github.com/lucasb-eyer/pydensecrf.

The pretrained weights can be downloaded from GoogleDrive or BaiduPan, pass code: xwup.

The segmentation results on DAVIS, FBMS and Youtube-objects can be downloaded from DAVIS_benchmark (https://davischallenge.org/davis2016/soa_compare.html), GoogleDrive or BaiduPan, pass code: q37f.

The Youtube-objects dataset can be downloaded from here, and the annotations can be found here.

The FBMS dataset can be downloaded from here.
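The mean J quoted for DAVIS is the region similarity (Jaccard index, or intersection over union) between a predicted mask and the ground-truth mask, averaged over frames. As a rough illustration only, the sketch below computes it for toy binary masks stored as nested lists. The function names and the toy data are hypothetical and are not part of this repository; reported numbers should come from the official DAVIS evaluation code.

```python
def jaccard(pred, gt):
    """Region similarity J: intersection over union of two binary masks."""
    inter = 0
    union = 0
    for pred_row, gt_row in zip(pred, gt):
        for p, g in zip(pred_row, gt_row):
            inter += 1 if (p and g) else 0
            union += 1 if (p or g) else 0
    # Assumed convention: two empty masks count as a perfect match.
    return 1.0 if union == 0 else inter / union


def mean_jaccard(pred_masks, gt_masks):
    """Average J over the frames of one sequence."""
    scores = [jaccard(p, g) for p, g in zip(pred_masks, gt_masks)]
    return sum(scores) / len(scores)


# Two toy 2x3 frames (1 = foreground, 0 = background).
pred_frames = [
    [[1, 1, 0], [0, 0, 0]],
    [[0, 1, 1], [0, 1, 0]],
]
gt_frames = [
    [[1, 0, 0], [0, 0, 0]],
    [[0, 1, 1], [0, 1, 0]],
]

# Frame 1 scores 1/2, frame 2 scores 3/3, so the mean is 0.75.
print(mean_jaccard(pred_frames, gt_frames))
```

In practice the masks would be loaded from the result PNGs and the dataset annotations, and the per-sequence means would be averaged again over all sequences.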

- Download all the training datasets, including the MSRA10K and DUT saliency datasets. Create a folder called images and put these two datasets into the folder.

- Download the deeplabv3 model from GoogleDrive. Put it into the folder pretrained/deep_labv3.

- Change the video path, image path and deeplabv3 path in train_iteration_conf.py. Create two txt files, one storing the saliency dataset names and one storing the DAVIS16 training sequence names. Change the txt path in PairwiseImg_video.py.

- Run command: python train_iteration_conf.py --dataset davis --gpus 0,1
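The two txt files mentioned in the training steps can be generated with a short script. The sketch below is only one possible way to do it and rests on several assumptions: that each file holds one name per line, that the saliency list contains image file names, and that each DAVIS sequence is a sub-folder. The helper `write_name_list`, the folder layout and the txt file names are all hypothetical; check PairwiseImg_video.py for the format it actually expects.

```python
import os
import tempfile


def write_name_list(source_dir, txt_path, want_dirs):
    """Write the sorted entry names found in source_dir to txt_path, one per line.

    want_dirs=True keeps sub-folders (e.g. one folder per video sequence);
    want_dirs=False keeps everything else (e.g. saliency image files).
    """
    names = sorted(
        entry.name
        for entry in os.scandir(source_dir)
        if entry.is_dir() == want_dirs
    )
    with open(txt_path, "w") as handle:
        handle.write("\n".join(names) + "\n")
    return names


# Toy demonstration in a temporary directory (hypothetical layout).
with tempfile.TemporaryDirectory() as root:
    video_root = os.path.join(root, "DAVIS", "JPEGImages", "480p")
    image_root = os.path.join(root, "images", "saliency")
    for seq in ("bear", "boat"):
        os.makedirs(os.path.join(video_root, seq))
    os.makedirs(image_root)
    for img in ("0001.jpg", "0002.jpg"):
        open(os.path.join(image_root, img), "w").close()

    seq_names = write_name_list(
        video_root, os.path.join(root, "davis_train_seqs.txt"), want_dirs=True
    )
    img_names = write_name_list(
        image_root, os.path.join(root, "saliency_imgs.txt"), want_dirs=False
    )
    print(seq_names, img_names)
```

For real use, replace the temporary directory with the actual dataset folders and point the txt path in PairwiseImg_video.py at the generated files.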

If you find the code and dataset useful in your research, please consider citing:

@InProceedings{Lu_2019_CVPR,
  author = {Lu, Xiankai and Wang, Wenguan and Ma, Chao and Shen, Jianbing and Shao, Ling and Porikli, Fatih},
  title = {See More, Know More: Unsupervised Video Object Segmentation With Co-Attention Siamese Networks},
  booktitle = {The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  year = {2019}
}

@article{lu2020_pami,
  title = {Zero-Shot Video Object Segmentation with Co-Attention Siamese Networks},
  author = {Lu, Xiankai and Wang, Wenguan and Shen, Jianbing and Crandall, David and Luo, Jiebo},
  journal = {IEEE Transactions on Pattern Analysis and Machine Intelligence},
  year = {2020},
  publisher = {IEEE}
}

Saliency-Aware Geodesic Video Object Segmentation (CVPR15)

Learning Unsupervised Video Primary Object Segmentation through Visual Attention (CVPR19)

For any comments, please email: carrierlxk@gmail.com