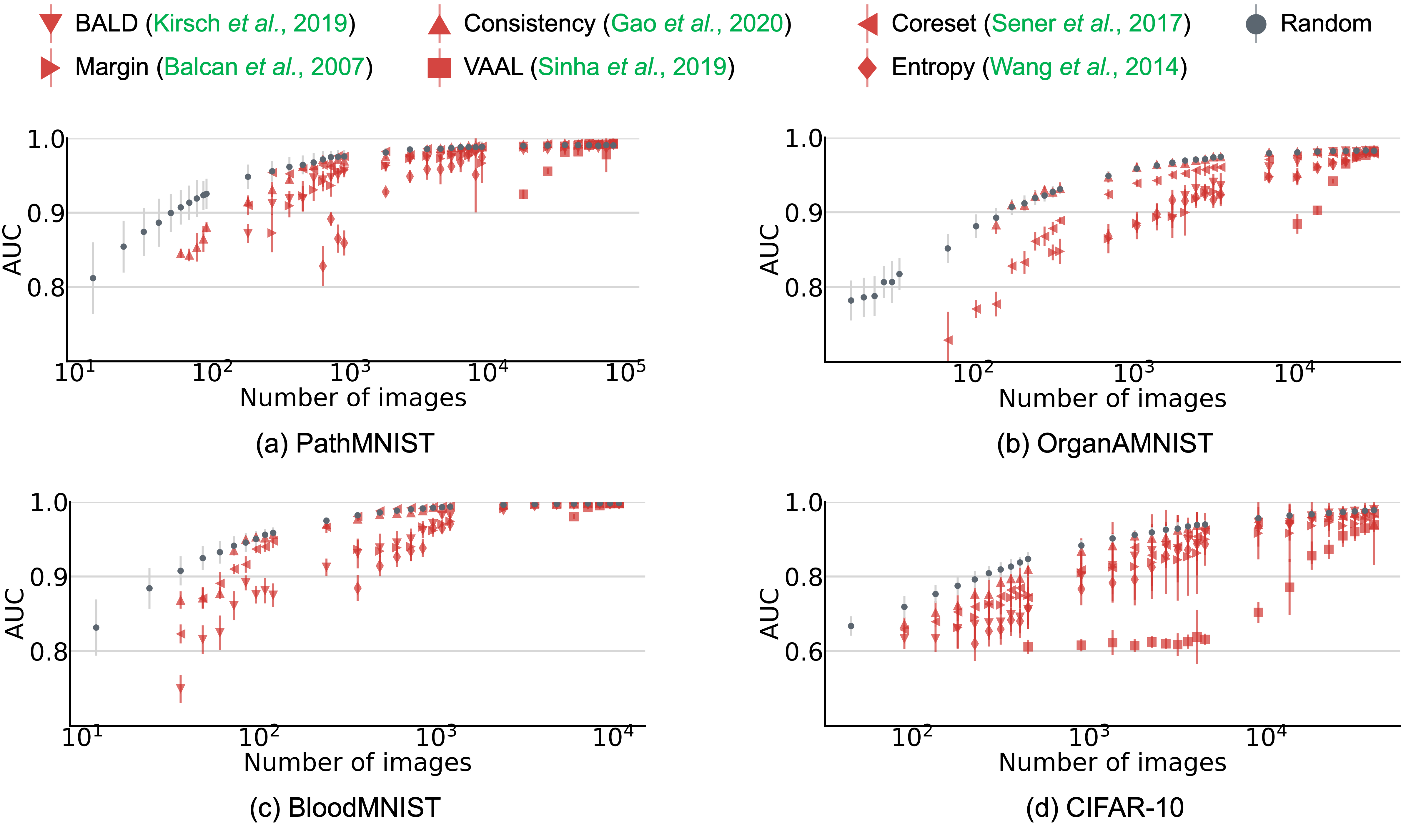

Active learning promises to improve annotation efficiency by iteratively selecting the most important data to annotate first. However, we uncover a striking contradiction to this promise: for the first few choices, active learning selects data less efficiently than random selection. We identify this as the cold start problem in vision active learning. We address it by exploiting three advantages of contrastive learning: (1) no annotation is required; (2) label diversity is ensured by pseudo-labels, which mitigates bias; (3) typical data are identified by contrastive features, which reduces outliers.

This repository provides the official implementation of the following paper:

Making Your First Choice: To Address Cold Start Problem in Vision Active Learning

Liangyu Chen¹, Yutong Bai², Siyu Huang³, Yongyi Lu², Bihan Wen¹, Alan L. Yuille², and Zongwei Zhou²

¹ Nanyang Technological University, ² Johns Hopkins University, ³ Harvard University

Medical Imaging with Deep Learning (MIDL), 2023

NeurIPS Workshop on Human in the Loop Learning, 2022

paper | code | poster

If you find this repo useful, please consider citing our paper:

@article{chen2022making,

title={Making Your First Choice: To Address Cold Start Problem in Vision Active Learning},

author={Chen, Liangyu and Bai, Yutong and Huang, Siyu and Lu, Yongyi and Wen, Bihan and Yuille, Alan L and Zhou, Zongwei},

journal={arXiv preprint arXiv:2210.02442},

year={2022}

}

The selection code is built on open-mmlab/mmselfsup. Please see the mmselfsup installation instructions.

All datasets are downloaded automatically by this repo.

MedMNIST can also be downloaded at MedMNIST v2.

CIFAR-10-LT is generated in this repo with a fixed seed.

Pretrain on all MedMNIST datasets

cd selection

bash tools/medmnist_pretrain.sh

Pretrain on CIFAR-10-LT

bash tools/cifar_pretrain.sh

Select initial queries on all MedMNIST datasets

bash tools/medmnist_postprocess.sh

Select initial queries on CIFAR-10-LT

bash tools/cifar_postprocess.sh

cd training

cd medmnist_active_selection # for selecting with actively selected initial queries

# cd medmnist_random_selection # for selecting with randomly selected initial queries

# cd medmnist_uniform_active_selection # for selecting with class-balanced actively selected initial queries

# cd medmnist_uniform_random_selection # for selecting with class-balanced randomly selected initial queries

for mnist in bloodmnist breastmnist dermamnist octmnist organamnist organcmnist organsmnist pathmnist pneumoniamnist retinamnist tissuemnist; do bash run.sh "$mnist"; done
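To compare all four initial-query strategies, the variant directories and the dataset list above could be combined into one loop. The following is only a sketch, not part of the repository. It assumes it is started from the `training` directory and that every variant directory contains a `run.sh` that takes the dataset name as its only argument. The `DRY_RUN` switch and the `run_all` function are hypothetical names introduced here for illustration.

```shell
#!/usr/bin/env bash
# Sketch: run every initial-query variant on every MedMNIST dataset.
# Assumption: started from training/, and each variant directory has a
# run.sh that accepts the dataset name as its only argument.
set -euo pipefail

variants=(
  medmnist_active_selection
  medmnist_random_selection
  medmnist_uniform_active_selection
  medmnist_uniform_random_selection
)
datasets=(
  bloodmnist breastmnist dermamnist octmnist organamnist organcmnist
  organsmnist pathmnist pneumoniamnist retinamnist tissuemnist
)

# DRY_RUN is a hypothetical switch: 1 (the default) only prints the commands.
DRY_RUN="${DRY_RUN:-1}"

run_all() {
  local variant mnist
  for variant in "${variants[@]}"; do
    for mnist in "${datasets[@]}"; do
      if [ "$DRY_RUN" = "1" ]; then
        echo "(cd $variant && bash run.sh $mnist)"
      else
        # Subshell keeps the cd local to this single run.
        (cd "$variant" && bash run.sh "$mnist")
      fi
    done
  done
}

run_all
```

With the default `DRY_RUN=1` the script only lists the commands it would run, which makes it easy to check the plan before launching it with `DRY_RUN=0`.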