
Spiderpool is a CNCF Landscape Level Project.

Spiderpool is an underlay network solution for Kubernetes that seamlessly integrates existing open-source CNI projects through two lightweight plugins. It streamlines underlay IPAM operations, allowing multiple CNIs to collaborate effectively.

- Spiderpool: an IPAM plugin designed to meet the IP address management requirements of underlay networks. It can allocate underlay IP addresses for various open-source CNI projects.
- metal plugins: provide supporting capabilities such as multi-NIC route coordination, IP conflict detection, host connectivity, and VLAN interface creation.
- multus operator: simplifies the creation of Multus CR instances with best-practice CNI compositions.

Why Spiderpool? There has not yet been a comprehensive, user-friendly, and intelligent open-source solution for the complex IPAM requirements of underlay networks. Spiderpool therefore provides many innovative features:

- Shared and dedicated IP pools, with support for fixed IP address allocation to meet firewall-based security management needs.
- Automated management of dedicated IP pools. Fixed IP addresses are dynamically created, scaled, and reclaimed by monitoring application orchestration events, so no manual maintenance is required.
- IP allocation across multiple NICs, with route coordination between the NICs. This keeps request and reply data paths consistent so that communication works smoothly.
- Collaboration among multiple underlay CNIs, and between overlay and underlay CNIs. This reduces hardware requirements for cluster nodes and optimizes infrastructure resource usage.
- Enhanced connectivity between Pods and hosts, enabling clusterIP access and local health checks, together with IP conflict detection and gateway reachability detection. This makes Macvlan, SR-IOV, and other projects more practical to use.
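The automated management of dedicated IP pools behaves like a reconcile loop. When an application's replica count changes, its pool grows or shrinks to match, and it keeps a few spare addresses for Pods launched during rolling updates. The Python sketch below models that idea in memory. `Subnet`, `AutoPool`, the size of the `redundant` buffer, and the lowest-address-first policy are all assumptions made for illustration. They do not describe Spiderpool's actual controller logic.

```python
import ipaddress


class Subnet:
    """Hypothetical subnet object that hands out and takes back free host IPs."""

    def __init__(self, cidr: str):
        self.free = list(ipaddress.ip_network(cidr).hosts())

    def take(self):
        if not self.free:
            raise RuntimeError("subnet exhausted")
        return self.free.pop(0)  # lowest free address first

    def give_back(self, ip):
        self.free.append(ip)
        self.free.sort()


class AutoPool:
    """Hypothetical per-application pool sized to replicas plus a redundancy buffer."""

    def __init__(self, subnet: Subnet, redundant: int = 1):
        self.subnet = subnet
        self.redundant = redundant  # spare IPs kept for Pods started during rollouts
        self.ips = []

    def reconcile(self, replicas: int, in_use=frozenset()):
        """React to a replica-count change by growing or shrinking the pool."""
        desired = replicas + self.redundant
        while len(self.ips) < desired:  # scale up from the subnet
            self.ips.append(self.subnet.take())
        for ip in sorted(self.ips, reverse=True):  # scale down, highest IPs first
            if len(self.ips) <= desired:
                break
            if ip not in in_use:  # never reclaim an address a running Pod still holds
                self.ips.remove(ip)
                self.subnet.give_back(ip)
        return [str(ip) for ip in self.ips]


if __name__ == "__main__":
    pool = AutoPool(Subnet("10.6.0.0/29"), redundant=1)
    print(pool.reconcile(replicas=3))  # ['10.6.0.1', '10.6.0.2', '10.6.0.3', '10.6.0.4']
    print(pool.reconcile(replicas=1, in_use={ipaddress.ip_address("10.6.0.1")}))  # ['10.6.0.1', '10.6.0.2']
```

When the pool scales down, it skips every address listed in `in_use`. A running Pod therefore never loses its IP, and addresses that are no longer needed return to the subnet for other applications.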

Cloud-native networking relies on two broad technologies: overlay networks and underlay networks. Neither term has a strict definition, but their characteristics can be abstracted from many CNI projects. The two technologies meet the needs of different scenarios.

The article provides a brief comparison of IPAM and network performance between the two technologies, offering better insight into Spiderpool's unique features and use cases.

Why underlay network solutions? In data center scenarios, the following requirements call for underlay network solutions:

- Low-latency applications need the lower latency and higher throughput that underlay networks provide.
- Traditional host applications that are first being migrated to the cloud keep using traditional networking methods. These include their existing service exposure and discovery mechanisms and multiple subnets.
- Data center network management requires security controls such as firewalls and VLAN isolation. It also relies on traditional network observability techniques to monitor the cluster network.
- Independent host network interfaces guarantee bandwidth isolation on the underlying subnet. Workloads such as KubeVirt, storage, and logging then get dedicated bandwidth for transferring data.

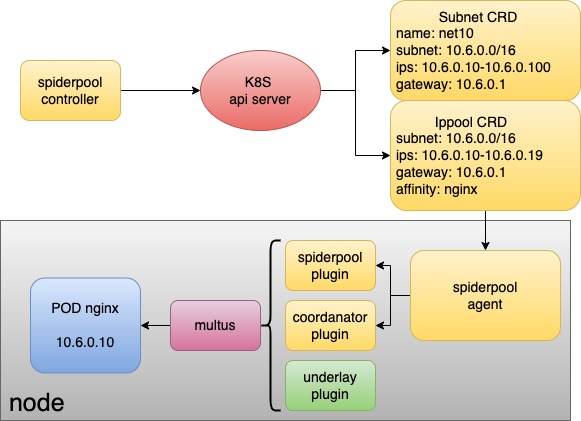

Spiderpool consists of the following components:

- Spiderpool controller: a Deployment that handles CRD validation, status updates, IP reclamation, and automated IP pools.
- Spiderpool agent: a DaemonSet that assists the Spiderpool plugin with IP allocation and the coordinator plugin with information synchronization.
- Spiderpool plugin: a binary plugin on each host that CNIs call to allocate IP addresses.
- coordinator plugin: a binary plugin on each host that CNIs call for multi-NIC route coordination, IP conflict detection, and host connectivity.
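The CNI specification defines how a main plugin such as Macvlan CNI delegates IP allocation to an IPAM plugin. The main plugin executes the IPAM binary with `CNI_COMMAND` and the other `CNI_*` environment variables set. It passes the network configuration on stdin and reads a JSON result from stdout. The Python sketch below shows only that contract. The hardcoded address, the `run()` helper, and the single supported version are assumptions made for illustration. The real Spiderpool plugin is a binary that obtains addresses with the help of the Spiderpool agent.

```python
import json
import os
import sys

# Hypothetical fixed answer; a real IPAM plugin would pick an address from a pool.
EXAMPLE_IP = {"address": "10.6.0.10/16", "gateway": "10.6.0.1"}


def run(command: str, stdin_text: str):
    """Handle one CNI IPAM invocation and return (exit code, stdout text)."""
    netconf = json.loads(stdin_text)
    version = netconf.get("cniVersion", "1.0.0")
    if command == "ADD":
        return 0, json.dumps({"cniVersion": version, "ips": [EXAMPLE_IP]})
    if command in ("DEL", "CHECK"):
        return 0, ""  # nothing to release or verify in this toy example
    if command == "VERSION":
        return 0, json.dumps({"cniVersion": version, "supportedVersions": ["1.0.0"]})
    # Error code 4 means "invalid necessary environment variables" in the CNI spec.
    error = {"cniVersion": version, "code": 4, "msg": f"unknown CNI_COMMAND {command!r}"}
    return 1, json.dumps(error)


if __name__ == "__main__":
    code, out = run(os.environ.get("CNI_COMMAND", ""), sys.stdin.read())
    sys.stdout.write(out)
    sys.exit(code)
```

Because the plugin is an executable rather than a library, any CNI that supports third-party IPAM can delegate to it without code changes. Only the `ipam` section of its network configuration needs to change.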

On top of its own components, Spiderpool relies on open-source underlay CNIs to allocate network interfaces to Pods. You can use Multus CNI to manage multiple NICs and CNI configurations.

Any CNI project compatible with third-party IPAM plugins can work well with Spiderpool, such as:

Macvlan CNI, vlan CNI, ipvlan CNI, SR-IOV CNI, ovs CNI, Multus CNI, Calico CNI, Weave CNI

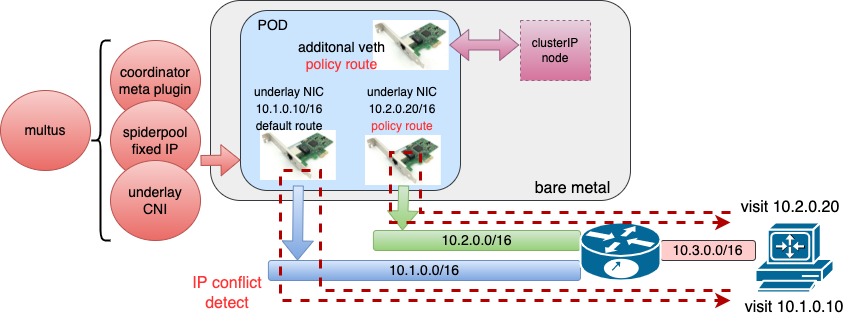

In underlay networks, Spiderpool can work with underlay CNIs such as Macvlan CNI and SR-IOV CNI to provide the following benefits:

- Rich IPAM capabilities for underlay CNIs, including shared/fixed IPs, multi-NIC IP allocation, and dual-stack support.
- One or more underlay NICs per Pod, with route coordination between the NICs. This keeps request and reply data paths consistent so that communication works smoothly.
- Enhanced connectivity between hosts and the Pods that use open-source underlay CNIs, through an additional veth network interface and route control. This enables clusterIP access, local health checks of applications, and more.
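A common way to keep request and reply paths consistent across several NICs is source-based policy routing. Each extra NIC gets its own routing table, plus an `ip rule` that sends traffic sourced from that NIC's address to its table. The sketch below only generates the corresponding iproute2 commands as strings. The NIC names, addresses, table numbers starting at 100, and the `Nic`/`policy_routes()` helpers are all hypothetical. They do not describe how the coordinator plugin is implemented.

```python
from dataclasses import dataclass


@dataclass
class Nic:
    name: str     # interface name inside the Pod, e.g. "net1"
    ip: str       # address allocated by IPAM
    subnet: str   # CIDR the address belongs to
    gateway: str  # underlay gateway for that subnet


def policy_routes(nics, first_table=100):
    """Return iproute2 commands that pin each extra NIC's replies to that NIC.

    The first NIC keeps the default route in the main table. Every other NIC
    gets a dedicated table and a source-based rule, so packets sourced from
    its IP always leave through the NIC that received the request.
    """
    default, *extra = nics
    cmds = [f"ip route replace default via {default.gateway} dev {default.name}"]
    for offset, nic in enumerate(extra):
        table = first_table + offset
        cmds += [
            f"ip route replace {nic.subnet} dev {nic.name} src {nic.ip} table {table}",
            f"ip route replace default via {nic.gateway} dev {nic.name} table {table}",
            f"ip rule add from {nic.ip} lookup {table}",
        ]
    return cmds


if __name__ == "__main__":
    for cmd in policy_routes([
        Nic("eth0", "10.6.0.10", "10.6.0.0/24", "10.6.0.1"),
        Nic("net1", "10.7.0.10", "10.7.0.0/24", "10.7.0.1"),
    ]):
        print(cmd)
```

Without the per-NIC rule, a reply to a request that arrived on `net1` would follow the main table's default route out of `eth0`. Upstream firewalls or reverse-path filtering would then typically drop it as asymmetric traffic.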

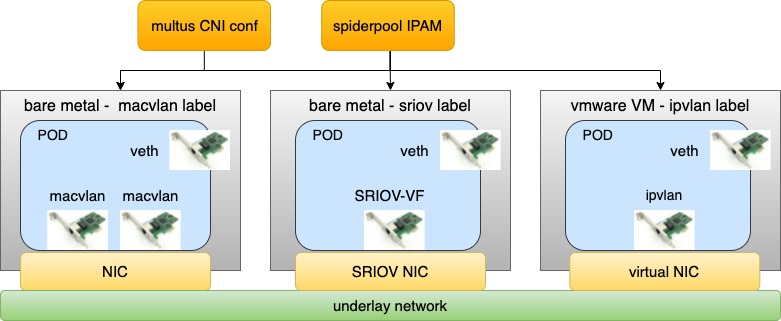

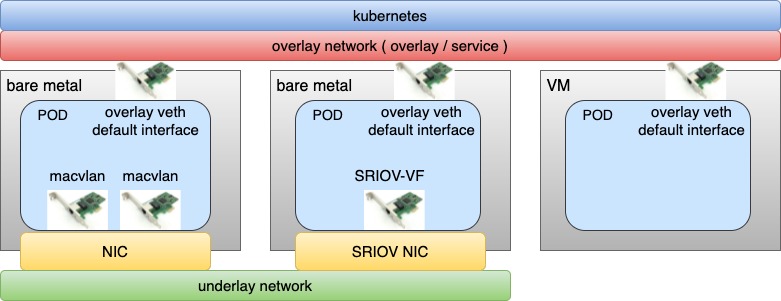

How can containers be deployed with a single underlay CNI when a cluster has heterogeneous underlying infrastructure?

- Some nodes in the cluster are virtual machines (for example, on VMware) without promiscuous mode enabled, while others are bare-metal machines connected to traditional switch networks. Which CNI solution should be deployed on each type of node?
- Some bare-metal nodes have only one SR-IOV high-speed NIC, which provides 64 VFs. How can more than 64 Pods run on such a node?
- Some bare-metal nodes have an SR-IOV high-speed NIC capable of running low-latency applications, while others have only ordinary network cards for running regular applications. Which CNI solution should be deployed on each type of node?

Multiple underlay CNIs can be deployed side by side through Multus CNI configuration, combined with Spiderpool's IPAM capabilities. This integrates the resources of heterogeneous nodes across the cluster and solves these problems.

For example, as shown in the diagram above, nodes with different networking capabilities can use different underlay CNIs:

- SR-IOV CNI on nodes with SR-IOV network cards.
- Macvlan CNI on nodes with ordinary network cards.
- ipvlan CNI on nodes with restricted network access, e.g., VMware virtual machines with limited layer 2 forwarding.
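The per-node choice in the example above can be written as a small decision rule. Macvlan gives each Pod interface its own MAC address on the parent NIC. A virtual switch without promiscuous mode typically drops frames for MAC addresses it does not know. ipvlan avoids this because its Pod interfaces share the parent NIC's MAC address. The Python sketch below encodes that rule. The `Node` fields, the CNI labels, and the `plan()` grouping are hypothetical. In a real cluster, each group would map to its own Multus network configuration scheduled onto the matching nodes.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    has_sriov_nic: bool       # node exposes SR-IOV VFs
    allows_promiscuous: bool  # False on e.g. VMware VMs without promiscuous mode


def choose_underlay_cni(node: Node) -> str:
    """Pick an underlay CNI for a node following the example above (hypothetical rule)."""
    if node.has_sriov_nic:
        return "sriov"    # low-latency workloads get VFs directly
    if node.allows_promiscuous:
        return "macvlan"  # each Pod interface gets its own MAC on the parent NIC
    return "ipvlan"       # Pod interfaces share the parent NIC's MAC address


def plan(nodes):
    """Group node names by the underlay CNI they should run."""
    groups = {}
    for node in nodes:
        groups.setdefault(choose_underlay_cni(node), []).append(node.name)
    return groups


if __name__ == "__main__":
    cluster = [
        Node("bm-1", has_sriov_nic=True, allows_promiscuous=True),
        Node("bm-2", has_sriov_nic=False, allows_promiscuous=True),
        Node("vm-1", has_sriov_nic=False, allows_promiscuous=False),
    ]
    print(plan(cluster))  # {'sriov': ['bm-1'], 'macvlan': ['bm-2'], 'ipvlan': ['vm-1']}
```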

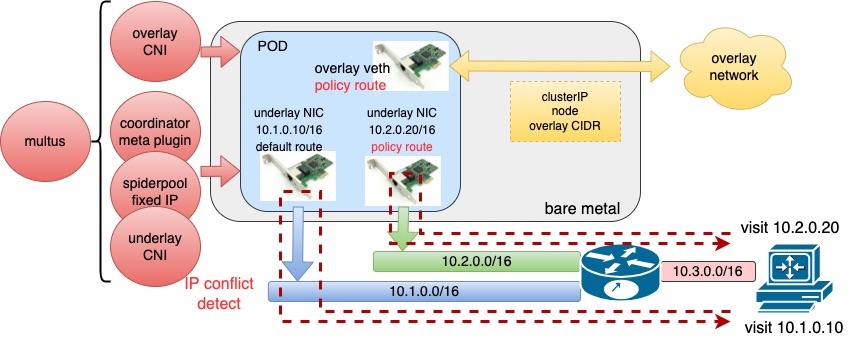

In overlay networks, Spiderpool uses Multus to add an overlay NIC (such as Calico or Cilium) and multiple underlay NICs (such as Macvlan CNI or SR-IOV CNI) to each Pod. This offers several benefits:

- Rich IPAM features for underlay CNIs, including shared/fixed IPs, multi-NIC IP allocation, and dual-stack support.
- Route coordination between the Pod's overlay NIC and its underlay NICs. This keeps request and reply data paths consistent for smooth communication.
- The overlay NIC serves as the default NIC with coordinated routes, and local host connectivity is enabled for clusterIP access and local health checks of applications. Overlay traffic is forwarded through the overlay network, while underlay traffic is forwarded through the underlay network.
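With the overlay NIC as the default, ordinary longest-prefix routing decides which NIC a packet leaves through. Destinations in the underlay subnet match a more specific route via the underlay NIC, and everything else falls through to the overlay default route. The Python sketch below models one such routing table. The interface names `eth0`/`net1`, the `10.6.0.0/16` subnet, and the `egress_nic()` helper are hypothetical. Real Pods may also use source-based policy rules in addition to the main table.

```python
import ipaddress

# Hypothetical Pod routing table: the overlay NIC eth0 holds the default route,
# and the underlay subnet is routed out of the underlay NIC net1.
ROUTES = [
    (ipaddress.ip_network("0.0.0.0/0"), "eth0"),    # overlay NIC (default)
    (ipaddress.ip_network("10.6.0.0/16"), "net1"),  # underlay subnet
]


def egress_nic(dst: str, routes=ROUTES) -> str:
    """Return the NIC picked by longest-prefix match within a single routing table."""
    addr = ipaddress.ip_address(dst)
    matches = [(net, nic) for net, nic in routes if addr in net]
    if not matches:
        raise LookupError(f"no route to {dst}")
    return max(matches, key=lambda match: match[0].prefixlen)[1]


if __name__ == "__main__":
    print(egress_nic("10.6.3.7"))    # net1: underlay peer, stays on the underlay network
    print(egress_nic("10.233.0.1"))  # eth0: outside the underlay subnet, takes the overlay default
```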

The integration of Multus CNI and Spiderpool IPAM lets an overlay CNI and multiple underlay CNIs work together. For example, consider a cluster whose nodes have varying network capabilities. Pods on bare-metal nodes can be attached to both overlay and underlay NICs. Pods on virtual machine nodes that serve only east-west traffic are attached only to the overlay NIC. This approach provides several benefits:

- Applications providing east-west services can be restricted to an overlay NIC, while applications providing north-south services get both overlay and underlay NICs. This reduces underlay IP usage and manual maintenance costs while preserving Pod connectivity within the cluster.
- Resources from virtual machines and bare-metal nodes are fully integrated.

If you want to start some Pods with Spiderpool in minutes, refer to Quick start.

- Create multiple underlay subnets

  The administrator can create multiple subnet objects, one for each underlay CIDR. Applications can then be assigned IP addresses from different subnets to meet the complex planning of underlay networks. See example for more details.

- Automated IP pools for applications requiring static IPs

  To provide static IP addresses, some open-source projects require IP addresses to be hardcoded in the application's annotations. This is prone to operational mistakes and to IP address conflicts caused by manual operations, and it raises IP management costs as applications scale. Spiderpool's CRD-based IP pool management automates the creation, deletion, and scaling of fixed IPs to minimize operational burdens.

  - For stateless applications, the IP address range can be fixed automatically, and IP resources can be scaled dynamically according to the number of application replicas. See example for more details.
  - For stateful applications, IP addresses can be fixed automatically for each Pod, and the overall IP scaling range can be fixed as well. See example for more details.
  - The automated IP pool keeps a certain number of redundant IP addresses available, so that newly launched Pods can obtain temporary IP addresses during rolling updates. See example for more details.
  - Support for third-party application controllers based on operators and other mechanisms. See example for details.


- Manual IP pools enable administrators to customize fixed IP addresses, helping applications maintain consistent IP addresses. See example for details.

- Applications that do not require static IP addresses can share an IP pool. See example for details.

- When an application is deployed across different underlay subnets, Spiderpool can assign it IP addresses from each of those subnets. See example for details.

- Multiple IP pools can be set for a Pod to provide backup IP resources. See example for details.

- Global reserved IPs can be set so that they are never assigned to Pods. This prevents misuse of IP addresses already used by other hosts on the network. See example for details.

- IP addresses from different subnets can be assigned to a Pod with multiple NICs. See example for details.

- IP pools can be shared by the whole cluster or bound to a specified namespace. See example for details.

- An additional plugin, veth, provided by Spiderpool offers the following features:

  - Helps CNI plugins such as Macvlan CNI, vlan CNI, ipvlan CNI, SR-IOV CNI, and ovs CNI support clusterIP access and Pod health checks. See example for details.
  - Coordinates the routes of each NIC for Pods that have multiple NICs assigned by Multus. See example for details.

    For scenarios involving multiple underlay NICs, please refer to the example.

    For scenarios involving one overlay NIC and multiple underlay NICs, please refer to the example.

- To ensure successful Pod communication, IP address conflict detection and gateway reachability detection can be performed in the Pod's network namespace during Pod initialization. See the example for more details.

- A well-designed IP reclaim mechanism maximizes the availability of IP resources. See the example for more information.

- Administrators can specify custom routes. See example for details.

- Compared with other open-source projects in the community, Spiderpool shows outstanding performance in assigning and releasing Pod IPs. This is showcased in the test report, which covers multiple IPv4 and IPv6 scenarios:

  - Fast allocation and release of static IPs during large-scale creation, restart, and deletion of applications
  - Fast IP acquisition for applications recovering from downtime or a cluster host reboot


- All of the above features work in IPv4-only, IPv6-only, and dual-stack scenarios. See example for details.

- Supports AMD64 and ARM64.

- Spiderpool prevents IP leakage and conflicts that could otherwise result from operational errors or concurrent administrative actions.
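Several of the IP pool features listed above can be modeled together in a small allocator. These are shared and namespace-bound pools, backup pools tried in order, global reserved IPs, and a fixed IP per stateful Pod. The Python sketch below is a toy in-memory model. The `IPPool` and `Allocator` names, the lowest-free-address policy, and the `namespace/pod` keys are all hypothetical. The sketch also ignores the concurrency, persistence, and CRD validation that a real controller must handle.

```python
import ipaddress
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class IPPool:
    """Hypothetical pool model; field names are illustrative, not Spiderpool's CRD schema."""
    name: str
    cidr: str
    namespace: Optional[str] = None  # None means the pool is shared by the whole cluster
    allocated: dict = field(default_factory=dict)  # IP address -> "namespace/pod"


class Allocator:
    def __init__(self, reserved=()):
        # Global reserved IPs are never handed out, whatever pool they fall in.
        self.reserved = {ipaddress.ip_address(ip) for ip in reserved}
        # Stateful Pods keep their IP across restarts: "namespace/pod" -> (ip, pool).
        self.sticky = {}

    def allocate(self, pod, namespace, pools, stateful=False):
        """Return (ip, pool name). Pools are tried in order; later ones act as backups."""
        key = f"{namespace}/{pod}"
        if stateful and key in self.sticky:
            ip, pool = self.sticky[key]
            pool.allocated[ip] = key
            return str(ip), pool.name
        pinned = {ip for ip, _ in self.sticky.values()}  # held for stateful Pods
        for pool in pools:
            if pool.namespace not in (None, namespace):
                continue  # pool is bound to a different namespace
            for ip in ipaddress.ip_network(pool.cidr).hosts():
                if ip in self.reserved or ip in pool.allocated or ip in pinned:
                    continue
                pool.allocated[ip] = key
                if stateful:
                    self.sticky[key] = (ip, pool)
                return str(ip), pool.name
        raise RuntimeError(f"no free IP for {key}")

    def release(self, pod, namespace, pools):
        """Free the Pod's addresses; a stateful Pod's IP stays pinned for its return."""
        key = f"{namespace}/{pod}"
        for pool in pools:
            for ip, owner in list(pool.allocated.items()):
                if owner == key:
                    del pool.allocated[ip]


if __name__ == "__main__":
    pools = [IPPool("shared", "10.6.0.0/30"), IPPool("backup", "10.7.0.0/30")]
    alloc = Allocator(reserved=["10.6.0.1"])
    print(alloc.allocate("web-0", "default", pools, stateful=True))  # ('10.6.0.2', 'shared')
    print(alloc.allocate("web-1", "default", pools))  # ('10.7.0.1', 'backup')
```

In this model, a stateful Pod that restarts gets its previous address back, because other Pods skip pinned addresses even while the Pod is gone.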

Spiderpool is licensed under the Apache License, Version 2.0. See LICENSE for the full license text.