Yan Zeng*, Hanbo Zhang*, Jiani Zheng*, Jiangnan Xia, Guoqiang Wei, Yang Wei, Yuchen Zhang, Tao Kong

*Equal Contribution

update

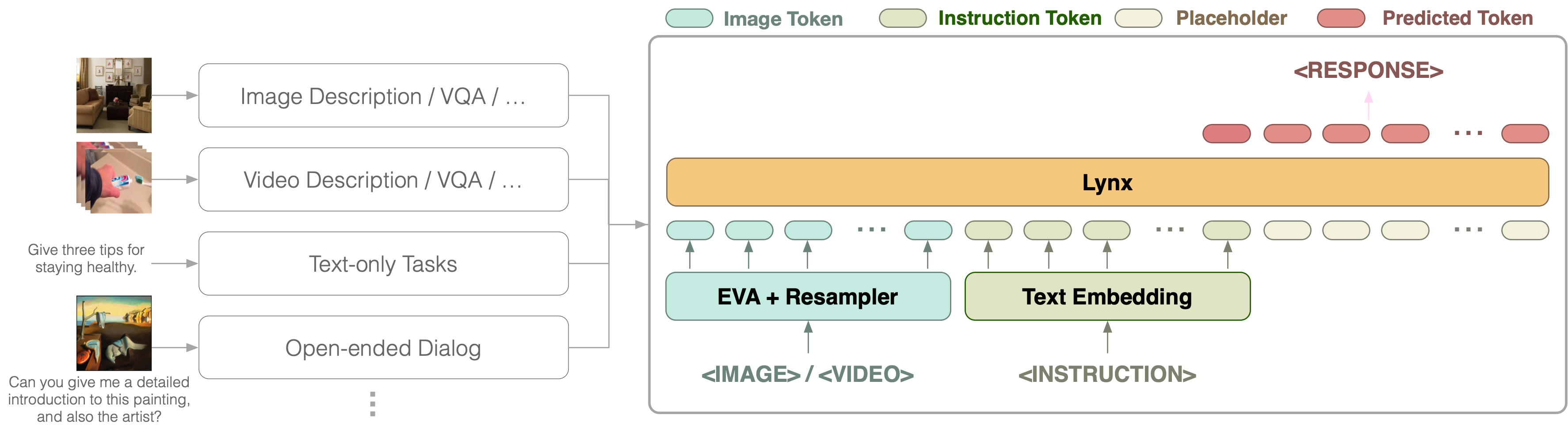

Lynx (8B parameters):

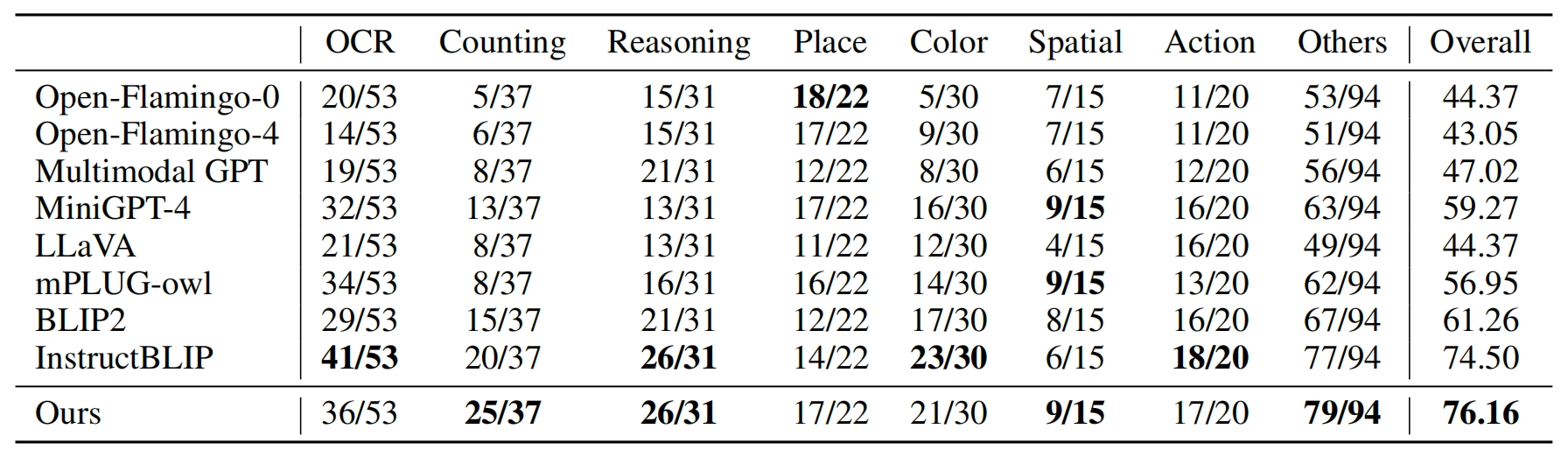

Results on the Open-VQA image test sets

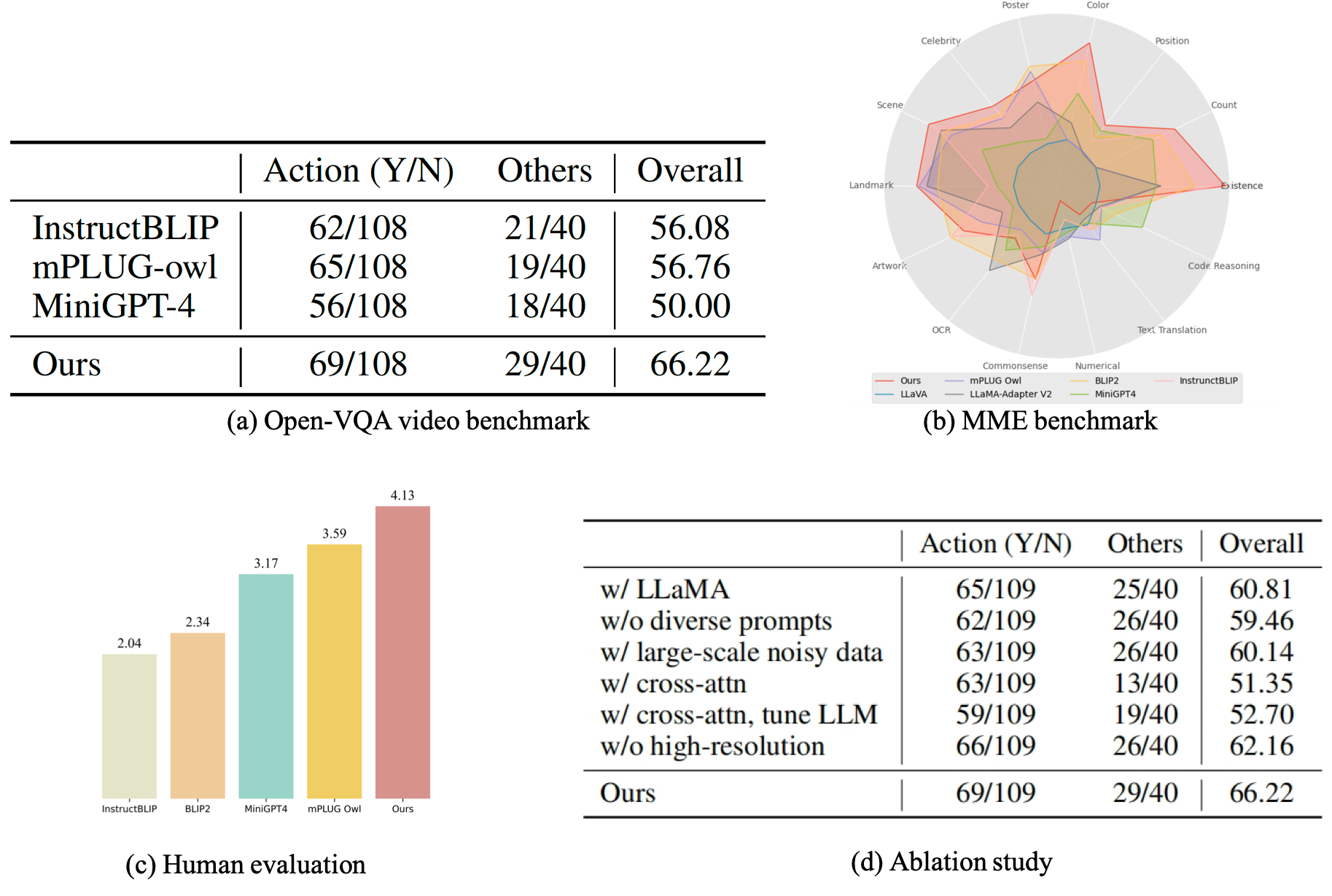

Results on the Open-VQA video test sets, OwlEval human evaluation, and the MME benchmark

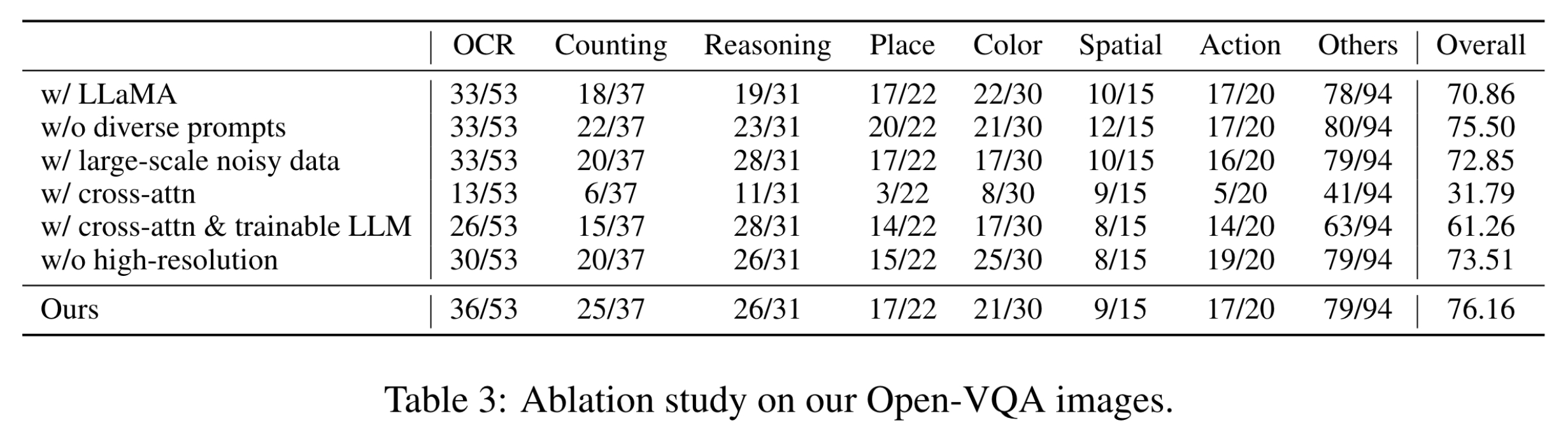

Ablation results

    conda env create -f environment.yml
    conda activate lynx

The Open-VQA annotation files are `data/Open_VQA_images.jsonl` and `data/Open_VQA_videos.jsonl`. Each line is one JSON object, for example:

    {
      "dataset": "Open_VQA_images",  # the dataset name of your data
      "question": "What is in the image?",
      "answer": ["platform or tunnel"],  # list
      "index": 1,
      "image": "images/places365/val_256/Places365_val_00000698.jpg",  # relative path of image
      "origin_dataset": "places365",
      "class": "Place",  # eight image VQA types and two video VQA types correspond to the open_VQA dataset
    }

You can also convert your own data to jsonl format; the keys `origin_dataset` and `class` are optional.
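As a rough illustration of converting your own data, the sketch below checks a record and serializes it as one JSON line. The helper names (`to_jsonl_line`, `write_jsonl`) are hypothetical and not part of this repository, and treating every key other than `origin_dataset` and `class` as required is an assumption drawn from the example above. Pass `vision_key="video"` for video records.

```python
import json

# Assumption: all keys from the example except origin_dataset and class are required.
BASE_KEYS = ("dataset", "question", "answer", "index")


def to_jsonl_line(record, vision_key="image"):
    """Check one record and return it as a single JSON line (no newline)."""
    missing = [k for k in BASE_KEYS + (vision_key,) if k not in record]
    if missing:
        raise ValueError(f"missing keys: {missing}")
    if not isinstance(record["answer"], list):
        raise ValueError("'answer' must be a list")
    return json.dumps(record, ensure_ascii=False)


def write_jsonl(records, path, vision_key="image"):
    """Write records to `path`, one JSON object per line."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            f.write(to_jsonl_line(record, vision_key) + "\n")


if __name__ == "__main__":
    example = {
        "dataset": "my_dataset",  # hypothetical dataset name
        "question": "What is in the image?",
        "answer": ["platform or tunnel"],
        "index": 1,
        "image": "images/places365/val_256/Places365_val_00000698.jpg",
    }
    print(to_jsonl_line(example))
```

Note that the inline `#` comments in the example above are annotations only; a real jsonl file must contain plain JSON on each line.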

Download the raw images and videos from the corresponding websites: Places365(256x256), VQAv2, OCRVQA, Something-Something-v.2, MSVD-QA, NeXT-QA and MSRVTT-QA.

You need to check some important settings in the config `configs/LYNX.yaml`, for example:

    # change this prompt for different task, this is the default prompt
    prompt: "User: {question}\nBot:"
    # the key must match the vision key in test_files
    # if you test Open_VQA_videos.jsonl, need to change to "video"
    vision_prompt_dict: "image"
    output_prompt_dict: "answer"

- step 1: download `eva_vit_1b` from the official website, put it under `data/`, and rename it to `eva_vit_g.pth`
- step 2: prepare `vicuna-7b` and put it under `data/`
  - method 1: download it from huggingface directly.
  - method 2:
    - download Vicuna's delta weight from the v1.1 version (use git-lfs)
    - get `LLaMA-7b` from here or from the Internet.
    - install FastChat: `pip install git+https://github.com/lm-sys/FastChat.git`
    - run `python -m fastchat.model.apply_delta --base /path/to/llama-7b-hf/ --target ./data/vicuna-7b/ --delta /path/to/vicuna-7b-delta-v1.1/`
- step 3: download `pretrain_lynx.pt` or `finetune_lynx.pt` and put it under `data/` (please check that the `checkpoint` in the config matches the file you downloaded.)
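To double-check that the `checkpoint` entry in the config points at a file you actually downloaded, something like the following sketch may help. It assumes the config contains a single-line entry of the form `checkpoint: <path>` and that the path is relative to the directory you run from; both are assumptions, since the full `configs/LYNX.yaml` is not shown here. The function names are hypothetical.

```python
import os


def read_checkpoint_path(config_text):
    """Return the value of the first `checkpoint:` line, or None if absent.

    Assumption: the entry is a plain single-line scalar; a '#' starts a comment.
    """
    for line in config_text.splitlines():
        stripped = line.split("#", 1)[0].strip()
        if stripped.startswith("checkpoint:"):
            value = stripped[len("checkpoint:"):].strip()
            return value.strip("'\"")
    return None


def check_checkpoint(config_path):
    """Report whether the checkpoint named in the config exists on disk."""
    with open(config_path, encoding="utf-8") as f:
        ckpt = read_checkpoint_path(f.read())
    if ckpt is None:
        return "no 'checkpoint' entry found"
    status = "found" if os.path.isfile(ckpt) else "MISSING"
    return f"{ckpt}: {status}"
```

Calling `check_checkpoint("configs/LYNX.yaml")` from the repository root then reports either `found` or `MISSING` for the configured file.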

Organize the files like this:

    lynx-llm/
        data/
            Open_VQA_images.jsonl
            Open_VQA_videos.jsonl
            eva_vit_g.pth
            vicuna-7b/
            finetune_lynx.pt
            pretrain_lynx.pt
        images/
            vqav2/val2014/*.jpg
            places365/val_256/*.jpg
            ocrvqa/images/*.jpg
            sthsthv2cap/val/*.mp4
            msvdqa/test/*.mp4
            nextqa/*.mp4
            msrvttqa/*.mp4
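A quick way to confirm the `data/` part of this layout before running anything is sketched below. The script is not part of the repository; `missing_paths` is a hypothetical helper, and it assumes that only one of the two Lynx checkpoints needs to be present, as step 3 suggests.

```python
import os

# Entries that should exist under the repository root.
EXPECTED = [
    "data/Open_VQA_images.jsonl",
    "data/Open_VQA_videos.jsonl",
    "data/eva_vit_g.pth",
    "data/vicuna-7b",
]
# Assumption: having either checkpoint is enough.
CHECKPOINTS = ["data/finetune_lynx.pt", "data/pretrain_lynx.pt"]


def missing_paths(root):
    """Return the expected entries that are absent under `root`."""
    missing = [p for p in EXPECTED if not os.path.exists(os.path.join(root, p))]
    if not any(os.path.exists(os.path.join(root, p)) for p in CHECKPOINTS):
        missing.append(" or ".join(CHECKPOINTS))
    return missing


if __name__ == "__main__":
    for path in missing_paths("."):
        print("missing:", path)
```

Run it from the `lynx-llm/` directory; it prints nothing when every expected entry is in place.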

    sh generate.sh
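Generation relies on the `prompt` template from `configs/LYNX.yaml`. To get a feel for what the default template expands to, here is a minimal sketch. It assumes the `{question}` placeholder is filled by ordinary string formatting, which this README does not confirm, and `build_prompt` is a hypothetical name.

```python
# Default template from configs/LYNX.yaml; in a double-quoted YAML string,
# "\n" is a real newline, which matches the Python literal below.
DEFAULT_PROMPT = "User: {question}\nBot:"


def build_prompt(question, template=DEFAULT_PROMPT):
    """Fill the {question} placeholder (assumed to use str.format semantics)."""
    return template.format(question=question)


if __name__ == "__main__":
    print(build_prompt("What is in the image?"))
```

If you change the template for a different task, keep the `{question}` placeholder so the question text still has somewhere to go.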

If you find this repository useful, please consider giving it a ⭐ or citing:

    @article{zeng2023matters,
      title={What Matters in Training a GPT4-Style Language Model with Multimodal Inputs?},
      author={Zeng, Yan and Zhang, Hanbo and Zheng, Jiani and Xia, Jiangnan and Wei, Guoqiang and Wei, Yang and Zhang, Yuchen and Kong, Tao},
      journal={arXiv preprint arXiv:2307.02469},
      year={2023}
    }

For issues using this code, please submit a GitHub issue.

This project is licensed under the Apache-2.0 License.