We are excited to release a new video-text benchmark and extensible code for multi-shot video understanding. The 20k version of our dataset includes detailed long summaries for 20k videos and shot captions for 80k video shots.

Stay tuned for more data releases and new features!

🌟 Update (16/12/2023): Paper and Demo for SUM-shot model. It showcases the power and versatility of detailed and grounded video summaries. Dive into the demo and share your experiences with us! Chat-SUM-shot is on the way! Stay tuned!🎥📝🚀

🌟 Update (12/12/2023): Code for video summarization and shot captioning, in the sub-directory code of this repo. Dive into these new features and share your experiences with us! 🎥📝🚀

🌟 Update (30/11/2023): Data of Shot2Story-20K. Check them out and stay tuned for more exciting updates! 💫🚀

We built a demo for the SUM-shot model, hosted in Space. Please have a look and explore what it is capable of. Issues are welcome! The Chat-SUM-shot model is on the way!

Some hints for playing with our demo:

- 🎉 Start with our provided demo videos, some of which are sampled from ActivityNet, not included in our training data.

- 🚀 Please upload videos smaller than 20MB. Enjoy!

- 😄 For a more comprehensive understanding, try specifying reasonable starting and ending timestamps for the shots. Enjoy!

- 😄 Set the temperature to 0.1 for the most grounded understanding and question-answering.

- 😄 Set the temperature to a greater value for more creative understanding and question-answering.
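To illustrate why a low temperature gives more grounded answers and a higher one gives more varied answers, here is a minimal sketch of temperature-scaled sampling. It is not the demo's implementation: the function names and the toy scores are assumptions made for illustration only.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; low temperature sharpens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(logits, temperature, rng):
    """Draw one token index according to the temperature-scaled distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


# Made-up scores for three candidate tokens (illustrative, not model output).
toy_logits = [2.0, 1.0, 0.5]

low = softmax_with_temperature(toy_logits, 0.1)   # nearly all mass on the top token
high = softmax_with_temperature(toy_logits, 1.5)  # mass spread across candidates

print([round(p, 3) for p in low])
print([round(p, 3) for p in high])
print(sample_token(toy_logits, 0.1, random.Random(0)))
```

At temperature 0.1 the top-scoring token dominates, so repeated runs give nearly the same answer; at higher values lower-scoring tokens are sampled more often.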

Multi-round conversation analyzing a humorous video:

demo3.mp4

Multiple-step minutes-long video analysis:

demo_multistep.mov

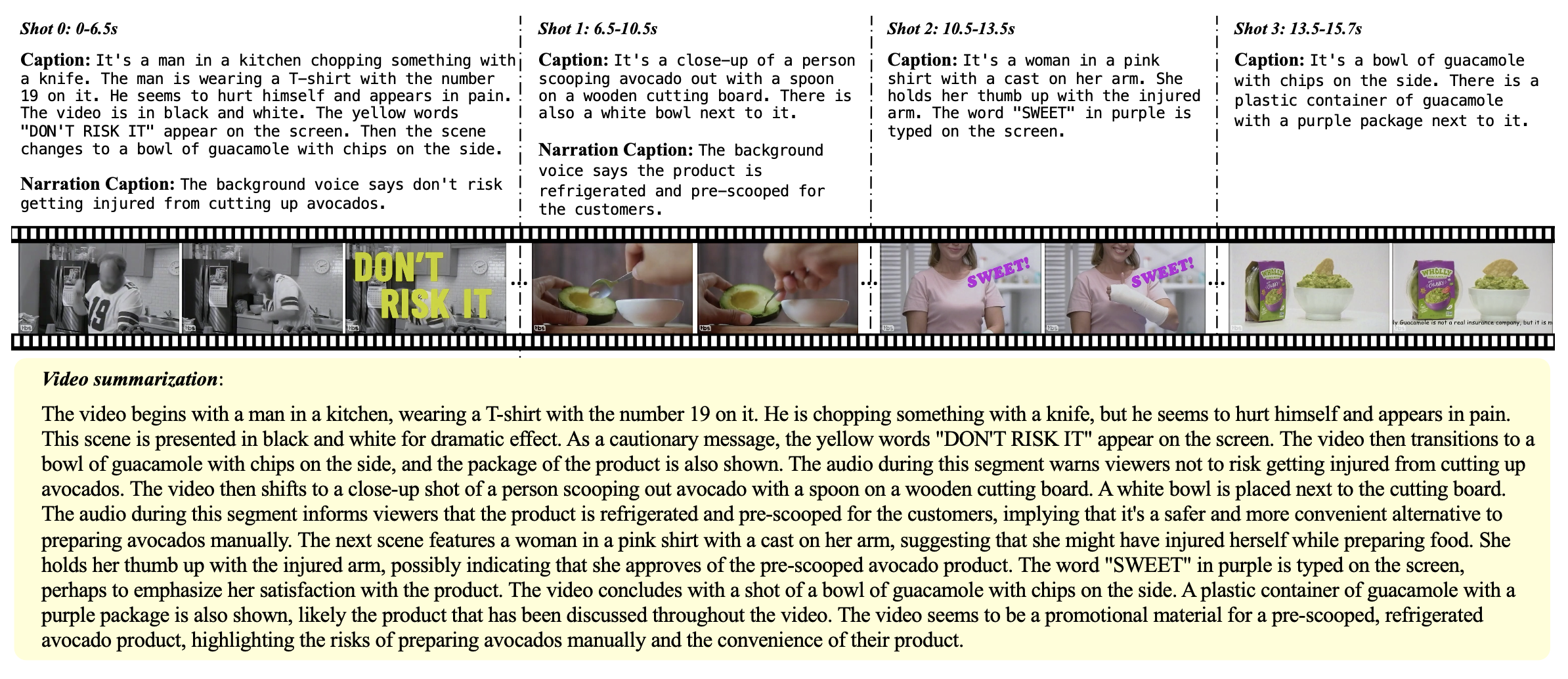

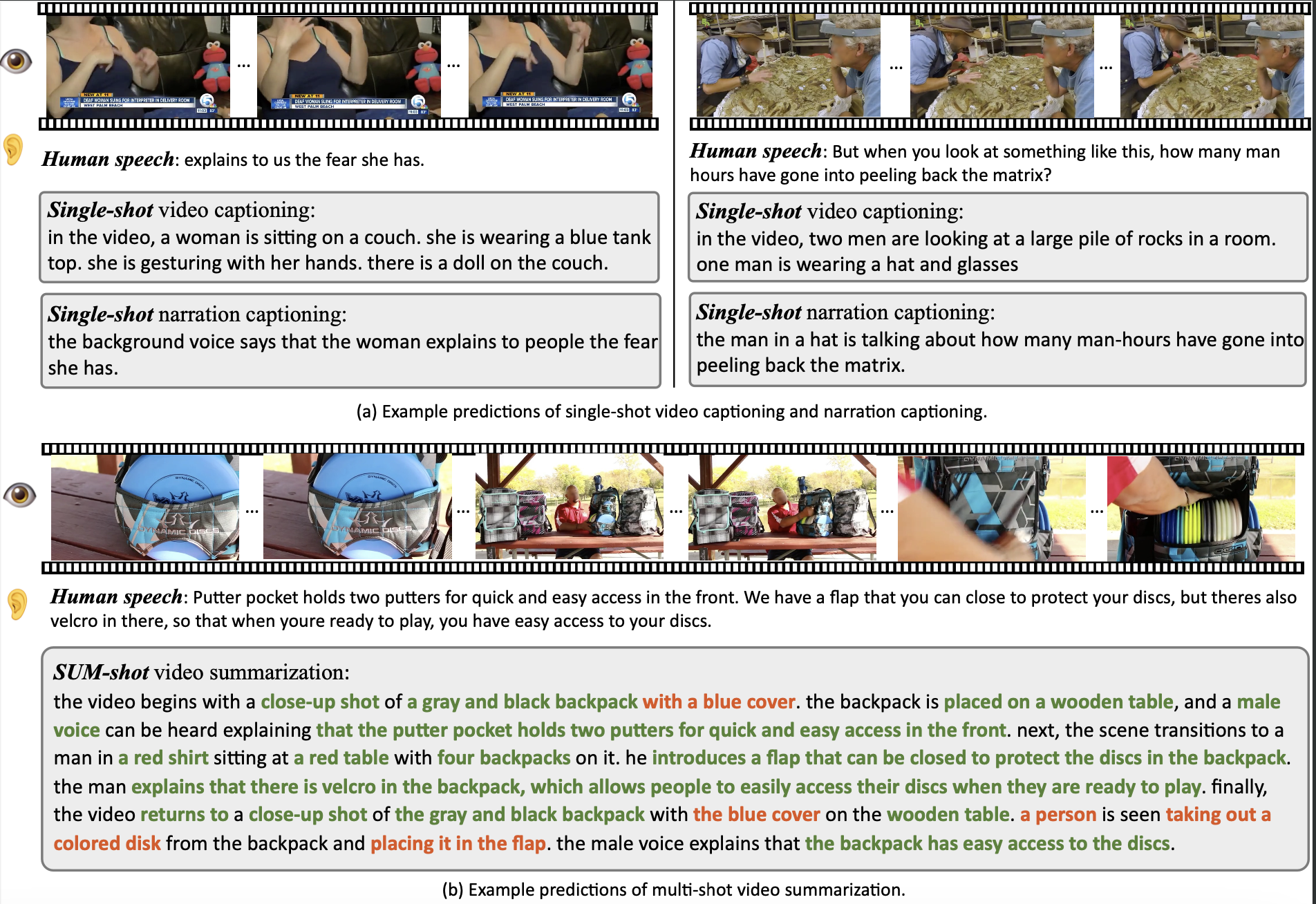

A short video clip may contain a progression of multiple events and an interesting story line. A human needs to capture the event in every shot and associate the events with one another to understand the story behind it. In this work, we present a new multi-shot video understanding benchmark, Shot2Story, with detailed shot-level captions and comprehensive video summaries. To facilitate better semantic understanding of videos, we provide captions for both visual signals and human narrations. We design several distinct tasks, including single-shot video and narration captioning, multi-shot video summarization, and video retrieval with shot descriptions. Preliminary experiments show that generating a long and comprehensive video summary remains challenging.

Our dataset comprises 20k video clips sourced from HD-VILA-100M. Each clip is meticulously annotated with single-shot video captions, narration captions, video summaries, extracted ASR texts, and shot transitions. Please refer to DATA.md for video and annotation preparation.

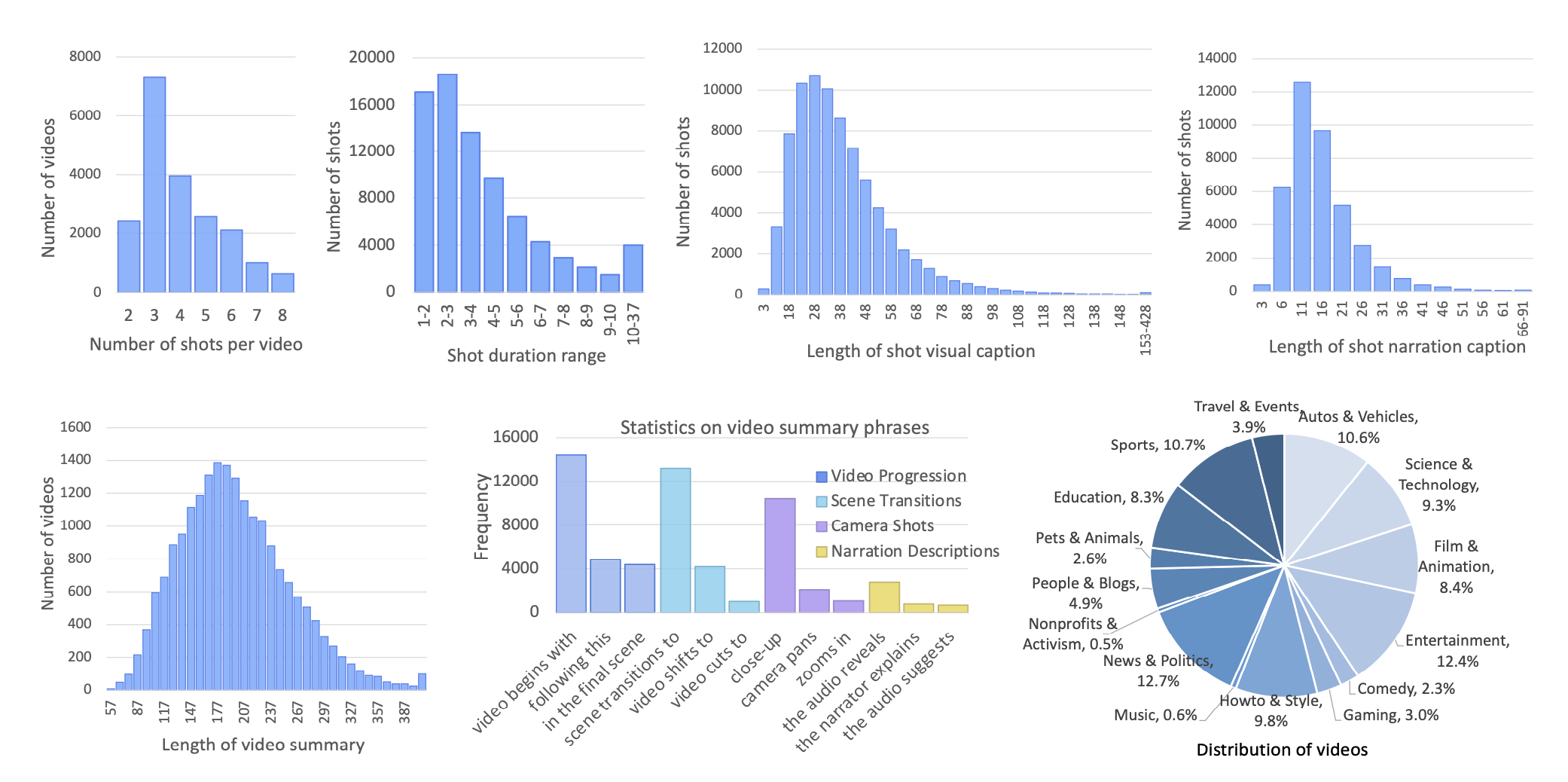

The dataset includes an average of 4.0 shots per video, resulting in a total of 80k video shots, each with detailed video caption and narration caption annotations. The average length of our video summaries is 201.8, while the average length of a video is 16s.
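The shot statistics above are related by simple arithmetic: total shots equal the number of videos times the average shots per video. A small sketch of how such figures could be computed from per-video records follows. The record layout (keys `shots` and `summary`) and the toy data are hypothetical; see DATA.md for the actual annotation format.

```python
from statistics import mean

# Toy records. The keys "shots" and "summary" are hypothetical and are
# NOT the dataset's real schema.
videos = [
    {
        "shots": ["a man opens a door", "he walks outside"],
        "summary": "A man leaves his house.",
    },
    {
        "shots": [
            "a dog runs", "a dog jumps", "a dog rests",
            "a child claps", "the dog barks", "credits roll",
        ],
        "summary": "A dog plays in a park while a child watches.",
    },
]


def dataset_stats(records):
    """Count videos and shots, and average the number of shots per video."""
    shots_per_video = [len(r["shots"]) for r in records]
    return {
        "num_videos": len(records),
        "total_shots": sum(shots_per_video),
        "avg_shots_per_video": mean(shots_per_video),
    }


stats = dataset_stats(videos)
print(stats)

# The same relation at the reported scale: 20k videos x 4.0 shots per video.
print(20_000 * 4.0)
```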

For more comprehensive details, please refer to the plots below.

To benchmark advances in multi-modal video understanding, we designed several distinctive tasks using our dataset, including single-shot captioning, multi-shot summarization, and video retrieval with shot descriptions. We designed and implemented several baseline models using a frozen vision encoder and an LLM, prompting the LLM with frame tokens and ASR (Automatic Speech Recognition) text.
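As a rough illustration of prompting an LLM with frame tokens and ASR text, the sketch below assembles a text prompt in which each shot contributes frame placeholders followed by its transcript. This is not the repository's code: the `<frame>` marker, the `Shot` class, the prompt wording, and `build_summary_prompt` are all hypothetical. In an actual model, embeddings from the frozen vision encoder would stand in for the placeholders.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical marker; a real model would splice visual embeddings in here.
FRAME_PLACEHOLDER = "<frame>"


@dataclass
class Shot:
    num_frames: int  # number of frames sampled from this shot
    asr_text: str    # speech transcript for this shot (may be empty)


def build_summary_prompt(shots: List[Shot]) -> str:
    """Interleave per-shot frame placeholders with ASR text, then add the task."""
    parts = []
    for index, shot in enumerate(shots, start=1):
        frames = " ".join([FRAME_PLACEHOLDER] * shot.num_frames)
        asr = shot.asr_text.strip() or "(no speech)"
        parts.append(f"Shot {index}: {frames}\nASR: {asr}")
    # Illustrative instruction wording, not the prompt used by the baselines.
    parts.append("Summarize the video in detail, covering every shot.")
    return "\n".join(parts)


prompt = build_summary_prompt([Shot(2, "Welcome to the kitchen."), Shot(1, "")])
print(prompt)
```

Keeping the frames and transcript grouped per shot mirrors the shot-level structure of the annotations; other layouts are equally plausible.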

Code here for running the project.

Our code is licensed under an Apache 2.0 License.

Our text annotations are released under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International (CC BY-NC-SA 4.0) License. They are available strictly for non-commercial research. More guidelines for the dataset can be found here.

If you find this repo useful for your research, please consider citing the paper:

@article{han2023shot2story20k,
  title={Shot2Story20K: A New Benchmark for Comprehensive Understanding of Multi-shot Videos},
  author={Mingfei Han and Linjie Yang and Xiaojun Chang and Heng Wang},
  journal={arXiv preprint arXiv:2311.17043},
  year={2023}
}

If you have any questions or concerns about our dataset, please don't hesitate to contact us. You can raise an issue or reach us at hanmingfei@bytedance.com. We welcome feedback and are always looking to improve our dataset.

We extend our thanks to the teams behind HD-VILA-100M, BLIP2, Whisper, MiniGPT-4, Vicuna and LLaMA. Our work builds upon their valuable contributions. Please acknowledge these resources in your work.