Building a Multimodal Search Engine for Text and Image with Amazon Titan Embeddings, Amazon Bedrock, Amazon Aurora and LangChain.

Getting started with Amazon Bedrock, RAG, and Vector database in Python

This repository demonstrates how to build a multimodal search engine with Amazon Titan Embeddings, Amazon Bedrock, and LangChain. It covers generating text and image embeddings with Titan's models, which enable semantic search and retrieval. Through Jupyter notebooks, the repository guides you through ingesting text from PDFs, splitting that text into meaningful chunks with LangChain, and generating text embeddings for those chunks. These embeddings are then stored in a FAISS vector database and in an Amazon Aurora PostgreSQL database, enabling efficient search and retrieval.

Amazon Aurora allows you to maintain both traditional application data and vector embeddings within the same database. This unified approach enhances governance and enables faster deployment, while minimizing the learning curve.

In the second part, you'll build a serverless embedding app, using the AWS Cloud Development Kit (CDK) to create four AWS Lambda functions responsible for embedding text and image files and for retrieving documents based on text or image queries. These Lambda functions are designed to be invoked through events, providing a scalable, serverless backend for your multimodal search engine.

By the end of this post, you'll have a solid understanding of how to:

- Load PDF text and generate text/image embeddings using Amazon Titan Embeddings.

- Chunk text into semantic segments with LangChain.

- Create local FAISS vector databases for text and images.

- Build an image search app leveraging Titan Multimodal Embeddings.

- Store vector embeddings in Amazon Aurora PostgreSQL with pgvector extension.

- Query vector databases for relevant text documents and images.

- Deploy Lambda functions for embedding/retrieval using AWS CDK.

Get ready to unlock the power of multimodal search and open new possibilities in your apps!

Requirements:

- Install boto3, the AWS SDK for Python, which lets you interact with AWS services: `pip install boto3`.

- Configure AWS credentials. Boto3 needs credentials to make API calls to AWS.

- Install LangChain, a framework for developing applications powered by large language models (LLMs): `pip install langchain`.

💰 Cost to complete:

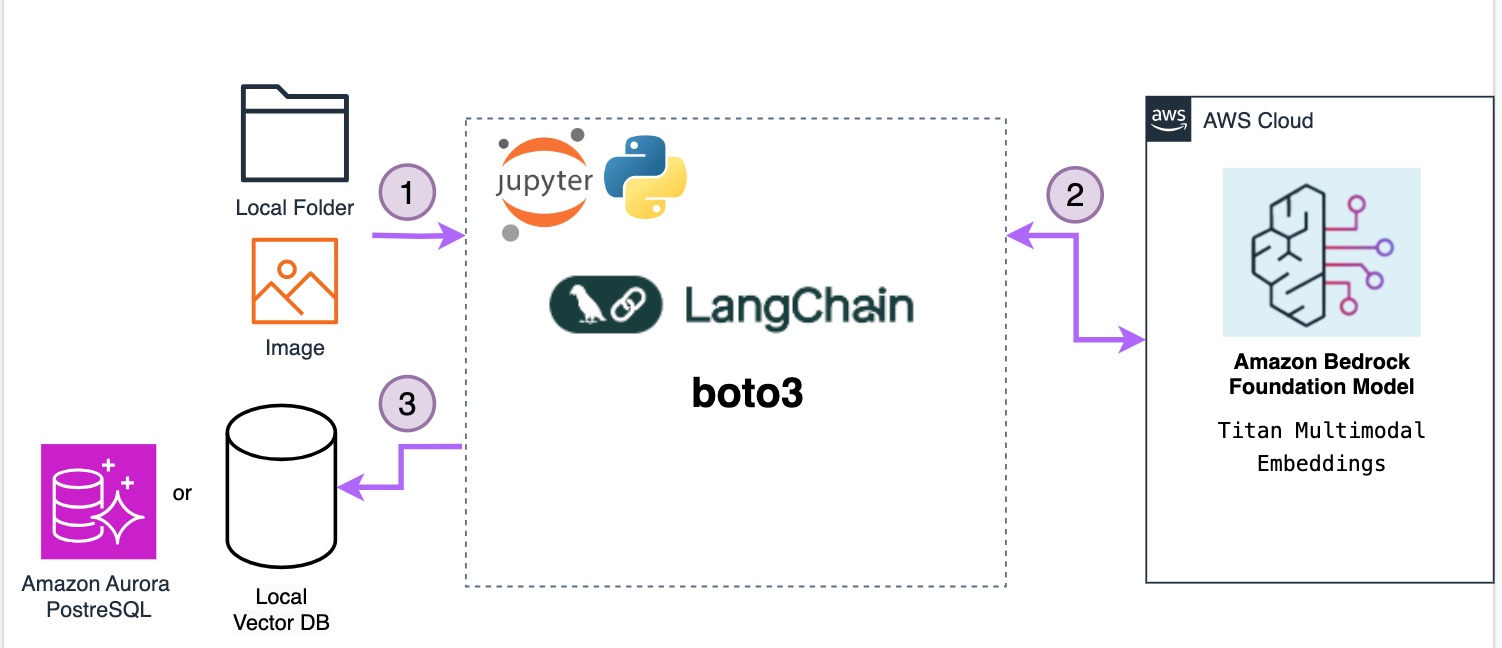

Jupyter notebook for loading documents from PDFs, extracting and splitting the text into semantically meaningful chunks using LangChain, generating text embeddings from those chunks with the Amazon Titan Embeddings G1 - Text model, and storing the embeddings in a FAISS vector database for retrieval.
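The embedding step can be sketched as a direct Bedrock call. This is a minimal sketch that assumes the boto3 `bedrock-runtime` client and the Titan Text Embeddings G1 request format (`inputText` in, `embedding` out); the notebook itself works through LangChain, which provides a `BedrockEmbeddings` class wrapping an equivalent call. The helper names and the default region are illustrative assumptions.

```python
from __future__ import annotations

import json

# Model ID used in this post for text embeddings.
MODEL_ID = "amazon.titan-embed-text-v1"


def build_text_request(text: str) -> str:
    """Serialize the JSON body that Titan Text Embeddings G1 expects."""
    return json.dumps({"inputText": text})


def parse_embedding(raw_body: bytes) -> list[float]:
    """Pull the embedding vector out of a Titan response body."""
    return json.loads(raw_body)["embedding"]


def embed_text(text: str, region: str = "us-east-1") -> list[float]:
    """Embed one chunk of text with Bedrock (requires AWS credentials)."""
    import boto3  # imported here so the pure helpers above work without boto3

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(
        modelId=MODEL_ID,
        body=build_text_request(text),
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream; read it fully before parsing.
    return parse_embedding(response["body"].read())
```

With credentials configured, calling `embed_text` once per chunk yields one vector per chunk, ready to be indexed.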

This notebook demonstrates how to combine Titan Multimodal Embeddings, LangChain, and FAISS to build a capable image search application. Titan's embeddings represent images and text in a common dense vector space, enabling natural language queries over images. FAISS provides a fast, scalable way to index and search those vectors, and LangChain offers abstractions to hook everything together and surface relevant images based on a user's query.
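To show what "a common vector space" means at the API level, here is a sketch of a Titan Multimodal Embeddings request. It assumes the model ID `amazon.titan-embed-image-v1` (used later in this post) accepts a base64-encoded `inputImage`, an `inputText`, or both, and returns an `embedding` field; the function names are hypothetical.

```python
from __future__ import annotations

import base64
import json

IMAGE_MODEL_ID = "amazon.titan-embed-image-v1"


def build_multimodal_request(image_bytes: bytes | None = None,
                             text: str | None = None) -> str:
    """Build a request body from an image, a text query, or both."""
    if image_bytes is None and text is None:
        raise ValueError("provide an image, a text query, or both")
    body: dict = {}
    if image_bytes is not None:
        # Images travel as base64-encoded strings inside the JSON body.
        body["inputImage"] = base64.b64encode(image_bytes).decode("utf-8")
    if text is not None:
        body["inputText"] = text
    return json.dumps(body)


def embed_multimodal(image_bytes: bytes | None = None,
                     text: str | None = None,
                     region: str = "us-east-1") -> list[float]:
    """Call Bedrock for an image and/or text (requires AWS credentials)."""
    import boto3  # only needed for the actual AWS call

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(
        modelId=IMAGE_MODEL_ID,
        body=build_multimodal_request(image_bytes, text),
        contentType="application/json",
        accept="application/json",
    )
    return json.loads(response["body"].read())["embedding"]
```

Because the same model embeds both inputs, a vector from `embed_multimodal(text="a red bicycle")` should be directly comparable with vectors produced from image files.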

By following the steps outlined, you'll be able to preprocess images, generate embeddings, load them into FAISS, and write a simple application that takes in a natural language query, searches the FAISS index, and returns the most semantically relevant images. It's a great example of the power of combining modern AI technologies to build applications.
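The search step boils down to ranking stored vectors by similarity to the query vector. The sketch below swaps FAISS out for a brute-force cosine-similarity search in plain Python, purely to illustrate that ranking; `top_k` and the image paths are hypothetical. FAISS performs the same kind of nearest-neighbor ranking (for example, an inner-product index over normalized vectors yields cosine similarity) far faster on large collections.

```python
from __future__ import annotations

import math


def normalize(vector: list[float]) -> list[float]:
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector] if norm else list(vector)


def top_k(query: list[float], vectors: list[list[float]],
          paths: list[str], k: int = 3) -> list[tuple[str, float]]:
    """Return the k (path, score) pairs most similar to the query, best first."""
    q = normalize(query)
    scored = [
        (sum(a * b for a, b in zip(q, normalize(v))), path)
        for v, path in zip(vectors, paths)
    ]
    # Highest cosine similarity first.
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(path, score) for score, path in scored[:k]]
```

This loop is linear in the number of stored images, which is exactly the cost an index like FAISS is designed to avoid.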

In this Jupyter Notebook, you'll explore how to store vector embeddings in a vector database using Amazon Aurora and the pgvector extension. This approach is particularly useful for applications that require efficient similarity searches on high-dimensional data, such as natural language processing, image recognition, and recommendation systems.
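As an illustration of how pgvector stores and queries embeddings, here is a sketch using a hypothetical `documents` table. The notebook goes through LangChain, whose PGVector integration may create and manage its own tables, so treat this schema and the `search` helper as assumptions. It relies on pgvector's `vector(n)` column type, its `<=>` cosine-distance operator, and a DB-API connection such as one from psycopg2.

```python
from __future__ import annotations

# Titan Text Embeddings G1 returns 1,536-dimensional vectors.
EMBEDDING_DIM = 1536
TABLE = "documents"  # hypothetical table name

CREATE_SQL = f"""
CREATE EXTENSION IF NOT EXISTS vector;
CREATE TABLE IF NOT EXISTS {TABLE} (
    id bigserial PRIMARY KEY,
    content text NOT NULL,
    embedding vector({EMBEDDING_DIM})
);
"""

# pgvector's <=> operator is cosine distance: smaller values mean more similar.
SEARCH_SQL = (
    "SELECT content, embedding <=> %s::vector AS distance "
    f"FROM {TABLE} ORDER BY distance LIMIT %s;"
)


def to_vector_literal(embedding: list[float]) -> str:
    """Format a Python list as a pgvector text literal such as '[0.1,0.2]'."""
    return "[" + ",".join(repr(float(x)) for x in embedding) + "]"


def search(conn, query_embedding: list[float], k: int = 5) -> list[tuple]:
    """Run a nearest-neighbor query over an open connection (e.g. psycopg2)."""
    with conn.cursor() as cur:
        cur.execute(SEARCH_SQL, (to_vector_literal(query_embedding), k))
        return cur.fetchall()
```

Keeping the content and its embedding in the same row is what lets Aurora hold application data and vectors side by side.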

💰 Cost to complete:

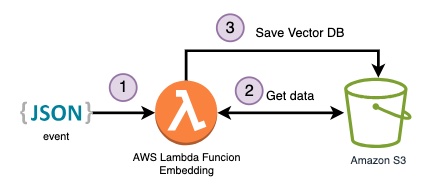

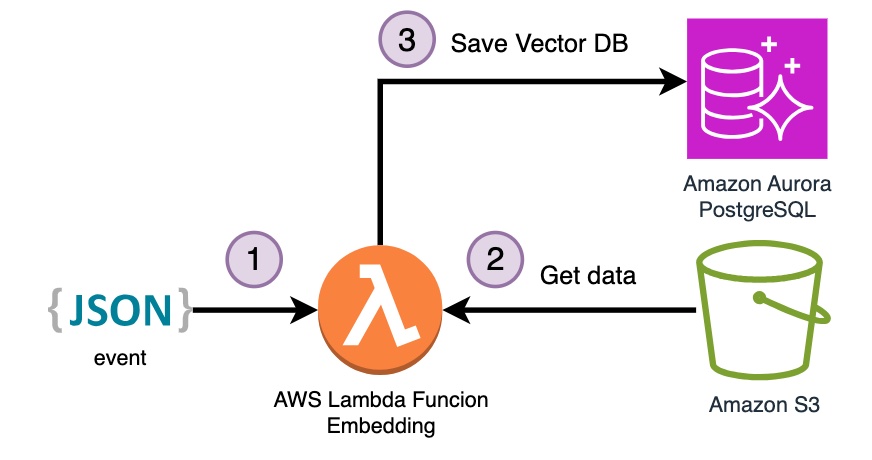

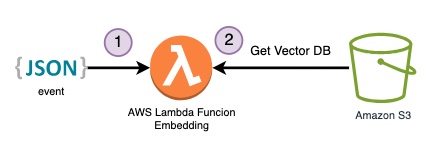

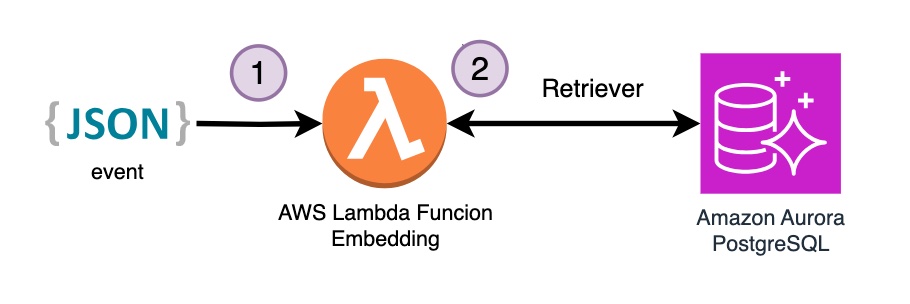

In the second part, you'll construct a Serverless Embedding App utilizing the AWS Cloud Development Kit (CDK) to create four Lambda Functions.

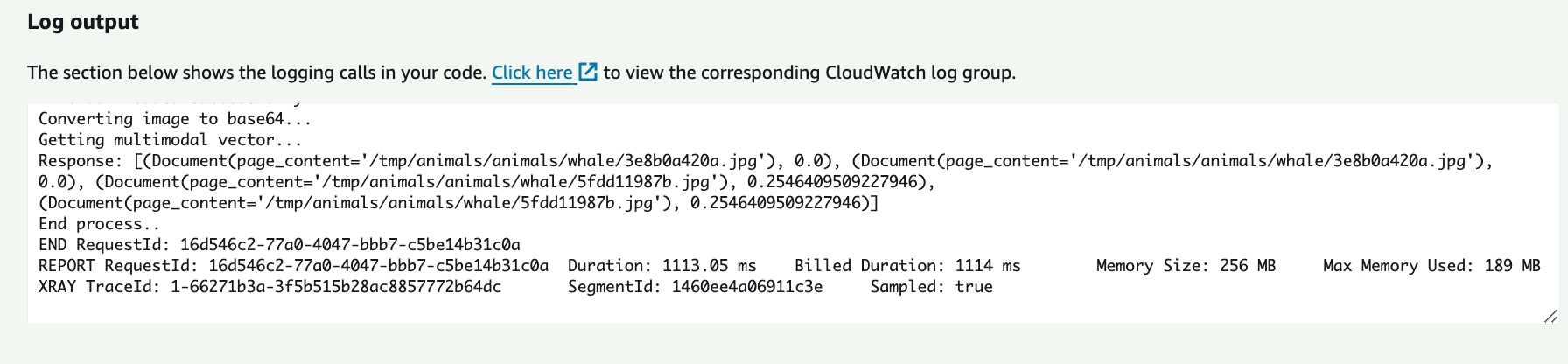

Learn how to test Lambda functions in the console with test events.
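If you prefer a script to the console, the same test events can be sent with the boto3 Lambda client. This is a sketch: the function name is whatever CDK assigned in your account (check the Lambda console), and the placeholder check simply looks for the `REPLACE-` and `YOU-` markers used in the sample events of this post.

```python
from __future__ import annotations

import json
from typing import Any

# Markers used by the placeholder values in the sample events.
PLACEHOLDER_MARKERS = ("REPLACE-", "YOU-")


def unreplaced_fields(event: dict) -> list[str]:
    """List event keys whose string values still contain a placeholder marker."""
    return [
        key for key, value in event.items()
        if isinstance(value, str) and any(m in value for m in PLACEHOLDER_MARKERS)
    ]


def invoke_with_event(function_name: str, event: dict) -> Any:
    """Synchronously invoke a Lambda function with a test event."""
    missing = unreplaced_fields(event)
    if missing:
        raise ValueError(f"replace the placeholder values first: {missing}")
    import boto3  # only needed for the actual AWS call

    client = boto3.client("lambda")
    response = client.invoke(
        FunctionName=function_name,
        InvocationType="RequestResponse",  # wait for the function's result
        Payload=json.dumps(event).encode("utf-8"),
    )
    # The payload is a stream containing the handler's JSON return value.
    return json.loads(response["Payload"].read())
```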

To handle the embedding process, there is a dedicated Lambda Function for each file type:

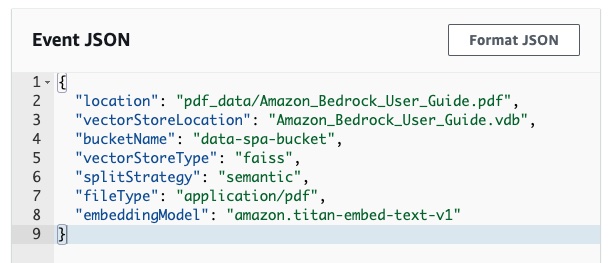

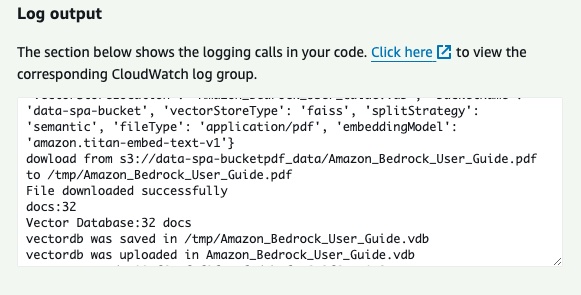

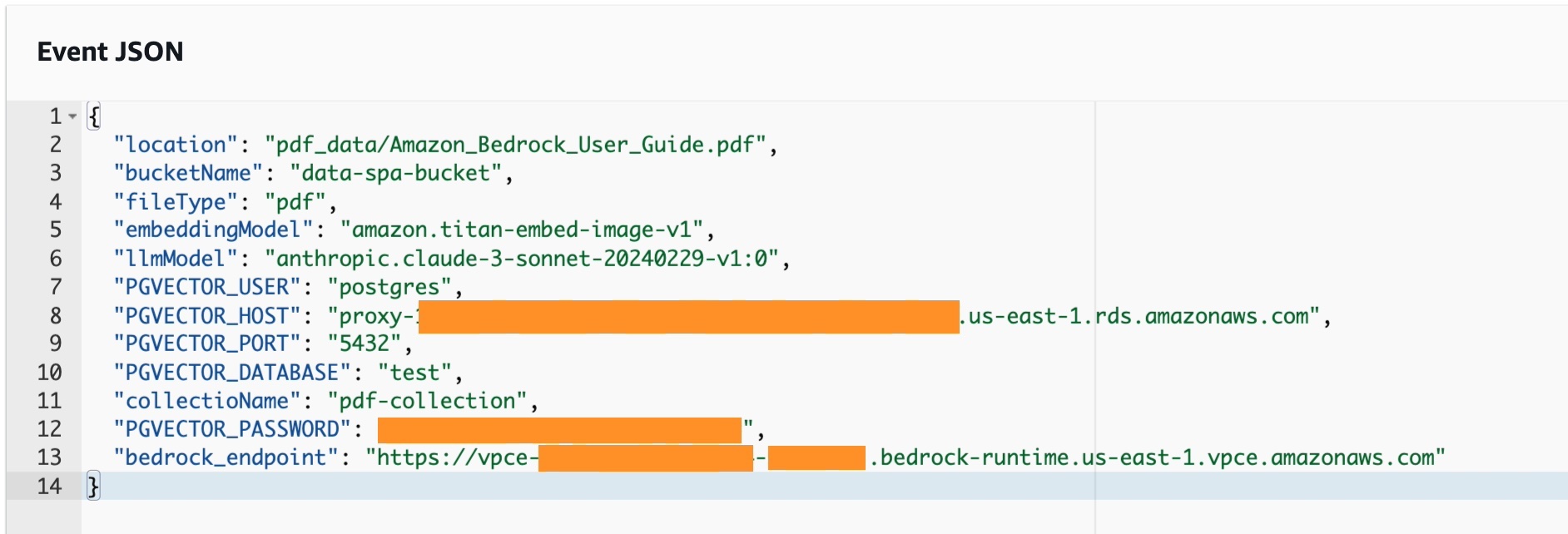

Event to trigger:

{

"location": "REPLACE-YOU-KEY",

"vectorStoreLocation": "REPLACE-NAME.vdb",

"bucketName": "REPLACE-YOU-BUCKET",

"vectorStoreType": "faiss",

"splitStrategy": "semantic",

"fileType": "application/pdf",

"embeddingModel": "amazon.titan-embed-text-v1"

}

Test result: Executing function: succeeded
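One way a handler could validate this event before doing any work is to parse it into a typed object. This is a hypothetical sketch, not the repository's handler: the class name, the choice of required keys, and the defaults are assumptions based only on the fields in the event above.

```python
from __future__ import annotations

from dataclasses import dataclass

# Assumption: these three fields have no sensible default.
REQUIRED_KEYS = ("location", "vectorStoreLocation", "bucketName")


@dataclass(frozen=True)
class EmbedPdfRequest:
    location: str               # S3 key of the PDF to embed
    vector_store_location: str  # name/location of the .vdb vector store
    bucket_name: str            # S3 bucket holding the PDF and the store
    vector_store_type: str = "faiss"
    split_strategy: str = "semantic"
    embedding_model: str = "amazon.titan-embed-text-v1"

    @classmethod
    def from_event(cls, event: dict) -> EmbedPdfRequest:
        """Validate a raw Lambda event and map its camelCase keys to fields."""
        missing = [key for key in REQUIRED_KEYS if not event.get(key)]
        if missing:
            raise ValueError(f"missing required event fields: {missing}")
        if event.get("fileType", "application/pdf") != "application/pdf":
            raise ValueError("this function only handles application/pdf")
        return cls(
            location=event["location"],
            vector_store_location=event["vectorStoreLocation"],
            bucket_name=event["bucketName"],
            vector_store_type=event.get("vectorStoreType", "faiss"),
            split_strategy=event.get("splitStrategy", "semantic"),
            embedding_model=event.get("embeddingModel", "amazon.titan-embed-text-v1"),
        )
```

Failing fast on a missing field gives a clear error in the test result instead of a failure halfway through embedding.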

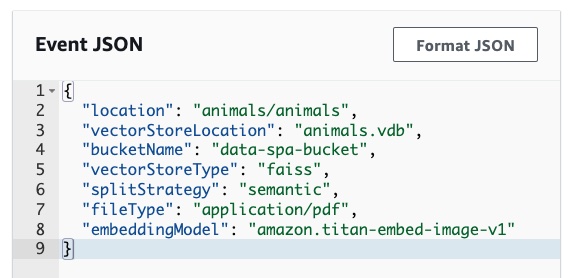

Event to trigger:

{

"location": "REPLACE-YOU-KEY-FOLDER",

"vectorStoreLocation": "REPLACE-NAME.vdb",

"bucketName": "REPLACE-YOU-BUCKET",

"vectorStoreType": "faiss",

"splitStrategy": "semantic",

"embeddingModel": "amazon.titan-embed-image-v1"

}

Test result: Executing function: succeeded
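In the image event, `location` points to a folder rather than a single file. A handler could collect the images under that prefix as sketched below; the extension filter (PNG and JPEG only) and the function names are assumptions, while the paginator call is standard boto3.

```python
from __future__ import annotations

# Assumption: only PNG and JPEG files are embedded.
IMAGE_EXTENSIONS = (".png", ".jpg", ".jpeg")


def image_keys(keys: list[str]) -> list[str]:
    """Keep only keys that look like image files (case-insensitive)."""
    return [key for key in keys if key.lower().endswith(IMAGE_EXTENSIONS)]


def list_image_keys(bucket: str, prefix: str) -> list[str]:
    """List every image key under an S3 prefix, following pagination."""
    import boto3  # only needed for the actual S3 call

    paginator = boto3.client("s3").get_paginator("list_objects_v2")
    keys: list[str] = []
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        # Pages for an empty prefix have no "Contents" entry.
        keys.extend(obj["Key"] for obj in page.get("Contents", []))
    return image_keys(keys)
```

Using the paginator matters because a single `list_objects_v2` call returns at most 1,000 keys.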

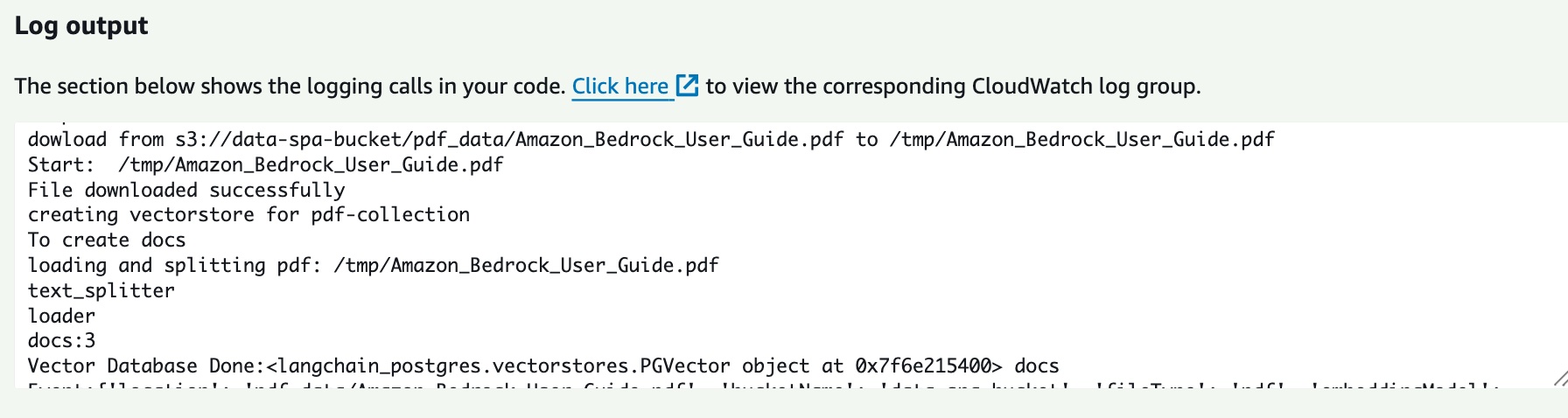

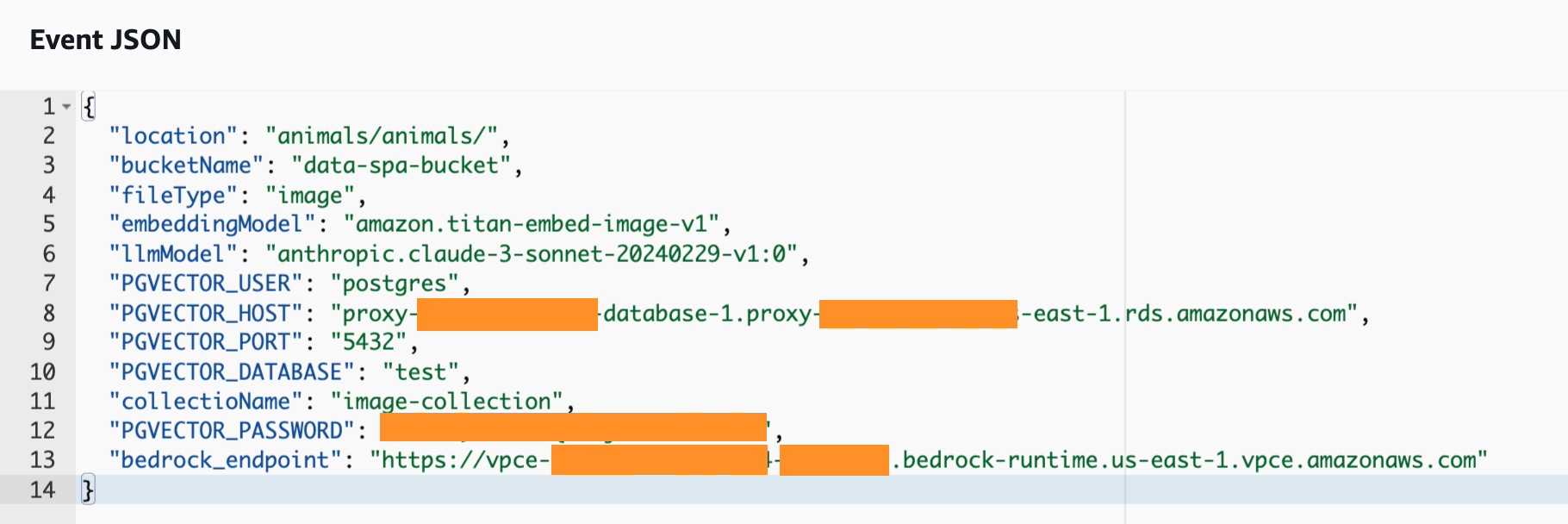

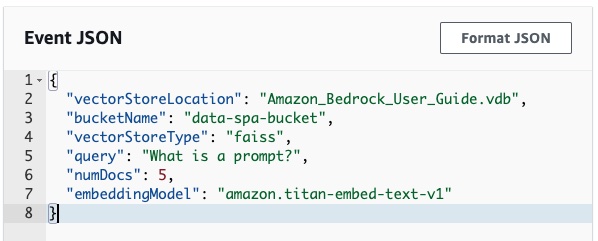

💡 Before testing this Lambda function, keep in mind that it must run in the same VPC as the Amazon Aurora PostgreSQL DB and be able to reach it. For that, check Automatically connecting a Lambda function and an Aurora DB cluster, Using Amazon RDS Proxy for Aurora, and Use interface VPC endpoints (AWS PrivateLink) for the Amazon Bedrock VPC endpoint.

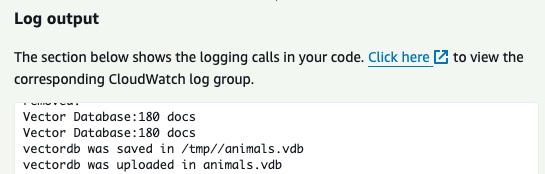

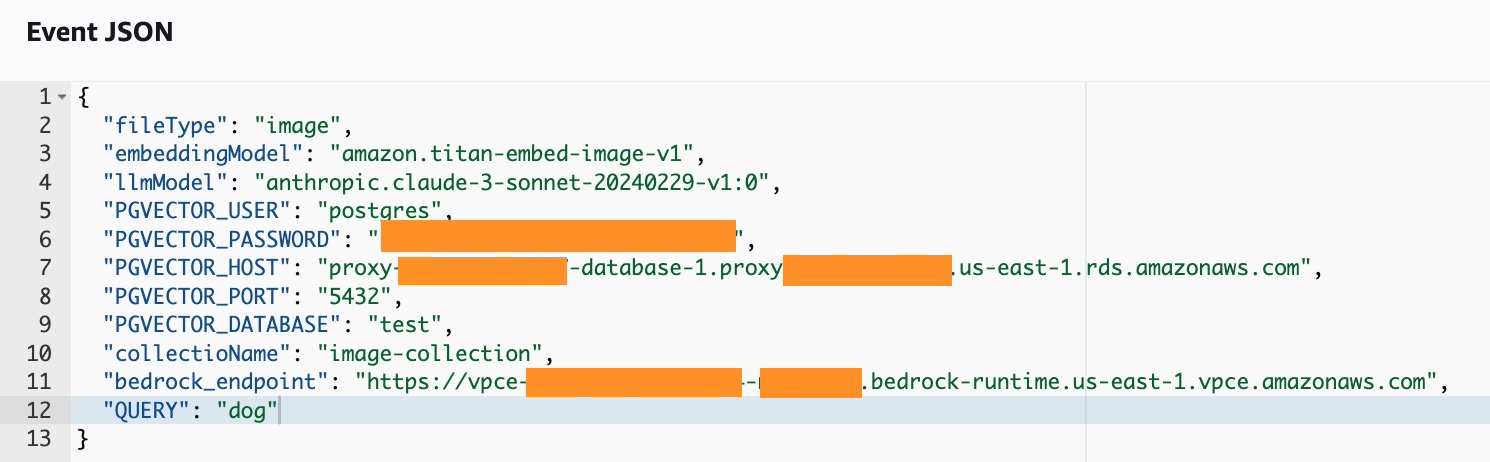

Event to trigger:

{

"location": "YOU-KEY",

"bucketName": "YOU-BUCKET-NAME",

"fileType": "pdf or image",

"embeddingModel": "amazon.titan-embed-text-v1",

"PGVECTOR_USER":"YOU-RDS-USER",

"PGVECTOR_PASSWORD":"YOU-RDS-PASSWORD",

"PGVECTOR_HOST":"YOU-RDS-ENDPOINT-PROXY",

"PGVECTOR_DATABASE":"YOU-RDS-DATABASE",

"PGVECTOR_PORT":"5432",

"collectioName": "YOU-collectioName",

"bedrock_endpoint": "https://vpce-...-.....bedrock-runtime.YOU-REGION.vpce.amazonaws.com"

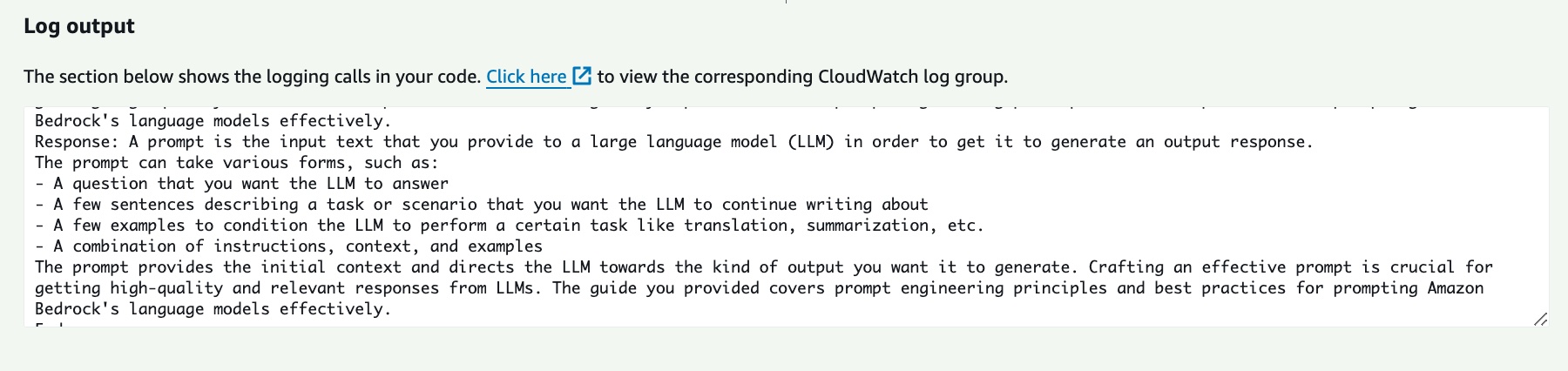

}| Event PDF | Executing function: succeeded |

|---|---|

|

|

| Event Image | Executing function: succeeded |

|---|---|

|

|
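The `PGVECTOR_*` fields describe a PostgreSQL connection, and `bedrock_endpoint` is the VPC interface endpoint. Below is a sketch of how a handler could turn them into a SQLAlchemy-style connection string (assuming the psycopg2 driver) and a Bedrock client; the helper names are hypothetical. Passing the password in the event is convenient for testing, but in production you would more likely read it from AWS Secrets Manager.

```python
from __future__ import annotations

from urllib.parse import quote_plus


def connection_string(event: dict, driver: str = "psycopg2") -> str:
    """Build a PostgreSQL URL from the PGVECTOR_* event fields."""
    user = quote_plus(event["PGVECTOR_USER"])
    # Percent-encode the password so characters like '@' or '/' don't break the URL.
    password = quote_plus(event["PGVECTOR_PASSWORD"])
    host = event["PGVECTOR_HOST"]
    port = int(event.get("PGVECTOR_PORT", "5432"))
    database = event["PGVECTOR_DATABASE"]
    return f"postgresql+{driver}://{user}:{password}@{host}:{port}/{database}"


def bedrock_client(event: dict):
    """Create a Bedrock runtime client that routes through the VPC endpoint."""
    import boto3  # only needed for the actual AWS call

    # Inside Lambda, the region comes from the function's environment.
    return boto3.client("bedrock-runtime", endpoint_url=event["bedrock_endpoint"])
```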

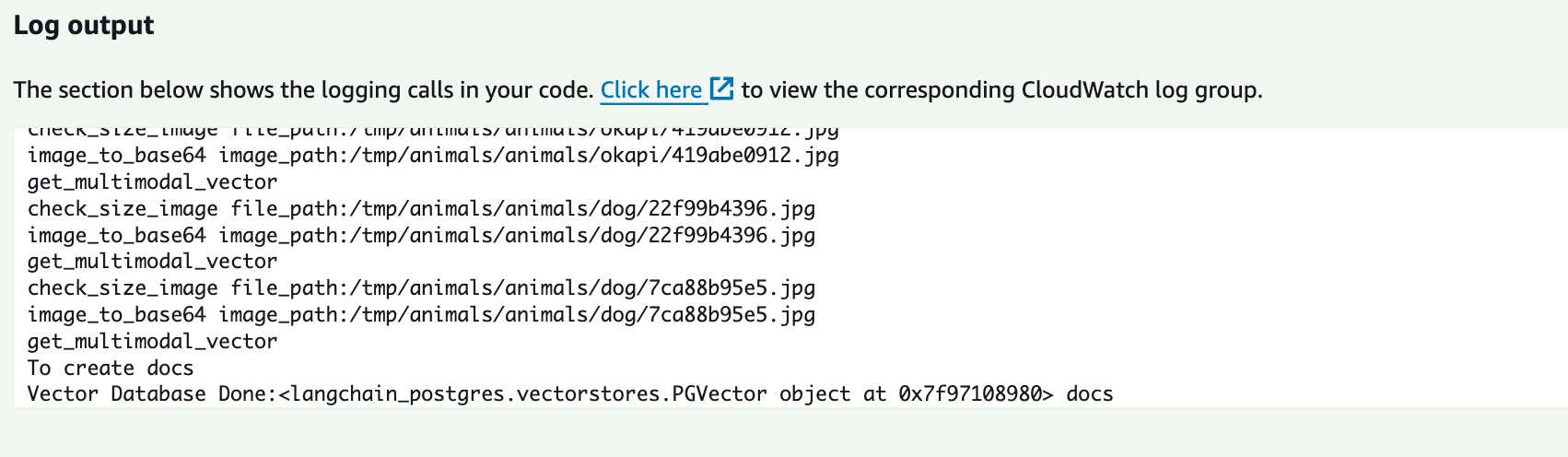

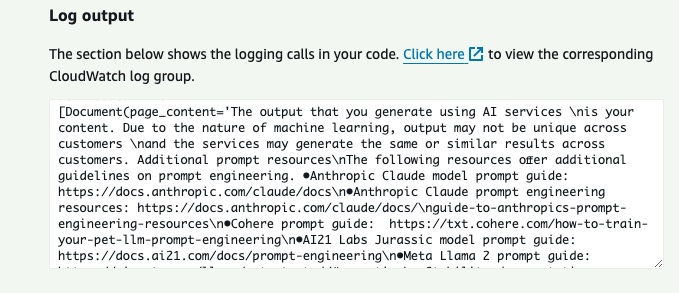

To retrieve documents, there is a dedicated Lambda function that queries the FAISS vector store; `numDocs` sets how many documents it returns:

Event to trigger:

{

"vectorStoreLocation": "REPLACE-NAME.vdb",

"bucketName": "REPLACE-YOU-BUCKET",

"vectorStoreType": "faiss",

"query": "YOU-QUERY",

"numDocs": 5,

"embeddingModel": "amazon.titan-embed-text-v1"

}

Test result: Executing function: succeeded

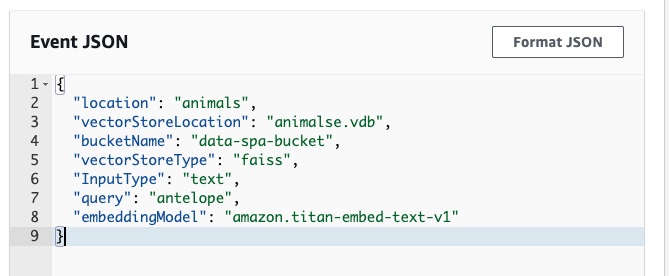

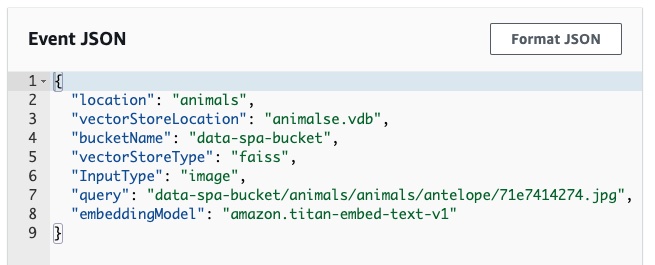

You can search by text or by image:

- Text event to trigger

{

"vectorStoreLocation": "REPLACE-NAME.vdb",

"bucketName": "REPLACE-YOU-BUCKET",

"vectorStoreType": "faiss",

"InputType": "text",

"query":"TEXT-QUERY",

"embeddingModel": "amazon.titan-embed-text-v1"

}

Test result for the text event: Executing function: succeeded

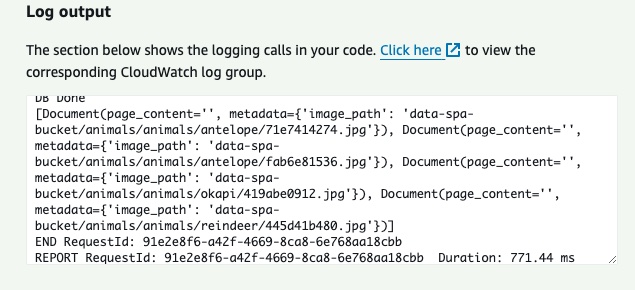

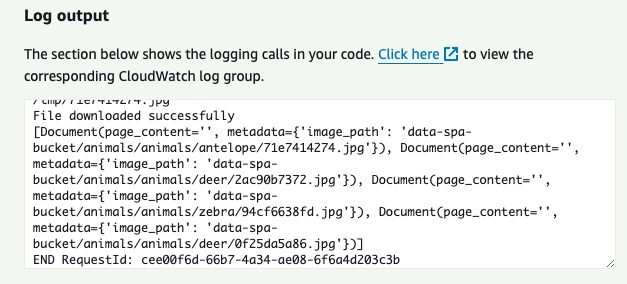

- Image event to trigger

{

"vectorStoreLocation": "REPLACE-NAME.vdb",

"bucketName": "REPLACE-YOU-BUCKET",

"vectorStoreType": "faiss",

"InputType": "image",

"query":"IMAGE-BUCKET-LOCATION-QUERY",

"embeddingModel": "amazon.titan-embed-text-v1"

}

Test result for the image event: Executing function: succeeded
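The `InputType` field decides how the query is turned into an embedding request. Here is a sketch of that dispatch, assuming that for image searches `query` holds the S3 key of the query image in `bucketName`; the reader function is injected so the logic can be exercised without AWS (in a handler it could wrap `s3.get_object(...)["Body"].read()`). All names are hypothetical.

```python
from __future__ import annotations

import base64
import json
from typing import Callable


def build_query_body(event: dict,
                     read_s3_object: Callable[[str, str], bytes]) -> str:
    """Turn a search event into a Titan Multimodal Embeddings request body."""
    input_type = event.get("InputType", "text")
    if input_type == "text":
        return json.dumps({"inputText": event["query"]})
    if input_type == "image":
        # Assumption: "query" is the S3 key of the query image.
        image_bytes = read_s3_object(event["bucketName"], event["query"])
        encoded = base64.b64encode(image_bytes).decode("utf-8")
        return json.dumps({"inputImage": encoded})
    raise ValueError(f"unsupported InputType: {input_type!r}")
```

Both branches produce a vector in the same space, so the rest of the search path can stay identical for text and image queries.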

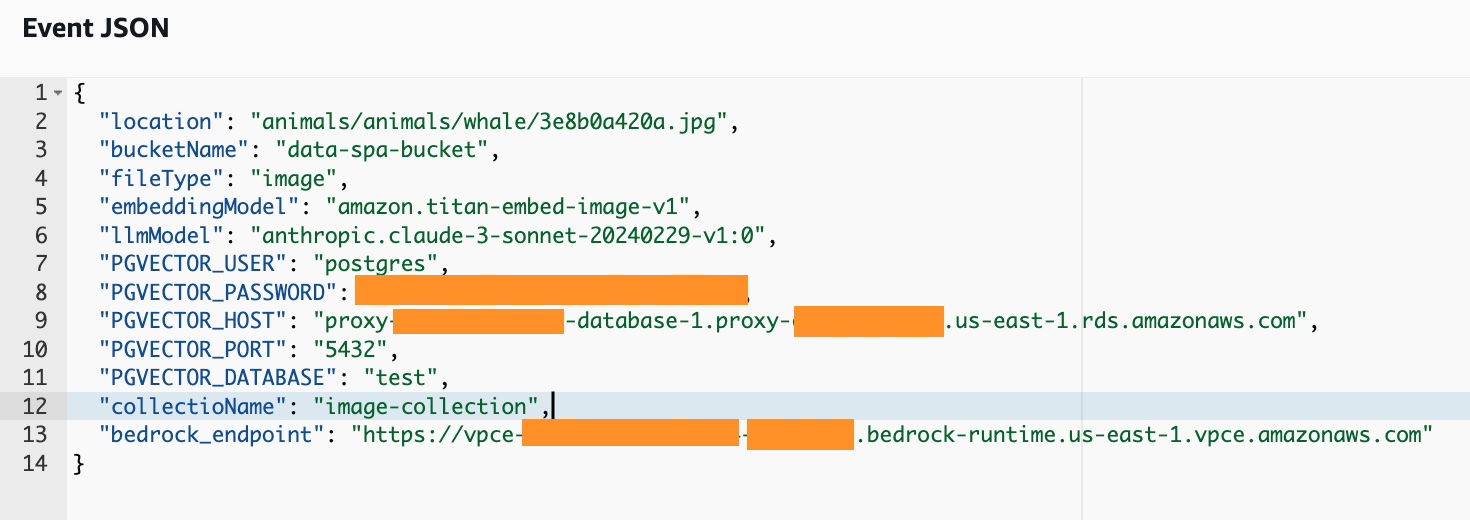

💡 The next step is to take the `image_path` value and download the file from the Amazon S3 bucket with the boto3 `download_file` method.
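A sketch of that download step follows. The exact format of `image_path` isn't specified here, so the helper accepts either a full `s3://bucket/key` URI or a bare key in the event's bucket; that flexibility, the temporary-directory destination, and the function names are assumptions. `download_file(Bucket, Key, Filename)` is the standard boto3 S3 client method.

```python
from __future__ import annotations

import os
import tempfile


def split_s3_path(path: str, default_bucket: str) -> tuple[str, str]:
    """Accept 's3://bucket/key' or a bare key; return (bucket, key)."""
    if path.startswith("s3://"):
        bucket, _, key = path[len("s3://"):].partition("/")
        return bucket, key
    return default_bucket, path


def download_result_image(image_path: str, bucket_name: str) -> str:
    """Download a search result to the temp directory and return its local path."""
    import boto3  # only needed for the actual S3 call

    bucket, key = split_s3_path(image_path, bucket_name)
    local_path = os.path.join(tempfile.gettempdir(), os.path.basename(key))
    boto3.client("s3").download_file(bucket, key, local_path)
    return local_path
```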

{

"location": "YOU-KEY",

"bucketName": "YOU-BUCKET-NAME",

"fileType": "pdf or image",

"embeddingModel": "amazon.titan-embed-text-v1",

"PGVECTOR_USER":"YOU-RDS-USER",

"PGVECTOR_PASSWORD":"YOU-RDS-PASSWORD",

"PGVECTOR_HOST":"YOU-RDS-ENDPOINT-PROXY",

"PGVECTOR_DATABASE":"YOU-RDS-DATABASE",

"PGVECTOR_PORT":"5432",

"collectioName": "YOU-collectioName",

"bedrock_endpoint": "https://vpce-...-.....bedrock-runtime.YOU-REGION.vpce.amazonaws.com",

"QUERY": "YOU-TEXT-QUESTION"

}

💡 Use `location` and `bucketName` to provide the image location when you make a query.

| Event PDF | Executing function: succeeded |

|---|---|

|

|

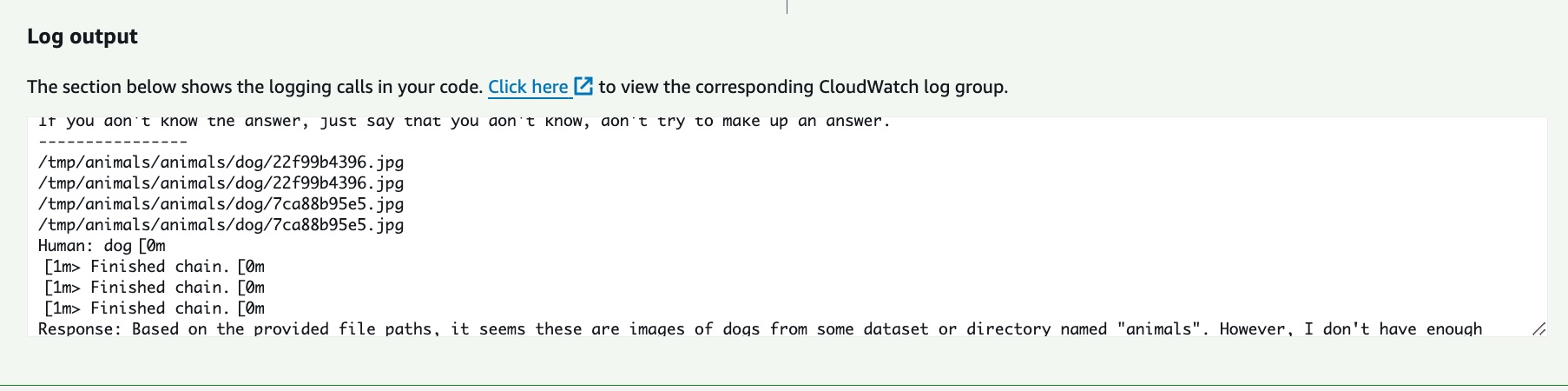

| Event Image Query Text | Executing function: succeeded |

|---|---|

|

|

| Event Image Query Image | Executing function: succeeded |

|---|---|

|

|

The AWS Lambda functions that you build in this deployment are created from container images, so you must have Docker Desktop installed and running on your computer.

Step 1: APP Set Up

✅ Clone the repo

git clone https://github.com/build-on-aws/langchain-embeddings

✅ Go to:

cd serveless-embeddings

- Configure the AWS Command Line Interface.

- Deploy the architecture with CDK by following the steps below.

Step 2: Deploy architecture with CDK.

✅ Create The Virtual Environment, following the steps in the README:

python3 -m venv .venv

source .venv/bin/activate

For Windows:

.venv\Scripts\activate.bat

✅ Install The Requirements:

pip install -r requirements.txt

✅ Synthesize The CloudFormation Template With The Following Command:

cdk synth

✅🚀 The Deployment:

cdk deploy

🧹 Clean the house!:

When you finish testing and want to remove the application, follow these two steps:

- Delete the files from the Amazon S3 bucket created in the deployment.

- Run this command in your terminal:

cdk destroy
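If you'd rather empty the bucket from a script than from the console, here is a sketch with boto3. It assumes an unversioned bucket (a versioned bucket also needs its old object versions removed) and that you pass the name of the bucket created by the deployment. `delete_objects` accepts at most 1,000 keys per request, hence the batching.

```python
from __future__ import annotations

from typing import Iterable, Iterator

# delete_objects accepts at most 1,000 keys per request.
MAX_KEYS_PER_DELETE = 1000


def batched(keys: Iterable[str], size: int = MAX_KEYS_PER_DELETE) -> Iterator[list[str]]:
    """Yield lists of at most `size` keys."""
    batch: list[str] = []
    for key in keys:
        batch.append(key)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch


def empty_bucket(bucket: str) -> int:
    """Delete every current object in the bucket and return how many were sent."""
    import boto3  # only needed for the actual S3 calls

    s3 = boto3.client("s3")
    paginator = s3.get_paginator("list_objects_v2")
    # List everything first so deletions don't interfere with pagination.
    keys = [
        obj["Key"]
        for page in paginator.paginate(Bucket=bucket)
        for obj in page.get("Contents", [])
    ]
    for batch in batched(keys):
        s3.delete_objects(
            Bucket=bucket,
            Delete={"Objects": [{"Key": k} for k in batch], "Quiet": True},
        )
    return len(keys)
```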

In this post, you built a powerful multimodal search engine capable of handling both text and images using Amazon Titan Embeddings, Amazon Bedrock, Amazon Aurora PostgreSQL, and LangChain. You generated embeddings, stored the data in both FAISS vector databases and Amazon Aurora PostgreSQL, and developed applications for semantic text and image search.

Additionally, you deployed a serverless application using AWS CDK with Lambda Functions to integrate embedding and retrieval capabilities through events, providing a scalable solution.

Now you have the tools to create your own multimodal search engines, unlocking new possibilities for your applications. Explore the code, experiment, and share your experiences in the comments.

- Getting started with Amazon Bedrock, RAG, and Vector database in Python

- Building with Amazon Bedrock and LangChain

- How To Choose Your LLM

- Working With Your Live Data Using LangChain

See CONTRIBUTING for more information.

This library is licensed under the MIT-0 License. See the LICENSE file.