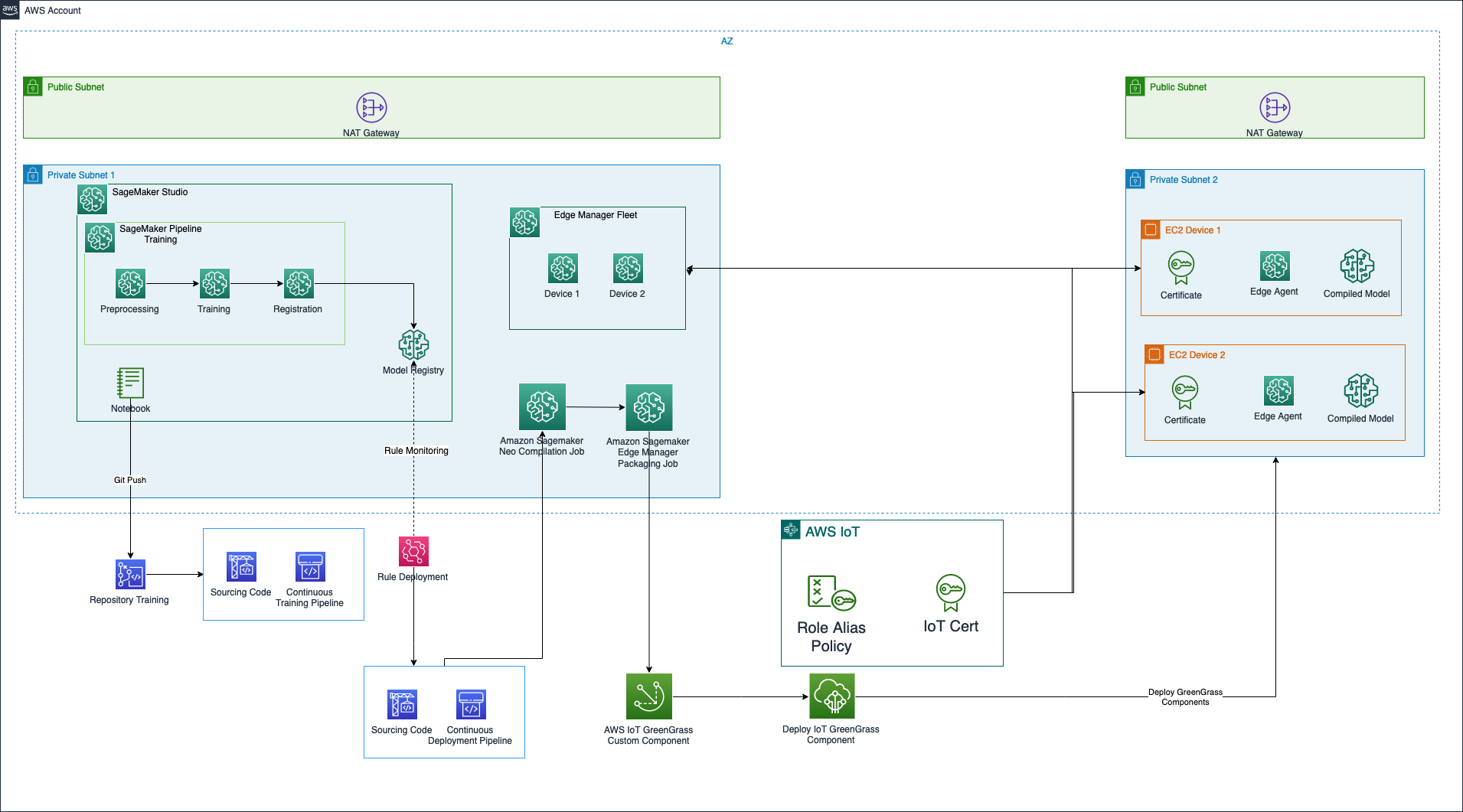

In this repository, we step through an end-to-end implementation of Machine Learning (ML) models with Amazon SageMaker. The deployment targets are simulated remote edge devices, managed with Amazon SageMaker Edge Manager and AWS IoT Greengrass.

This sample code repository shows how to organize the code for building and training your model. It starts from an implementation in notebooks and arrives at a code structure for an ML pipeline built with Amazon SageMaker Pipelines. It also shows how to set up a repository for deploying ML models through CI/CD.

The repository also includes CloudFormation templates that set up the ML environment by creating the SageMaker Studio environment, the networking resources, and the CI/CD for deploying ML models.

Everything can be tested with the example notebooks, which run training on SageMaker with the following frameworks:

Set up the ML environment by deploying the CloudFormation templates described below:

- 00-networking: This template creates the networking resources, such as the VPC, private subnets, and security groups, that provide a secure environment for Amazon SageMaker Studio. The variables needed by SageMaker Studio are stored in AWS Systems Manager (SSM).

- 01-sagemaker-studio: This template creates the SageMaker Studio environment, together with the execution role used during experimentation and when running SageMaker jobs. Parameters:

  - SageMakerDomainName: Name to assign to the Amazon SageMaker Studio domain. Mandatory

  - SecurityGroupId: Security group for Studio, if you want to use your own networking setup; otherwise, the value is read from AWS SSM after the 00-networking template is deployed. Optional

  - SubnetId: Subnet (public or private) for Studio, if you want to use your own networking setup; otherwise, the value is read from AWS SSM after the 00-networking template is deployed. Optional

  - VpcId: VPC ID for Studio, if you want to use your own networking setup; otherwise, the value is read from AWS SSM after the 00-networking template is deployed. Optional

- 02-ci-cd: This template creates the CI/CD pipelines with AWS CodeBuild and AWS CodePipeline. It creates two pipelines, each linked to its own AWS CodeCommit repository: one for training and one for deployment. The pipelines are triggered by pushes to the main branch or by the automation deployed in the next stack. Parameters:

  - AccountIdDev: AWS account ID where data scientists develop the ML code artifacts. If not specified, the current AWS account is used. Optional

  - PipelineSuffix: Suffix used when creating the CI/CD pipelines. Mandatory

  - RepositoryTrainingName: Name of the repository where the build and train code is stored. Mandatory

  - RepositoryDeploymentName: Name of the repository where the deployment code is stored. Mandatory

  - S3BucketArtifacts: Name of the Amazon S3 bucket, created in the next stack, used for storing code and model artifacts. Mandatory

- 03-ml-environment: This template creates the resources needed by the ML workflow: an Amazon S3 bucket for storing code and model artifacts, an Amazon SageMaker Model Registry model package group for versioning trained ML models, and an Amazon EventBridge rule that monitors updates in the SageMaker Model Registry and starts the CI/CD pipeline that deploys ML models to the production environments. Parameters:

  - AccountIdTooling: AWS account ID where the CI/CD is deployed. Can be empty if you deployed the complete stack; in this case, the value is read through SSM. Optional

  - CodePipelineDeploymentArn: ARN of the CodePipeline used for deploying ML models. Can be empty if you deployed the complete stack; in this case, the value is read through SSM. Optional

  - ModelPackageGroupDescription: Description of the Amazon SageMaker model package group. Can be empty. Optional

  - ModelPackageGroupName: Name of the Amazon SageMaker model package group where ML models are stored. Mandatory

  - S3BucketName: Name of the S3 bucket where ML model artifacts, code artifacts, and data are stored. Mandatory

- 04-ec2-device-fleet: This template creates the resources for a cloud digital twin, such as EC2 instances, a SageMaker Edge Manager device fleet, and IoT policies and roles. Optional parameters:

  - SageMakerStudioRoleName: IAM role used by SageMaker. If you deployed the entire stack, this value is read through SSM

  - S3BucketML: S3 bucket used for ML purposes. If you deployed the entire stack, this value is read through SSM
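Later templates read values that earlier stacks store in SSM, so the templates are meant to be deployed in numeric order. The following is a minimal sketch, not part of this repository, of doing that with boto3. The template file layout (`<template_dir>/<name>.yml`), the stack name prefix, and every parameter value are hypothetical placeholders, and `CAPABILITY_NAMED_IAM` assumes the templates create IAM roles with custom names.

```python
from pathlib import Path

# Deployment order matters: later templates read values (networking IDs,
# bucket name, pipeline ARN, ...) that earlier stacks store in SSM.
# All parameter values below are hypothetical placeholders.
BUCKET = "my-mlops-artifacts-bucket"
STACKS = [
    ("00-networking", {}),
    ("01-sagemaker-studio", {"SageMakerDomainName": "mlops-domain"}),
    ("02-ci-cd", {
        "PipelineSuffix": "edge",
        "RepositoryTrainingName": "mlops-build-train",
        "RepositoryDeploymentName": "mlops-deploy",
        "S3BucketArtifacts": BUCKET,  # bucket created later by 03-ml-environment
    }),
    ("03-ml-environment", {
        "ModelPackageGroupName": "wind-turbine-models",
        "S3BucketName": BUCKET,  # must match S3BucketArtifacts above
    }),
    ("04-ec2-device-fleet", {}),  # optional parameters are read from SSM
]


def to_cfn_parameters(params):
    """Convert a plain dict into CloudFormation's Parameters list format."""
    return [{"ParameterKey": k, "ParameterValue": v} for k, v in params.items()]


def deploy_all(cfn, template_dir, prefix="mlops"):
    """Create each stack in order, waiting for completion before the next one."""
    created = []
    for name, params in STACKS:
        stack_name = f"{prefix}-{name}"
        # Hypothetical file layout: <template_dir>/<name>.yml
        body = (Path(template_dir) / f"{name}.yml").read_text()
        cfn.create_stack(
            StackName=stack_name,
            TemplateBody=body,
            Parameters=to_cfn_parameters(params),
            # Assumption: the templates create IAM roles with custom names.
            Capabilities=["CAPABILITY_NAMED_IAM"],
        )
        # Block until CREATE_COMPLETE so the next stack can read this one's SSM values.
        cfn.get_waiter("stack_create_complete").wait(StackName=stack_name)
        created.append(stack_name)
    return created
```

With AWS credentials configured, a call could look like `deploy_all(boto3.client("cloudformation"), "cloudformation")`, where the `cloudformation` folder name is again an assumption. The same bucket name goes to `S3BucketArtifacts` in 02-ci-cd and to `S3BucketName` in 03-ml-environment, because the first parameter refers to the bucket the second stack creates.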

The code structure for building and training ML models is the following:

- algorithms: This folder stores the code used by the ML pipelines for processing data and training ML models

- algorithms/preprocessing: This folder contains the Python code for processing data with Amazon SageMaker Processing jobs

- algorithms/training: This folder contains the Python code for training a custom ML model with Amazon SageMaker Training jobs

- mlpipelines: This folder contains utility scripts from the official AWS example "Amazon SageMaker secure MLOps", together with the definition of the Amazon SageMaker Pipeline used for training

- mlpipelines/training: This folder contains the Python code for the ML pipelines used for training

- notebooks: This folder contains the lab notebooks for this workshop:

- notebooks/00-Data-Visualization: Explore the input data and test the processing scripts in the notebook

- notebooks/01-Training-with-Pytorch: End-to-end SageMaker approach: process the data with SageMaker Processing, train the ML model with SageMaker Training, register the trained model version in Amazon SageMaker Model Registry, and evaluate the model by generating inference data with Amazon SageMaker Batch Transform

- notebooks/02-SageMaker-Pipeline-Training: Define the workflow steps and test the entire end-to-end workflow with Amazon SageMaker Pipelines
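As an illustration of the kind of script that lives in `algorithms/preprocessing`, here is a minimal, hypothetical sketch that merges CSV files and writes a random train/test split. It is not the repository's script. The paths under `/opt/ml/processing/` are assumptions: they are whatever destinations and sources the pipeline's processing inputs and outputs declare. The CSV layout is also not taken from this repository. Only the standard library is used, so the functions can be exercised locally, for example from a notebook, before running them in a Processing Job.

```python
import argparse
import csv
import random
from pathlib import Path

# Hypothetical container paths; they must match the ProcessingInput
# destination and ProcessingOutput sources declared in the pipeline.
BASE_DIR = Path("/opt/ml/processing")


def read_rows(input_dir):
    """Read every CSV in input_dir, checking that all files share one header."""
    header, rows = None, []
    for path in sorted(Path(input_dir).glob("*.csv")):
        with open(path, newline="") as f:
            reader = csv.reader(f)
            file_header = next(reader)
            if header is None:
                header = file_header
            elif file_header != header:
                raise ValueError(f"{path.name} has a different header")
            rows.extend(reader)
    if header is None:
        raise FileNotFoundError(f"no CSV files found in {input_dir}")
    return header, rows


def split(rows, train_ratio, seed):
    """Shuffle a copy of rows with a fixed seed and cut it into train/test."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_ratio)
    return shuffled[:cut], shuffled[cut:]


def write_csv(path, header, rows):
    """Write header plus rows, creating the output folder if needed."""
    path.parent.mkdir(parents=True, exist_ok=True)
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(header)
        writer.writerows(rows)


def main(argv=None, base_dir=BASE_DIR):
    parser = argparse.ArgumentParser()
    parser.add_argument("--train-ratio", type=float, default=0.8)
    parser.add_argument("--seed", type=int, default=42)
    args = parser.parse_args(argv)

    header, rows = read_rows(base_dir / "input")
    train, test = split(rows, args.train_ratio, args.seed)
    write_csv(base_dir / "train" / "train.csv", header, train)
    write_csv(base_dir / "test" / "test.csv", header, test)
    return len(train), len(test)
```

Used as a Processing Job entry point, the file would end with the usual `if __name__ == "__main__": main()` guard, and `--train-ratio` and `--seed` would be passed as job arguments. The fixed seed keeps the split reproducible between pipeline executions.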

The code structure for deploying ML models to simulated edge devices is the following:

- algorithms: This folder stores the code used for creating the AWS IoT Greengrass inference component

- algorithms/inference: This folder contains the scripts for performing inference on the edge devices, as well as the recipe definition of the AWS IoT Greengrass component for inference

- mlpipelines: This folder contains utility scripts from the official AWS example "Amazon SageMaker secure MLOps", together with the definition of the script for performing compilation, packaging, and Greengrass deployment

- mlpipelines/deployment: This folder contains the Python code for running the SageMaker Neo compilation job, the SageMaker Edge Manager packaging job, and the Greengrass deployment of the model and inference components

- notebooks: This folder contains the lab notebooks for this workshop:

- notebooks/00-Package-using-ggv2: Explore how to use Amazon SageMaker Neo and Amazon SageMaker Edge Manager to create AWS IoT Greengrass components for the ML model read from the Amazon SageMaker Model Registry. The notebook also creates the AWS IoT Greengrass component for inference and the deployment component for the edge devices.

- notebooks/01-Run-simulated-fleet-ggv2: Run a wind turbine simulation and test the behavior of the deployed ML model

- notebooks/02-Pipeline-Deployment: Test the execution of the pipeline script before pushing it to the repository for CI/CD automation
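The packaging step starts from a model version stored in the Model Registry. Below is a minimal sketch of looking up the newest approved version with the boto3 SageMaker client calls `list_model_packages` and `describe_model_package`. It assumes that only `Approved` packages should be deployed and that the first inference container's `ModelDataUrl` is the artifact to compile. The function name is hypothetical, and the client is passed in so a stub can replace it in tests.

```python
def latest_approved_model(sm_client, group_name):
    """Return ARN, version and artifact URI of the newest approved model package.

    sm_client is a boto3 SageMaker client (or a stub with the same methods).
    Returns None when the group has no approved package.
    """
    response = sm_client.list_model_packages(
        ModelPackageGroupName=group_name,
        ModelApprovalStatus="Approved",  # assumption: only approved versions ship
        SortBy="CreationTime",
        SortOrder="Descending",  # newest first
        MaxResults=1,
    )
    summaries = response.get("ModelPackageSummaryList", [])
    if not summaries:
        return None

    summary = summaries[0]
    details = sm_client.describe_model_package(ModelPackageName=summary["ModelPackageArn"])
    containers = details.get("InferenceSpecification", {}).get("Containers", [])
    return {
        "arn": summary["ModelPackageArn"],
        "version": summary.get("ModelPackageVersion"),
        # S3 URI of the packaged model (model.tar.gz) produced by training
        "model_data_url": containers[0].get("ModelDataUrl") if containers else None,
    }
```

With credentials configured, a call could look like `latest_approved_model(boto3.client("sagemaker"), "<ModelPackageGroupName>")`. The returned `model_data_url` is the kind of `.tar.gz` S3 URI that a SageMaker Neo compilation job takes as `InputConfig["S3Uri"]`.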