This repository contains the code used to benchmark the IQ code LLMs.

- 🏃 Running the benchmarks

- 📈 Benchmark Goals

- 📂 Benchmark dataset structure

- 📥 Collection Process

- 📋 Evaluation process

- Install the dependencies: `pip install -r requirements.txt`

- Run the benchmarks: `python src/main.py`

The benchmark has the following goals:

- It should allow us to generate deterministic metrics for LLM performance on issue detection.

  Such as:

  - Overall score

  - Scores per issue category

  - Scores grouped by the size of the input contract

  - Scores grouped by issue impact

- It should be cost-effective and fast.
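
The metric breakdowns listed above could be computed along these lines. This is a minimal sketch, not the repository's implementation: the record fields (`category`, `impact`, `contract_lines`, `score`), the sample values, and the size thresholds are all assumptions made for illustration.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical per-issue results: 1.0 if the LLM detected the issue, else 0.0.
# Field names and values are illustrative, not taken from the real dataset.
results = [
    {"category": "reentrancy", "impact": "high", "contract_lines": 120, "score": 1.0},
    {"category": "reentrancy", "impact": "high", "contract_lines": 900, "score": 0.0},
    {"category": "access-control", "impact": "medium", "contract_lines": 120, "score": 1.0},
    {"category": "overflow", "impact": "low", "contract_lines": 2500, "score": 0.0},
]


def size_bucket(lines: int) -> str:
    """Map a contract's line count to a coarse size bucket (arbitrary thresholds)."""
    if lines < 200:
        return "small"
    if lines < 1000:
        return "medium"
    return "large"


def mean_by(rows, key_fn):
    """Average the scores of rows grouped by key_fn(row)."""
    groups = defaultdict(list)
    for row in rows:
        groups[key_fn(row)].append(row["score"])
    return {key: mean(scores) for key, scores in groups.items()}


report = {
    "overall": mean(r["score"] for r in results),
    "by_category": mean_by(results, lambda r: r["category"]),
    "by_size": mean_by(results, lambda r: size_bucket(r["contract_lines"])),
    "by_impact": mean_by(results, lambda r: r["impact"]),
}
print(report)
```

Because the aggregation is plain arithmetic over stored scores, rerunning it on the same results always yields the same report, which is what makes the metrics deterministic once the scores themselves are fixed.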

Nice to have:

- It should allow us to run the benchmark on different LLMs in parallel.
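
One way to run several LLMs in parallel is a thread pool with one worker per model. The sketch below assumes each model is reachable through a simple callable; `model_a`, `model_b`, `run_model`, and `run_all` are hypothetical names, and the stub functions stand in for real API clients.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical stand-ins for real model clients. Each takes contract source
# code and returns the model's raw issue report as text.
def model_a(contract_source: str) -> str:
    return f"model_a reviewed {len(contract_source)} characters"


def model_b(contract_source: str) -> str:
    return f"model_b reviewed {len(contract_source)} characters"


MODELS = {"model_a": model_a, "model_b": model_b}


def run_model(name: str, contracts: list[str]) -> tuple[str, list[str]]:
    """Run one model over every contract and return (model name, responses)."""
    return name, [MODELS[name](source) for source in contracts]


def run_all(contracts: list[str]) -> dict[str, list[str]]:
    """Run every registered model concurrently, one worker thread per model."""
    with ThreadPoolExecutor(max_workers=len(MODELS)) as pool:
        futures = [pool.submit(run_model, name, contracts) for name in MODELS]
        return dict(future.result() for future in futures)


responses = run_all(["contract A {}", "contract B { }"])
print(responses)
```

Threads are a reasonable fit if the model calls are network-bound, since the workers then spend most of their time waiting on responses rather than computing.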

The benchmark dataset should contain:

- Smart contract code. This can be flattened smart contract code.

- Smart contract issues. Each issue needs:

  - Location of the issue

  - Issue type (we need to maintain a list of all smart contract issue categories)

  - Impact of the issue

The dataset should be stored in a file format (we can store it in Parquet).
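
As a rough illustration of this structure, the sketch below models one dataset entry with dataclasses and flattens it into one row per issue. The class names, field names, category list, and impact levels are assumptions for illustration; the actual schema may differ.

```python
from dataclasses import dataclass, field

# Illustrative category and impact lists. The real, maintained lists are not
# given in this README, so these values are placeholders.
ISSUE_TYPES = frozenset({"reentrancy", "access-control", "integer-overflow"})
IMPACTS = frozenset({"high", "medium", "low"})


@dataclass
class Issue:
    location: str    # e.g. a function name or a line range
    issue_type: str  # must be one of ISSUE_TYPES
    impact: str      # must be one of IMPACTS

    def __post_init__(self):
        if self.issue_type not in ISSUE_TYPES:
            raise ValueError(f"unknown issue type: {self.issue_type}")
        if self.impact not in IMPACTS:
            raise ValueError(f"unknown impact: {self.impact}")


@dataclass
class BenchmarkEntry:
    contract_id: str
    source_code: str  # flattened smart contract code
    issues: list[Issue] = field(default_factory=list)


def to_rows(entries: list[BenchmarkEntry]) -> list[dict]:
    """Flatten entries into one row per issue, a shape that suits columnar files."""
    return [
        {
            "contract_id": entry.contract_id,
            "source_code": entry.source_code,
            "location": issue.location,
            "issue_type": issue.issue_type,
            "impact": issue.impact,
        }
        for entry in entries
        for issue in entry.issues
    ]


entry = BenchmarkEntry(
    contract_id="toy-001",
    source_code="contract Vault { /* ... */ }",
    issues=[
        Issue("withdraw()", "reentrancy", "high"),
        Issue("setOwner()", "access-control", "medium"),
    ],
)
rows = to_rows([entry])
# With pandas and pyarrow installed, pandas.DataFrame(rows).to_parquet("benchmark.parquet")
# would write these rows to a Parquet file.
```

Validating the issue type against a single maintained set keeps category names consistent across entries, which matters later when scores are grouped by category.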

We collect these types of smart contracts:

- Toy Problems: We collect toy smart contracts that are specifically designed to teach students about different Solidity vulnerabilities. These should be easy to gather, and since they often focus on one vulnerability at a time, we can evaluate LLMs for awareness of these vulnerabilities and rank them quite easily. We can collect these from https://solidity-by-example.org/hacks

- Real Contracts: We collect real smart contracts with vulnerabilities from the wild, which can have more than one vulnerability and are logic-intensive. We can collect around 10 to start with and keep adding more as we discover interesting contracts with hidden bugs that are not trivial for static analyzers to catch. We can collect these from code4rena audits.

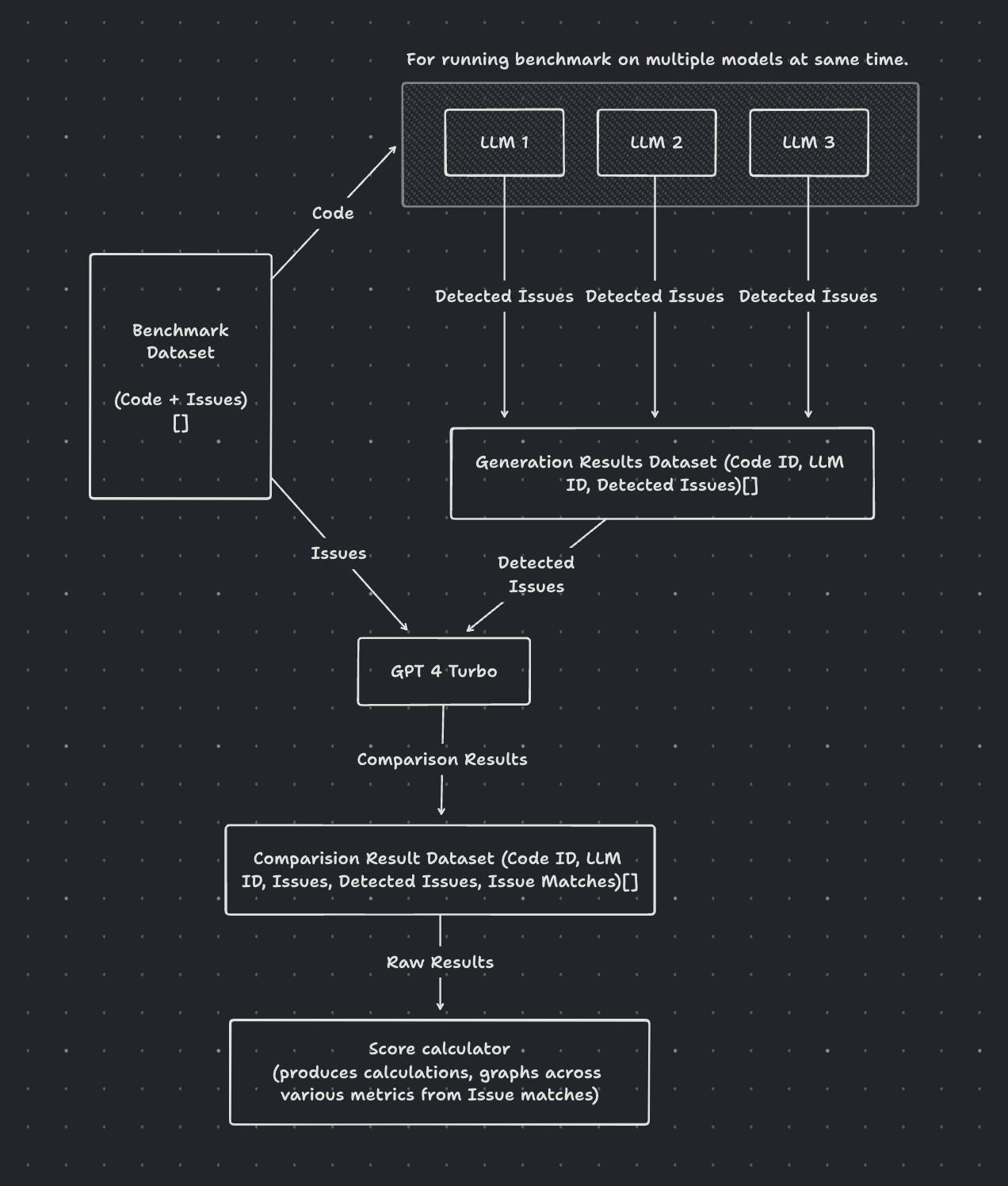

- We first generate the LLM responses on the smart contract code and gather them into their own dataset.

- We then use GPT-4 Turbo to compare the LLM responses with the issues list from our benchmark dataset. Since GPT-4 allows a large context size, we can do the comparison in batches to avoid rate limits.

- We parse the output from GPT-4 and store the scores based on various metrics.
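
The compare-and-parse steps above might look like the following sketch. It assumes the judge model is asked to reply with a JSON list of `{"contract_id", "score"}` objects; that reply format, the prompt wording, and the names `batched`, `build_prompt`, `evaluate`, and `fake_judge` are all hypothetical. The judge is passed in as a plain callable so that a stub can replace the real GPT-4 Turbo client.

```python
import json
from typing import Callable, Iterator


def batched(items: list, size: int) -> Iterator[list]:
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def build_prompt(batch: list[dict]) -> str:
    """Ask the judge to score each (LLM response, expected issues) pair."""
    instruction = (
        "For each item, compare the response with the expected issues and "
        'return a JSON list of objects with keys "contract_id" and "score" (0 to 1).'
    )
    return instruction + "\n" + json.dumps(batch)


def evaluate(pairs: list[dict], judge: Callable[[str], str], batch_size: int = 5) -> dict[str, float]:
    """Send pairs to the judge in batches and collect one score per contract."""
    scores = {}
    for batch in batched(pairs, batch_size):
        raw = judge(build_prompt(batch))  # one judge request per batch
        for item in json.loads(raw):
            scores[item["contract_id"]] = float(item["score"])
    return scores


calls = []  # records each prompt so the number of judge requests is visible


def fake_judge(prompt: str) -> str:
    """Stub judge for demonstration: gives every item in the batch a score of 1.0."""
    calls.append(prompt)
    batch = json.loads(prompt.split("\n", 1)[1])
    return json.dumps([{"contract_id": p["contract_id"], "score": 1.0} for p in batch])


pairs = [
    {"contract_id": f"c{i}", "response": "possible reentrancy in withdraw()",
     "expected_issues": ["reentrancy"]}
    for i in range(7)
]
scores = evaluate(pairs, fake_judge, batch_size=5)
print(len(calls), scores)
```

Grouping several comparisons into one request reduces the number of calls made to the judge, which is the lever for staying under request-based rate limits.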