
- Das Schiff Pure Metal - our approach to dynamic bare metal cluster provisioning

- Das Schiff Liquid Metal - an effort to optimize Pure Metal for edge and far edge deployments using microVM technology.

Das Schiff is a fully open-source-based engine, created and used in production at Deutsche Telekom Technik to offer distributed Kubernetes Cluster-as-a-Service (CaaS) at scale, on-prem, on top of VMs or bare-metal servers, to a wide variety of applications.

Telekom Deutschland is the leading integrated communications provider in Germany and part of the Deutsche Telekom Group. Deutsche Telekom Technik is a network technology (NT) department of Telekom Deutschland, in charge of providing the infrastructure that delivers communication services via its 17.5 million fixed-line connections and to its 48.8 million mobile customers.

As in any telco, NT departments do not run typical IT workloads. Instead, we run networks, network functions, service platforms and the associated management systems. Modern examples are 5G core, ORAN DU, remote UPF, BNG, IMS, IPTV etc. A large portion of these is geographically distributed due to the nature of communications technology (e.g. access nodes or mobile towers). Taking the full picture into account, including far edge locations, we are talking about a couple of thousand locations in Germany.

All of these workloads are rapidly transforming towards cloud native architectures and therefore require Kubernetes clusters to run in. Our challenge thus became: "How to manage thousands of Kubernetes clusters across hundreds of locations, on top of bare-metal servers or VMs, with a small, highly skilled SRE team and using only open-source software?"

Das Schiff is the engine for establishing and supervising autonomous cloud native infrastructure that is (self-)managed in a GitOps loop. Its main characteristics are:

- Multi-cluster (main basis and our approach to multi-tenancy)

- Multi-distribution (currently upstream kubeadm-based and EKS-D, but can support any K8s distribution installable via kubeadm)

- Multi-site (core, edge, far edge)

- Infrastructure-independent (supports bare metal as well as local IaaS, and can support public cloud as well)

- (Self-)managed in a GitOps loop

  - Using Git (a well-known tool) as the single source of truth

  - Declarative (intent-based)

  - Immutable (prevents configuration drift)

  - Auditable (trail via Git history)

To run Das Schiff you need a reliable Git platform (we use GitLab), a container and Helm chart registry (we use Harbor), and at least one of the following:

- For bare-metal K8s clusters: locations with pools of bare-metal servers that are managed via Redfish, with their BMC ports connected to a network that you can reach.

- For VM-based K8s clusters: an IaaS consumable via an API (e.g. vSphere, AWS, Azure, OpenStack etc.) that you can reach.

We believe that the best platforms are open. The success of Kubernetes and several other examples from the past confirms that. We believe that now is the time and the chance for telcos to build on Kubernetes as "the platform for building platforms" and to adopt modern DevOps practices of "continuous everything" in the production of their services. This requires the industry to adopt best practices in that area and to move away from the traditional "systems integrated" approach.

Therefore, we are working together with our friends from Weaveworks, the original creators of the GitOps movement, to make Das Schiff generalized and consumable by anybody who needs to manage a large number of Kubernetes clusters distributed across many locations. Our primary goal is to create an open, composable and flexible 5G/telco cloud native platform for production. However, it could be useful for anyone who needs to manage fleets of distributed Kubernetes clusters, such as retail, railway or industrial networks. The initiative is currently code-named K5G and we would be happy to join forces with all interested parties. While we are working on K5G, feel free to engage in the discussions.

The CNCF CNF-WG is an attempt to create momentum for transforming telco/CNF production, and we are actively contributing to it from the platform DevOps perspective.

Das Schiff relies on the following core building blocks:

- Cluster API (CAPI) and its providers (currently CAPV and Metal3)

- FluxCD v2 (most notably its Kustomize and Helm controllers)

- Das Schiff layered Git repo layout

- SOPS for the secrets stored in Git

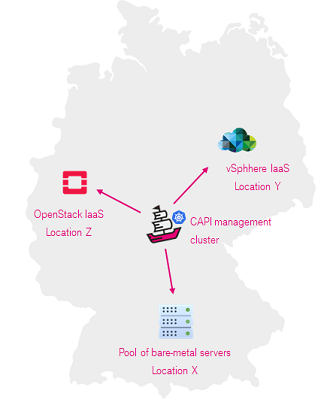

One CAPI management cluster manages workload clusters in multiple local or remote sites. For this purpose, it deploys multiple CAPI infrastructure providers and their instances.

Depending on the deployment scenario, there can be multiple management clusters that manage workload clusters in different regions or environments.

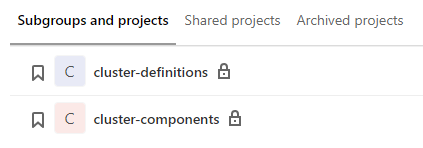

The definition of the entire infrastructure, including workload clusters, is stored in two main Git repos:

- The cluster-definitions repo contains CAPI manifests that define the workload clusters and is used by the management cluster for autonomous bootstrapping of workload clusters (CaaS).

- The cluster-components repo contains descriptions and configurations of applications that need to run in workload clusters as standard components (PaaS - Prometheus, Logstash, RBAC) and is used by workload clusters to set themselves up autonomously.

Git is used as the main management tool.

Each management cluster has FluxCD v2 deployed and configured to watch the cluster-definitions repo related to that management cluster. As part of bootstrapping, each workload cluster comes equipped with FluxCD v2 pointing to the cluster-components repo relevant for that cluster.

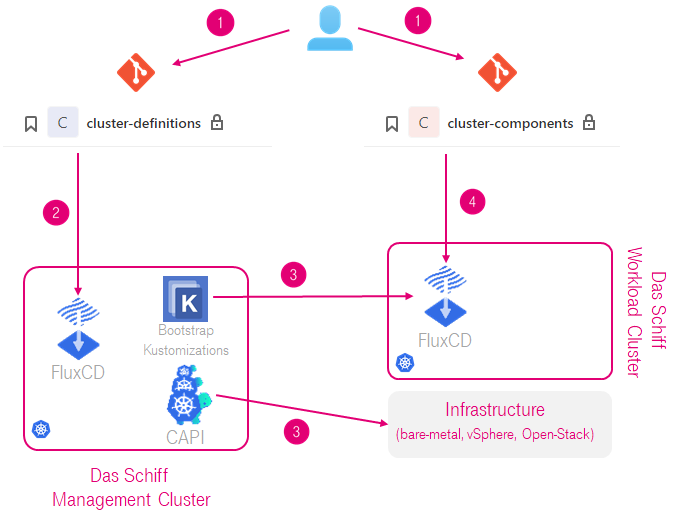

The main loop, which illustrates how Das Schiff combines the different building blocks, is depicted in the diagram.

Description of the loop, using workload cluster creation as an example:

- An admin pushes the definitions of the clusters and components to the repos.

- FluxCD in the management cluster detects the change in the desired state and reconciles it. In this example, it creates the corresponding CAPI objects and bootstrap Kustomizations.

- The CAPI objects do their job and, by communicating with the target infrastructure, create a tenant cluster. As soon as that cluster is available, the bootstrap Kustomizations deploy and configure the CNI and FluxCD in it and set FluxCD to watch the cluster's own config repo. This step also distributes the initial cryptographic material the cluster needs to decrypt its secrets from Git.

- FluxCD in the new tenant cluster starts reconciling the cluster with the desired state described in Git. After a short time, the cluster reaches the desired state, which is then maintained by the loop.

The same loop is used for any change to running clusters, although most changes are applied only by the in-cluster Flux.
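The loop described above follows the usual reconcile pattern: compare the desired state from Git with the actual state and act only on the difference. The following Python sketch shows that pattern in isolation. `ActualState`, `reconcile` and `run_until_converged` are hypothetical names standing in for what the CAPI and FluxCD controllers do; this is an illustration of the idea, not Das Schiff or Flux code.

```python
import copy
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ActualState:
    """Hypothetical stand-in for what actually runs (CAPI objects, in-cluster components)."""
    objects: Dict[str, dict] = field(default_factory=dict)


def reconcile(desired: Dict[str, dict], actual: ActualState) -> List[str]:
    """One reconcile pass: converge the actual state towards the desired state from Git.

    Objects that exist but are missing from Git are left alone, similar in spirit
    to `prune: false` in the sample bootstrap Kustomizations.
    """
    actions: List[str] = []
    for name in sorted(desired):
        spec = desired[name]
        current = actual.objects.get(name)
        if current is None:
            actions.append(f"create {name}")
        elif current != spec:
            actions.append(f"update {name}")  # also repairs manual drift
        else:
            continue  # already in the desired state
        # Store a deep copy so that later drift in the actual state never alters "Git".
        actual.objects[name] = copy.deepcopy(spec)
    return actions


def run_until_converged(desired: Dict[str, dict], actual: ActualState,
                        max_passes: int = 10) -> int:
    """Repeat reconcile passes (Flux does this every `interval`) until a pass is a no-op.

    Returns the number of passes it took, including the final no-op pass.
    """
    for passes in range(1, max_passes + 1):
        if not reconcile(desired, actual):
            return passes
    raise RuntimeError(f"no convergence after {max_passes} passes")
```

Calling `reconcile` again without a change in Git returns no actions, while a manual change to the actual state is reverted on the next pass. That self-healing property is what keeps a cluster in its desired state once the loop has converged.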

The main logic of the Das Schiff engine loops is built into the layered structure of its Git repos.

Here is the structure of the cluster-definitions repo:

```
cluster-definitions
|
+---schiff-management-cluster-x                             # Definitions of the clusters managed by this management cluster
|   |
|   +---self-definitions                                    # Each management cluster manages itself as well. These definitions are stored here.
|   |       Cluster.yml
|   |       Kubeadm-config.yml
|   |       machinedeployment-0.yml
|   |       machinehealthcheck.yml
|   |       MachineTemplates.yml
|   |
|   \---sites                                               # Each management cluster manages multiple sites
|       +---vsphere-site-1                                  # Definitions of the clusters in one site
|       |   +---customer_A                                  # Each site holds clusters of multiple customers, grouped per customer here
|       |   |   \---customer_A-workload-cluster-1           # Definitions of one workload cluster
|       |   |       |   bootstrap-kustomization.yaml        # Defines the initial components of the workload cluster: FluxCD and CNI
|       |   |       |   external-ccm.yaml                   # Contains the CCM, configured to run on the management cluster
|       |   |       |   Cluster.yml                         # This and the files below are standard CAPI and infrastructure provider definitions
|       |   |       |   Kubeadm-config.yml                  # ^^^
|       |   |       |   machinedeployment-0.yml             # ^^^
|       |   |       |   machinehealthcheck.yml              # ^^^
|       |   |       |   MachineTemplates.yml                # ^^^
|       |   |       |
|       |   |       \---secrets
|       |   |               external-ccm-secret.yaml        # Contains the credentials for the CCM
|       |   |
|       |   \---customer_B
|       |       \---customer_B-workload-cluster-1
|       |           |   # Same structure as above
|       |
|       |
|       \---baremetal-site-1
|           \---customer_A
|               \---customer_A-workload-cluster-1
|                   |   # Same structure as above
|
|
+---schiff-management-cluster-y                             # Here the domain of the second management cluster starts
|   +---self-definitions
|   |
|   \---sites
|       \---...                                             # Same structure as above
|
\---.sops.yaml                                              # Contains the rules to automatically encrypt all secrets in the secrets folders with the correct keys
```
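Because this layout is strictly positional, a file's path alone tells which management cluster, site, customer and workload cluster it belongs to. A minimal Python sketch, assuming repo-relative paths that follow the tree above exactly; `DefinitionRef`, `parse_definition_path` and `workload_clusters` are hypothetical helpers, and the `in_secrets_folder` flag only mirrors the intent of the `.sops.yaml` rules rather than evaluating them.

```python
from dataclasses import dataclass
from pathlib import PurePosixPath
from typing import Dict, Iterable, Optional, Set


@dataclass(frozen=True)
class DefinitionRef:
    """Where a file in cluster-definitions belongs (hypothetical helper type)."""
    management_cluster: str
    site: Optional[str]      # None for self-definitions
    customer: Optional[str]  # None for self-definitions
    cluster: str
    in_secrets_folder: bool  # files the .sops.yaml rules are meant to encrypt


def parse_definition_path(path: str) -> Optional[DefinitionRef]:
    """Map a repo-relative file path onto the layout; None if it does not fit."""
    parts = PurePosixPath(path).parts
    if len(parts) >= 3 and parts[1] == "self-definitions":
        # A management cluster also manages itself.
        return DefinitionRef(parts[0], None, None, parts[0], False)
    if len(parts) >= 6 and parts[1] == "sites":
        # <mgmt>/sites/<site>/<customer>/<cluster>/[subdirs/]<file>
        mgmt, _, site, customer, cluster = parts[:5]
        return DefinitionRef(mgmt, site, customer, cluster, "secrets" in parts[5:-1])
    return None


def workload_clusters(paths: Iterable[str]) -> Dict[str, Set[str]]:
    """Group workload clusters by the management cluster that owns them."""
    owned: Dict[str, Set[str]] = {}
    for p in paths:
        ref = parse_definition_path(p)
        if ref is not None and ref.site is not None:
            owned.setdefault(ref.management_cluster, set()).add(ref.cluster)
    return owned
```

Feeding the output of `git ls-files` into `workload_clusters` would, under these assumptions, list the workload clusters each management cluster is responsible for.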

Besides applying the standard CAPI manifests, including the manifests of the infrastructure providers, FluxCD in the management cluster creates a set of so-called bootstrap Kustomizations. They represent the link between the cluster-definitions and cluster-components repositories. Bootstrap Kustomizations are responsible for deploying FluxCD and the CNI, as defined in the cluster-definitions repo, into a newly created workload cluster in order to hand control over to it.

Initially, the bootstrap Kustomizations fail because the workload cluster does not exist yet. As soon as the workload cluster is available, they reconcile it and the loop continues. After that, the sole purpose of these Kustomizations is to repair or re-apply FluxCD in the workload cluster if it fails for any reason.
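Since every workload cluster gets the same pair of bootstrap Kustomizations, they can be generated from the location, site and cluster name. The Python sketch below builds them as plain dictionaries mirroring the fields of the sample bootstrap-kustomization.yaml; `bootstrap_kustomizations` and `dns1123` are hypothetical helpers. One deliberate difference: Kubernetes object names must be lowercase RFC 1123 names, so the sketch normalises placeholders such as `customer_A` in object names while keeping the Git paths unchanged. That normalisation is an assumption of this sketch.

```python
import copy
import re
from typing import Dict, List


def dns1123(name: str) -> str:
    """Lowercase a placeholder and replace characters not allowed in object names with '-'."""
    return re.sub(r"[^a-z0-9.-]", "-", name.lower())


def bootstrap_kustomizations(location: str, site: str, cluster: str) -> List[Dict]:
    """Build the two bootstrap Kustomizations for one workload cluster (hypothetical helper)."""
    ns = f"{location}-{site}"
    common_spec = {
        "interval": "5m",
        "prune": False,
        "suspend": False,
        "sourceRef": {
            "kind": "GitRepository",
            "name": f"locations-{ns}-main",
            "namespace": "schiff-system",
        },
        "decryption": {"provider": "sops", "secretRef": {"name": "sops-gpg-schiff-cp"}},
        "timeout": "2m",
        # CAPI stores the workload cluster's kubeconfig in a Secret named <cluster>-kubeconfig.
        "kubeConfig": {"secretRef": {"name": dns1123(f"{cluster}-kubeconfig")}},
    }
    # Suffix of the object name -> path in the cluster-components repo (paths keep the original names).
    targets = {
        "cluster": f"./locations/{location}/{site}/{cluster}",
        "default-namespaces": "./default/components",
    }
    docs = []
    for suffix, path in targets.items():
        spec = copy.deepcopy(common_spec)  # independent copies, no shared nested dicts
        spec["path"] = path
        docs.append({
            "apiVersion": "kustomize.toolkit.fluxcd.io/v1beta1",
            "kind": "Kustomization",
            "metadata": {"name": dns1123(f"bootstrap-{ns}-{cluster}-{suffix}"), "namespace": ns},
            "spec": spec,
        })
    return docs
```

Each dictionary can then be serialised to YAML (for example with PyYAML's `safe_dump_all`) and committed next to the CAPI manifests of the cluster.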

A sample bootstrap-kustomization.yaml is given below.

```yaml
---
apiVersion: kustomize.toolkit.fluxcd.io/v1beta1
kind: Kustomization
metadata:
  name: bootstrap-location-1-site-1-customer_A-workload-cluster-1-cluster
  namespace: location-1-site-1
spec:
  interval: 5m
  path: "./locations/location-1/site-1/customer_A-workload-cluster-1"
  prune: false
  suspend: false
  sourceRef:
    kind: GitRepository
    name: locations-location-1-site-1-main
    namespace: schiff-system
  decryption:
    provider: sops
    secretRef:
      name: sops-gpg-schiff-cp
  timeout: 2m
  kubeConfig:
    secretRef:
      name: customer_A-workload-cluster-1-kubeconfig
---
apiVersion: kustomize.toolkit.fluxcd.io/v1beta1
kind: Kustomization
metadata:
  name: bootstrap-location-1-site-1-customer_A-workload-cluster-1-default-namespaces
  namespace: location-1-site-1
spec:
  interval: 5m
  path: "./default/components"
  prune: false
  suspend: false
  sourceRef:
    kind: GitRepository
    name: locations-location-1-site-1-main
    namespace: schiff-system
  decryption:
    provider: sops
    secretRef:
      name: sops-gpg-schiff-cp
  timeout: 2m
  kubeConfig:
    secretRef:
      name: customer_A-workload-cluster-1-kubeconfig
```

The cluster-components repository has a layered structure that is used to create the overlaid Kustomizations that get applied to a particular cluster.

The Git directories referenced by these Kustomizations mostly contain the following:

- Plain manifests for namespaces, CNI, RBAC etc.

- HelmReleases for the components that are installed in the cluster (e.g. Prometheus, CSI etc.)

- ConfigMaps with values for HelmReleases

- Further Kustomizations

- GitRepositories, HelmRepositories
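The cluster-components structure keeps one directory per kind of object (namespaces, configmaps, helmreleases and so on). A small Python sketch of that convention, assuming the mapping below, which is inferred from the directory names in the tree rather than taken from Das Schiff tooling; `KIND_TO_DIR` and `target_dir` are hypothetical.

```python
from typing import Dict

# Directory names used in the cluster-components tree, keyed by object kind.
# "Kustomization" here means the FluxCD kind (kustomize.toolkit.fluxcd.io).
KIND_TO_DIR: Dict[str, str] = {
    "Namespace": "namespaces",
    "ClusterRole": "clusterroles",
    "ClusterRoleBinding": "clusterrolebindings",
    "ServiceAccount": "serviceaccounts",
    "CustomResourceDefinition": "crds",
    "NetworkPolicy": "networkpolicies",
    "Service": "services",
    "ConfigMap": "configmaps",
    "Secret": "secrets",
    "HelmRelease": "helmreleases",
    "HelmRepository": "helmrepositories",
    "GitRepository": "gitrepositories",
    "Kustomization": "kustomizations",
    "ServiceMonitor": "servicemonitors",
    "PodMonitor": "podmonitors",
}


def target_dir(layer: str, manifest: dict) -> str:
    """Return the directory inside a layer where a manifest of this kind would live."""
    kind = manifest.get("kind")
    if kind not in KIND_TO_DIR:
        raise KeyError(f"no directory convention for kind {kind!r}")
    return f"{layer}/{KIND_TO_DIR[kind]}"
```

A check like this could run in CI to flag manifests committed to an unexpected directory, although nothing in Das Schiff is stated to require it.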

The Kustomizations are applied in order from the most general, contained in default/components and valid for all clusters, up to the most specific for a particular cluster, contained in locations.

This lets us manage groups of clusters in a fairly granular way:

- Default - for all clusters under management

- Customers - for all clusters of an internal customer, either in all environments or in a particular environment

- Environments - for all clusters in an environment like Dev, Test, Reference or Production

- Providers - for all clusters created via a particular CAPI provider, either in all environments or in a particular environment

- Network zones - for all clusters in a network segment (important since CSP networks are usually very segmented and diverse)

- Locations - for all clusters in a particular location and site, or for individual clusters
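This ordering can be expressed as a list of cluster-components directories per cluster. A minimal Python sketch, assuming the cluster-components layout shown below and the order of the list above. The text only fixes that default/components comes first and locations last, so the relative order of the middle layers is an assumption, as are the names `ClusterCoordinates` and `layer_paths`.

```python
from dataclasses import dataclass
from typing import List

# Environment names as they appear in the cluster-components tree.
ENVIRONMENTS = ("dev", "tst", "ref", "prd")


@dataclass(frozen=True)
class ClusterCoordinates:
    """Hypothetical description of where one workload cluster sits in the layers."""
    customer: str
    environment: str
    provider: str
    network_zone: str
    location: str
    site: str
    cluster: str


def layer_paths(c: ClusterCoordinates) -> List[str]:
    """Return cluster-components directories from most general to most specific."""
    if c.environment not in ENVIRONMENTS:
        raise ValueError(f"unknown environment: {c.environment}")
    return [
        "default/components",                                   # all clusters
        f"customers/{c.customer}/default",                      # one customer, all environments
        f"customers/{c.customer}/{c.environment}",              # one customer, one environment
        f"environments/{c.environment}",                        # all clusters in the environment
        "providers/default",                                    # all CAPI providers
        f"providers/{c.provider}/default",                      # one provider, all environments
        f"providers/{c.provider}/{c.environment}",              # one provider, one environment
        f"network-zones/environment-defaults/{c.environment}",  # plain manifests per environment
        f"network-zones/{c.network_zone}",                      # overlays for one network segment
        f"locations/{c.location}/{c.site}/{c.cluster}",         # this cluster only
    ]
```

Because later entries are more specific, a change committed under locations affects a single cluster, while a change under default/components reaches every cluster under management.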

Here is the corresponding structure of the cluster-components repo:

```
cluster-components
+---customers                           # Defaults per customer and environment
|   +---customer_A
|   |   +---default                     # Defaults for customer_A for all environments
|   |   |   +---configmaps
|   |   |   +---gitrepositories
|   |   |   +---kustomizations
|   |   |   \---namespaces
|   |   +---prd                         # Specific config for customer_A per environment
|   |   +---ref
|   |   \---tst
|   \---customer_B
|           ...                         # Same as above
+---default                             # General defaults valid for all customers and environments
|   \---components
|       +---core
|       |   +---clusterroles
|       |   +---configmaps
|       |   \---namespaces
|       \---monitoring
|           +---configmaps
|           +---grafana
|           |   +---dashboards
|           |   |   +---flux-system
|           |   |   +---kube-system
|           |   |   \---monitoring-system
|           |   \---datasources
|           +---namespaces
|           +---prometheus
|           |   +---alerts
|           |   +---rules
|           |   \---servicemonitors
|           \---services
+---environments                        # General defaults per environment
|   +---dev
|   |   +---components
|   |   |   +---core
|   |   |   |   +---configmaps
|   |   |   |   \---helmreleases
|   |   |   \---monitoring
|   |   |       +---crds
|   |   |       |   +---grafana-operator
|   |   |       |   |   \---crds
|   |   |       |   \---prometheus-operator
|   |   |       |       \---crds
|   |   |       \---helmreleases
|   |   +---configmaps
|   |   +---helmrepositories
|   |   \---podmonitors
|   +---prd
|   |       ...                         # Same as above
|   +---ref
|   |       ...                         # Same as above
|   \---tst
|           ...                         # Same as above
+---locations                           # Cluster-specific configs
|   +---location-1
|   |   \---site-1
|   |       +---customer_A-workload-cluster-1
|   |       |   +---cni
|   |       |   |   +---clusterrolebindings
|   |       |   |   +---clusterroles
|   |       |   |   +---configmaps
|   |       |   |   +---crds
|   |       |   |   \---serviceaccounts
|   |       |   +---configmaps
|   |       |   +---gitrepositories
|   |       |   +---kustomizations
|   |       |   \---secrets
|   |       \---customer_B-workload-cluster-1
|   |               ...                 # Same as above
|   \---location-2
|           ...                         # Same as above
+---network-zones
|   +---environment-defaults            # Contains the plain manifests for each environment
|   |   +---dev
|   |   |   +---clusterrolebindings
|   |   |   +---clusterroles
|   |   |   +---crds
|   |   |   +---networkpolicies
|   |   |   +---serviceaccounts
|   |   |   \---services
|   |   +---prd
|   |   |       ...                     # Same as above
|   |   +---ref
|   |   |       ...                     # Same as above
|   |   \---tst
|   |           ...
|   +---network-segment-1               # Specific config for network segment 1
|   |       ...                         # Contains the kustomize overlays used to modify the base manifests for each environment
|   \---network-segment-2
|           ...                         # Same as above
\---providers                           # CAPI provider defaults and specific configs per environment
    +---default
    +---metal3
    |   +---default
    |   |   +---configmaps
    |   |   \---namespaces
    |   +---dev
    |   |   \---helmreleases
    |   +---prd
    |   |   \---helmreleases
    |   +---ref
    |   |   +---crds
    |   |   +---helmreleases
    |   |   \---helmrepositories
    |   \---tst
    |       \---helmreleases
    \---vsphere
            ...                         # Same as above
```

A concrete example of a cluster-components repo can be found here: https://github.com/telekom/das-schiff/tree/main/cluster-components

- Das Schiff at Cluster API Office Hours Apr 15th, 2020

- Talking about Das Schiff in Semaphore Uncut with Darko Fabijan

- Talking about Das Schiff in Art of Modern Ops with Cornelia Davis

- KubeCon Europe 2021 Keynote: How Deutsche Telekom built Das Schiff to sail Cloud Native Seas

Copyright (c) 2020 Deutsche Telekom AG.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with the License.

You may obtain a copy of the License at https://www.apache.org/licenses/LICENSE-2.0.

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the LICENSE for the specific language governing permissions and limitations under the License.