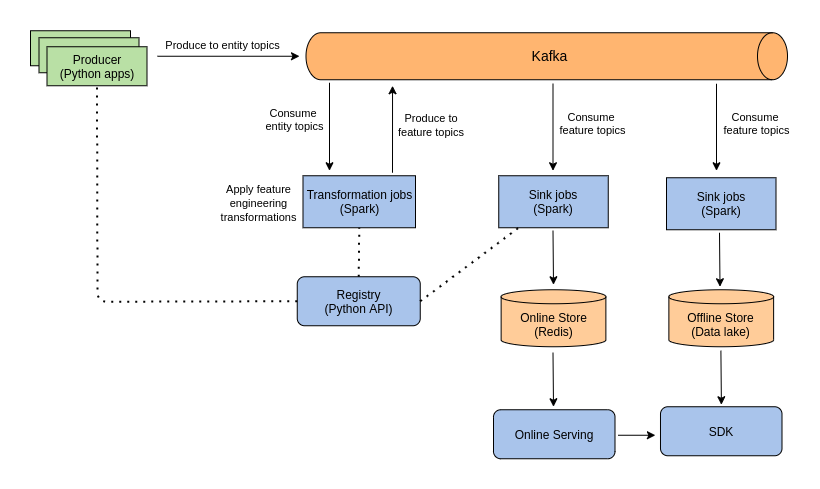

A feature store provides a centralized location to store and document features that are used in machine learning models and can be shared across projects. The image presents an ideal architecture proposed for a streaming feature store that calculates features on a streaming platform and makes them available to data scientists in dev and prod environments.

The solution currently implemented contains only the following components:

- Producer: applications producing entities to Kafka.

- Registry: repository of the schemas created in the pipeline stages.

- Kafka: streaming platform used to enable a high-performance data pipeline.

- Transformations: Spark jobs that transform the entities produced by applications into features used by ML models.

- Sinks: applications that consume the feature topics and ingest them into the online store.

- Redis: in-memory data structure store, used as an online storage layer.
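The flow between these components can be sketched in plain Python. This is only an illustration of the stages, not the project's code: the `Order` entity, its fields, the `customer:<id>` key format, and the two features are all hypothetical, a list stands in for a Kafka topic, and a dict-backed class stands in for Redis.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    """Hypothetical entity that a producer might publish to an entity topic."""
    customer_id: str
    amount: float


class OnlineStore:
    """Dict-backed stand-in for Redis: one hash of features per entity key."""

    def __init__(self):
        self._hashes = {}

    def hset(self, key, mapping):
        # Merge the new feature values into the hash stored under `key`.
        self._hashes.setdefault(key, {}).update(mapping)

    def hgetall(self, key):
        # Return a copy of the hash, or an empty dict for an unknown key.
        return dict(self._hashes.get(key, {}))


def transform(orders):
    """Transformation stage: fold order entities into per-customer features."""
    totals = defaultdict(lambda: {"order_count": 0, "total_amount": 0.0})
    for order in orders:
        feats = totals[order.customer_id]
        feats["order_count"] += 1
        feats["total_amount"] += order.amount
        # Emit the updated feature row, as a streaming job would emit
        # a record to a feature topic.
        yield order.customer_id, dict(feats)


def sink(feature_rows, store):
    """Sink stage: write each feature row to the online store."""
    for customer_id, feats in feature_rows:
        store.hset(f"customer:{customer_id}", feats)


# A list stands in for the entity topic filled by a producer.
entity_topic = [Order("c1", 10.0), Order("c2", 5.0), Order("c1", 2.5)]
store = OnlineStore()
sink(transform(entity_topic), store)
print(store.hgetall("customer:c1"))  # {'order_count': 2, 'total_amount': 12.5}
```

In the real pipeline each stage runs as a separate service and communicates through Kafka topics, so the stages can be scaled and restarted independently; the sketch only shows the direction of the data flow.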

To run the project, you need:

- Docker >= 1.13.0

- Docker Compose >= 3

- make (making your life easier with makefiles)

This project can be easily started using the command below.

`make start`

This command can take a few minutes, since the Docker images are either pulled or built for the following services: Zookeeper, Kafka, Kafdrop, Redis, Transformations, and Sinks. You can follow the service logs using the command below.

`make logs`

After the services are running, you can access the management UI to make sure everything is fine.
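Besides the management UI, a quick reachability check can confirm that the services accept connections. The sketch below assumes each service is exposed on `localhost` at its usual default port (Zookeeper 2181, Kafka 9092, Kafdrop 9000, Redis 6379); the project's compose file may map them differently, so adjust `ASSUMED_PORTS` as needed. The function names are illustrative and not part of the project.

```python
import socket


def port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unresolvable hosts.
        return False


# Assumption: default ports for each service, not taken from the project.
ASSUMED_PORTS = {"Zookeeper": 2181, "Kafka": 9092, "Kafdrop": 9000, "Redis": 6379}


def check_services(ports=ASSUMED_PORTS, host="localhost"):
    """Map each service name to whether its port accepts TCP connections."""
    return {name: port_open(host, port) for name, port in ports.items()}


if __name__ == "__main__":
    for name, up in check_services().items():
        print(f"{name}: {'reachable' if up else 'unreachable'}")
```

An open port only shows that the container is listening; it does not prove that the service has finished starting, so the logs and the management UI remain the better indicators.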

Once everything is fine, let's run the producers.

`make produce-orders`