Official repo for Trial and Error: Exploration-Based Trajectory Optimization for LLM Agents

Authors: Yifan Song, Da Yin, Xiang Yue, Jie Huang, Sujian Li, Bill Yuchen Lin.

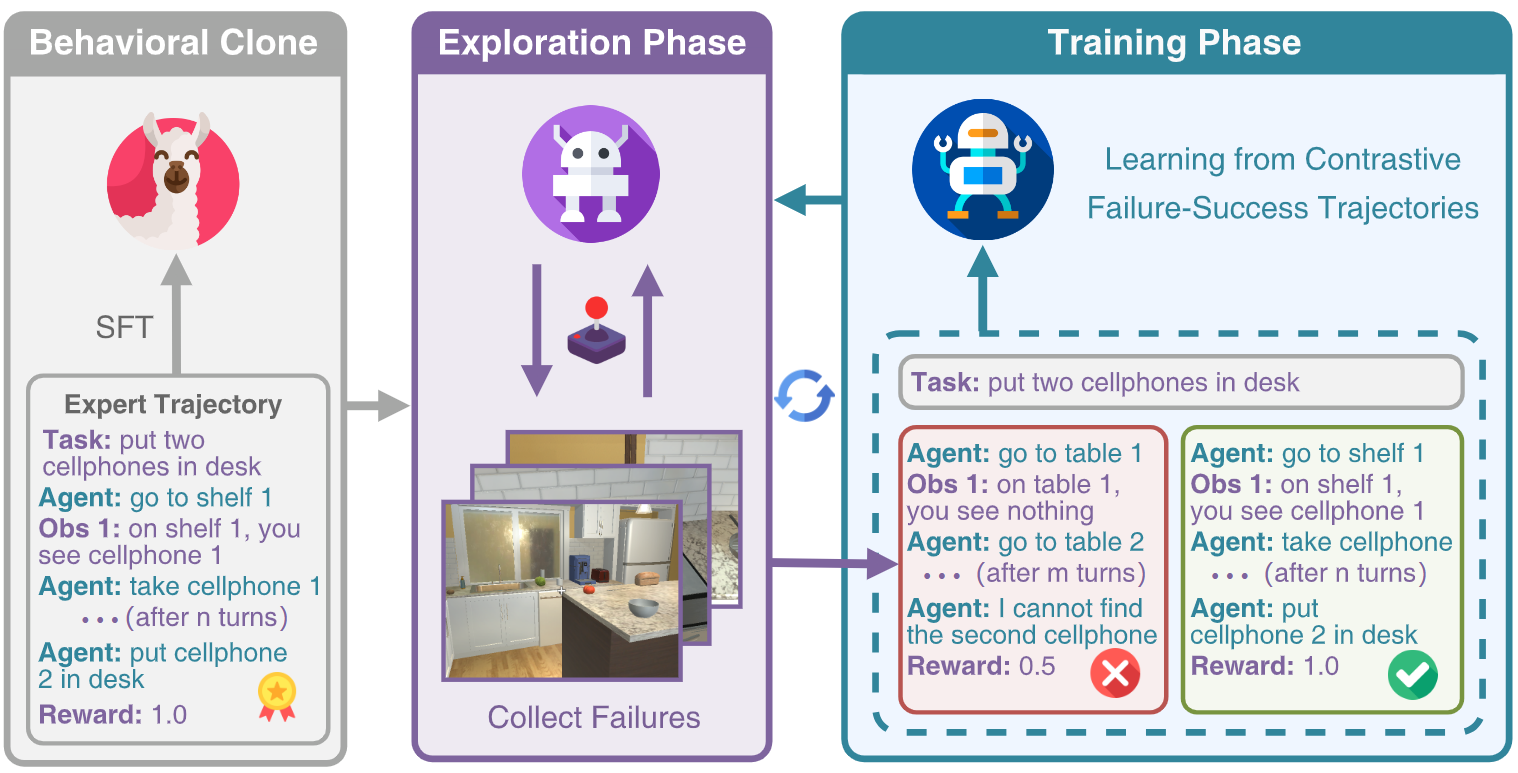

We introduce ETO (Exploration-based Trajectory Optimization), an agent learning framework inspired by the "trial and error" process of human learning. ETO allows an LLM agent to iteratively collect failure trajectories and update its policy by learning from contrastive failure-success trajectory pairs.

ETO has the following features:

- 🕹️ Learning by Trial and Error

  - 🎲 Learning from Failure Trajectories. Contrary to previous approaches that exclusively train on successful expert trajectories, ETO allows agents to learn from their exploration failures.

  - 🎭 Contrastive Trajectory Optimization. ETO applies DPO loss to perform policy learning from failure-success trajectory pairs.

  - 🌏 Iterative Policy Learning. ETO can be expanded to multiple rounds for further policy enhancement.

- 🎖️ Superior Performance

  - ⚔️ Effectiveness on Three Datasets. ETO significantly outperforms strong baselines, such as RFT and PPO, on WebShop, ScienceWorld, and ALFWorld.

  - 🦾 Generalization on Unseen Scenarios. ETO demonstrates an impressive performance improvement of 22% over SFT on the challenging out-of-distribution test set in ScienceWorld.

  - ⌛ Task-Solving Efficiency. ETO achieves higher rewards within fewer action steps on ScienceWorld.

  - 💡 Potential in Extreme Scenarios. ETO shows better performance in self-play scenarios where expert trajectories are not available.
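The contrastive objective mentioned above can be illustrated numerically. The sketch below computes the standard DPO loss for a single failure-success pair, assuming the summed log-probabilities of each trajectory under the policy and under a frozen reference model are already available. The function name, argument names, and the default `beta` are illustrative assumptions and are not taken from this repo's training code.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Standard DPO loss for one (chosen, rejected) pair.

    Each argument is the summed log-probability of a whole trajectory
    (hypothetical inputs; a real trainer computes them from model logits).
    """
    # Log-ratio of policy to reference for each trajectory.
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_logratio - rejected_logratio)
    # -log(sigmoid(margin)) == log(1 + exp(-margin)), computed stably.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))
```

When the policy equals the reference model, the margin is zero and the loss is `log(2)`; the loss falls as the policy assigns relatively more probability to the success trajectory than to the failure trajectory.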

- Upload the expert trajectories and the agent exploration trajectories on HuggingFace

There are three main folders in this project: `envs`, `eval_agent`, and `fastchat`.

`envs`: the interaction environments for WebShop and ScienceWorld. We transform the original WebShop repo into a package.

`eval_agent`: the evaluation framework for agent tasks, inspired by MINT.

`fastchat`: training scripts for SFT and DPO, modified from FastChat.

Run the setup script:

    bash setup.sh

The setup script performs the following actions:

- Install Python dependencies for agent training, deployment, evaluation, and the environments for WebShop, ScienceWorld, and ALFWorld

- Download data and search engine indices for WebShop

- Download game files for ALFWorld

- Download expert trajectories for behavioral cloning

The bash script `run_eto.sh` implements the ETO pipeline. For example, you can run:

    # Optional tasks: webshop, sciworld, alfworld
    bash run_eto.sh webshop <EXP_NAME> <YOUR_MODEL_PATH> <YOUR_SAVE_PATH>

The script performs the following steps of the ETO pipeline:

1. SFT phase: use the expert trajectories to conduct SFT and obtain the base agent

2. Evaluate the SFT agent

   - Launch the FastChat controller

   - Launch the FastChat model worker

   - Run the evaluation

   - Kill the model worker. The controller will be reused in the following steps

3. Launch multiple FastChat model workers and let the base agent explore the environment in parallel

4. Build contrastive failure-success trajectory pairs

5. Conduct DPO training to learn from the mistakes

6. Evaluate the DPO agent

7. Repeat steps 3-6 to iteratively update the policy
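The pair-building step can be sketched as follows. This is a minimal illustration and not the repo's actual script: it assumes each explored trajectory and its expert counterpart come with a scalar reward, that the higher-reward trajectory becomes `chosen` and the lower-reward one `rejected`, and that ties are skipped. The `build_pair` function and its arguments are hypothetical; only the `prompt`, `chosen`, and `rejected` field names come from the DPO data example in this README.

```python
from typing import Dict, List, Optional

Turn = Dict[str, str]  # e.g. {"from": "human", "value": "..."}


def build_pair(task_id: str,
               prompt: List[Turn],
               explored: List[Turn], explored_reward: float,
               expert: List[Turn], expert_reward: float) -> Optional[dict]:
    """Return one DPO record, or None when the rewards carry no preference.

    `prompt` holds the shared turns; `explored` and `expert` hold the turns
    that follow it in the agent's and the expert's trajectory respectively.
    """
    if explored_reward == expert_reward:
        return None  # assumption: a tie gives no contrastive signal
    if explored_reward < expert_reward:
        chosen, rejected = expert, explored
    else:
        chosen, rejected = explored, expert
    return {"id": task_id, "prompt": prompt,
            "chosen": chosen, "rejected": rejected}
```

A record built this way for every explored task can be collected into a JSON list and passed to DPO training.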

To evaluate an agent, first launch the FastChat controller:

    python -m fastchat.serve.controller

Then, launch the FastChat model worker:

    python -m fastchat.serve.model_worker --model-path <YOUR_MODEL_PATH> --port 21002 --worker-address http://localhost:21002

Finally, evaluate the agent:

    python -m eval_agent.main --agent_config fastchat --model_name <YOUR_MODEL_NAME> --exp_config <TASK_NAME> --split test --verbose

To add a new task to the evaluation framework:

- Implement your task loader in `eval_agent/tasks`. You should implement the `load_tasks` method, which returns a task generator.

- Implement the corresponding environment in `eval_agent/envs`. The environment should parse the action generated by the LLM agent, execute the action, and return the observation. Tool/API calling should also be implemented in the environment.

- Write the instruction prompt and ICL examples in `eval_agent/prompt`. The default setting is 1-shot evaluation.

- Write a new task config in `eval_agent/configs/task`. The config defines which task class and environment class to load, and the settings of the environment (e.g., max action steps).
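As a rough illustration of the first two steps, the sketch below pairs a task loader with a toy environment. It is self-contained and does not use the repo's base classes: `EchoTask`, `EchoEnv`, the `Action: answer[...]` syntax, and the `step` return signature are all hypothetical. Only the `load_tasks` name, the generator behaviour, and the idea of a max-action-steps setting come from the description above.

```python
import re
from dataclasses import dataclass
from typing import Iterator, Tuple


@dataclass
class EchoTask:
    task_id: str
    instruction: str
    answer: str

    @classmethod
    def load_tasks(cls, split: str) -> Iterator["EchoTask"]:
        """Yield tasks one by one (a generator), here from in-memory toy data."""
        data = {"test": [("t0", "What is 2 + 3?", "5")]}
        for task_id, instruction, answer in data.get(split, []):
            yield cls(task_id, instruction, answer)


class EchoEnv:
    def __init__(self, task: EchoTask, max_steps: int = 3):
        self.task = task
        self.max_steps = max_steps
        self.steps = 0

    def step(self, llm_output: str) -> Tuple[str, float, bool]:
        """Parse the agent's action, execute it, and return (observation, reward, done)."""
        self.steps += 1
        match = re.search(r"Action:\s*answer\[(.*?)\]", llm_output)
        if match is None:
            observation, reward, done = "Invalid action. Use: Action: answer[<text>]", 0.0, False
        else:
            correct = match.group(1).strip() == self.task.answer
            observation = "Correct." if correct else "Wrong answer."
            reward, done = (1.0 if correct else 0.0), True
        if self.steps >= self.max_steps:
            done = True  # stop once the step budget is used up
        return observation, reward, done
```

In a real task, the values hard-coded here (data source, step budget) would come from the task config rather than from the class itself.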

The SFT training data uses the following format:

    [
      {
        "id": "example_0",
        "conversations": [
          {"from": "human", "value": "Who are you?"},
          {"from": "gpt", "value": "I am Vicuna, a language model trained by researchers from Large Model Systems Organization (LMSYS)."},
          {"from": "human", "value": "Have a nice day!"},
          {"from": "gpt", "value": "You too!"}
        ]
      }
    ]

The DPO training data uses the following format:

    [
      {
        "id": "identity_0",
        "prompt": [
          {"from": "human", "value": "Hello"},
          {"from": "gpt", "value": "Hi"},
          {"from": "human", "value": "Have a nice day!"}
        ],
        "chosen": [
          {"from": "gpt", "value": "OK!"},
          {"from": "human", "value": "How are you?"},
          {"from": "gpt", "value": "I'm fine"}
        ],
        "rejected": [
          {"from": "gpt", "value": "No, I'm bad"}
        ]
      }
    ]

If you find this repo helpful, please cite our paper:

    @article{song2024trial,
      author={Yifan Song and Da Yin and Xiang Yue and Jie Huang and Sujian Li and Bill Yuchen Lin},
      title={Trial and Error: Exploration-Based Trajectory Optimization for LLM Agents},
      year={2024},
      eprint={2403.02502},
      archivePrefix={arXiv},
      primaryClass={cs.CL}
    }