The objective of this part is to compare Autoencoder (AE) and Variational Autoencoder (VAE) models trained on the MNIST dataset.

- Build an autoencoder architecture, then train it on the MNIST dataset (specify the best hyperparameters).
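
A minimal PyTorch sketch of a fully connected autoencoder for 28x28 MNIST digits follows. The layer widths, the latent size of 32, the BCE reconstruction loss and the Adam learning rate of 1e-3 are illustrative assumptions, not the tuned hyperparameters from this lab. The names `Autoencoder` and `train_step` are hypothetical.

```python
import torch
from torch import nn


class Autoencoder(nn.Module):
    """Fully connected autoencoder for 1x28x28 MNIST images (sizes are assumptions)."""

    def __init__(self, latent_dim: int = 32):
        super().__init__()
        # Encoder: 784 pixels -> 256 hidden units -> latent_dim code
        self.encoder = nn.Sequential(
            nn.Flatten(),
            nn.Linear(28 * 28, 256),
            nn.ReLU(),
            nn.Linear(256, latent_dim),
        )
        # Decoder mirrors the encoder; Sigmoid keeps outputs in [0, 1] like the pixels
        self.decoder = nn.Sequential(
            nn.Linear(latent_dim, 256),
            nn.ReLU(),
            nn.Linear(256, 28 * 28),
            nn.Sigmoid(),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        z = self.encoder(x)
        return self.decoder(z).view(-1, 1, 28, 28)


def train_step(model, optimizer, images):
    """One optimization step on a batch of images scaled to [0, 1]; returns the loss."""
    model.train()
    optimizer.zero_grad()
    recon = model(images)
    loss = nn.functional.binary_cross_entropy(recon, images)
    loss.backward()
    optimizer.step()
    return loss.item()


model = Autoencoder(latent_dim=32)
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)
```

In a full run, `train_step` would be called for every batch of a `DataLoader` over MNIST, and the per-epoch average loss recorded for the evaluation plots.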

- Build a variational autoencoder architecture, then train it on the MNIST dataset (specify the best hyperparameters).

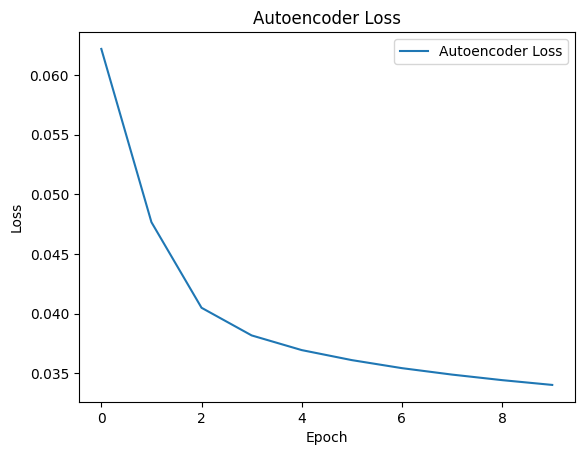

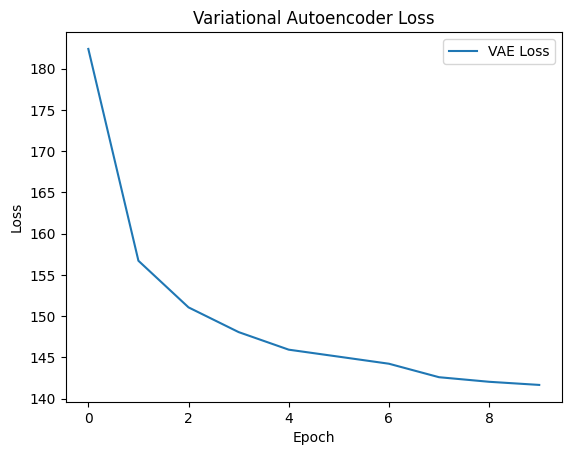
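
The sketch below shows one common way to write a VAE in PyTorch: the encoder outputs a mean and log-variance, a sample is drawn with the reparameterization trick, and the loss is the sum of a BCE reconstruction term and the closed-form KL divergence to a standard normal prior. The 400-unit hidden layer and the 2-dimensional latent space (chosen so the latent space can be plotted directly) are assumptions; `VAE` and `vae_loss` are hypothetical names.

```python
import torch
from torch import nn
import torch.nn.functional as F


class VAE(nn.Module):
    def __init__(self, latent_dim: int = 2):
        super().__init__()
        self.fc1 = nn.Linear(28 * 28, 400)
        self.fc_mu = nn.Linear(400, latent_dim)      # mean of q(z|x)
        self.fc_logvar = nn.Linear(400, latent_dim)  # log-variance of q(z|x)
        self.fc2 = nn.Linear(latent_dim, 400)
        self.fc3 = nn.Linear(400, 28 * 28)

    def encode(self, x):
        h = F.relu(self.fc1(x.flatten(start_dim=1)))
        return self.fc_mu(h), self.fc_logvar(h)

    def reparameterize(self, mu, logvar):
        # z = mu + sigma * eps keeps sampling differentiable w.r.t. mu and logvar
        std = torch.exp(0.5 * logvar)
        eps = torch.randn_like(std)
        return mu + eps * std

    def decode(self, z):
        return torch.sigmoid(self.fc3(F.relu(self.fc2(z))))

    def forward(self, x):
        mu, logvar = self.encode(x)
        z = self.reparameterize(mu, logvar)
        return self.decode(z), mu, logvar


def vae_loss(recon, x, mu, logvar):
    """Return (total, reconstruction, KL), each summed over the batch."""
    bce = F.binary_cross_entropy(recon, x.flatten(start_dim=1), reduction="sum")
    # Closed-form KL(N(mu, sigma^2) || N(0, I))
    kld = -0.5 * torch.sum(1 + logvar - mu.pow(2) - logvar.exp())
    return bce + kld, bce, kld
```

Returning the reconstruction and KL terms separately makes it easy to log both per epoch and plot them as distinct curves.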

- Evaluate the two models by plotting their training metrics (loss, KL divergence, etc.) and state what you conclude.

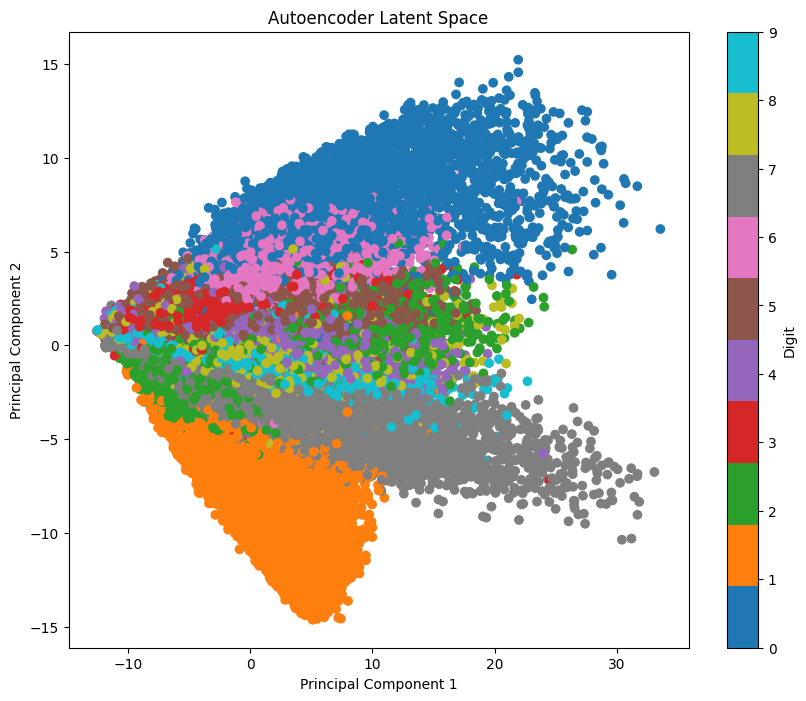

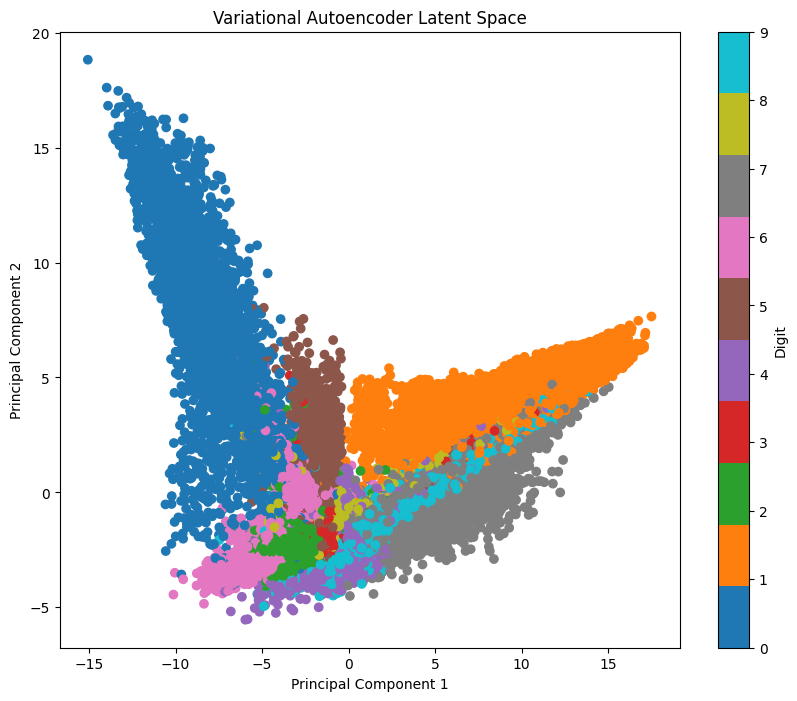
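
When reading these curves, it may help to recall the standard VAE objective, assuming a diagonal Gaussian encoder $q_\phi(z \mid x) = \mathcal{N}(\mu, \operatorname{diag}(\sigma^2))$ and a standard normal prior over a $d$-dimensional latent space:

$$
\mathcal{L}(x) = \underbrace{-\mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big]}_{\text{reconstruction}} + \underbrace{D_{\mathrm{KL}}\big(q_\phi(z \mid x) \,\|\, \mathcal{N}(0, I)\big)}_{\text{regularization}},
\qquad
D_{\mathrm{KL}} = -\frac{1}{2}\sum_{j=1}^{d}\left(1 + \log\sigma_j^2 - \mu_j^2 - \sigma_j^2\right)
$$

A plain autoencoder minimizes only the reconstruction term, so it has no KL curve and can typically reach a lower reconstruction loss; the VAE trades some reconstruction accuracy for latent codes that stay close to the prior.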

- Try to plot the latent space of the two models.
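
A possible way to obtain the latent-space plot is sketched below: encode the test set, keep the labels, and project the codes to two dimensions with PCA (`torch.pca_lowrank`) when the latent space is wider than 2. The helper names `collect_latents` and `to_2d` are hypothetical, and using PCA for the projection is an assumption (t-SNE is another common choice).

```python
import torch


@torch.no_grad()
def collect_latents(encode_fn, loader, device="cpu"):
    """Encode every batch from loader and return (codes, labels) as CPU tensors."""
    codes, labels = [], []
    for images, targets in loader:
        codes.append(encode_fn(images.to(device)).cpu())
        labels.append(targets)
    return torch.cat(codes), torch.cat(labels)


def to_2d(codes):
    """Return codes unchanged if they have at most 2 dimensions, else a 2-D PCA projection."""
    if codes.shape[1] <= 2:
        return codes
    _, _, v = torch.pca_lowrank(codes, q=2)  # randomized PCA; centers the data by default
    return (codes - codes.mean(dim=0)) @ v[:, :2]
```

For the autoencoder, `encode_fn` could be `model.encoder`; for the VAE, the mean is the usual choice, e.g. `lambda x: vae.encode(x)[0]`. The resulting points can then be drawn with `matplotlib.pyplot.scatter(xy[:, 0], xy[:, 1], c=labels, cmap="tab10", s=2)`, coloring each digit class differently.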

The plots demonstrate the training progress and performance of the Autoencoder and Variational Autoencoder models on the MNIST dataset.

The objective of this part is to train a Generative Adversarial Network (GAN) model on the Abstract Art Gallery dataset.

- Using the PyTorch library, define the generator, define the discriminator, define the loss function, initialize the generator and discriminator, configure the GPU, configure the data loader, define the optimizers, and train the model.
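
The setup steps listed above could look roughly like the DCGAN-style sketch below. It assumes the Abstract Art Gallery images are resized to 64x64 RGB and normalized to [-1, 1]; the noise size of 100, the base width of 64 channels, the N(0, 0.02) initialization and Adam with `lr=2e-4, betas=(0.5, 0.999)` follow common DCGAN conventions rather than values taken from this lab. It uses `nn.BCEWithLogitsLoss` (sigmoid and BCE fused) instead of a `Sigmoid` layer plus `nn.BCELoss`.

```python
import torch
from torch import nn

LATENT_DIM = 100  # size of the noise vector z (assumed)
FEATURES = 64     # base channel width (assumed)
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")  # GPU setting


class Generator(nn.Module):
    def __init__(self, nz=LATENT_DIM, ngf=FEATURES, channels=3):
        super().__init__()
        self.net = nn.Sequential(
            nn.ConvTranspose2d(nz, ngf * 8, 4, 1, 0, bias=False),       # -> 4x4
            nn.BatchNorm2d(ngf * 8), nn.ReLU(True),
            nn.ConvTranspose2d(ngf * 8, ngf * 4, 4, 2, 1, bias=False),  # -> 8x8
            nn.BatchNorm2d(ngf * 4), nn.ReLU(True),
            nn.ConvTranspose2d(ngf * 4, ngf * 2, 4, 2, 1, bias=False),  # -> 16x16
            nn.BatchNorm2d(ngf * 2), nn.ReLU(True),
            nn.ConvTranspose2d(ngf * 2, ngf, 4, 2, 1, bias=False),      # -> 32x32
            nn.BatchNorm2d(ngf), nn.ReLU(True),
            nn.ConvTranspose2d(ngf, channels, 4, 2, 1, bias=False),     # -> 64x64
            nn.Tanh(),  # outputs in [-1, 1], matching the normalized images
        )

    def forward(self, z):  # z: (N, nz, 1, 1)
        return self.net(z)


class Discriminator(nn.Module):
    def __init__(self, ndf=FEATURES, channels=3):
        super().__init__()
        self.net = nn.Sequential(
            nn.Conv2d(channels, ndf, 4, 2, 1, bias=False),              # -> 32x32
            nn.LeakyReLU(0.2, inplace=True),
            nn.Conv2d(ndf, ndf * 2, 4, 2, 1, bias=False),               # -> 16x16
            nn.BatchNorm2d(ndf * 2), nn.LeakyReLU(0.2, inplace=True),
            nn.Conv2d(ndf * 2, ndf * 4, 4, 2, 1, bias=False),           # -> 8x8
            nn.BatchNorm2d(ndf * 4), nn.LeakyReLU(0.2, inplace=True),
            nn.Conv2d(ndf * 4, ndf * 8, 4, 2, 1, bias=False),           # -> 4x4
            nn.BatchNorm2d(ndf * 8), nn.LeakyReLU(0.2, inplace=True),
            nn.Conv2d(ndf * 8, 1, 4, 1, 0, bias=False),                 # -> 1x1 logit
        )

    def forward(self, x):
        return self.net(x).view(-1)  # one raw logit per image


def weights_init(m):
    # DCGAN-style init: N(0, 0.02) for conv weights, N(1, 0.02) for BatchNorm scale
    if isinstance(m, (nn.Conv2d, nn.ConvTranspose2d)):
        nn.init.normal_(m.weight, 0.0, 0.02)
    elif isinstance(m, nn.BatchNorm2d):
        nn.init.normal_(m.weight, 1.0, 0.02)
        nn.init.zeros_(m.bias)


generator = Generator().to(device)
discriminator = Discriminator().to(device)
generator.apply(weights_init)
discriminator.apply(weights_init)

criterion = nn.BCEWithLogitsLoss()
opt_g = torch.optim.Adam(generator.parameters(), lr=2e-4, betas=(0.5, 0.999))
opt_d = torch.optim.Adam(discriminator.parameters(), lr=2e-4, betas=(0.5, 0.999))
```

The data loader is not shown; one option is `torchvision.datasets.ImageFolder` with resize, center-crop, `ToTensor` and `Normalize((0.5,) * 3, (0.5,) * 3)` transforms so pixel values land in [-1, 1].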

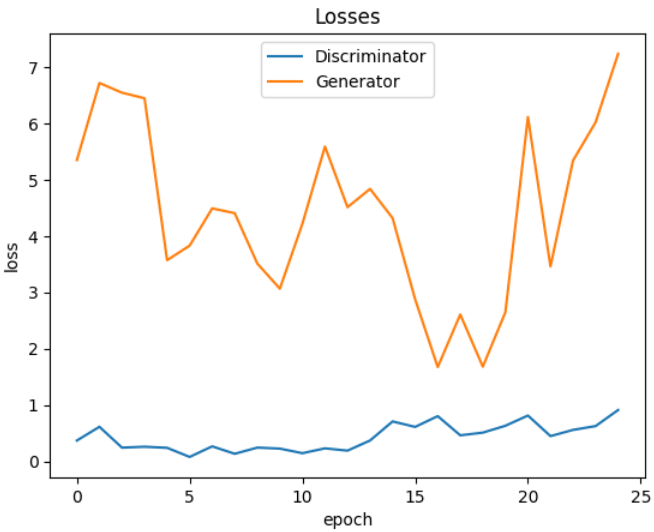

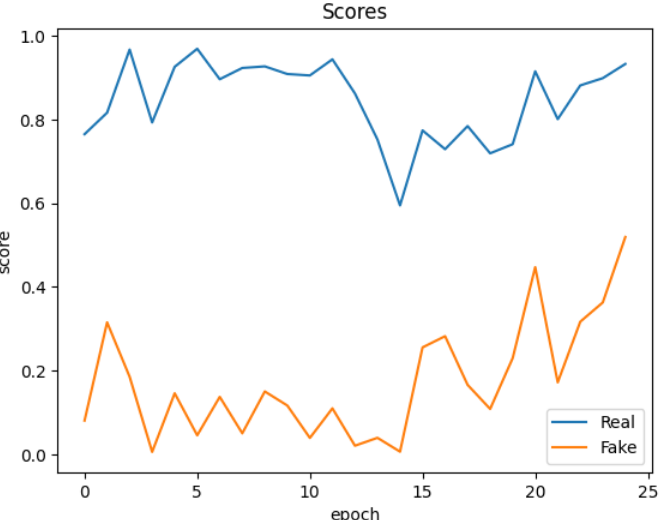
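
The training loop itself usually alternates one discriminator update and one generator update per batch. The sketch below is a hypothetical `gan_train_step` helper written against any generator/discriminator pair, assuming the discriminator returns raw logits of shape `(N,)`; it uses the common non-saturating generator loss.

```python
import torch
from torch import nn


def gan_train_step(generator, discriminator, opt_g, opt_d, real, latent_shape, criterion=None):
    """One discriminator update then one generator update; returns (loss_d, loss_g).

    latent_shape is the per-sample noise shape, e.g. (100, 1, 1) for a DCGAN generator.
    """
    if criterion is None:
        criterion = nn.BCEWithLogitsLoss()
    n = real.size(0)
    ones = torch.ones(n, device=real.device)
    zeros = torch.zeros(n, device=real.device)
    fake = generator(torch.randn(n, *latent_shape, device=real.device))

    # 1) Discriminator: push D(real) toward 1 and D(fake) toward 0.
    #    detach() keeps this loss from sending gradients into the generator.
    opt_d.zero_grad()
    loss_d = criterion(discriminator(real), ones) + criterion(discriminator(fake.detach()), zeros)
    loss_d.backward()
    opt_d.step()

    # 2) Generator (non-saturating loss): make D classify the fakes as real.
    opt_g.zero_grad()
    loss_g = criterion(discriminator(fake), ones)
    loss_g.backward()
    opt_g.step()

    return loss_d.item(), loss_g.item()
```

Averaging the two returned values over each epoch gives the generator and discriminator loss curves used in the evaluation.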

- Evaluate the model by plotting the generator and discriminator metrics (loss, KL divergence, etc.) and state what you conclude.

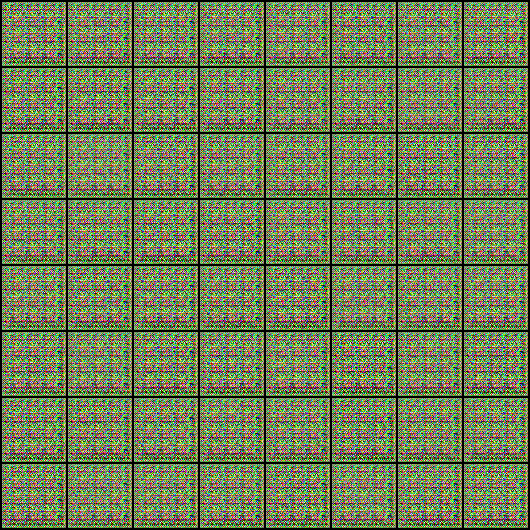

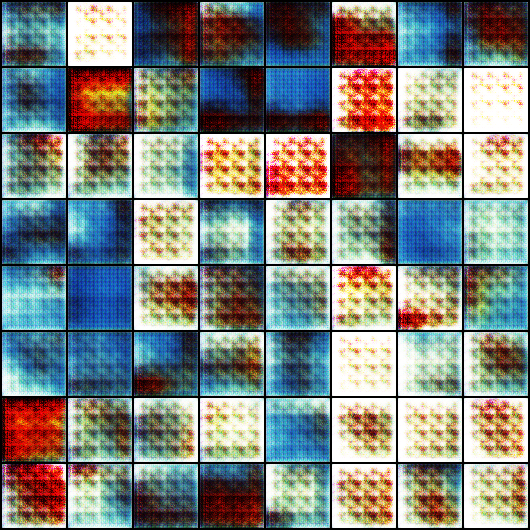
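
To interpret the generator and discriminator curves, it may help to recall the standard GAN objective:

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

In practice the discriminator minimizes $\mathcal{L}_D = -\log D(x) - \log\big(1 - D(G(z))\big)$ and the generator often minimizes the non-saturating loss $\mathcal{L}_G = -\log D(G(z))$. With an optimal discriminator, $V$ equals $2\,\mathrm{JSD}(p_{\text{data}} \,\|\, p_g) - \log 4$, so a GAN implicitly targets the Jensen-Shannon divergence rather than a KL term. At the ideal equilibrium $D \equiv 1/2$, giving $\mathcal{L}_D = 2\log 2 \approx 1.386$ and $\mathcal{L}_G = \log 2 \approx 0.693$; a discriminator loss staying well below this value indicates that the discriminator is winning.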

- Generate new data and compare its quality to the original images.
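
Sampling new images could be done with a small helper like the hypothetical `generate_samples` below, which draws random noise, runs the trained generator without gradients, and rescales its Tanh output from [-1, 1] to [0, 1] for display. Switching to `eval()` so BatchNorm uses its running statistics is a design choice; some implementations sample in training mode instead.

```python
import torch


@torch.no_grad()
def generate_samples(generator, n, latent_shape, device="cpu"):
    """Sample n images from a generator and map its [-1, 1] output to [0, 1]."""
    was_training = generator.training
    generator.eval()  # use BatchNorm running statistics instead of batch statistics
    z = torch.randn(n, *latent_shape, device=device)
    images = (generator(z) + 1) / 2
    generator.train(was_training)  # restore the previous mode
    return images.clamp(0, 1).cpu()
```

For a visual comparison, a batch of samples and a batch of real images (de-normalized the same way) can each be saved as a grid with `torchvision.utils.save_image(images, "samples.png", nrow=8)`; a quantitative comparison would need a metric such as FID.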

The training of the GAN resulted in fluctuating losses over 25 epochs, indicating some instability in the process. However, the discriminator effectively distinguished between real and fake images throughout. Further optimization may be needed to improve stability and image quality.

Through this lab, I gained practical experience in implementing and training various deep learning models, including Autoencoders (AE), Variational Autoencoders (VAE), and Generative Adversarial Networks (GANs). I learned how to optimize model performance by tuning hyperparameters and interpreting training metrics such as loss and KL divergence.