Emotional Dialogue Act (EDA) data contains dialogue act labels for existing multimodal conversational emotion datasets.

A dialogue act describes the intention and performative function of an utterance in a dialogue. For example, dialogue acts can reveal a user's intention by distinguishing Question, Answer, Request, Agree/Reject, etc., as well as performative functions such as Acknowledgement, Conversational-opening or -closing, Thanking, etc.

The aim is to enrich existing multimodal conversational emotion datasets, which can help advance conversational analysis and the building of natural dialogue systems.

We chose two popular multimodal emotion datasets: Multimodal EmotionLines Dataset (MELD) and Interactive Emotional dyadic MOtion CAPture database (IEMOCAP).

MELD contains two labels for each utterance in a dialogue: Emotions and Sentiments.

- Emotions: Anger, Disgust, Sadness, Joy, Neutral, Surprise and Fear.
- Sentiments: positive, negative and neutral.

IEMOCAP contains only emotion labels, but at two levels: discrete and fine-grained dimensional emotions.

- Discrete Emotions: Anger, Frustration, Sadness, Joy, Excited, Neutral, Surprise and Fear.
- Fine-grained Dimensional Emotions: Valence, Arousal and Dominance.

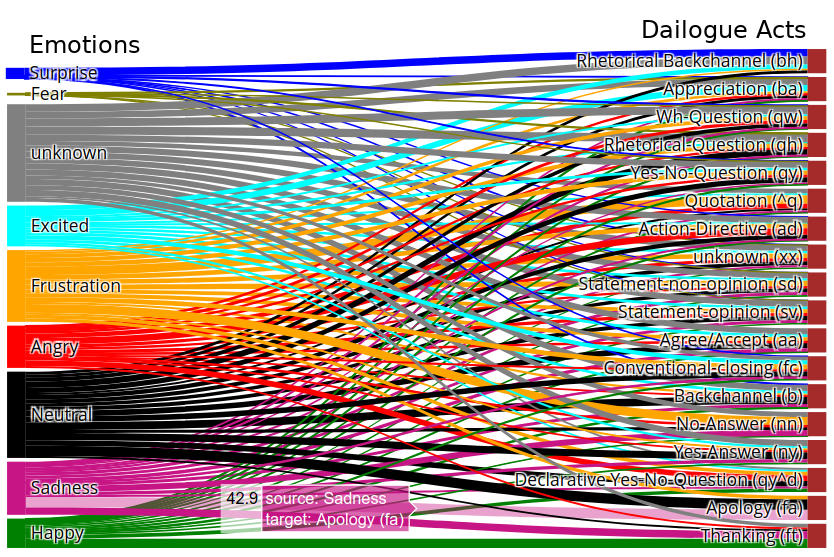

Our analysis of EDAs reveals associations between dialogue acts and emotional states in natural conversational language. To visualize the co-occurrence of utterances with emotional states for particular dialogue acts, see the graph below from the IEMOCAP EDAs:

See live plots/graphs (thanks to Plot.ly): IEMOCAP Emotion Sankey, MELD Emotion Sankey, and MELD Sentiment Sankey.

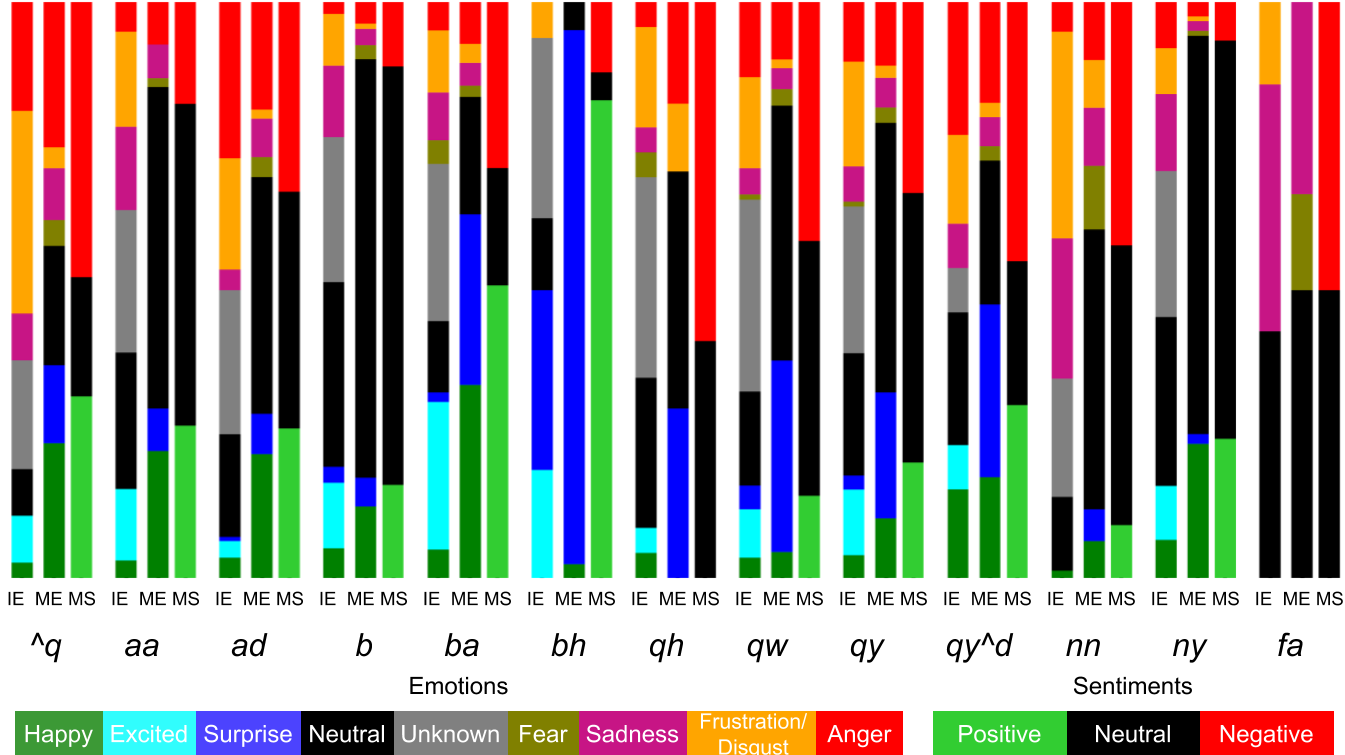

Or as a bar diagram (as in the paper):

IE: IEMOCAP, ME: MELD Emotion and MS: MELD Sentiment.

See separated bar graphs in figures: IEMOCAP Emotion Bars, MELD Emotion Bars, and MELD Sentiment Bars.
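The Sankey and bar graphs are built from co-occurrence counts of dialogue acts and emotion labels. Below is a minimal sketch of that counting step, assuming rows that carry the `EDA` and `emotion` columns described in the CSV column table. The function name `da_emotion_cooccurrence` is hypothetical, and this is not the code used by the repository's plotting scripts.

```python
from collections import Counter


def da_emotion_cooccurrence(rows):
    """Count how often each (dialogue act, emotion) pair occurs.

    `rows` is any iterable of dicts with at least the keys "EDA" and
    "emotion", for example rows read with csv.DictReader (assumed layout).
    """
    return Counter((row["EDA"], row["emotion"]) for row in rows)


# Toy rows for illustration only; they are not taken from the real data.
rows = [
    {"EDA": "sd", "emotion": "neutral"},
    {"EDA": "qy", "emotion": "surprise"},
    {"EDA": "sd", "emotion": "neutral"},
    {"EDA": "sv", "emotion": "anger"},
]
counts = da_emotion_cooccurrence(rows)
for (da, emotion), n in counts.most_common():
    print(f"{da:>4} -> {emotion:<10} {n}")
```

Each `(dialogue act, emotion)` count corresponds to the width of one flow in a Sankey diagram or the height of one bar segment.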

Names and statistics of the annotated dialogue acts in EDAs:

| DA | Dialogue Act | IEMOCAP (%) | MELD (%) |
|---|---|---|---|
| sd | Statement-non-opinion | 43.97 | 41.63 |
| sv | Statement-opinion | 19.93 | 9.34 |
| qy | Yes-No-Question | 10.3 | 12.39 |
| qw | Wh-Question | 7.26 | 6.08 |
| b | Acknowledge (Backchannel) | 2.89 | 2.35 |
| ad | Action-directive | 1.39 | 2.31 |
| fc | Conventional-closing | 1.37 | 3.76 |
| ba | Appreciation or Assessment | 1.21 | 3.72 |
| aa | Agree or Accept | 0.97 | 0.50 |
| nn | No-Answer | 0.78 | 0.80 |
| ny | Yes-Answer | 0.75 | 0.88 |
| br | Signal-non-understanding | 0.47 | 1.13 |
| ^q | Quotation | 0.37 | 0.81 |
| na | Affirmative non-yes answers | 0.25 | 0.34 |
| qh | Rhetorical-Question | 0.23 | 0.12 |
| bh | Rhetorical Backchannel | 0.16 | 0.30 |
| h | Hedge | 0.15 | 0.02 |
| qo | Open-question | 0.14 | 0.10 |
| ft | Thanking | 0.13 | 0.23 |
| qy^d | Declarative Yes-No-Question | 0.13 | 0.29 |
| bf | Reformulate | 0.12 | 0.19 |
| fp | Conventional-opening | 0.12 | 1.19 |
| fa | Apology | 0.07 | 0.04 |
| fo | Other Forward Function | 0.02 | 0.05 |
| Total utterances | | 10039 | 13708 |
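Per-tag statistics like those in the table can be derived from a column of dialogue act labels. The sketch below assumes that each value is the percentage of utterances carrying that tag; `DA_NAMES` copies only a few tags from the table for brevity, and `da_percentages` is a hypothetical helper, not part of the repository.

```python
from collections import Counter

# A few tag -> name pairs copied from the table (not the full set).
DA_NAMES = {
    "sd": "Statement-non-opinion",
    "sv": "Statement-opinion",
    "qy": "Yes-No-Question",
    "qw": "Wh-Question",
    "b": "Acknowledge (Backchannel)",
}


def da_percentages(labels):
    """Return {tag: percentage of utterances}, most frequent tag first."""
    total = len(labels)
    if total == 0:
        return {}
    counts = Counter(labels)
    return {tag: round(100 * n / total, 2) for tag, n in counts.most_common()}


labels = ["sd", "sd", "sv", "qy", "sd", "qw", "sd", "b"]  # toy labels
for tag, pct in da_percentages(labels).items():
    print(f"{tag:>3}  {DA_NAMES.get(tag, '?'):<28} {pct:6.2f}")
```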

These graphs can be generated using the `read_annotated_..._data.py` scripts.

"Enriching Existing Conversational Emotion Datasets with Dialogue Acts using Neural Annotators"

The article explaining these datasets (accepted at LREC 2020) is available at https://www.aclweb.org/anthology/2020.lrec-1.78/

This work has been performed as part of the SECURE EU Project.

https://secure-robots.eu/fellows/bothe/EDAs/

- `annotated_eda_data/eda_iemocap_no_utts_dataset.csv`: contains the EDAs for the IEMOCAP data, without the utterances.
- `annotated_eda_data/eda_meld_emotion_dataset.csv`: contains the EDAs for the MELD data, with the train, dev and test splits stacked.
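A minimal sketch for loading one of these files with Python's standard `csv` module, assuming a comma-separated file with a header row whose names match the column table. The helper name `load_eda_rows` is hypothetical, and the demo uses a made-up two-row file with only a subset of the columns.

```python
import csv
import io


def load_eda_rows(fileobj):
    """Read an EDA CSV file into a list of dicts keyed by column name."""
    return list(csv.DictReader(fileobj))


# With the real data (assuming comma separation and a header row):
# with open("annotated_eda_data/eda_meld_emotion_dataset.csv",
#           newline="", encoding="utf-8") as f:
#     rows = load_eda_rows(f)

# Self-contained demo with invented content in the same column layout.
sample = io.StringIO(
    "speaker,utt_id,utterance,emotion,sentiment,EDA\n"
    "Alice,0,Are you coming tonight?,joy,positive,qy\n"
    "Bob,1,I think so.,neutral,neutral,sd\n"
)
rows = load_eda_rows(sample)
print(len(rows), rows[0]["EDA"])
```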

Columns of the CSV files:

| Column Name | Description |
|---|---|
| speaker | Name of the speaker (in MELD) or speaker ID (in IEMOCAP). |
| utt_id | Index of the utterance in the dialogue, starting from 0 (in MELD), or counted from 0 separately for each speaker's turns (in IEMOCAP). |
| utterance | The utterance text. |
| emotion | The emotion (neutral, joy, excited, sadness, anger, surprise, fear, frustration, disgust) expressed by the speaker in the utterance. |
| sentiment | The sentiment (positive, neutral, negative) expressed by the speaker in the utterance (only in MELD). |
| eda1 | Emotional dialogue act label from the Utterance-level 1 model. |
| eda2 | Emotional dialogue act label from the Utterance-level 2 model. |
| eda3 | Emotional dialogue act label from the Context 1 model. |
| eda4 | Emotional dialogue act label from the Context 2 model. |
| eda5 | Emotional dialogue act label from the Context 3 model. |
| EDA | Final emotional dialogue act, as an ensemble of all models (eda1, eda2, eda3, eda4, eda5). |
| all_match | Flag indicating whether all EDAs match (eda1 = eda2 = eda3 = eda4 = eda5). |
| con_match | Flag indicating whether the EDAs matched based on the context models. |
| match | Flag indicating whether the EDAs matched based on confidence ranking. |
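To illustrate how the five model labels relate to the final `EDA` and `all_match` columns, here is a simplified majority-vote sketch. It is a stand-in, not the authors' ensemble rule: according to the column descriptions, the real ensemble also uses agreement among the context models (`con_match`) and confidence ranking (`match`), which this sketch does not model. The function name is hypothetical.

```python
from collections import Counter

EDA_COLUMNS = ["eda1", "eda2", "eda3", "eda4", "eda5"]


def majority_vote(labels):
    """Return (most frequent label, whether all labels agree).

    Ties go to the label encountered first, because Counter.most_common
    orders elements with equal counts by first insertion.
    """
    if not labels:
        raise ValueError("need at least one label")
    counts = Counter(labels)
    label, _ = counts.most_common(1)[0]
    return label, len(counts) == 1


row = {"eda1": "sd", "eda2": "sd", "eda3": "sv", "eda4": "sd", "eda5": "sd"}
label, all_agree = majority_vote([row[col] for col in EDA_COLUMNS])
print(label, all_agree)  # sd False
```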

To reproduce the Python environment used for the entire project, use the `conda_env/environment.yml` file, which can be managed with conda (Anaconda or Miniconda).

- Run `dia_act_meld_ensemble.py` or `dia_act_mocap_ensemble.py` for the respective dataset to build the ensemble of dialogue acts and calculate its reliability metrics, given that all the labels are stored in the `model_output_labels` directory.
- Run `read_annotated_mocap_data.py` or `read_annotated_meld_data.py` to read the data, generate the graphs and calculate the data statistics.
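The README does not name the reliability metrics computed by the ensemble scripts. As an illustration only, here is Fleiss' kappa, a standard agreement measure for a fixed number of raters, treating `eda1` to `eda5` as five raters per utterance. This is a swapped-in example and not necessarily the metric that `dia_act_meld_ensemble.py` or `dia_act_mocap_ensemble.py` reports.

```python
from collections import Counter


def fleiss_kappa(items):
    """Fleiss' kappa for items that are each labelled by the same number of raters.

    `items` is a list where each entry holds the labels the raters gave
    one utterance, e.g. [eda1, eda2, eda3, eda4, eda5].
    """
    if not items:
        raise ValueError("need at least one item")
    n = len(items[0])  # raters per item
    if n < 2 or any(len(labels) != n for labels in items):
        raise ValueError("every item needs the same number (>= 2) of labels")
    num_items = len(items)

    category_totals = Counter()  # how often each label was used overall
    observed = 0.0               # running sum of per-item agreement P_i
    for labels in items:
        counts = Counter(labels)
        category_totals.update(counts)
        # P_i: proportion of agreeing rater pairs for this item
        observed += (sum(c * c for c in counts.values()) - n) / (n * (n - 1))
    p_bar = observed / num_items

    # P_e: chance agreement from the overall label proportions
    p_e = sum((t / (num_items * n)) ** 2 for t in category_totals.values())
    if p_e == 1.0:
        return 1.0  # only one label ever used: kappa is undefined, report full agreement
    return (p_bar - p_e) / (1 - p_e)


# Toy annotations: three utterances, five model labels each (not real data).
items = [
    ["sd", "sd", "sd", "sd", "sd"],
    ["sd", "sd", "sv", "sd", "sd"],
    ["qy", "qy", "qy", "qy", "qy"],
]
print(f"{fleiss_kappa(items):.3f}")
```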

Currently, all the predictions are available in the `model_output_labels` directory, so the following step can be skipped:

- Run `dia_act_meld_annotator.py` or `dia_act_mocap_annotator.py` to annotate the datasets; this requires the trained models, which will be made available soon.

To annotate your own conversational data, see the `dialogue_act_annotator.py` file, which contains an example client that sends requests to the server API; the server generates the utterance representations and makes the predictions.

If the server is not working, you can host your own server using the bothe/dialogue-act-recognition repository.

You only need to arrange the utterances into a list, along with parallel lists such as speaker, utt_id and emotion. The emotion list is optional: it can hold any other per-utterance annotation you have, or you can pass the flag `is_emotion=False` to the `ensemble_eda_annotation()` function.
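A minimal sketch of arranging a dialogue into equal-length parallel lists. The helper `build_parallel_lists` and the per-turn dict layout are hypothetical; the exact arguments `ensemble_eda_annotation()` expects are defined in `dialogue_act_annotator.py`, and this sketch does not call it.

```python
def build_parallel_lists(turns, include_emotion=True):
    """Split a dialogue (list of dicts) into parallel per-field lists.

    Each turn is assumed to have "speaker" and "utterance" keys and, when
    include_emotion is True, an "emotion" key. utt_id is the turn index.
    """
    utterances = [t["utterance"] for t in turns]
    speakers = [t["speaker"] for t in turns]
    utt_ids = list(range(len(turns)))
    emotions = [t["emotion"] for t in turns] if include_emotion else None
    return utterances, speakers, utt_ids, emotions


dialogue = [
    {"speaker": "A", "utterance": "Did you finish the report?", "emotion": "neutral"},
    {"speaker": "B", "utterance": "Yes, this morning.", "emotion": "joy"},
]
utterances, speakers, utt_ids, emotions = build_parallel_lists(dialogue)
print(utt_ids, speakers)  # [0, 1] ['A', 'B']
```

Keeping the lists index-aligned matters: position `i` in every list must describe the same utterance.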

This API is not fully tested, so send requests at your own risk! It is, however, fully automated and hosted on the University of Hamburg (Informatik) web servers.

Try not to send more than 500 utterances per request; above that number the server may take a long time to respond, so for larger jobs it is recommended to contact the author [bothe].
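If you have more than 500 utterances, one option is to split them into smaller requests. A minimal sketch, where `batches` is a hypothetical helper and 500 is simply the limit suggested above:

```python
def batches(items, max_size=500):
    """Yield consecutive slices of at most max_size items."""
    if max_size < 1:
        raise ValueError("max_size must be positive")
    for start in range(0, len(items), max_size):
        yield items[start:start + max_size]


utterances = [f"utterance {i}" for i in range(1200)]
sizes = [len(b) for b in batches(utterances)]
print(sizes)  # [500, 500, 200]
```

Because the context models look at surrounding utterances, cutting a dialogue in the middle may change their predictions; splitting at dialogue boundaries is likely the safer choice.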

Paper explaining the process of dialogue act annotation:

- EDAs:

C. Bothe, C. Weber, S. Magg, S. Wermter. EDA: Enriching Emotional Dialogue Acts using an Ensemble of Neural Annotators. (LREC 2020) https://secure-robots.eu/fellows/bothe/EDAs/

Original works describing the datasets:

- IEMOCAP:

C. Busso, M. Bulut, C. Lee, A. Kazemzadeh, E. Mower, S. Kim, J. N. Chang, S. Lee, S. Narayanan. IEMOCAP: Interactive emotional dyadic motion capture database. (Language Resources and Evaluation 2008) https://sail.usc.edu/iemocap/

- MELD:

S. Poria, D. Hazarika, N. Majumder, G. Naik, E. Cambria, R. Mihalcea. MELD: A Multimodal Multi-Party Dataset for Emotion Recognition in Conversation. (ACL 2019) https://affective-meld.github.io/

S.-Y. Chen, C.-C. Hsu, C.-C. Kuo, L.-W. Ku. EmotionLines: An Emotion Corpus of Multi-Party Conversations. (arXiv preprint arXiv:1802.08379, 2018)