This repository contains the implementation work I am doing for my master's thesis in the Accelerated Big Data Systems group at TU Delft.

⚠️ The goal of this project is to explore different technologies and how to integrate them with Spark. I want to understand the technical feasibility of different scenarios and find out which problems arise and need to be solved. As a result, this code is very volatile and does not always follow best practices.

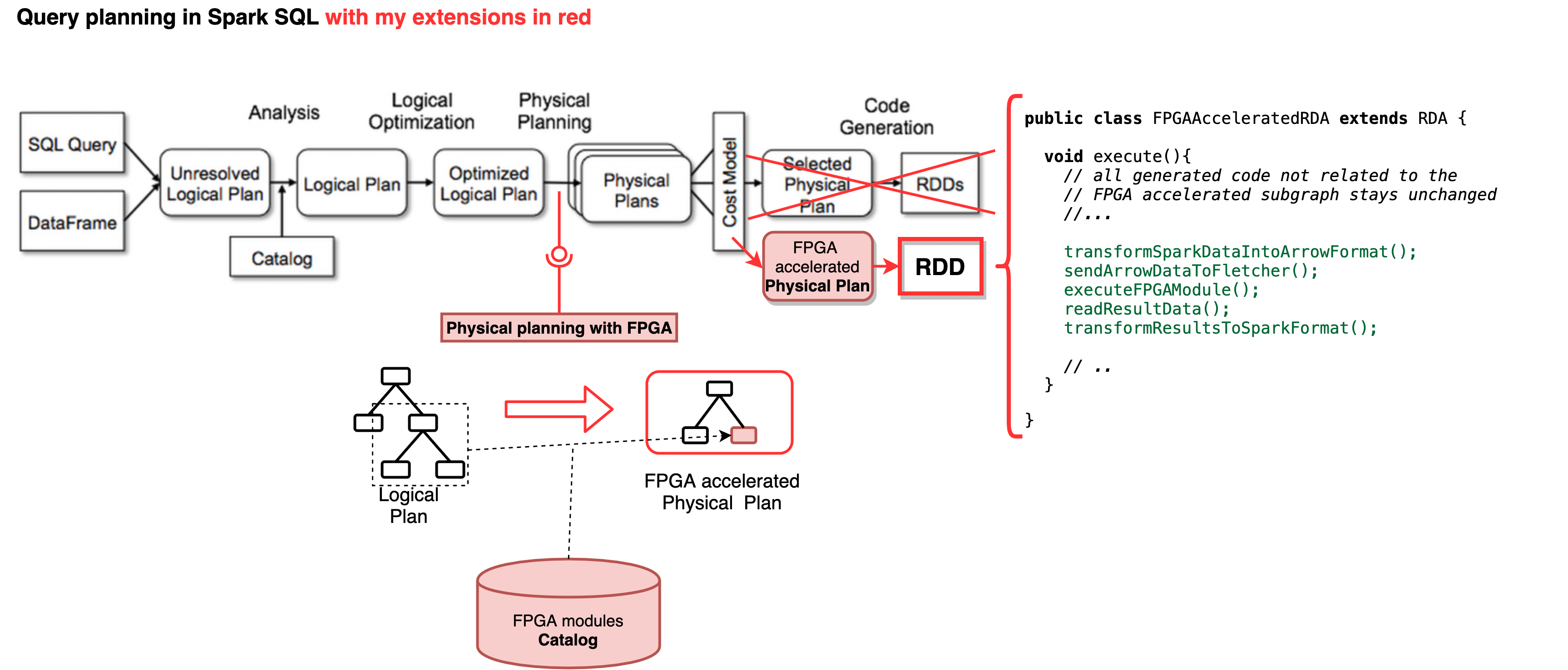

- Mapping SparkSQL queries to FPGA building blocks and executing them using Fletcher and Apache Arrow

Because many technologies are involved, installing the prerequisites is not trivial. This installation guide describes the necessary steps, especially on machines without sudo rights (e.g. the servers of the research group). If execution on real FPGAs is not required, the Docker image used for the CI builds can be used instead:

The project is set up with Gradle, which allows a multi-language build. To include locally installed libraries, the following environment variables can be used:

- `LD_LIBRARY_PATH`: colon-separated list of directories to scan for local libraries. These are evaluated before the default paths (`/usr/local/lib` and `/usr/local/lib64`). E.g. `$WORK/local/lib:$WORK/local/lib64`
- `ADDITIONAL_INCLUDES`: colon-separated list of additional include directories, e.g. `$WORK/local/include/`
- `GANDIVA_JAR_DIR`: path to the directory containing the Gandiva jar when not on macOS, e.g. `$WORK/arrow-apache-arrow-0.17.1/java/gandiva/target/`
- `FLETCHER_PLATFORM`: Fletcher platform that should be loaded, e.g. `fletcher_snap`
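A minimal sketch of setting these variables for a single shell session, reusing the example values above. `WORK` is an assumption here: it stands for wherever the dependencies were installed locally and falls back to a hypothetical `$HOME/work` if it is not already set.

```shell
# Hypothetical base directory of the locally installed dependencies; adjust to your setup.
WORK="${WORK:-$HOME/work}"

# Local library directories, searched before /usr/local/lib and /usr/local/lib64.
# Any existing LD_LIBRARY_PATH entries are kept after the new ones.
export LD_LIBRARY_PATH="$WORK/local/lib:$WORK/local/lib64${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}"

# Additional include directories for the native build.
export ADDITIONAL_INCLUDES="$WORK/local/include/"

# Directory containing the Gandiva jar (only needed when not on macOS).
export GANDIVA_JAR_DIR="$WORK/arrow-apache-arrow-0.17.1/java/gandiva/target/"

# Fletcher platform to load.
export FLETCHER_PLATFORM="fletcher_snap"
```

Prepending to `LD_LIBRARY_PATH` in this sketch, instead of overwriting it, keeps any directories that were already configured in the session.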

Hint: stop the Gradle daemon with `./gradlew --stop` to make sure the new environment variables are passed to the Gradle process.

Build the project including tests:

```shell
./gradlew build
```

To run individual tests:

```shell
# Quote the pattern so the shell does not try to expand the wildcard.
./gradlew :spark-extension:test --tests '*.FletcherReductionExampleSuite'
```

- `spark-extension`: integrates with Spark and replaces the default strategy for generating the execution plan.
- `arrow-processor`: Scala part that forwards the Arrow vectors to the native code.
- `arrow-processor-native`: native code responsible for executing the processing steps. For now it calls different C++ components (e.g. the Arrow Parquet reader and Gandiva); later it should call a Fletcher runtime.

- All test data is generated with the test `DataGenerator`.
- The performance scenarios are defined in the `jmh` package.
- To execute the JMH benchmarks, run the following commands:

```shell
./gradlew jmhJar                                # builds the JMH jar
mkdir -p spark-extension/build/reports/jmh/     # creates the output directory
./spark-extension/build/libs/run_benchmark.sh   # executes the benchmarks and passes the configuration parameters
```