This is a simplified interface for TensorFlow, to get people started on predictive analytics and data mining.

The library covers a variety of needs, from linear models to deep learning applications such as text and image understanding.

- TensorFlow provides a good backbone for building different shapes of machine learning applications.

- It will continue to evolve both in the distributed direction and as general pipelining machinery.

- Skflow aims to smooth the transition from the Scikit Learn world of one-liner machine learning into the more open world of building different shapes of ML models. You can start by using fit/predict and move to the TensorFlow APIs as you get comfortable.

- Skflow also aims to provide a set of reference models that are easy to integrate with existing code.

- Python: 2.7, 3.4+

- Scikit Learn: 0.16, 0.17, 0.18+

- TensorFlow: 0.7+

First, you need to make sure you have TensorFlow and Scikit Learn installed.

Run the following to install the stable version from PyPI:

pip install skflow

Or run the following to install the development version from GitHub:

pip install git+git://github.com/tensorflow/skflow.git

- Introduction to Scikit Flow and why you want to start learning TensorFlow

- DNNs, custom model and Digit recognition examples

- Categorical variables: One hot vs Distributed representation

- More coming soon.

- Twitter #skflow.

- Stack Overflow with the skflow tag for questions and struggles.

- GitHub issues for technical discussions and feature requests.

- Gitter channel for non-trivial discussions.

Below are a few simple examples of the API. For more, see the examples folder.

- It's useful to rescale the dataset to zero mean and unit standard deviation before passing it to an estimator. Stochastic Gradient Descent doesn't always do the right thing when variables are on very different scales.

- Categorical variables should be handled (for example, converted to a one-hot or distributed representation) before passing input to the estimator.
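The two tips above can be sketched in plain Python. This is only an illustration under stated assumptions: the helper names `standardize_columns` and `one_hot` are hypothetical and not part of skflow, and in practice a library utility such as Scikit Learn's `preprocessing.StandardScaler` would normally do the rescaling.

```python
from statistics import mean, pstdev


def standardize_columns(rows):
    """Rescale each column to zero mean and unit (population) standard deviation.

    Hypothetical helper for illustration only; not part of skflow.
    """
    columns = list(zip(*rows))
    means = [mean(col) for col in columns]
    # Constant columns have zero deviation; divide by 1.0 instead to avoid
    # a division by zero.
    stds = [pstdev(col) or 1.0 for col in columns]
    return [
        [(value - m) / s for value, m, s in zip(row, means, stds)]
        for row in rows
    ]


def one_hot(values):
    """Encode categorical values as one-hot vectors, one slot per category.

    Hypothetical helper for illustration only; not part of skflow.
    """
    categories = sorted(set(values))
    index = {category: i for i, category in enumerate(categories)}
    encoded = [
        [1.0 if index[value] == i else 0.0 for i in range(len(categories))]
        for value in values
    ]
    return encoded, categories


# Two features on very different scales end up on the same scale.
raw = [[1.0, 1000.0], [2.0, 2000.0], [3.0, 3000.0]]
scaled = standardize_columns(raw)

# A categorical feature becomes numeric input an estimator can consume.
colors, categories = one_hot(["red", "green", "red"])
```

After this step both columns of `scaled` have the same spread, so a gradient step affects each feature comparably, and `colors` holds one numeric vector per input value.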

Simple linear classification:

import skflow

from sklearn import datasets, metrics

iris = datasets.load_iris()

classifier = skflow.TensorFlowLinearClassifier(n_classes=3)

classifier.fit(iris.data, iris.target)

score = metrics.accuracy_score(iris.target, classifier.predict(iris.data))

print("Accuracy: %f" % score)

Simple linear regression:

import skflow

from sklearn import datasets, metrics, preprocessing

boston = datasets.load_boston()

X = preprocessing.StandardScaler().fit_transform(boston.data)

regressor = skflow.TensorFlowLinearRegressor()

regressor.fit(X, boston.target)

score = metrics.mean_squared_error(boston.target, regressor.predict(X))

print("MSE: %f" % score)

Example of a 3-layer network with 10, 20, and 10 hidden units respectively:

import skflow

from sklearn import datasets, metrics

iris = datasets.load_iris()

classifier = skflow.TensorFlowDNNClassifier(hidden_units=[10, 20, 10], n_classes=3)

classifier.fit(iris.data, iris.target)

score = metrics.accuracy_score(iris.target, classifier.predict(iris.data))

print("Accuracy: %f" % score)

Example of how to pass a custom model to the TensorFlowEstimator:

import skflow

from sklearn import datasets, metrics

iris = datasets.load_iris()

def my_model(X, y):
    """This is a DNN with 10, 20, 10 hidden layers, and dropout of 0.5 probability."""
    layers = skflow.ops.dnn(X, [10, 20, 10], keep_prob=0.5)
    return skflow.models.logistic_regression(layers, y)

classifier = skflow.TensorFlowEstimator(model_fn=my_model, n_classes=3)

classifier.fit(iris.data, iris.target)

score = metrics.accuracy_score(iris.target, classifier.predict(iris.data))

print("Accuracy: %f" % score)

Each estimator has a save method that takes the path of a folder where all model information will be saved. To restore a model, call skflow.TensorFlowEstimator.restore(path); it returns an object of your class.
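To illustrate the save/restore pattern described above, here is a minimal sketch using only the Python standard library. It is not skflow's implementation: `ToyEstimator`, `ToyRegressor`, and the `model.json` file name are hypothetical, chosen only to show how a class-level `restore` can hand back an object of the class that was saved.

```python
import json
import os
import tempfile


class ToyEstimator:
    """Hypothetical base class standing in for an estimator with save/restore."""

    # Maps class names to classes so restore() can rebuild the right type.
    _registry = {}

    def __init_subclass__(cls, **kwargs):
        super().__init_subclass__(**kwargs)
        ToyEstimator._registry[cls.__name__] = cls

    def __init__(self, **params):
        self.params = params

    def save(self, path):
        """Write the class name and parameters into the folder `path`."""
        os.makedirs(path, exist_ok=True)
        state = {"class_name": type(self).__name__, "params": self.params}
        with open(os.path.join(path, "model.json"), "w") as f:
            json.dump(state, f)

    @classmethod
    def restore(cls, path):
        """Read the folder back and rebuild an object of the saved class."""
        with open(os.path.join(path, "model.json")) as f:
            state = json.load(f)
        saved_class = ToyEstimator._registry[state["class_name"]]
        return saved_class(**state["params"])


class ToyRegressor(ToyEstimator):
    """Hypothetical subclass, used to show that restore returns the saved type."""


with tempfile.TemporaryDirectory() as folder:
    ToyRegressor(learning_rate=0.1).save(folder)
    # Called on the base class, yet the result is a ToyRegressor.
    restored = ToyEstimator.restore(folder)
```

The design point is that the saved folder records which class produced it, so callers only need the base class and a path to get the original type back.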

Some example code:

import skflow

classifier = skflow.TensorFlowLinearRegressor()

classifier.fit(...)

classifier.save('/tmp/tf_examples/my_model_1/')

new_classifier = TensorFlowEstimator.restore('/tmp/tf_examples/my_model_2')

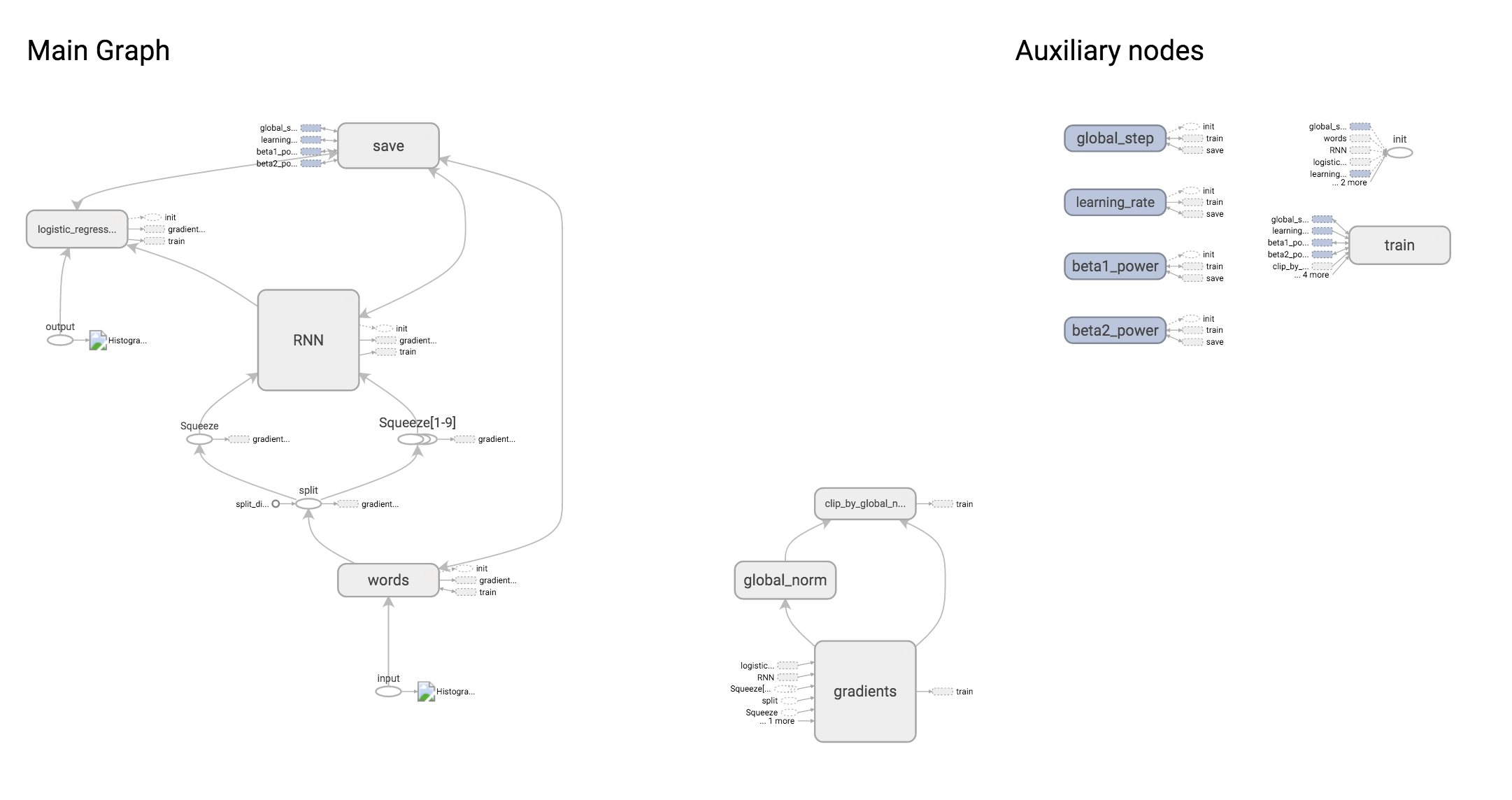

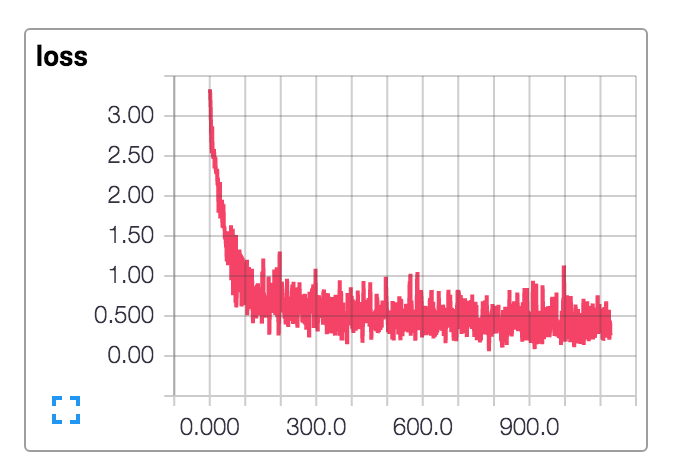

new_classifier.predict(...)To get nice visualizations and summaries you can use logdir parameter on fit. It will start writing summaries for loss and histograms for variables in your model. You can also add custom summaries in your custom model function by calling tf.summary and passing Tensors to report.

classifier = skflow.TensorFlowLinearRegression()

classifier.fit(X, y, logdir='/tmp/tf_examples/my_model_1/')Then run next command in command line:

tensorboard --logdir=/tmp/tf_examples/my_model_1and follow reported url.

See the examples folder for:

- Easy way to handle categorical variables - words are just one example of a categorical variable.

- Text Classification - see examples for RNN, CNN on word and characters.

- Language modeling and text sequence to sequence.

- Images (CNNs) - see example for digit recognition.

- More & deeper - different examples showing DNNs and CNNs
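As a rough sketch of the first item, treating words as a categorical variable usually starts by mapping each distinct word to an integer id, which can then feed a one-hot or distributed representation. The function names below are hypothetical and not taken from the examples folder. By assumption, id 0 is reserved here for both padding and words not seen when the vocabulary was built.

```python
def fit_vocabulary(documents):
    """Assign an integer id (starting at 1) to each distinct lowercase word.

    Hypothetical helper for illustration only; not part of skflow.
    """
    vocabulary = {}
    for document in documents:
        for word in document.lower().split():
            if word not in vocabulary:
                vocabulary[word] = len(vocabulary) + 1
    return vocabulary


def transform(documents, vocabulary, max_length):
    """Convert documents to fixed-length id sequences.

    Unknown words map to 0, long documents are truncated, and short ones
    are padded with 0 so every row has exactly `max_length` entries.
    """
    rows = []
    for document in documents:
        ids = [vocabulary.get(word, 0) for word in document.lower().split()]
        ids = ids[:max_length]
        ids += [0] * (max_length - len(ids))
        rows.append(ids)
    return rows


vocabulary = fit_vocabulary(["the cat sat", "the dog"])
word_ids = transform(["The bird sat down", "the dog"], vocabulary, max_length=3)
```

Each row of `word_ids` has the same length, which is the shape an estimator needs before the ids are expanded into one-hot vectors or looked up in an embedding.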