DEVELOPER PREVIEW NOTE: This project is currently available as a preview and should not be considered for production use at this time.

This Quick Start is a reference architecture and example template showing how to use the AWS Cloud Development Kit (CDK) to provision both an Amazon Elastic Kubernetes Service (EKS) cluster and the Amazon Virtual Private Cloud (VPC) network that it will live in. Alternatively, you can specify an existing VPC to use instead.

When provisioning the cluster, you can either use EC2 worker Nodes via an EKS Managed Node Group (with either OnDemand or Spot capacity types) or build a Fargate-only cluster.

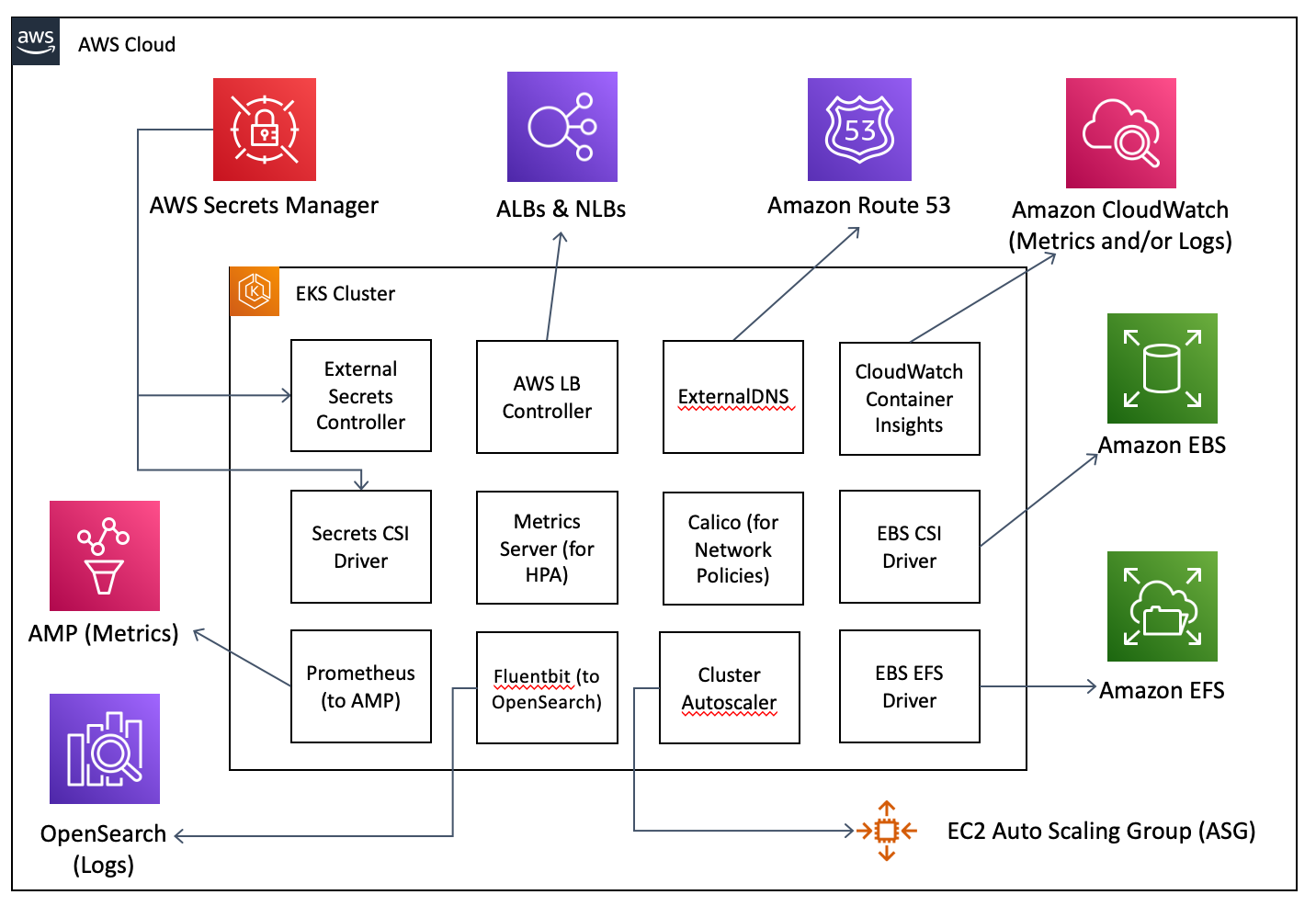

It will also help provision various associated add-ons to provide capabilities such as:

- Integration with the AWS Network Load Balancer (NLB) for Services and Application Load Balancer (ALB) for Ingresses via the AWS Load Balancer Controller.

- Integration with Amazon Route 53 via the ExternalDNS controller.

- Integration with Amazon Elastic Block Store (EBS) and Amazon Elastic File System (EFS) via the Kubernetes Container Storage Interface (CSI) Drivers for them both.

  - When enabling EBS we also create a StorageClass using the CSI Driver called `ebs`

  - When enabling EFS we also create an EFS Filesystem as well as a StorageClass called `efs` that is set up for dynamic provisioning to it via folders

- Integration with EC2 Auto Scaling of the underlying worker Nodes via the Cluster Autoscaler - which scales in/out the Nodes to ensure that all of your Pods are schedulable but your cluster is not over-provisioned.

- Integration with CloudWatch for metrics and/or logs for cluster monitoring via CloudWatch Container Insights.

- Integration with Amazon OpenSearch Service (successor to Amazon Elasticsearch Service) for logs for cluster monitoring - both provisioning the OpenSearch Domain as well as Fluent Bit to ship the logs from the cluster to it.

- Integration with the Amazon Managed Service for Prometheus (AMP) for cluster metrics and monitoring - both provisioning the AMP Workspace as well as a local short-retention Prometheus on the cluster to collect and push the metrics to it.

  - This includes an optional local self-hosted Grafana to visualise the metrics. In production you can opt to use Amazon Managed Grafana instead - but setting that up and pointing it at the AMP is outside the scope of this Quick Start.

- The Kubernetes Metrics Server, which is required for, among other things, the Horizontal Pod Autoscaler to work.

- Integration with Security Groups for network firewalling via the Amazon VPC Container Network Interface (CNI) plugin, reconfigured to enforce Security Groups for Pods.

- Alternatively, the Calico network policy engine which enforces Kubernetes Network Policies by managing host firewalls on all the Nodes.

- Integration with AWS Secrets Manager via the External Secrets operator and/or the Secrets Store CSI Driver

- Insight into costs and how to allocate them to particular workloads via the open-source Kubecost

Also, since the Quick Start deploys both EKS as well as many of the observability tools like OpenSearch and Grafana into private subnets (i.e. not on the Internet), we provide two secure mechanisms to access and manage them:

- A bastion EC2 Instance preloaded with the right tools and associated with the right IAM role/permissions that is not reachable on the Internet - only via AWS Systems Manager Session Manager

- An AWS Client VPN

While these are great for a proof-of-concept (POC) or development environment, in production you will likely have a site-to-site VPN or an AWS Direct Connect connection to facilitate this secure access in a more scalable way.

The provisioning of all these add-ons can be enabled/disabled by changing parameters in the cdk.json file.
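As a rough illustration of how such on/off parameters can be read, the sketch below parses a cdk.json-style document and reports which toggles are switched on. It assumes the parameters live under the standard CDK `context` key and that a toggle may be written either as a JSON boolean or as the string "True"/"False". The parameter names are ones mentioned in this README; the helper `is_enabled`, the `app` command and the sample values are illustrative assumptions, not taken from the template.

```python
import json

# Sample cdk.json content. The parameter names appear in this README;
# the "app" command and all values are illustrative assumptions.
SAMPLE_CDK_JSON = """
{
  "app": "python3 eks_cluster.py",
  "context": {
    "create_new_vpc": "True",
    "create_new_cluster_admin_role": "False",
    "eks_node_spot": false,
    "eks_node_instance_type": "m5.large"
  }
}
"""


def is_enabled(context, key):
    """Return True if a toggle is on, accepting booleans or "True"/"False" strings."""
    value = context.get(key, False)
    if isinstance(value, bool):
        return value
    return str(value).strip().lower() == "true"


context = json.loads(SAMPLE_CDK_JSON)["context"]
toggles = ["create_new_vpc", "create_new_cluster_admin_role", "eks_node_spot"]
enabled = [name for name in toggles if is_enabled(context, name)]
print("Enabled:", enabled)
```

Accepting both representations keeps the check tolerant of however a given cdk.json happens to spell its True/False values.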

The CDK is a great tool to use since it can orchestrate both the AWS and Kubernetes APIs from the same template(s) - as well as set up things like the IAM Roles for Service Accounts (IRSA) mappings between the two.

NOTE: You do not need to know how to use the CDK, or know Python, to use this Quick Start as-is with the instructions provided. We expose enough parameters in cdk.json to allow you to customise it to suit most use cases without changing the template (just changing the parameters). You can, of course, also fork it and use it as the inspiration or the foundation for your own bespoke templates - but many customers won't need to do so.

The template to provision the cluster and the add-ons is in the cluster-bootstrap/ folder. The cdk.json contains the parameters to use and the template is mostly in the eks_cluster.py file - though it imports/leverages the various other .py and .yaml files within the folder. If you have the CDK as well as the required packages from pip installed then running cdk deploy in this folder will deploy the Quick Start.

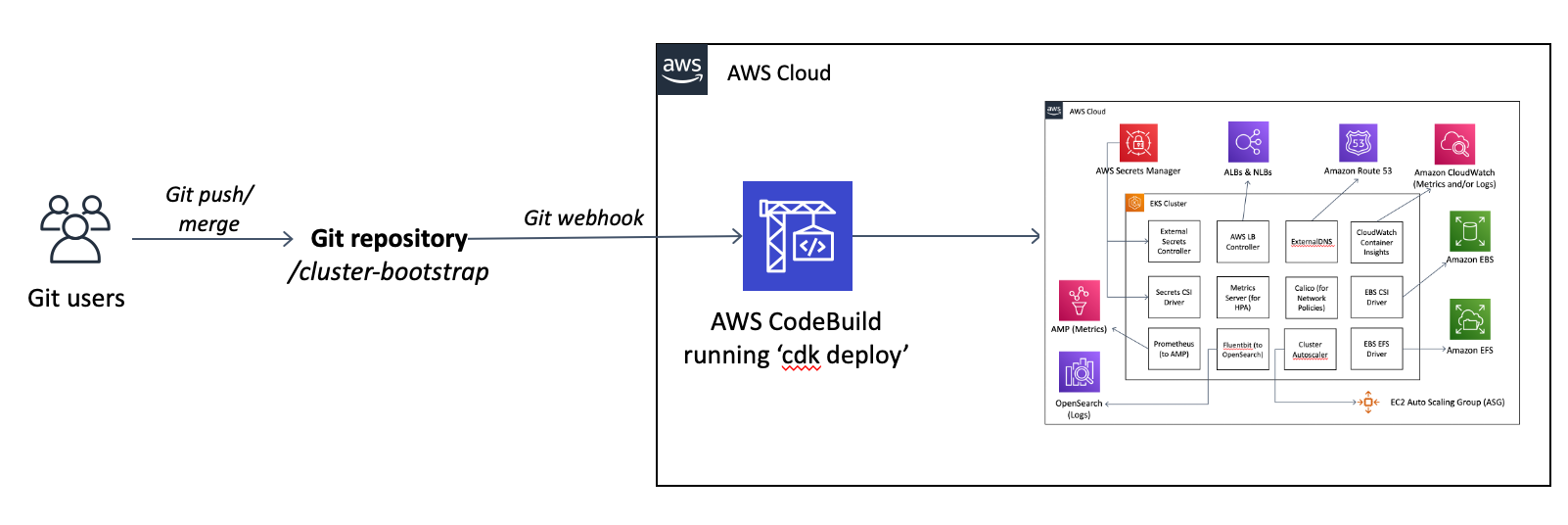

The ideal way to deploy this template, though, is via AWS CodeBuild - which provides a GitOps-style pipeline for not just the initial provisioning but also the ongoing changes/maintenance of the environment. This means that if you want to change something about the running cluster you just need to change the cdk.json and/or eks_cluster.py and merge the change to the git branch/repo; CodeBuild will then automatically apply it for you.

We provide the buildspec.yml that tells CodeBuild how to install the CDK (via npm and pip) and then run the cdk deploy command for you. In the cluster-codebuild/ folder we also provide both a CDK template (eks_codebuild.py) and the resulting CloudFormation template (pre-generated for you with a cdk synth command) to set up the CodeBuild project.

To save you from the circular dependency of using the CDK (on your laptop?) to create the CodeBuild to then run the CDK for you to provision the cluster you can just use the cluster-codebuild/EKSCodeBuildStack.template.json CloudFormation template directly.

Alternatively, you can install and use CDK directly (not via CodeBuild) on another machine such as your laptop or an EC2 Bastion. This approach is documented here.

While you can toggle any of the parameters in a custom configuration, we include three cdk.json files in cluster-bootstrap/ covering three possible configurations:

- The default cdk.json or cdk.json.default - if you don't change anything the default parameters will deploy you the most managed yet minimal EKS cluster including:

  - Managed Node Group of m5.large Instances

  - AWS Load Balancer Controller

  - ExternalDNS

  - EBS & EFS CSI Drivers

  - Cluster Autoscaler

  - Bastion

  - Metrics Server

  - CloudWatch Container Insights for Metrics and Logs

    - With a log retention of 7 days

  - Security Groups for Pods for network firewalling

  - Secrets Manager CSI Driver (for Secrets Manager Integration)

- The Cloud Native Community cdk.json.community - replace the `cdk.json` file with this file (making it cdk.json instead) and get:

  - Managed Node Group of m5.large Instances

  - AWS Load Balancer Controller

  - ExternalDNS

  - EBS & EFS CSI Drivers

  - Cluster Autoscaler

  - Bastion

  - Metrics Server

  - Amazon OpenSearch Service (successor to Amazon Elasticsearch Service) for logs

  - Amazon Managed Service for Prometheus (AMP) w/self-hosted Grafana

  - Calico for Network Policies for network firewalling

  - External Secrets Controller (for Secrets Manager Integration)

- The Fargate-only cdk.json.fargate - replace the `cdk.json` file with this file (making it cdk.json instead) and get:

  - Fargate profile to run everything in the `kube-system` and `default` Namespaces via Fargate

  - AWS Load Balancer Controller

  - ExternalDNS

  - Bastion

  - Metrics Server

  - CloudWatch Logs (because the Kubernetes Filter for sending to Elastic/OpenSearch doesn't work with Fargate ATM)

  - Amazon Managed Service for Prometheus (AMP) w/self-hosted Grafana (because CloudWatch Container Insights doesn't work with Fargate ATM)

  - Security Groups for Pods for network firewalling (built-in to Fargate so we don't need to reconfigure the CNI)

  - External Secrets Controller (for Secrets Manager Integration)
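Switching between these configurations amounts to copying one of the variant files over cdk.json. A minimal sketch of that copy in Python follows; the file names are the ones listed above, while the helper name `select_base_config` is hypothetical and not part of the Quick Start.

```python
import shutil
from pathlib import Path

# Variant suffixes of the cdk.json.* files named in this README.
VARIANTS = {"default", "community", "fargate"}


def select_base_config(bootstrap_dir, variant):
    """Copy cdk.json.<variant> over cdk.json in bootstrap_dir and return the new path.

    Hypothetical helper: it only automates the manual copy described above.
    """
    if variant not in VARIANTS:
        raise ValueError(f"unknown variant {variant!r}; expected one of {sorted(VARIANTS)}")
    folder = Path(bootstrap_dir)
    source = folder / f"cdk.json.{variant}"
    target = folder / "cdk.json"
    shutil.copyfile(source, target)  # overwrites any existing cdk.json
    return target
```

For example, `select_base_config("cluster-bootstrap", "fargate")` run from the repository root would make the Fargate-only parameters the active cdk.json, assuming the folder layout described in this README.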

- Fork this Git Repo to your own GitHub account - for instructions see https://docs.github.com/en/get-started/quickstart/fork-a-repo

- Generate a personal access token on GitHub - https://docs.github.com/en/github/authenticating-to-github/creating-a-personal-access-token

- Run `aws codebuild import-source-credentials --server-type GITHUB --auth-type PERSONAL_ACCESS_TOKEN --token <token_value>` to provide your token to CodeBuild

- Select which of the three cdk.json files (cdk.json.default, cdk.json.community or cdk.json.fargate) you'd like as a base and copy that over the top of `cdk.json` in the `cluster-bootstrap/` folder.

- Edit the `cdk.json` file to further customise it to your environment. For example:

  - If you want to use an existing IAM Role to administer the cluster instead of creating a new one (which you'll then have to assume to administer the cluster) set `create_new_cluster_admin_role` to False and then add the ARN for your role in `existing_admin_role_arn`

    - NOTE that if you bring an existing role AND deploy a Bastion then this role will get assigned to the Bastion by default as well (so that the Bastion can manage the cluster). This means that you need to allow `ec2.amazonaws.com` to perform the action `sts:AssumeRole` in the Trust Policy / Assume Role Policy of this role, as well as add the Managed Policy `AmazonSSMManagedInstanceCore` to this role (so that your Bastion can register with SSM via this role and Session Manager will work)

  - If you want to change the VPC CIDR or the mask/size of the public or private subnets to be allocated from within that block change `vpc_cidr`, `vpc_cidr_mask_public` and/or `vpc_cidr_mask_private`.

  - If you want to use an existing VPC rather than creating a new one then set `create_new_vpc` to False and set `existing_vpc_name` to the name of the VPC. The CDK will connect to AWS and work out the VPC and subnet IDs, and which are public and private, for you from just the name.

  - If you'd like an instance type different from the default `m5.large` or to set the desired or maximum quantities change `eks_node_instance_type`, `eks_node_quantity`, `eks_node_max_quantity`, etc.

    - NOTE that not everything in the Quick Start appears to work on Graviton/ARM64 Instance types. Initial testing shows the following add-ons do not work (do not have multi-arch images) - and we'll track them and enable when possible: kubecost, calico and the CSI secrets store provider.

  - If you'd like the Managed Node Group to use Spot Instances instead of the default OnDemand change `eks_node_spot` to True

  - And there are other parameters in the file to change with names that are descriptive as to what they adjust. Many are detailed in the Additional Documentation around the add-ons below.

- Commit and Push your changes to `cdk.json`

- Find and replace `https://github.com/aws-quickstart/quickstart-eks-cdk-python.git` with the address to your GitHub fork in cluster-codebuild/EKSCodeBuildStack.template.json

- (Only if you are not using the main branch) Find and replace `main` with the name of your branch.

- Go to the console for the CloudFormation service in the AWS Console and deploy your updated cluster-codebuild/EKSCodeBuildStack.template.json

- Go to the CodeBuild console, click on the Build project that starts with `EKSCodeBuild`, and then click the Start build button.

- (Optional) You can click the Tail logs button to follow along with the build process
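If you bring an existing admin role and also deploy the Bastion, the trust policy requirement from the steps above can be checked before starting the build. The sketch below is one possible check written against the standard IAM policy JSON shape; the function names are hypothetical, and it deliberately ignores wildcards and `Condition` blocks, so treat a negative result as a prompt to inspect the policy rather than as a verdict.

```python
def _as_list(value):
    """IAM policy fields may hold a single string or a list of strings."""
    if isinstance(value, str):
        return [value]
    return list(value)


def allows_ec2_assume_role(trust_policy):
    """Return True if an Allow statement lets ec2.amazonaws.com call sts:AssumeRole.

    Simplified sketch: wildcard actions/principals and Condition blocks are not evaluated.
    """
    statements = trust_policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be given without a list
        statements = [statements]
    for statement in statements:
        if statement.get("Effect") != "Allow":
            continue
        principal = statement.get("Principal", {})
        if not isinstance(principal, dict):
            continue  # e.g. "*", which this sketch does not treat as a match
        services = _as_list(principal.get("Service", []))
        actions = _as_list(statement.get("Action", []))
        if "ec2.amazonaws.com" in services and "sts:AssumeRole" in actions:
            return True
    return False


# The usual shape of a trust policy that lets EC2 instances assume a role.
EC2_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "ec2.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}

print(allows_ec2_assume_role(EC2_TRUST_POLICY))
```

The policy document to feed in can be taken from the role's Trust relationships tab in the IAM console. Attaching `AmazonSSMManagedInstanceCore` is a separate requirement that this check does not cover.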

NOTE: Deploying via CodeBuild in this way also enables a GitOps pattern: changes merged to the cluster-bootstrap folder on the configured branch (main by default) will re-trigger this CodeBuild project, via webhook, to run another npx cdk deploy.
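The find-and-replace of the repository address and branch in cluster-codebuild/EKSCodeBuildStack.template.json can also be scripted. The sketch below works on the template text as a plain string. The helper `retarget_template` is hypothetical, the sample fragment is illustrative rather than copied from the real template, and the branch step assumes the whole word `main` appears in the template only as the branch name.

```python
import re

# Upstream repository address, as given in this README.
UPSTREAM_REPO = "https://github.com/aws-quickstart/quickstart-eks-cdk-python.git"


def retarget_template(template_text, fork_url, branch="main"):
    """Point the template text at a fork and, optionally, a non-main branch.

    Hypothetical helper. The branch is replaced first so that a fork URL
    containing the word "main" is not rewritten by the branch step.
    """
    updated = template_text
    if branch != "main":
        # Whole-word match, so strings such as "domain" are left alone.
        updated = re.sub(r"\bmain\b", lambda _match: branch, updated)
    return updated.replace(UPSTREAM_REPO, fork_url)


# Illustrative fragment only; the real template's layout may differ.
fragment = '{"Location": "' + UPSTREAM_REPO + '", "SourceVersion": "main"}'
print(retarget_template(fragment, "https://github.com/example-user/my-fork.git", "dev"))
```

Reviewing the resulting diff before deploying the template is still worthwhile, since a blanket replacement of `main` could touch text that is not a branch reference.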

- Deploy and connect to the Bastion

- Deploy and connect to the Client VPN

- Deploy and connect to OpenSearch for log search and visualisation

- Deploy and connect to Prometheus (AMP) and Grafana for metrics search and visualisation

- Deploy and connect to Kubecost for cost/usage analysis and attribution

- Deploy Open Policy Agent (OPA) Gatekeeper and sample policies via the Flux GitOps Operator

- Upgrading your EKS Cluster and add-ons via the CDK

- Deploying a few included demo/sample applications showing how to use the various add-ons