This article was originally meant to record what I have read and surveyed about Object Detection. Later I realized it would be much more useful as a beginner-oriented introduction, so here it is.

I will try to keep it shallow, friendly, and as organized as I can, so that beginners don't get scared off or lost in terminology and formulas.

Btw, I'm not a native English speaker.

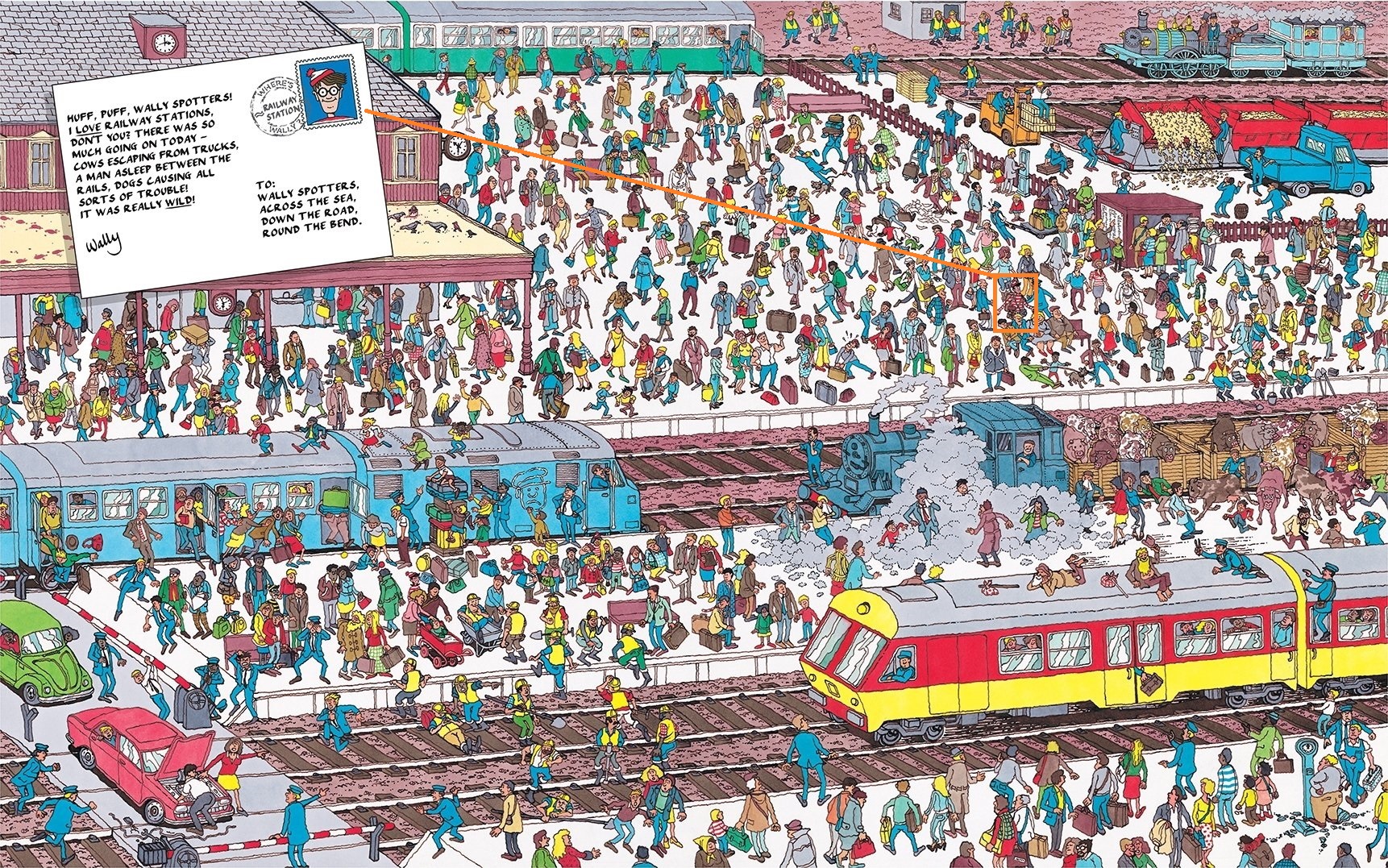

Generally speaking, Object Detection is the task of locating certain objects in an image and telling what each object is, e.g., Where's Wally. Humans do this so effortlessly that you may not notice how hard it actually is, not to mention designing a program or algorithm to do it.

That is why we use Machine Learning, nowadays especially Neural Networks, to solve this task. Rather than designing an algorithm that detects objects directly, we design a program that can learn how to detect objects.

For example, people have used Neural Networks to find Where's Wally / Waldo, like this and this.

If you want to go through the history of Object Detection from the very beginning, check out Lecture 1 of Stanford CS231n. It's a very good course for anyone who wants to study computer vision. However, it is not designed for beginners, so if you are new to this topic, taking a Machine Learning course on a MOOC site first would probably be more helpful.

You will usually see Object Detection mentioned along with keywords like Machine Learning, Computer Vision, Image Classification, Neural Network, CNN, and so on. To clarify them, I will list the ones I've heard of and explain how they relate to each other.

Keep in mind that my shallow explanations may not be comprehensive, because every term I'm going to mention could be a topic that takes months to study.

- Machine Learning is the study of how to design programs that can automatically figure out how to solve various tasks from data, without step-by-step human instructions.

- Classifier

- Training

- Computer Vision is a field that nowadays relies heavily on Machine Learning. Its goal is much the same as Machine Learning's, but it focuses only on tasks that process images or video.

- Image Classification is a Computer Vision task whose goal is to automatically tell what object is in an image.

- There are also other tasks, like Image Segmentation, Image Captioning, and so on.

- Neural Network is a family of Machine Learning algorithms, often used as classifiers. Thanks to the growing amount of data and computing power, in recent years it has outperformed most other algorithms on most Machine Learning tasks. Its study was originally inspired by how neurons work in the human brain.

- Convolutional Neural Network (CNN) is a kind of Neural Network structure that is well suited to most Computer Vision tasks. The core idea is to use convolutional layers, loosely inspired by how the human brain processes what we see.

- TensorFlow is a
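"Convolutional layer" sounds scarier than it is. Below is a minimal pure-Python sketch of the operation a convolutional layer applies with a single kernel: slide a small grid of weights over the image and take a weighted sum at each position. The name `conv2d` is a hypothetical helper, not a library API. In a real CNN the kernel values are learned during training, each layer has many kernels, and a bias and activation function are added; all of that is left out here.

```python
def conv2d(image, kernel):
    """Hypothetical helper, a toy sketch: slide `kernel` over `image` (both
    lists of lists) and return the map of weighted sums ("valid" positions
    only, stride 1, no padding). Like most deep learning libraries, it does
    not flip the kernel, so strictly speaking this is cross-correlation."""
    kh, kw = len(kernel), len(kernel[0])
    ih, iw = len(image), len(image[0])
    output = []
    for y in range(ih - kh + 1):
        row = []
        for x in range(iw - kw + 1):
            # Weighted sum of the kh x kw patch whose top-left corner is (x, y)
            total = 0.0
            for dy in range(kh):
                for dx in range(kw):
                    total += image[y + dy][x + dx] * kernel[dy][dx]
            row.append(total)
        output.append(row)
    return output


# A tiny image with a vertical edge, and a kernel that responds to
# left-to-right brightness changes
image = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1]]
print(conv2d(image, [[-1, 1]]))  # [[0.0, 1.0, 0.0], [0.0, 1.0, 0.0], [0.0, 1.0, 0.0]]
```

The output is large only where the edge is, which is the intuition behind CNN filters acting as learned feature detectors.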

If you want more detail, try a Google search.

I split this section into 2 parts: Before Neural Network and Neural Network. In the first part, I will briefly introduce some methods that were either common or state-of-the-art in their time. In the next part, I will introduce Neural Network methods, which are actually the reason I started writing this.

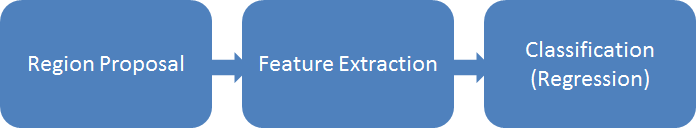

As far as I know, most traditional methods from around 2006 - 2010 fit into a three-step flow. See the figure below.

To put it simply, this is what each step does:

- Region Proposal - Find regions that may contain an object. The most common and simplest method is the sliding window: as the figure below shows, a fixed-size window slides over the entire image, and each area it covers is treated as a proposed region.

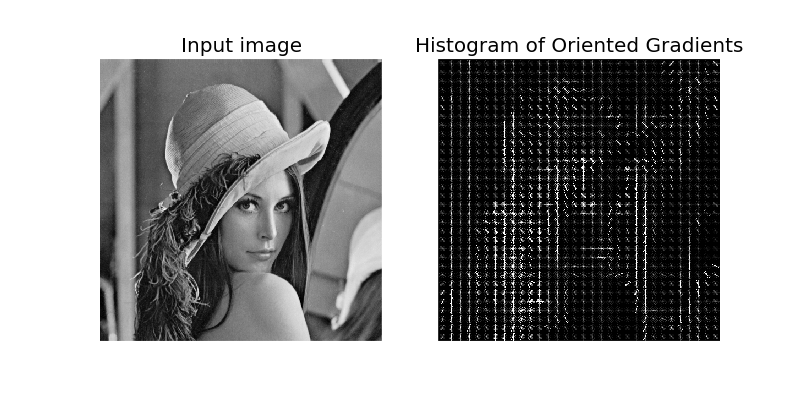

- Feature Extraction - Transform each proposed region into a different representation, usually one designed by humans. For example, Histogram of Oriented Gradients (HOG) computes the gradients between neighboring pixels and uses histograms of their orientations as the representation of the image. It was once very popular because of its promising performance on object detection.

- Classification - Decide whether the proposed region actually contains an object, based on its transformed representation. This step needs a classifier trained on that kind of representation. If you are interested in it, taking a Machine Learning course on a MOOC site, e.g., Coursera or Udacity, is a good idea.

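To make the three steps more concrete, here is a toy pure-Python sketch of the whole flow on a grayscale image stored as a list of lists. All function names are hypothetical. The feature is a heavily simplified HOG-style gradient histogram (no cells, blocks, or normalization), and the "classifier" is a hand-set linear scorer standing in for a trained classifier such as a linear SVM. Boxes are assumed to be `(x1, y1, x2, y2)` tuples.

```python
import math

# Hypothetical helper names throughout; a toy sketch, not a real detector.

def sliding_windows(img_h, img_w, win_h, win_w, stride):
    """Step 1, Region Proposal: yield every position of a fixed-size window
    as an (x1, y1, x2, y2) box, moving `stride` pixels at a time."""
    for y1 in range(0, img_h - win_h + 1, stride):
        for x1 in range(0, img_w - win_w + 1, stride):
            yield (x1, y1, x1 + win_w, y1 + win_h)


def gradient_histogram(image, box, bins=4):
    """Step 2, Feature Extraction: a heavily simplified HOG-style feature.
    One histogram of gradient orientations over the whole region, weighted
    by gradient magnitude. Real HOG also splits the region into cells,
    groups cells into blocks and normalizes each block."""
    x1, y1, x2, y2 = box
    hist = [0.0] * bins
    # Skip the region's border pixels so central differences stay inside the box
    for y in range(y1 + 1, y2 - 1):
        for x in range(x1 + 1, x2 - 1):
            gx = image[y][x + 1] - image[y][x - 1]
            gy = image[y + 1][x] - image[y - 1][x]
            magnitude = math.hypot(gx, gy)
            if magnitude == 0:
                continue
            # Unsigned orientation in [0, pi), binned into `bins` equal ranges
            angle = math.atan2(gy, gx) % math.pi
            hist[min(int(angle / math.pi * bins), bins - 1)] += magnitude
    return hist


def linear_score(feature, weights, bias):
    """Step 3, Classification: a hand-set linear scorer with the same form as
    a linear SVM's decision function (positive score = object)."""
    return sum(f * w for f, w in zip(feature, weights)) + bias


def detect(image, win, stride, weights, bias, threshold=0.0):
    """Run the three steps and keep windows whose score exceeds `threshold`."""
    h, w = len(image), len(image[0])
    detections = []
    for box in sliding_windows(h, w, win, win, stride):
        feature = gradient_histogram(image, box, bins=len(weights))
        score = linear_score(feature, weights, bias)
        if score > threshold:
            detections.append((box, score))
    return detections


# 8x8 image: dark left half, bright right half (a vertical edge at x = 4)
image = [[1 if x >= 4 else 0 for x in range(8)] for _ in range(8)]
print(detect(image, win=4, stride=2, weights=[1.0, 0.0, 0.0, 0.0], bias=-1.0))
# [((2, 0, 6, 4), 3.0), ((2, 2, 6, 6), 3.0), ((2, 4, 6, 8), 3.0)]
```

Only the windows centered on the edge fire, because the weights reward horizontal gradients. Note that overlapping windows fire on the same edge, and a real traditional detector would also scan several window sizes (or an image pyramid), which this sketch skips.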

Here are some keywords for traditional methods. I only list a few because I know very little about them.

- Region Proposal - Sliding Window, Edge Boxes, Selective Search

- Feature Extraction - Haar, HOG, LBP, ACF

- Classification - SVM, AdaBoost, DPM

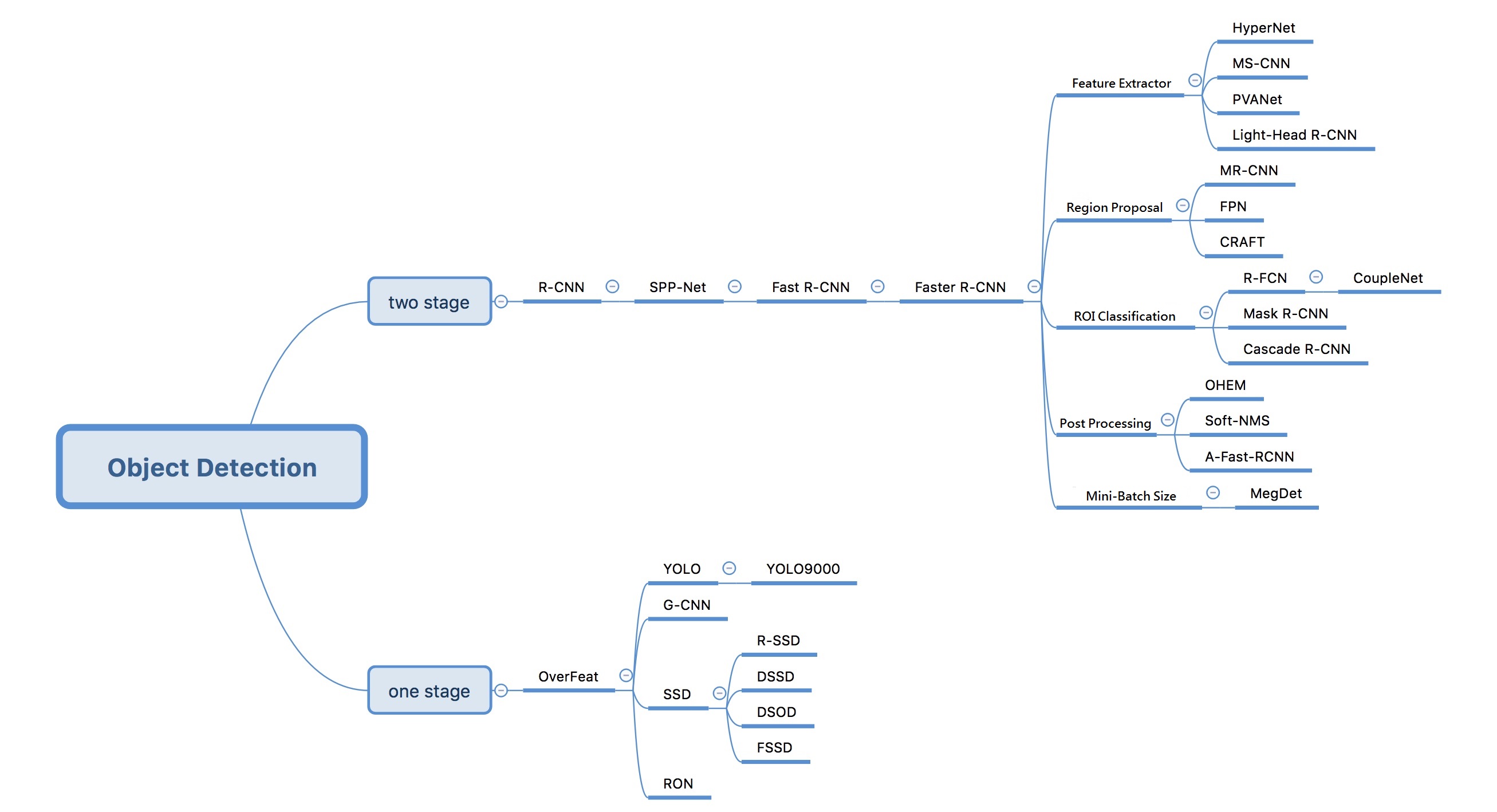

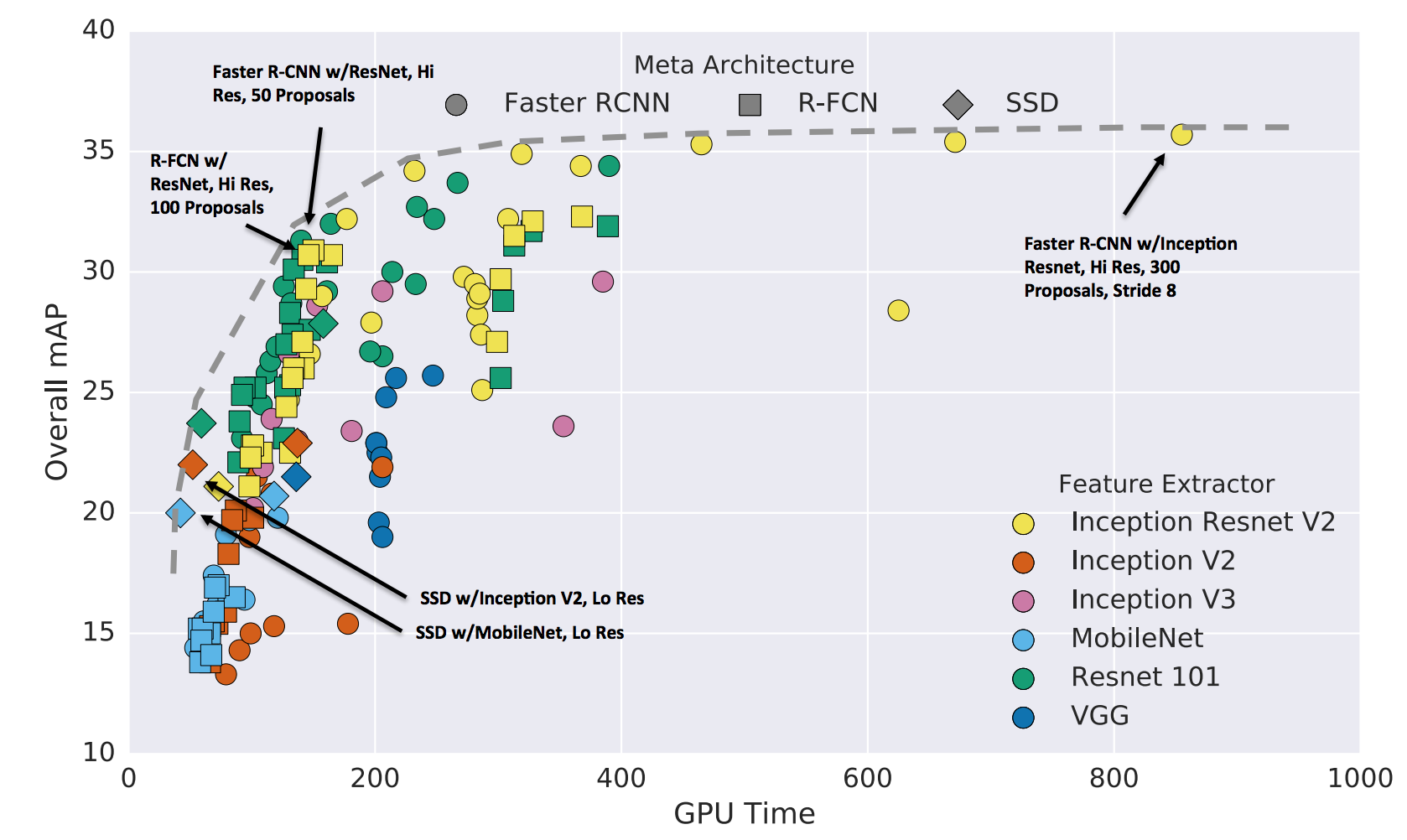

Generally, NN-based methods can be classified into 2 classes. One is often called Two-Stage Detectors because of how they approach the task: they first find regions of the image that potentially contain an object, and then tell what kind of object it is. The other is One-Stage Detectors, which attempt to solve both problems at once.

Some also say that the major difference between the two kinds is how they frame the problem: Two-Stage Detectors treat Object Detection as a classification problem, while One-Stage Detectors treat it as a regression problem. They also make different trade-offs between accuracy and speed: Two-Stage Detectors are usually more accurate, and One-Stage Detectors are usually faster.

Here is a paper list for both kinds of detectors. I only list the ones I've heard of; if I've missed something important, please let me know.

- Two-Stage Detectors

- One-Stage Detectors

I also found this tree graph quite useful for understanding the state of this research area. However, it shows that it was last updated on 12/31/17, so it might be a little outdated.

Besides the Object Detection framework itself, each method mentioned above must also be combined with a Backbone Network (some call it a Feature Extractor, since it plays the same role as feature extraction in the three-step flow above) to work. Different Backbone Networks mean different structures, different achievable performance, and different computing power requirements. Many classic neural network structures have been proposed over the years, and here are the most common ones.

I only link the original papers, because each structure I mention has many variants. You may see names like ResNet-101, VGG-16, MobileNet v1, and so on. Some suffixes just mean a different configuration (e.g., the number of layers in ResNet-101 or VGG-16), while others mark a significant breakthrough. Just keep in mind that network structure is a very active research topic, so these networks keep getting improved.

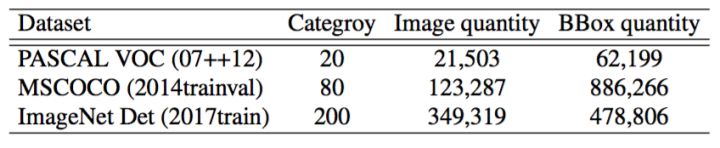

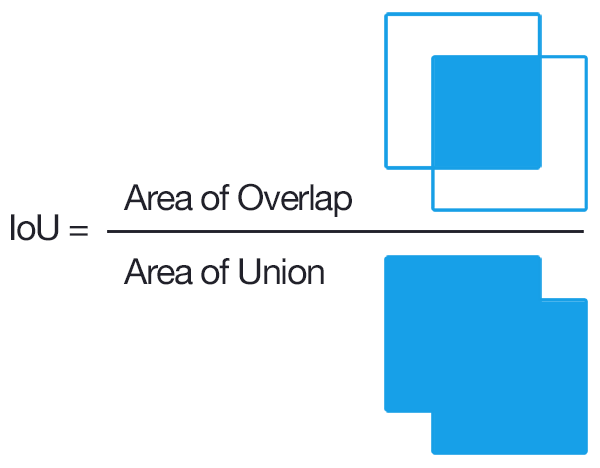

- COCO

- 80K training images, 40K val images and 20K test images

- 80 categories, around 7.2 objects per image

- Most papers use 80K train + 35K val for training and only the remaining 5K val for validation

- Pascal VOC 07 / 12

- Most papers train on 07 + 12 trainval and test on the 07 test set

- ImageNet Det

Generally, COCO is the most difficult one because it contains many small objects.

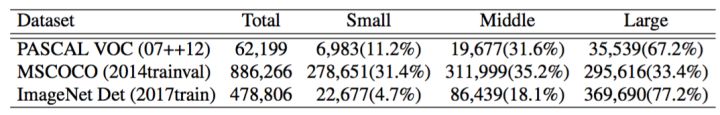

To compare detection performance between methods, two metrics are used most often: Intersection over Union (IoU) and mean Average Precision (mAP).

- Intersection over Union (IoU) measures how much a predicted bounding box and a ground-truth bounding box overlap: the more they overlap, the higher the score. It is computed as the area of their intersection divided by the area of their union. We set a threshold on IoU and treat predictions that score below it as wrong predictions. The threshold lies in [0, 1] and is usually 0.5 or 0.75.

- mean Average Precision (mAP) is the metric we care about most, since it reflects the overall performance of a detector. Only predictions whose IoU is above the threshold count as correct. Based on this, we compute and plot a precision-recall curve for each object class separately, and the area under this curve is called Average Precision (AP). Finally, we take the mean of AP over all classes to get mean Average Precision. The value lies in [0, 100%].
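The two metrics above can be sketched in plain Python as follows. This is a minimal sketch, assuming boxes are `(x1, y1, x2, y2)` tuples and detections are `(image_id, score, box)` tuples; the function names are hypothetical. The AP here uses all-point interpolation (the precision curve is first made non-increasing, then the area under it is summed), which is only one of several variants: PASCAL VOC 2007 used 11-point interpolation, and COCO additionally averages AP over IoU thresholds from 0.5 to 0.95. For real benchmark numbers, use the official evaluation code (e.g. pycocotools).

```python
# Hypothetical helper names; a minimal sketch, not an official evaluator.

def iou(box_a, box_b):
    """Intersection over Union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def average_precision(detections, ground_truths, iou_threshold=0.5):
    """AP for ONE class.
    detections:    list of (image_id, score, box)
    ground_truths: dict image_id -> list of boxes"""
    num_gt = sum(len(boxes) for boxes in ground_truths.values())
    if num_gt == 0:
        return 0.0
    matched = {img: [False] * len(boxes) for img, boxes in ground_truths.items()}
    hits = []  # 1 = true positive, 0 = false positive, in descending score order
    for img, _score, box in sorted(detections, key=lambda d: d[1], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(ground_truths.get(img, [])):
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_j = overlap, j
        # Correct only if IoU passes the threshold and that ground truth
        # has not already been claimed by a higher-scoring detection
        if best_j >= 0 and best_iou >= iou_threshold and not matched[img][best_j]:
            matched[img][best_j] = True
            hits.append(1)
        else:
            hits.append(0)
    # Precision and recall after each detection in the ranking
    precisions, recalls, tp = [], [], 0
    for k, hit in enumerate(hits, start=1):
        tp += hit
        precisions.append(tp / k)
        recalls.append(tp / num_gt)
    # All-point interpolation: make precision non-increasing, then sum the
    # areas of the rectangles under the precision-recall curve
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(dets_by_class, gts_by_class, iou_threshold=0.5):
    """mAP: mean of per-class AP over the classes listed in gts_by_class."""
    aps = [average_precision(dets_by_class.get(c, []), gts, iou_threshold)
           for c, gts in gts_by_class.items()]
    return sum(aps) / len(aps) if aps else 0.0


gts = {
    "cat": {"img1": [(0, 0, 10, 10)], "img2": [(0, 0, 10, 10)]},
    "dog": {"img1": [(5, 5, 8, 8)]},
}
dets = {
    "cat": [("img1", 0.9, (0, 0, 10, 10)),    # exact match: true positive
            ("img2", 0.8, (20, 20, 30, 30)),  # no overlap: false positive
            ("img2", 0.7, (1, 1, 10, 10))],   # IoU = 0.81: true positive
    "dog": [("img1", 0.95, (5, 5, 8, 8))],
}
print(round(average_precision(dets["cat"], gts["cat"]), 4))  # 0.8333
print(round(mean_average_precision(dets, gts), 4))           # 0.9167
```

The functions return values in [0, 1]; papers usually multiply by 100 and report a percentage.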

Besides the two metrics above, inference time / fps is also very important, because in many cases we want to deploy the object detector on mobile devices, whose computing power is much lower than a normal PC's.
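A rough way to measure inference speed is sketched below, assuming the detector is a plain Python callable that processes one frame per call; `measure_fps` and `detect_fn` are hypothetical names. A few warm-up calls are excluded from timing because the first calls are often slower.

```python
import time

def measure_fps(detect_fn, inputs, warmup=2):
    """Hypothetical helper: average frames per second of detect_fn over
    `inputs` (one call per frame). The first `warmup` inputs are run once
    beforehand and not timed, since early calls are often slower
    (lazy initialization, cold caches)."""
    if not inputs:
        raise ValueError("need at least one input")
    for frame in inputs[:warmup]:
        detect_fn(frame)
    start = time.perf_counter()
    for frame in inputs:
        detect_fn(frame)
    elapsed = time.perf_counter() - start
    return len(inputs) / elapsed


# A fake "detector" that takes at least 10 ms per frame
fps = measure_fps(lambda frame: time.sleep(0.01), [None] * 20)
print(f"{fps:.1f} fps")
```

If the detector runs on a GPU, many frameworks launch work asynchronously, so you would need to synchronize before reading the clock (e.g. `torch.cuda.synchronize()` in PyTorch). Fps also depends heavily on hardware, input resolution, and batch size, so only compare numbers measured under the same setup.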
