This repository contains code and data for the AAAI 2022 paper:

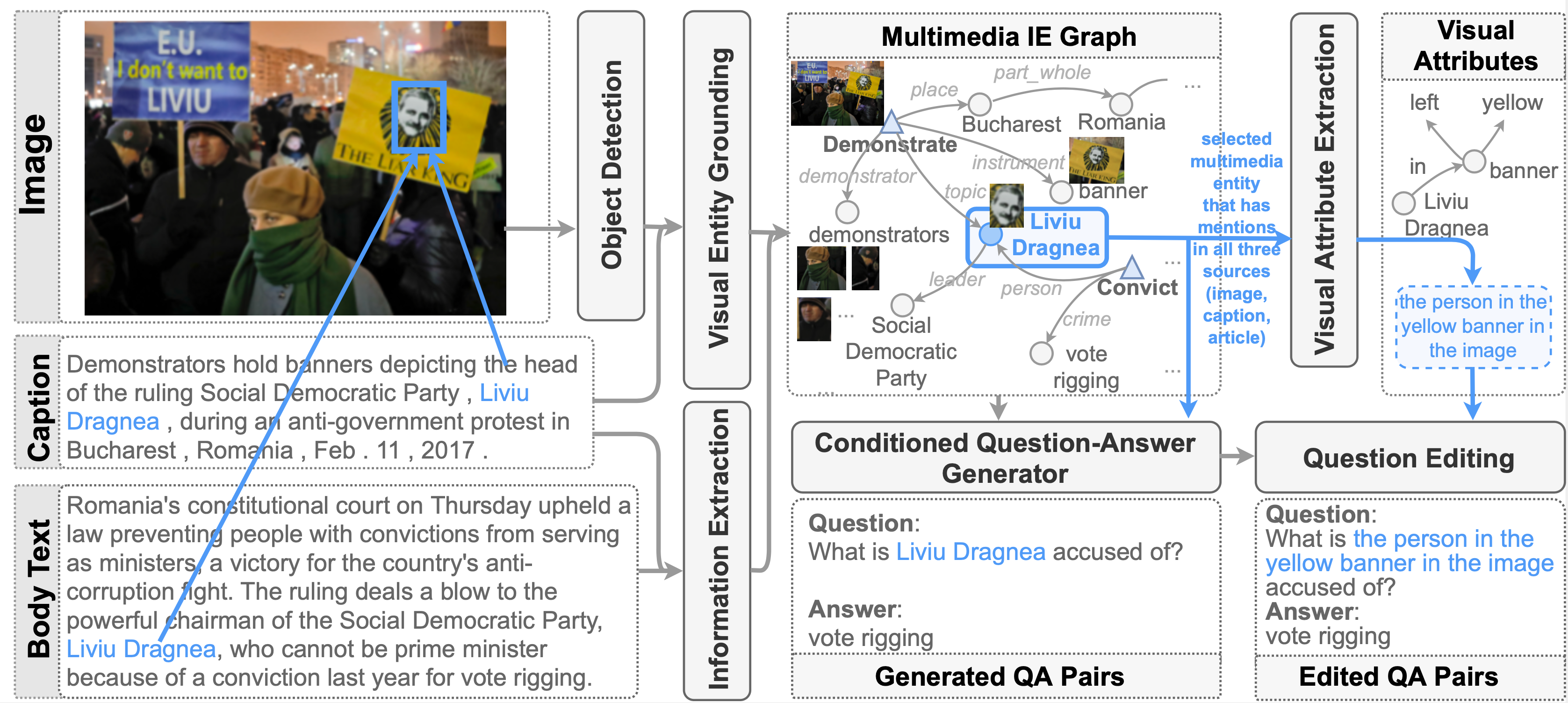

MuMuQA: Multimedia Multi-Hop News Question Answering via Cross-Media Knowledge Extraction and Grounding

arXiv link: https://arxiv.org/pdf/2112.10728.pdf

The training set contains 21575 examples that have been automatically generated using the pipeline described in the paper.

The dev and test sets contain 263 and 1121 manually annotated examples respectively.

You can find the data here.

The eval data (dev.json/test.json) has the following fields for each example:

- `question`: Question text
- `context`: The news article text
- `caption`: The news caption text
- `image`: The URL to the image
- `id`: Example ID
- `answer`: Final answer to the question
- `bridge`: Bridge answer
- `voa_example_id`: VOA Corpus example ID
- `voa_image_id`: Image ID within VOA corpus
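A minimal loading sketch for the evaluation files. The README does not state whether the top-level JSON value is a list of examples or a dict keyed by example ID, so this sketch accepts both; that layout, and the helper names `parse_eval_examples` and `load_eval_examples`, are assumptions and not part of this repository.

```python
import json
from typing import Any, Dict, List

# Fields listed above for dev.json / test.json.
EVAL_FIELDS = (
    "question", "context", "caption", "image", "id",
    "answer", "bridge", "voa_example_id", "voa_image_id",
)


def parse_eval_examples(data: Any) -> List[Dict[str, Any]]:
    """Normalize decoded JSON into a list of example dicts and check their fields.

    ASSUMPTION: the top-level JSON value is either a list of examples or a dict
    mapping example IDs to examples; adjust if the released files differ.
    """
    examples = list(data.values()) if isinstance(data, dict) else list(data)
    for ex in examples:
        missing = [name for name in EVAL_FIELDS if name not in ex]
        if missing:
            raise KeyError(f"example {ex.get('id')!r} is missing fields: {missing}")
    return examples


def load_eval_examples(path: str) -> List[Dict[str, Any]]:
    """Read dev.json or test.json from disk and validate its fields."""
    with open(path, encoding="utf-8") as f:
        return parse_eval_examples(json.load(f))


# Example usage (path is illustrative):
#   for ex in load_eval_examples("dev.json"):
#       print(ex["id"], ex["question"], "->", ex["answer"])
```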

The train data (train.json) has the following fields for each example:

- `context`: The news article text
- `caption`: The news caption text
- `question_generation_context`: Subset of the news article text from which the question was generated
- `entity_in_question`: Conditioning entity (within `question_generation_context`) that was used during question generation
- `generated_question`: Question output by the conditioned question generation model
- `question_phrase_replaced`: Phrase within `generated_question` that was replaced with an image reference
- `question`: Final question text
- `image`: The URL to the image
- `answer`: Final answer to the question
- `answer_start`: Start character offset of the answer span within `context`
- `answer_end`: End character offset (inclusive) of the answer span within `context`
- `bridge`: Bridge answer
- `bridge_start`: Start character offset of the bridge answer span within `caption`
- `bridge_end`: End character offset (inclusive) of the bridge answer span within `caption`
- `voa_example_id`: VOA Corpus example ID
- `voa_image_id`: Image ID within the VOA corpus
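Because `answer_end` and `bridge_end` are inclusive, recovering a span with Python's half-open slicing needs a `+ 1`. A minimal sketch for checking that the stored offsets reproduce the stored strings in a `train.json` example; the helper names `get_span` and `check_train_spans` are hypothetical.

```python
from typing import Any, Dict


def get_span(text: str, start: int, end_inclusive: int) -> str:
    """Return the characters from start through end_inclusive (both included)."""
    # Python slices exclude the stop index, so add 1 for an inclusive end offset.
    return text[start:end_inclusive + 1]


def check_train_spans(ex: Dict[str, Any]) -> bool:
    """True if the offsets recover `answer` from `context` and `bridge` from `caption`."""
    answer_ok = get_span(ex["context"], ex["answer_start"], ex["answer_end"]) == ex["answer"]
    bridge_ok = get_span(ex["caption"], ex["bridge_start"], ex["bridge_end"]) == ex["bridge"]
    return answer_ok and bridge_ok
```

Note that the answer offsets index into `context` while the bridge offsets index into `caption`, matching the field descriptions above.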

We will be releasing the multi-modal grounding and text IE outputs shortly.

We will be releasing the code for the baselines shortly.

The output predictions need to be a dictionary with the example ID as the key and the predicted answer string as the value. We use a SQuAD-style string F1 metric for evaluation.
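The exact scoring logic lives in `mmqa_eval.py`. As a rough illustration only, the sketch below follows the answer normalization and token-overlap F1 of the official SQuAD v1.1 evaluation script (lowercase, strip punctuation and the articles a/an/the, collapse whitespace, then token-level F1). It is not guaranteed to match `mmqa_eval.py` exactly, and `corpus_f1` with its treatment of missing predictions is an assumption.

```python
import re
import string
from collections import Counter
from typing import Dict

_PUNCT = set(string.punctuation)


def normalize_answer(s: str) -> str:
    """Lowercase, drop punctuation and the articles a/an/the, collapse whitespace."""
    s = s.lower()
    s = "".join(ch for ch in s if ch not in _PUNCT)
    s = re.sub(r"\b(a|an|the)\b", " ", s)
    return " ".join(s.split())


def f1_score(prediction: str, gold: str) -> float:
    """Token-level F1 between a predicted and a gold answer string."""
    pred_tokens = normalize_answer(prediction).split()
    gold_tokens = normalize_answer(gold).split()
    common = Counter(pred_tokens) & Counter(gold_tokens)
    num_same = sum(common.values())
    if num_same == 0:
        return 0.0
    precision = num_same / len(pred_tokens)
    recall = num_same / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)


def corpus_f1(gold: Dict[str, str], predictions: Dict[str, str]) -> float:
    """Mean F1 on a 0-100 scale over all gold IDs; a missing prediction scores 0 (assumption)."""
    if not gold:
        return 0.0
    total = sum(f1_score(predictions.get(qid, ""), answer) for qid, answer in gold.items())
    return 100.0 * total / len(gold)
```

Here `gold` would map each example `id` to its `answer` field, or to its `bridge` field when scoring bridge predictions.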

To run evaluation with final answers as predictions:

```bash
python mmqa_eval.py <path to gold file> <path to predictions file>
```

To run evaluation with bridge answers as predictions:

```bash
python mmqa_eval.py <path to gold file> <path to predictions file> --bridge_eval
```
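A hedged sketch of producing a predictions file in the dictionary format described above. It assumes the file is JSON (the README does not name the serialization), and `build_predictions` and `write_predictions` are hypothetical helpers. Filling the values from the gold `answer` or `bridge` fields gives an oracle file that can be handy for checking the evaluation setup end to end; for a real run, replace those values with your model's outputs.

```python
import json
from typing import Any, Dict, Iterable


def build_predictions(examples: Iterable[Dict[str, Any]], field: str = "answer") -> Dict[str, str]:
    """Map each example ID to the string stored in `field` (e.g. "answer" or "bridge")."""
    # IDs are stringified because JSON object keys are always strings.
    return {str(ex["id"]): str(ex[field]) for ex in examples}


def write_predictions(predictions: Dict[str, str], path: str) -> None:
    """Write the ID -> answer dictionary to disk.

    ASSUMPTION: mmqa_eval.py reads the predictions file as JSON.
    """
    with open(path, "w", encoding="utf-8") as f:
        json.dump(predictions, f, ensure_ascii=False, indent=2)
```

For example, `write_predictions(build_predictions(examples, "bridge"), "bridge_preds.json")` would produce a file to pass to `mmqa_eval.py` together with `--bridge_eval`.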

If you use this dataset in your work, please consider citing our paper:

```bibtex
@article{reddy2021mumuqa,
  title={MuMuQA: Multimedia Multi-Hop News Question Answering via Cross-Media Knowledge Extraction and Grounding},
  author={Reddy, Revanth Gangi and Rui, Xilin and Li, Manling and Lin, Xudong and Wen, Haoyang and Cho, Jaemin and Huang, Lifu and Bansal, Mohit and Sil, Avirup and Chang, Shih-Fu and others},
  journal={arXiv preprint arXiv:2112.10728},
  year={2021}
}
```