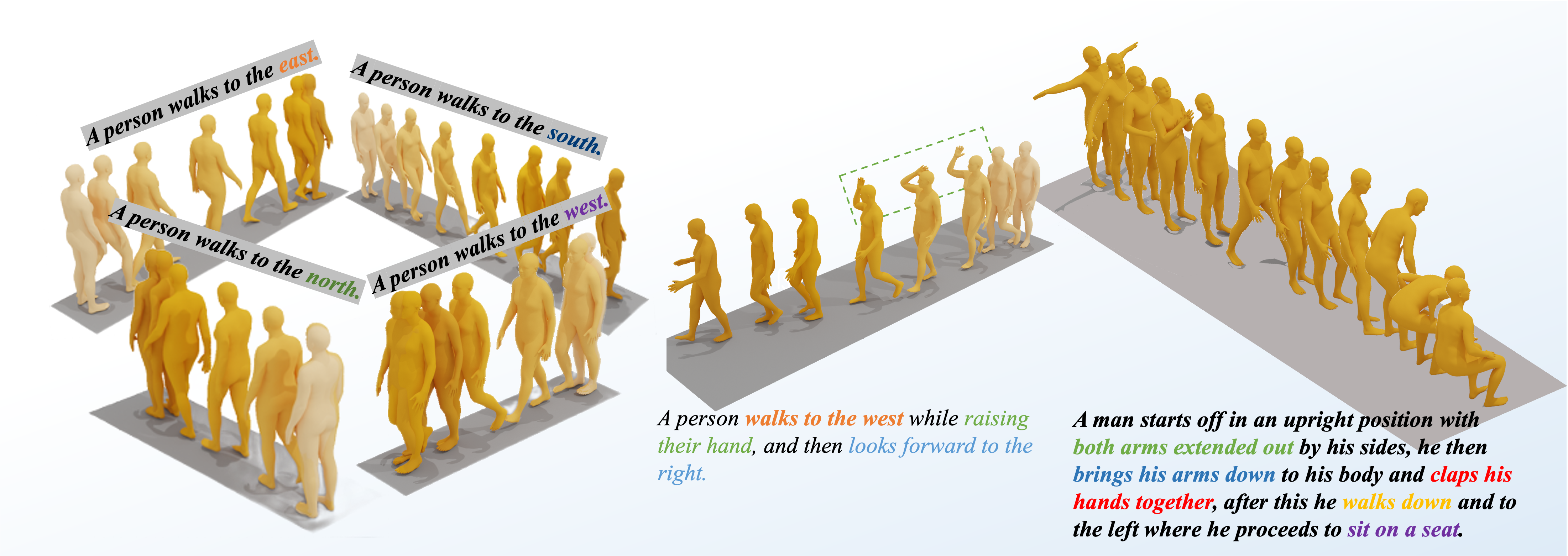

The SemanticBoost framework consists of an optimized diffusion model, CAMD, and a Semantic Enhancement Module that describes specific body parts explicitly. With these two modules, SemanticBoost can:

- Synthesize smoother and more stable motion sequences.

- Understand longer and more complex sentences.

- Control specific body parts precisely.

This repo provides the following functions:

- Export 3D joints

- Export SMPL representation

- Render with TADA 3D roles


[2023/10/20] Release pretrained weights and inference process

[2023/10/27] Release new pretrained weights and TensorRT speedup

[2023/11/01] Release paper on arXiv

Environment and Weights

##### create new environment for conda

conda create -n boost python==3.9.15

#### install dependencies

conda activate boost

pip install -r requirements.txt

sudo apt-get install freeglut3-dev

bash scripts/prepare.sh
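After installing the dependencies, it can help to confirm that the active interpreter and packages match what the steps above expect. The following is a minimal sketch, not part of the repository: the helper name `missing_requirements` is hypothetical, and the parsing is deliberately simplistic (it assumes plain `name==version` style entries in `requirements.txt`).

```python
import re
import sys
from importlib import metadata
from pathlib import Path


def missing_requirements(lines):
    """Return distribution names from requirement lines that are not installed.

    Hypothetical helper: handles only simple entries such as ``numpy==1.23.5``;
    comments, blank lines, and option lines (starting with ``-``) are skipped.
    """
    missing = []
    for raw in lines:
        line = raw.split("#", 1)[0].strip()
        if not line or line.startswith("-"):
            continue
        # Take the leading package name, dropping version specifiers and extras.
        match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
        if match is None:
            continue
        name = match.group(0)
        try:
            metadata.version(name)
        except metadata.PackageNotFoundError:
            missing.append(name)
    return missing


if __name__ == "__main__":
    # The conda step above pins Python 3.9.15; only major.minor is checked here.
    print("Python 3.9 active:", sys.version_info[:2] == (3, 9))
    req_file = Path("requirements.txt")  # assumed to sit in the repo root
    if req_file.exists():
        lines = req_file.read_text().splitlines()
        print("Missing packages:", missing_requirements(lines))
    else:
        print("requirements.txt not found in the current directory")
```

Run it from the repo root inside the `boost` environment; an empty "Missing packages" list suggests the `pip install` step completed.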

Download Choice 1

- Download characters from

https://drive.google.com/file/d/1rbkIpRmvPaVD9AJeCxWqBBYHkRIwrNmC/view

- Download Init Pose in

- Save the two zip files in the root directory, then run:

bash scripts/tada_process.sh

Download Choice 2

bash scripts/tada_goole.sh

- Download the TensorRT SDK. We tested with TensorRT-8.6.0 and PyTorch 2.0.1.

- Set environment

export LD_LIBRARY_PATH=/data/TensorRT-8.6.0.12/lib:$LD_LIBRARY_PATH

export PATH=/data/TensorRT-8.6.0.12/bin:$PATH

- Install Python API

pip install /data/TensorRT-8.6.0.12/python/tensorrt-8.6.0-cp39-none-linux_x86_64.whl

- Export TensorRT engine

bash scripts/quanti.sh
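Before running the export step above, it may be worth confirming that the TensorRT paths and Python package are visible to the current shell. This is a minimal sketch under stated assumptions: the directories are the `/data/TensorRT-8.6.0.12` locations from the commands above, the helper `path_list_contains` is hypothetical, and `:` is assumed as the path separator (Linux).

```python
import os


def path_list_contains(path_list, directory):
    """Check whether a colon-separated path list (Linux style) includes a directory."""
    target = os.path.normpath(directory)
    entries = [os.path.normpath(p) for p in path_list.split(":") if p]
    return target in entries


if __name__ == "__main__":
    trt_root = "/data/TensorRT-8.6.0.12"  # install location used in the commands above
    for var, sub in (("LD_LIBRARY_PATH", "lib"), ("PATH", "bin")):
        ok = path_list_contains(os.environ.get(var, ""), os.path.join(trt_root, sub))
        print(f"{var} includes {trt_root}/{sub}: {ok}")
    try:
        import tensorrt  # provided by the wheel installed above

        print("tensorrt version:", tensorrt.__version__)
    except ImportError:
        print("tensorrt Python package is not importable")
```

If the SDK was unpacked somewhere other than `/data`, adjust `trt_root` to match the paths used in the `export` commands.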

bash scripts/blender_prepare.sh

Webui or HuggingFace

Run the following script to launch the webui, then visit 0.0.0.0:7860 in a browser.

python app.py

Inference and Visualization

'''

--prompt Input textual description for generation

--mode Which model to use for generating motion sequences

--render Render mode [3dslow, 3dfast, joints]

--size The resolution of output video

--role TADA role name, default is None

--length Total number of frames in the output video, fps=20

-f --follow Whether the camera follows the motion during rendering.

-e --export Whether to export an fbx file, which takes more time.

'''

python inference.py --prompt "A person walks forward and sits down on the chair." --length "120" --mode ncamd --size 1024 --render "3dslow" -f -e

######## For more tada_role options, please refer to TADA-100
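The `--length` option counts frames at 20 fps, and the multi-segment command in this section separates both prompts and lengths with `|`. The sketch below shows one way such arguments could be paired and converted to durations. It is an illustration only: `pair_segments` is a hypothetical helper, not taken from `inference.py`, and raising an error on mismatched counts is an assumption.

```python
FPS = 20  # frame rate stated in the --length description


def pair_segments(prompt, length, fps=FPS):
    """Split '|'-separated prompts and lengths into (text, frames, seconds) tuples."""
    texts = [part.strip() for part in prompt.split("|")]
    frames = [int(part) for part in length.split("|")]
    if len(texts) != len(frames):
        raise ValueError(f"{len(texts)} prompts but {len(frames)} lengths")
    return [(text, n, n / fps) for text, n in zip(texts, frames)]


if __name__ == "__main__":
    segments = pair_segments(
        "A person walks forward.| A person dances in place.| A person walks backwards.",
        "120|100|120",
    )
    for text, n, seconds in segments:
        print(f"{n:>4} frames = {seconds:.1f} s  {text}")
```

At 20 fps, `--length "120"` corresponds to a 6-second clip and `"100"` to 5 seconds.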

python inference.py --prompt "A person walks forward and sits down on the chair." --mode ncamd --size 1024 --render "3dslow" --role "Iron Man" --length "120"

python inference.py --prompt "A person walks forward.| A person dances in place.| A person walks backwards." --mode ncamd --size 1024 --render "3dslow" --length "120|100|120"

If you find our code or paper helpful, please consider citing:

@misc{he2023semanticboost,

title={SemanticBoost: Elevating Motion Generation with Augmented Textual Cues},

author={Xin He and Shaoli Huang and Xiaohang Zhan and Chao Wen and Ying Shan},

year={2023},

eprint={2310.20323},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

Thanks to MDM, T2M-GPT, MLD, HumanML3D, joints2smpl and TADA; our code partially borrows from them.