iml is an R package that interprets the behaviour and explains the predictions of machine learning models. It implements model-agnostic interpretability methods, meaning they can be used with any machine learning model.

Currently implemented:

- Feature importance

- Partial dependence plots

- Individual conditional expectation plots (ICE)

- Tree surrogate

- LocalModel: Local Interpretable Model-agnostic Explanations

- Shapley value for explaining single predictions

Read more about the methods in the Interpretable Machine Learning book.

The stable version of the package can be installed from CRAN and the development version from GitHub:

# Stable version

install.packages("iml")

# Development version

devtools::install_github("christophM/iml")

Changes to the package are listed in the NEWS file shipped with the package.

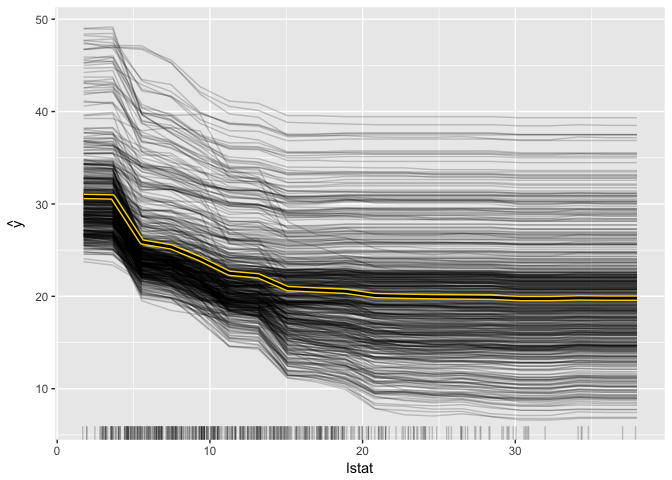

First, we train a random forest (with the randomForest package) to predict the median housing value in the Boston data. How does lstat influence the prediction, both for individual observations and on average? (Partial dependence plot and ICE)
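To make the two kinds of curve concrete, here is a minimal sketch of what an ICE curve and a partial dependence curve compute. It is not iml's implementation. It assumes a linear model as a stand-in for the random forest so that it runs with base R plus MASS, and the 20-point grid and the names `fit`, `grid`, `ice` and `pdp` are illustrative choices.

```r
# Sketch only: a linear model stands in for the random forest (assumption),
# so nothing beyond base R and MASS (for the Boston data) is needed.
data("Boston", package = "MASS")
fit <- lm(medv ~ ., data = Boston)
X <- Boston[which(names(Boston) != "medv")]

# Grid of lstat values at which the curves are evaluated (illustrative choice).
grid <- seq(min(X$lstat), max(X$lstat), length.out = 20)

# ICE: for each grid value, set lstat to that value in every row and predict.
# The result has one row per observation and one column per grid value.
ice <- sapply(grid, function(value) {
  X_mod <- X
  X_mod$lstat <- value
  predict(fit, newdata = X_mod)
})

# Partial dependence: the average of the ICE curves at each grid value.
pdp <- colMeans(ice)

# ICE curves in grey, partial dependence curve drawn on top.
matplot(grid, t(ice), type = "l", lty = 1, col = "grey80",
        xlab = "lstat", ylab = "Predicted medv")
lines(grid, pdp, lwd = 2)
```

Each grey line shows how the prediction for one observation changes as lstat varies while its other features stay fixed. The black line is their average. The iml code that follows produces this kind of plot for the random forest.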

library("iml")

library("randomForest")

data("Boston", package = "MASS")

rf = randomForest(medv ~ ., data = Boston, ntree = 50)

X = Boston[which(names(Boston) != "medv")]

model = Predictor$new(rf, data = X, y = Boston$medv)

pdp.obj = Partial$new(model, feature = "lstat")

pdp.obj$plot()

Referring to https://github.com/datascienceinc/Skater

This work is funded by the Bavarian State Ministry of Education, Science and the Arts in the framework of the Centre Digitisation.Bavaria (ZD.B).