Web Wanderer is a multi-threaded web crawler written in Python, utilizing concurrent.futures.ThreadPoolExecutor and Playwright to efficiently crawl and download web pages. This web crawler is designed to handle dynamically rendered websites, making it capable of extracting content from modern web applications.

First, install the required dependencies.

Then you can use it as either a CLI tool or a library.

python src/main.py https://python.langchain.com/en/latest/

To start crawling, simply instantiate the MultithreadedCrawler class with the seed URL and optional parameters:

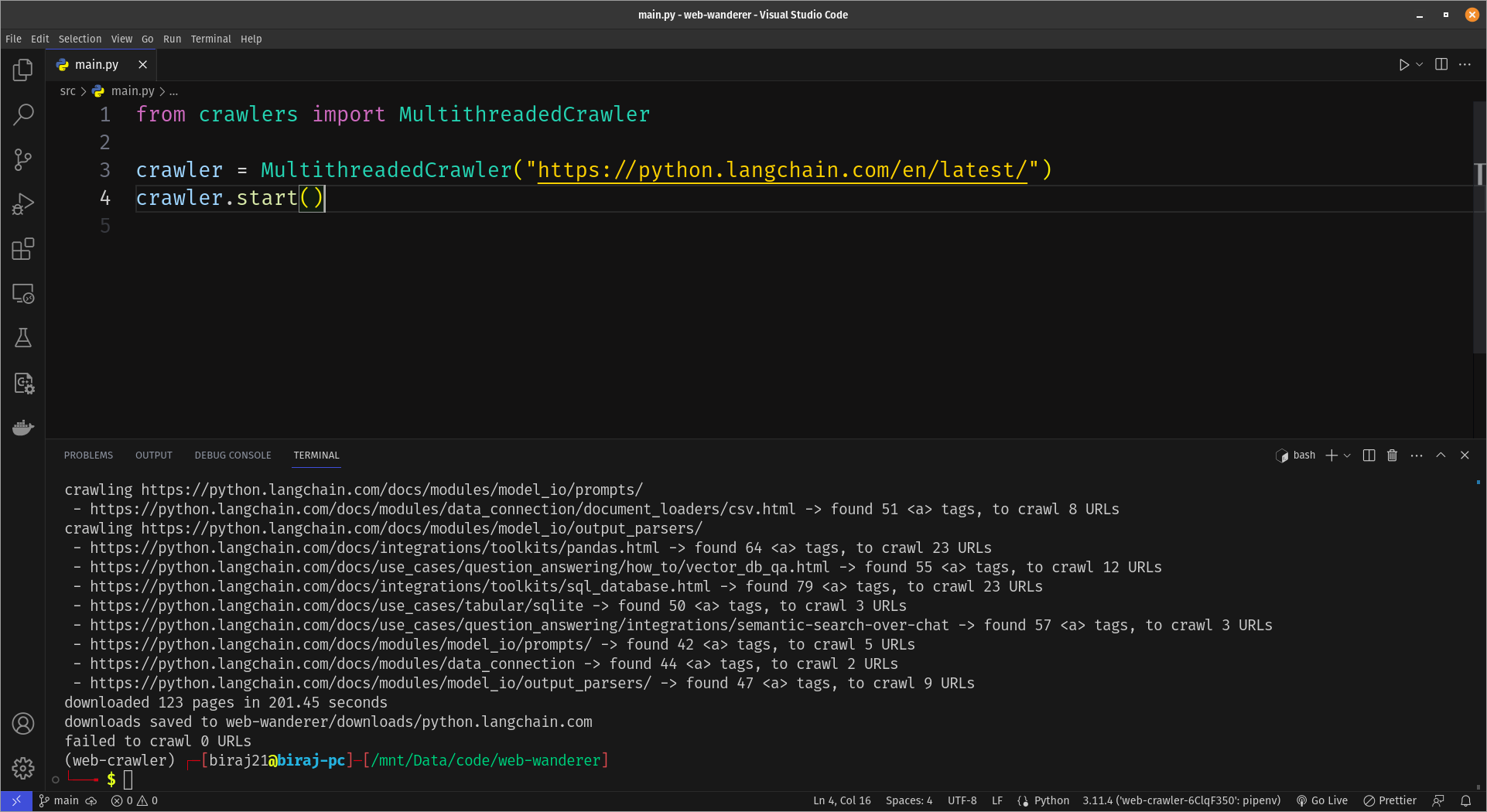

from crawlers import MultithreadedCrawler

crawler = MultithreadedCrawler("https://python.langchain.com/en/latest/")

crawler.start()

The MultithreadedCrawler class is initialized with the following parameters:

- seed_url (str): The URL from which the crawling process will begin.

- output_dir (str): The directory where the downloaded pages will be stored. By default, the pages are saved in a folder named after the base URL of the seed: web-wanderer/downloads/<base-url-of-seed>.

- num_threads (int): The number of threads the crawler should use. This determines the level of concurrency during the crawling process. Defaults to 8.

- done_callback (Callable | None): A callback function that will be called after crawling is successfully done.
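To make the default output directory concrete, here is a minimal sketch of how such a path could be derived from the seed URL. It is an illustration only: the helper name default_output_dir is hypothetical, and it assumes that "base URL" means the host part of the seed URL, which the project may define differently.

```python
from pathlib import Path
from urllib.parse import urlparse


def default_output_dir(seed_url: str, root: str = "web-wanderer/downloads") -> Path:
    """Hypothetical helper: build <root>/<host-of-seed-url>.

    Assumption: the "base URL" of the seed is its network location (host).
    """
    host = urlparse(seed_url).netloc
    return Path(root) / host


if __name__ == "__main__":
    print(default_output_dir("https://python.langchain.com/en/latest/").as_posix())
```

Passing output_dir explicitly to MultithreadedCrawler would bypass any such default.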

- Multi-Threaded: Web Wanderer employs multi-threading using the ThreadPoolExecutor, which allows for concurrent fetching of web pages, making the crawling process faster and more efficient.

- Dynamic Website Support: The integration of Playwright enables Web Wanderer to handle dynamically rendered websites, extracting content from modern web applications that rely on JavaScript for rendering.

- Queue-Based URL Management: URLs to be crawled are managed using a shared queue, ensuring efficient and organized distribution of tasks among threads.

- Done Callback: You have the option to set a callback function that will be executed after the crawling process is successfully completed, allowing you to perform specific actions or analyze the results.
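The way a thread pool, a shared queue, and a done callback fit together can be sketched as follows. This is not Web Wanderer's actual implementation: the crawl function, the FAKE_SITE link graph, and fetch_links are hypothetical stand-ins so the example runs without network access, and passing the set of visited URLs to the callback is an assumption.

```python
from concurrent.futures import ThreadPoolExecutor
from queue import Queue
from threading import Lock

# Hypothetical in-memory "site": each URL maps to the links found on that page.
FAKE_SITE = {
    "https://example.com/": ["https://example.com/a", "https://example.com/b"],
    "https://example.com/a": ["https://example.com/b", "https://example.com/c"],
    "https://example.com/b": ["https://example.com/"],
    "https://example.com/c": [],
}


def fetch_links(url):
    """Stand-in for downloading a page and extracting its links."""
    return FAKE_SITE.get(url, [])


def crawl(seed_url, num_threads=8, done_callback=None):
    to_visit = Queue()       # shared queue of URLs waiting to be crawled
    seen = {seed_url}        # URLs already queued, guarded by seen_lock
    seen_lock = Lock()
    to_visit.put(seed_url)

    def worker():
        while True:
            url = to_visit.get()
            if url is None:  # sentinel: no more work for this worker
                to_visit.task_done()
                return
            try:
                for link in fetch_links(url):
                    with seen_lock:
                        if link in seen:
                            continue
                        seen.add(link)
                    to_visit.put(link)
            finally:
                # New links are queued before this call, so join() below
                # cannot return while work is still being discovered.
                to_visit.task_done()

    with ThreadPoolExecutor(max_workers=num_threads) as pool:
        for _ in range(num_threads):
            pool.submit(worker)
        to_visit.join()      # wait until every queued URL has been processed
        for _ in range(num_threads):
            to_visit.put(None)

    if done_callback is not None:
        done_callback(seen)  # assumption: the callback receives the visited URLs
    return seen


if __name__ == "__main__":
    crawl("https://example.com/", num_threads=4,
          done_callback=lambda urls: print(f"crawled {len(urls)} pages"))
```

The seen set prevents a URL from being queued twice, and the sentinel values let each worker exit cleanly once the queue has drained.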

Web Wanderer relies on the following libraries:

playwright: To handle dynamically rendered websites and interact with web pages.
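For readers unfamiliar with Playwright, the following sketch shows one common way to fetch the rendered HTML of a JavaScript-heavy page with its synchronous API. The function name fetch_rendered_html and the choice of Chromium with the "networkidle" wait condition are assumptions for illustration; Web Wanderer's own fetching code may differ.

```python
def fetch_rendered_html(url: str, timeout_ms: int = 30_000) -> str:
    """Hypothetical helper: return a page's HTML after JavaScript has run."""
    # Imported lazily so this function can be defined without Playwright installed.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        try:
            page = browser.new_page()
            # Wait until network activity settles so dynamic content is present.
            page.goto(url, wait_until="networkidle", timeout=timeout_ms)
            return page.content()
        finally:
            browser.close()
```

Calling it requires the browser binaries installed by playwright install. In a multi-threaded crawler, each thread would typically create its own Playwright instance, since the synchronous API objects are not meant to be shared across threads.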

Note: This project has only been tested with Python 3.11.4.

- Clone the repository:

git clone https://github.com/biraj21/web-wanderer.git

cd web-wanderer

- Install and set up pipenv

- Activate virtual environment

pipenv shell

- Install dependencies

pipenv install

- Install headless browser with playwright

playwright install

Happy web crawling with Web Wanderer! 🕸️🚀