This framework calculates and stores “intersection efficiencies” for the application of the “inclusion method” [1], to be used for the HH → bbττ resonant analysis by the CMS experiment. More details are available in this presentation.

The processing starts from skimmed (KLUB) Ntuples. The framework is managed by luigi (see inclusion/run.py), which runs local tasks and creates an HTCondor Directed Acyclic Graph (DAG) (see DAGMan Workflows).

I recommend using mamba. In general the following should work:

mamba create -n InclusionEnv python=3.11

mamba activate InclusionEnv

mamba install uproot pandas h5py luigi

git clone git@github.com:bfonta/inclusion.git

At lxplus (CERN), it is better to use an LCG release, which depends on the environment one is using. For instance:

gcc -v # print gcc version

# the line below shows the most recent

source /cvmfs/sft.cern.ch/lcg/views/LCG_105/x86_64-el9-gcc11-opt/setup.sh

Some packages (luigi, for instance) might not be included in an LCG view. If so:

python -m pip install luigi

The configuration is controlled via python files stored under config/. General configurations are available in main.py, while run-specific ones can be specified in a custom file, which is then pointed at with the command-line option --configuration. For instance, if using config/sel_default.py, we would run python3 inclusion/run.py ... --configuration sel_default.

Most parameters are very intuitive. Regarding the triggers, one has to specify the following four parameters:

- triggers: a list of all triggers to be considered.

- exclusive: dictionary having the supported channels as keys plus a common “general” key, where the values refer to the triggers that are exclusive to that particular channel. For instance, if we want to consider IsoMu27 only for the mutau channel, we would write exclusive = {..., 'mutau': ('IsoMu27',), ...}. This option is convenient to avoid considering triggers which are unrelated to the channel under study and thus have little to no effect.

- inters_general: dictionary having as keys the datasets being considered (MET, SingleMuon, …) and as values the triggers and their combinations that should be applied when processing events from the corresponding dataset. Each trigger and combination is written as an independent tuple.

- inters: same as inters_general but for specific channels, i.e., when a trigger or a trigger combination should be considered only for a specific channel when processing a specific dataset.
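As an illustration, the four parameters above could be laid out as follows in a run-specific configuration file. This is only a sketch under assumptions: apart from IsoMu27, mutau, MET and SingleMuon, which are mentioned above, every trigger name is a placeholder; the nesting of inters (channel first, then dataset) and the meaning of the “general” key (triggers used by all channels) are assumed; unknown_triggers is a hypothetical helper that is not part of the framework.

```python
# Hypothetical excerpt of a run-specific configuration file (e.g. under config/).
# METNoMu120 and IsoTau180 are placeholder trigger names for illustration only.
triggers = ('IsoMu27', 'METNoMu120', 'IsoTau180')

# Triggers restricted to one channel; 'general' is assumed to apply to all channels.
exclusive = {
    'general': ('METNoMu120', 'IsoTau180'),
    'etau': (),
    'mutau': ('IsoMu27',),
    'tautau': (),
}

# Per dataset: single triggers and combinations, each as an independent tuple.
inters_general = {
    'MET': (('METNoMu120',), ('IsoTau180',), ('METNoMu120', 'IsoTau180')),
}

# Same idea, restricted to a channel (assumed layout: channel, then dataset).
inters = {
    'mutau': {'SingleMuon': (('IsoMu27',), ('IsoMu27', 'METNoMu120'))},
}


def unknown_triggers():
    """Return trigger names used in the dictionaries but missing from `triggers`."""
    used = set()
    for trigs in exclusive.values():
        used.update(trigs)
    for combos in inters_general.values():
        for combo in combos:
            used.update(combo)
    for per_dataset in inters.values():
        for combos in per_dataset.values():
            for combo in combos:
                used.update(combo)
    return used - set(triggers)


# An empty set means every referenced trigger is declared in `triggers`.
print(unknown_triggers())
```

A consistency check of this kind can catch a misspelled trigger name before any job is submitted.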

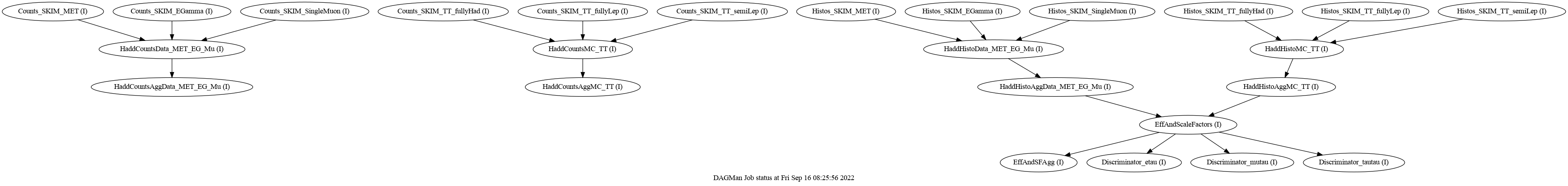

- Binning (manual or equal width with upper 5% quantile removal): the only job which runs locally (i.e., not on HTCondor)

- Histos_SKIM_*: filling efficiencies numerator and denominator histograms

- HaddHisto*: add histograms together per data type (Data and all MCs)

- HaddHistoAgg*: add histograms for Data and MC

- EfficienciesAndSF*: calculate efficiencies by dividing the histograms obtained in point #2; plots MC/Data comparisons for efficiencies and normalized counts histograms

- EffAndAgg: aggregate efficiencies and scale factors in one file per channel

- Discriminator_*: choose the variables to be used to calculate the final union efficiency

- Counts_SKIM_*: count the number of events that pass a selection identical to the one of the events in the “Efficiencies Chain”

- HaddCounts*: add counters per data type (Data and all MCs)

- HaddCountsAgg*: add counters for Data and MC
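The equal-width binning with upper 5% quantile removal mentioned for the Binning step can be sketched as below. This is not the framework's implementation: equal_width_edges is a hypothetical function, and a simple nearest-rank quantile estimate is assumed.

```python
import math


def equal_width_edges(values, nbins, upper_quantile=0.95):
    """Equal-width bin edges after discarding values above the given quantile."""
    if nbins < 1:
        raise ValueError("nbins must be positive")
    ordered = sorted(values)
    if not ordered:
        raise ValueError("no values to bin")
    # Nearest-rank quantile: by default the upper 5% tail is left out of the range.
    rank = max(1, math.ceil(upper_quantile * len(ordered)))
    low, high = ordered[0], ordered[rank - 1]
    width = (high - low) / nbins
    # nbins equally spaced lower edges plus the upper edge of the last bin.
    return [low + i * width for i in range(nbins)] + [high]


# Hypothetical variable: the outlier at 1000 does not stretch the bins.
example_values = list(range(20)) + [1000.0]
edges = equal_width_edges(example_values, nbins=19)
print(edges[0], edges[-1])
```

Removing the upper tail keeps a handful of extreme events from producing many nearly empty bins.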

Used for replicating the retrieval of the weights done by KLUB, using the outputs of steps 6. and 7.:

- UnionWeightsCalculator_SKIM_*: calculate the union efficiencies (following the inclusion method [1])

- Closure: perform a simple closure (complete closure is done outside this framework in the HH → bbττ C++ analysis code)
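To illustrate how intersection efficiencies can be combined into a union efficiency, the sketch below applies the inclusion-exclusion principle. It assumes this is the combination meant by the inclusion method; union_efficiency, the trigger labels and the numerical values are hypothetical and not taken from the framework.

```python
from itertools import combinations


def union_efficiency(inters_eff):
    """Union efficiency from intersection efficiencies via inclusion-exclusion.

    `inters_eff` maps a frozenset of trigger names to the efficiency of
    requiring all of them at once (single triggers included).
    """
    names = sorted(set().union(*inters_eff))
    total = 0.0
    for size in range(1, len(names) + 1):
        # Odd-sized intersections are added, even-sized ones subtracted.
        sign = 1.0 if size % 2 == 1 else -1.0
        for combo in combinations(names, size):
            total += sign * inters_eff[frozenset(combo)]
    return total


# Made-up efficiencies for two triggers and their intersection.
example = {
    frozenset({'IsoMu27'}): 0.8,
    frozenset({'MET'}): 0.5,
    frozenset({'IsoMu27', 'MET'}): 0.4,
}
print(union_efficiency(example))  # 0.8 + 0.5 - 0.4, up to floating-point rounding
```

Every combination of the considered triggers needs an entry, which is why the intersection efficiencies are computed and stored beforehand.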

Run dot -Tpng dag.dot -o dag.png as explained here (a dot file was previously created by the DAG with DOT dag.dot here).

HTCondor DAGs support by default an automatic resubmission mechanism. Whenever a DAG is interrupted or exits unsuccessfully, a *rescue* file is created, specifying which jobs were “DONE” by the time the DAG stopped. This file is picked up automatically if the DAG is resubmitted (using the standard condor_submit_dag command); jobs with “DONE” status are not resubmitted. More information here.
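To check which jobs a rescue file marks as completed, a few lines of Python are enough. The sketch assumes the rescue file lists finished nodes as lines of the form "DONE <node name>" alongside comment lines; done_nodes and the node names in the example are hypothetical.

```python
def done_nodes(rescue_text):
    """Return the node names marked DONE in the text of a rescue file."""
    done = []
    for line in rescue_text.splitlines():
        parts = line.split()
        # Assumed format: the keyword DONE followed by a single node name.
        if len(parts) == 2 and parts[0] == 'DONE':
            done.append(parts[1])
    return done


# Made-up rescue file content with hypothetical node names.
example_text = """# Rescue DAG file (comment lines are ignored)
DONE HistosJob
DONE CountsJob
"""
print(done_nodes(example_text))
```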

Run the submission workflow (check the meaning of the arguments by adding --help):

python3 inclusion/run.py --branch nocounts --data Mu --mc_processes TT --configuration sel_only_met_nocut --channels mutau mumu --nbins 25 --tag Tag2018 --year 2018

where the configuration file must be defined under inclusion/config/.

If everything runs as expected, the above should run all local tasks locally (currently DefineBinning only) and launch an HTCondor DAG which encodes the dependencies of the remaining tasks and runs them on the server.

You can run only part of the framework by selecting the appropriate --branch option. For instance, for running the “Counts Chain” only (if you only care about how many events passed each trigger) use --branch counts.

The HTCondor files are written using the inclusion/condor/dag.py and inclusion/condor/job_writer.py files.

| Output files | Destination folder |
|---|---|
| ROOT | /data_CMS/cms/<llr_username>/TriggerScaleFactors/<some_tag>/Data/ |
| Plots | /data_CMS/cms/<llr_username>/TriggerScaleFactors/<some_tag>/Outputs/ |
| Submission | $HOME/jobs/<some_tag>/submission/ |
| Output | $HOME/jobs/<some_tag>/outputs/ |
| DAG | $HOME/jobs/<some_tag>/outputs/CondorDAG/ |

You can also run each luigi task separately by running its corresponding python script (all support --help). Inspect HTCondor’s output shell and condor files for the full commands.

Input files, variables and quantities associated to the triggers can be configured in inclusion/config/main.py.

One can copy the folder with the plots to CERN’s available website for easier inspection. Using my bfontana CERN username as an example:

cp -r /data_CMS/cms/<llr_username>/TriggerScaleFactors/<some_tag>/Outputs/ /eos/user/b/bfontana/www/TriggerScaleFactors/<some_tag>

One can then visualize the plots here.

In order to avoid cluttering the local area with output files, a bash script was written to effortlessly delete them:

bash inclusion/clean.sh -t <any_tag> -f -d

Use -h/--help to inspect all options.

Studies were performed using “standalone” scripts (not part of the main chain, running locally, but using some definitions of the “core” package).

To run jobs at LLR accessing files on /eos/:

voms-proxy-init --voms cms --out ~/.t3/proxy.cert

/opt/exp_soft/cms/t3/eos-login -username bfontana -keytab -init

The jobs should contain the following lines:

export X509_USER_PROXY=~/.t3/proxy.cert

. /opt/exp_soft/cms/t3/eos-login -username bfontana -wn

Run the script (-h for all options):

for chn in "etau" "mutau" "tautau"; do python tests/test_trigger_regions.py --indir /data_CMS/cms/alves/HHresonant_SKIMS/SKIMS_UL18_Upstream_Sig/ --masses 400 500 600 700 800 900 1000 1250 1500 --channel $chn --spin 0 --region_cuts 190 190 --configuration inclusion.config.sel_default; done

Add the --plot option to reuse the intermediate datasets if you are rerunning over the same data only for cosmetic changes.

Run the script (-h for all options):

python3 tests/test_trigger_gains.py --masses 400 500 600 700 800 900 1000 1250 1500 --channels tautau --region_cuts 190 190 --year 2018

This runs on the CSV tables (per mass and channel) produced by the tests/test_trigger_regions.py script. It produces two plots: the first displays the independent benefit of adding the MET trigger or the SingleTau trigger, and the second shows the added gain of including both.

For instance (input paths defined at the end of the script):

for mmm in "400" "600" "800" "1000" "2000"; do for chn in "etau" "mutau" "tautau"; do python tests/test_draw_kin_regions.py --channel $chn --year 2018 --category baseline --dtype signal --mass $mmm --mode trigger --skim_tag OpenCADI --save; done; done

You can remove --save to speed up the plotting in case the data has already been saved in a previous run.

By passing --debug_workflow, the user can obtain more information regarding the specific order in which tasks and their functions are run.

When using --scheduler central, one can visualize the luigi workflow by accessing the correct port in the browser, specified with luigid --port <port_number> &. If using ssh, the port will have to be forwarded.