This repository contains:

- A simple DCGAN model with a flexible, configurable architecture, along with several losses (in losses.py), including the non-saturating, least squares, hinge and Wasserstein losses compared below.

- An implementation of Adaptive Discriminator Augmentation for training GANs with limited amounts of data. It was used for ablations and hyperparameter optimization for the corresponding Keras code example, but was turned off for the experiments below.

- An implementation of Kernel Inception Distance (KID), a GAN performance metric with a simple unbiased estimator. It is more suitable for limited numbers of images and computationally cheaper to measure than the Fréchet Inception Distance (FID). Implementation details (all easy to tweak):
    - The InceptionV3 network's pretrained weights are loaded from Keras applications.
    - For computational efficiency, the images are evaluated at the minimal possible resolution (75x75 instead of 299x299), so the exact values might not be comparable with those of other implementations.
    - For computational efficiency, the metric is only measured on the validation splits of the datasets.
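The KID estimator itself is short. The sketch below assumes the InceptionV3 features have already been extracted (for example with `keras.applications.InceptionV3(include_top=False, pooling="avg")` on images resized to 75x75) and computes the unbiased squared-MMD estimate with the cubic polynomial kernel `k(x, y) = (x·y / d + 1)^3`, on a single batch rather than averaged over several subsets. It is written in NumPy for brevity; the function names are illustrative, not this repository's API.

```python
import numpy as np


def polynomial_kernel(x: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Cubic polynomial kernel k(x, y) = (x . y / d + 1) ** 3 used by KID."""
    feature_dim = x.shape[1]
    return (x @ y.T / feature_dim + 1.0) ** 3


def kernel_inception_distance(real_features: np.ndarray,
                              generated_features: np.ndarray) -> float:
    """Unbiased estimate of the squared MMD between two feature sets.

    Both inputs have shape (num_images, feature_dim); in practice they would be
    pooled InceptionV3 activations of real and generated images (assumption).
    """
    m = real_features.shape[0]
    n = generated_features.shape[0]

    kernel_real = polynomial_kernel(real_features, real_features)
    kernel_generated = polynomial_kernel(generated_features, generated_features)
    kernel_cross = polynomial_kernel(real_features, generated_features)

    # The unbiased estimator excludes the diagonal (self-similarity) terms.
    mean_kernel_real = (kernel_real.sum() - np.trace(kernel_real)) / (m * (m - 1))
    mean_kernel_generated = (
        kernel_generated.sum() - np.trace(kernel_generated)
    ) / (n * (n - 1))
    mean_kernel_cross = kernel_cross.mean()

    return float(mean_kernel_real + mean_kernel_generated - 2.0 * mean_kernel_cross)


# Usage with random stand-in "features":
rng = np.random.default_rng(0)
real = rng.normal(size=(200, 64))
same_distribution = rng.normal(size=(200, 64))
shifted_distribution = rng.normal(loc=1.0, size=(200, 64))
print(kernel_inception_distance(real, same_distribution))     # expected to be near 0
print(kernel_inception_distance(real, shifted_distribution))  # expected to be much larger
```

Because the estimator is unbiased, it can come out slightly negative for two samples from the same distribution, which is one reason it behaves better than FID on small image sets.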

List of GAN training tips and tricks based on experience with this repository.

Try it out in a Colab Notebook (good results take around 2 hours of training):

Cherry-picked 256x256 flowers (augmentation + residual connections + no transposed convolutions):

- 6000 training images

- 400 epoch training

- 64x64 resolution, cropped on bounding boxes

KID results (the lower the better):

| Loss / Architecture | Vanilla DCGAN | Spectral Norm. | Residual | Residual + Spectral Norm. |
|---|---|---|---|---|
| Non-saturating GAN | 0.087 | 0.184 | 0.479 | 0.533 |
| Least Squares GAN | 0.114 | 0.153 | 0.312 | 0.361 |
| Hinge GAN | 0.123 | 0.238 | 0.165 | 0.304 |
| Wasserstein GAN | * | 1.066 | * | 0.679 |

Images generated by a vanilla DCGAN + non-saturating loss:

- 6000 training images (70% of every split)

- 500 epoch training

- 64x64 resolution, center cropped

KID results (the lower the better):

| Loss / Architecture | Vanilla DCGAN | Spectral Norm. | Residual | Residual + Spectral Norm. |
|---|---|---|---|---|
| Non-saturating GAN | 0.080 | 0.083 | 0.094 | 0.139 |
| Least Squares GAN | 0.104 | 0.092 | 0.110 | 0.131 |
| Hinge GAN | 0.090 | 0.087 | 0.101 | 0.099 |
| Wasserstein GAN | * | 0.165 | * | 0.107 |

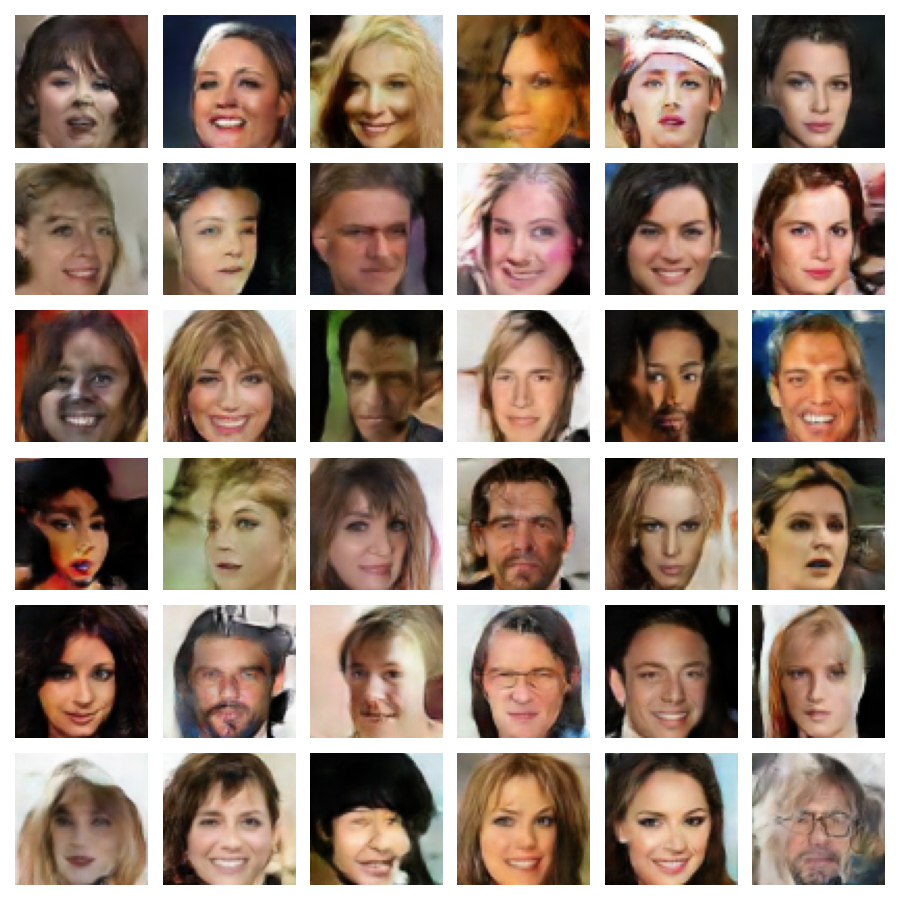

Images generated by a vanilla DCGAN + non-saturating loss:

- 160,000 training images

- 25 epoch training

- 64x64 resolution, center cropped

KID results (the lower the better):

| Loss / Architecture | Vanilla DCGAN | Spectral Norm. | Residual | Residual + Spectral Norm. |
|---|---|---|---|---|
| Non-saturating GAN | 0.015 | 0.044 | 0.016 | 0.036 |
| Least Squares GAN | 0.017 | 0.036 | 0.014 | 0.036 |
| Hinge GAN | 0.015 | 0.041 | 0.020 | 0.036 |
| Wasserstein GAN | * | 0.058 | * | 0.061 |

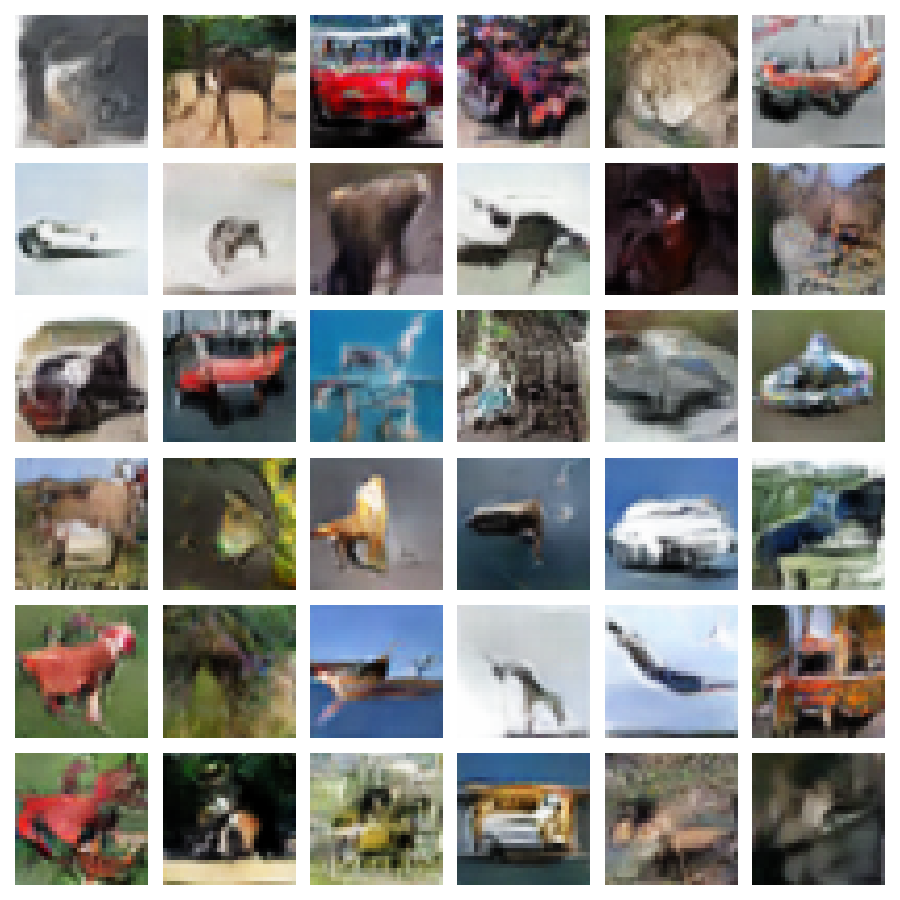

Images generated by a vanilla DCGAN + non-saturating loss:

- 50,000 training images

- 100 epoch training

- 32x32 resolution

KID results (the lower the better):

| Loss / Architecture | Vanilla DCGAN | Spectral Norm. | Residual | Residual + Spectral Norm. |
|---|---|---|---|---|
| Non-saturating GAN | 0.081 | 0.117 | 0.088 | 0.127 |
| Least Squares GAN | 0.081 | 0.107 | 0.089 | 0.109 |
| Hinge GAN | 0.084 | 0.109 | 0.092 | 0.121 |
| Wasserstein GAN | * | 0.213 | * | 0.213 |

Images generated by a vanilla DCGAN + non-saturating loss:

*In theory, Wasserstein GANs require Lipschitz-constrained discriminators, so they are only evaluated with architectures that use spectral normalization in their discriminators.
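Spectral normalization, which provides the Lipschitz constraint mentioned above, divides each weight matrix by an estimate of its largest singular value. The following is a minimal NumPy sketch of the power-iteration estimate, an illustration rather than the layer used in this repository; the function name and its parameters are hypothetical. Practical implementations reshape convolution kernels to 2D, keep the vector `u` between training steps and run a single iteration per step.

```python
import numpy as np


def spectral_normalize(weight: np.ndarray, num_iterations: int = 20,
                       seed: int = 0) -> np.ndarray:
    """Divide a 2D weight matrix by a power-iteration estimate of its spectral norm."""
    rng = np.random.default_rng(seed)
    u = rng.normal(size=weight.shape[0])
    for _ in range(num_iterations):
        # Alternating multiplications by W^T and W converge to the top
        # right (v) and left (u) singular vectors.
        v = weight.T @ u
        v /= np.linalg.norm(v)
        u = weight @ v
        u /= np.linalg.norm(u)
    sigma = u @ weight @ v  # estimate of the largest singular value ||W||_2
    return weight / sigma
```

After normalization each linear layer is (approximately) 1-Lipschitz, so a discriminator built from such layers and 1-Lipschitz activations such as ReLU or LeakyReLU is Lipschitz-constrained as well.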

After comparing GAN losses across architectures and datasets, I found my results to be in line with those of the *Are GANs Created Equal?* study: no loss consistently outperforms the non-saturating loss. The training dynamics show similar stability, and the generation quality is also similar across the losses. Wasserstein GANs seem to underperform the others, though the hyperparameters used might be suboptimal for them, as they mostly follow the DCGAN paper. I recommend using the non-saturating loss as a default.
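For reference, the four compared losses can be written in terms of raw discriminator outputs (logits, or critic scores for the Wasserstein case). This NumPy sketch follows the standard formulations from the literature; the versions in losses.py may differ in details such as scaling constants, and the function names here are illustrative.

```python
import numpy as np


def softplus(x: np.ndarray) -> np.ndarray:
    # Numerically stable log(1 + exp(x)).
    return np.logaddexp(0.0, x)


# Each function takes discriminator outputs on real and generated images
# and returns (generator_loss, discriminator_loss).

def non_saturating_loss(real_logits, fake_logits):
    # Discriminator: binary cross-entropy with labels real=1, fake=0.
    d_loss = np.mean(softplus(-real_logits)) + np.mean(softplus(fake_logits))
    # Generator: maximize log D(G(z)) instead of minimizing log(1 - D(G(z))).
    g_loss = np.mean(softplus(-fake_logits))
    return float(g_loss), float(d_loss)


def least_squares_loss(real_logits, fake_logits):
    # Regress real outputs towards 1 and fake outputs towards 0.
    d_loss = np.mean((real_logits - 1.0) ** 2) + np.mean(fake_logits ** 2)
    g_loss = np.mean((fake_logits - 1.0) ** 2)
    return float(g_loss), float(d_loss)


def hinge_loss(real_logits, fake_logits):
    # Only outputs inside the margin (real < 1, fake > -1) are penalized.
    d_loss = (np.mean(np.maximum(0.0, 1.0 - real_logits))
              + np.mean(np.maximum(0.0, 1.0 + fake_logits)))
    g_loss = -np.mean(fake_logits)
    return float(g_loss), float(d_loss)


def wasserstein_loss(real_logits, fake_logits):
    # The critic must be Lipschitz-constrained, e.g. by spectral normalization.
    d_loss = np.mean(fake_logits) - np.mean(real_logits)
    g_loss = -np.mean(fake_logits)
    return float(g_loss), float(d_loss)
```

Since the losses differ only in how the discriminator outputs are penalized, switching between them leaves the rest of the training loop unchanged.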

In this implementation, RandomFlip, RandomTranslation, RandomRotation and RandomZoom are used for image augmentation when applying Adaptive Discriminator Augmentation, because the ADA paper shows these "pixel blitting" and geometric augmentations to be the most useful (see figures 4a and 4b). Other augmentations can be added as well, for example using the custom Keras augmentation layers of this repository, which implements color jitter, additive Gaussian noise and random resized crop, among others, in a differentiable and GPU-compatible manner.
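The adaptive part of ADA can be sketched as follows: each image is augmented with probability `p`, and `p` is nudged after every step according to the discriminator's accuracy on real images. This is a hypothetical NumPy sketch, not the repository's implementation: the `AdaptiveAugmenter` class, the target accuracy of 0.85 and the 1000-step integration constant are assumptions, and a horizontal flip stands in for the full RandomFlip/RandomTranslation/RandomRotation/RandomZoom pipeline.

```python
import numpy as np


class AdaptiveAugmenter:
    """Hypothetical ADA-style augmenter; names and defaults are assumptions."""

    def __init__(self, target_accuracy: float = 0.85,
                 integration_steps: int = 1000, seed: int = 0):
        self.probability = 0.0  # current augmentation probability p
        self.target_accuracy = target_accuracy
        self.integration_steps = integration_steps
        self.rng = np.random.default_rng(seed)

    def augment(self, images: np.ndarray) -> np.ndarray:
        """Augment each image of an (N, H, W, C) batch with probability p.

        Apply this to both real and generated images before the discriminator.
        A horizontal flip stands in for the full augmentation pipeline.
        """
        augmented = images[:, :, ::-1, :]
        mask = self.rng.random(images.shape[0]) < self.probability
        return np.where(mask[:, None, None, None], augmented, images)

    def update(self, real_logits: np.ndarray) -> None:
        """Raise p if the discriminator is too accurate on real images, else lower it."""
        accuracy = np.mean(real_logits > 0.0)  # assumes logit > 0 means "real"
        accuracy_error = accuracy - self.target_accuracy
        self.probability = float(np.clip(
            self.probability + accuracy_error / self.integration_steps, 0.0, 1.0))
```

Because `p` changes by at most `1 / integration_steps` per step, the augmentation strength ramps up gradually as the discriminator starts to overfit the limited training data, instead of reacting to the noise of individual batches.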

For a similar implementation of denoising diffusion models, check out this repository.