The repository implements a reinforcement learning algorithm that addresses the problem of instructing RL agents to learn temporally extended goals in multi-task environments. The project is based on the idea introduced in LTL2Action: Generalizing LTL Instructions for Multi-Task RL (Vaezipoor et al. 2021).

More details can be found in the Report.

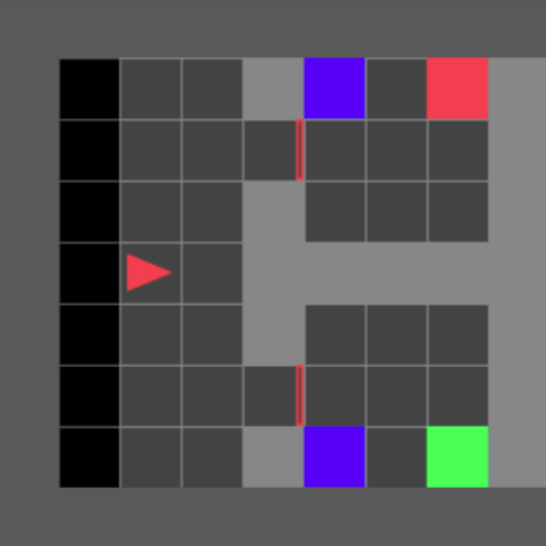

The environment is implemented with gym-minigrid: an agent (red triangle) must navigate a 7x7 map containing walls (grey squares), goals (colored squares), which are shuffled at each episode, and doors (blue rectangles). The available actions are move forward, turn left and turn right; each observation consists of the 7x7 colored grid together with the agent's orientation, encoded as an integer.
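To make the action and observation description concrete, the sketch below models the three actions and the integer orientation on a toy grid. It assumes gym-minigrid's direction convention (0 = east, turning right increments the index) and uses hypothetical names (`AgentState`, `step`, `detect_events`, and the propositions `"b"`, `"r"`, `"g"` for the blue, red and green goals). It is an illustration only, not the repository's environment, which builds on gym-minigrid itself; `detect_events` plays the role of the event detector described below.

```python
from dataclasses import dataclass

# Hypothetical action ids, following the gym-minigrid ordering (left, right, forward).
LEFT, RIGHT, FORWARD = 0, 1, 2

# Orientation integer -> (dx, dy): 0 = east, 1 = south, 2 = west, 3 = north
# (y grows downwards, as in gym-minigrid).
DIR_TO_VEC = [(1, 0), (0, 1), (-1, 0), (0, -1)]

# Hypothetical mapping from goal colours to atomic propositions.
PROP_OF_COLOR = {"blue": "b", "red": "r", "green": "g"}


@dataclass(frozen=True)
class AgentState:
    x: int
    y: int
    direction: int  # orientation encoded as an integer in {0, 1, 2, 3}


def step(grid, state, action):
    """Apply one action on a toy grid of cell labels such as "wall" or "blue"."""
    if action == LEFT:
        return AgentState(state.x, state.y, (state.direction - 1) % 4)
    if action == RIGHT:
        return AgentState(state.x, state.y, (state.direction + 1) % 4)
    if action == FORWARD:
        dx, dy = DIR_TO_VEC[state.direction]
        nx, ny = state.x + dx, state.y + dy
        inside = 0 <= ny < len(grid) and 0 <= nx < len(grid[0])
        # Walls and the map border block movement; every other cell
        # (including doors, by assumption) can be entered.
        if not inside or grid[ny][nx] == "wall":
            return state
        return AgentState(nx, ny, state.direction)
    raise ValueError(f"unknown action {action}")


def detect_events(grid, state):
    """Toy event detector: propositions that hold at the agent's current cell."""
    cell = grid[state.y][state.x]
    return {PROP_OF_COLOR[cell]} if cell in PROP_OF_COLOR else set()
```

For example, on the grid `[["empty", "empty", "blue"], ["empty", "wall", "green"]]`, an agent starting at `(0, 0)` facing east reaches the blue square after two `FORWARD` steps, and `detect_events` then returns `{"b"}`.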

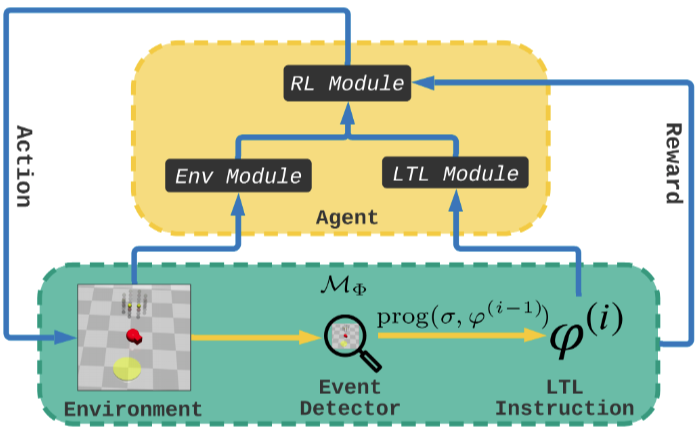

We implemented an RL framework with LTL instructions that learns to solve complex tasks (formalized in LTL) in challenging environments. At every step, the RL agent partially observes its surroundings, and an event detector returns a truth assignment, i.e. the set of propositions that currently hold. This assignment is used to progress the LTL instruction through a progression function, which yields the part of the task that still remains to be accomplished. The overall method therefore relies on two feature extractors, one for the environment observation and one for the LTL instruction, whose outputs are combined to form the input of a standard RL algorithm (PPO).
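The progression step can be sketched in a few lines of Python. This is a minimal sketch assuming that formulas are nested tuples in prefix form (operator first), that atomic propositions are strings and that true/false are Python booleans; the operator names and the `progress` function are hypothetical. The rules are the standard LTL progression rules, as used for instance by Icarte et al. (2018), and not necessarily the repository's exact code.

```python
# Formulas are nested tuples in prefix form, e.g. ("until", ("not", "r"), "g");
# atomic propositions are strings and True/False are Python booleans.


def _not(a):
    # Simplify negation of constants, otherwise build a "not" node.
    if isinstance(a, bool):
        return not a
    return ("not", a)


def _and(a, b):
    # Conjunction with constant simplification.
    if a is False or b is False:
        return False
    if a is True:
        return b
    if b is True:
        return a
    return ("and", a, b)


def _or(a, b):
    # Disjunction with constant simplification.
    if a is True or b is True:
        return True
    if a is False:
        return b
    if b is False:
        return a
    return ("or", a, b)


def progress(formula, events):
    """Progress an LTL formula through one truth assignment (set of true propositions)."""
    if isinstance(formula, bool):
        return formula
    if isinstance(formula, str):  # atomic proposition
        return formula in events
    op, *args = formula
    if op == "not":
        return _not(progress(args[0], events))
    if op == "and":
        return _and(progress(args[0], events), progress(args[1], events))
    if op == "or":
        return _or(progress(args[0], events), progress(args[1], events))
    if op == "next":  # X a  ->  a
        return args[0]
    if op == "until":  # a U b  ->  prog(b) or (prog(a) and a U b)
        return _or(progress(args[1], events),
                   _and(progress(args[0], events), formula))
    if op == "eventually":  # F a  ->  prog(a) or F a
        return _or(progress(args[0], events), formula)
    if op == "always":  # G a  ->  prog(a) and G a
        return _and(progress(args[0], events), formula)
    raise ValueError(f"unknown operator {op!r}")
```

Progressing to `True` means that the task has been accomplished, while `False` means that it can no longer be satisfied: for example, progressing the avoidance task `("until", ("not", "r"), "g")` through `{"r"}` yields `False`.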

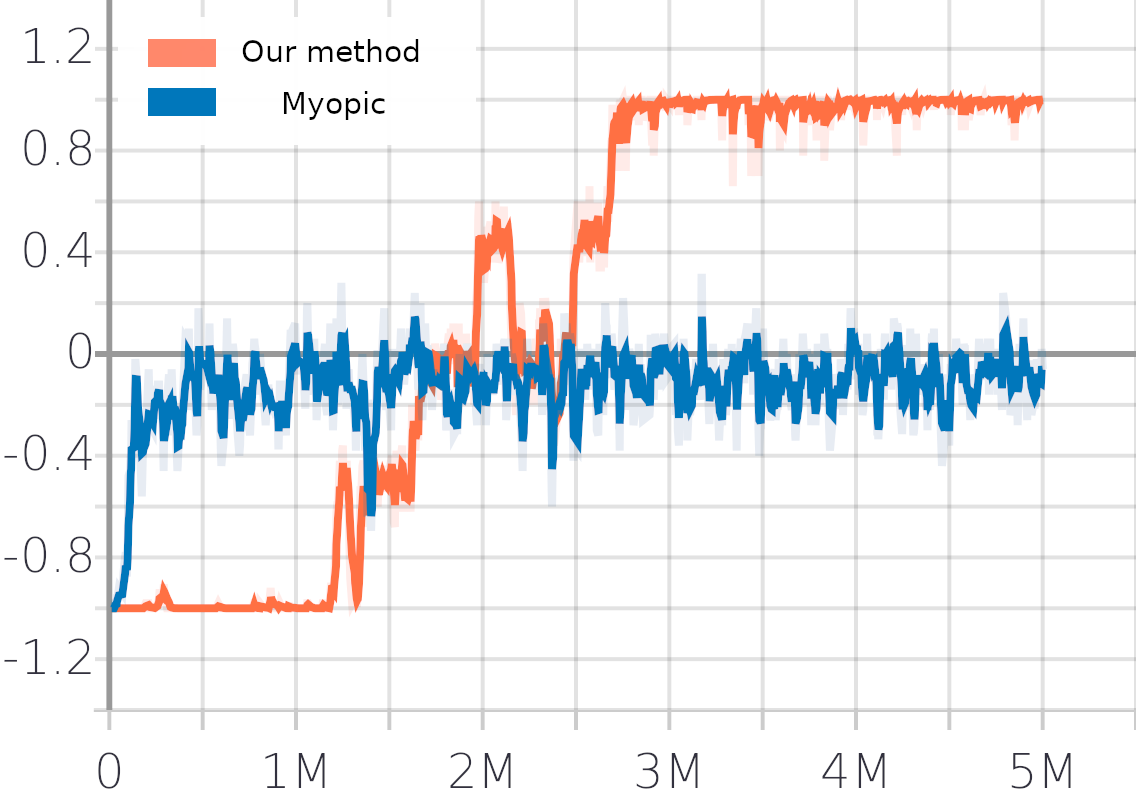

The method solves multi-task environments with a high success rate. To make the agent generalize over task formulas, each episode is described by a different LTL task sampled from a finite set. In the following plot, a standard PPO agent and the proposed agent are trained on the same environment. The two tasks considered are:

- go to blue THEN go to red

- go to blue THEN go to green

A myopic agent reaches a success rate of 50%: since it cannot "see" which goal follows the blue one, it reaches the correct second goal only half of the time.
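The 50% figure follows from a short calculation. Assume, for illustration, that the two tasks are sampled with equal probability, that an episode succeeds only if the first goal reached after blue is the correct one, and that a myopic policy, unable to tell the tasks apart, heads for red with some probability $q$ regardless of the task:

$$
P(\text{success}) = \tfrac{1}{2}\,q + \tfrac{1}{2}\,(1-q) = \tfrac{1}{2}
$$

The result does not depend on $q$: under these assumptions, no task-agnostic choice of the second goal can do better than chance.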

The agent is trained on a variety of LTL tasks, such as partially ordered tasks and avoidance tasks. In the gif below, the agent performs the task "eventually go to the blue square and then go to the green square".
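For illustration, the two task families could be generated as sketched below. The sketch assumes a tuple-based prefix encoding of formulas with the propositions `"b"`, `"r"`, `"g"` standing for the blue, red and green squares; the generator names (`sequence`, `partially_ordered`, `avoidance`) and their parameters are hypothetical, and the repository's actual task sampler may build different formulas.

```python
import random

PROPS = ["b", "r", "g"]  # hypothetical propositions for blue, red and green


def sequence(props):
    """F(p1 & F(p2 & ... F(pn))): visit the propositions in the given order."""
    formula = ("eventually", props[-1])
    for p in reversed(props[:-1]):
        formula = ("eventually", ("and", p, formula))
    return formula


def partially_ordered(rng, n_seqs=2, seq_len=2):
    """Conjunction of independent sequences: order is fixed only within each sequence."""
    seqs = [sequence(rng.sample(PROPS, seq_len)) for _ in range(n_seqs)]
    formula = seqs[0]
    for s in seqs[1:]:
        formula = ("and", formula, s)
    return formula


def avoidance(rng):
    """(not a) U b: reach b without touching a beforehand."""
    avoid, goal = rng.sample(PROPS, 2)
    return ("until", ("not", avoid), goal)
```

With this encoding, `sequence(["b", "g"])` produces the formula for "eventually go to the blue square and then go to the green square".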

In the second example video, the agent executes a sequence of partially ordered tasks, displayed in the bottom part of the frame, which also shows the progression mechanism: when the task is accomplished, the LTL formula progresses to true. Note that LTL formulae are represented in prefix notation, using tokens for operators and propositions and brackets to delimit sub-formulas.
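The prefix representation can be turned into a flat token sequence, for instance to feed the LTL feature extractor, as sketched below. The vocabulary, the bracket tokens and the function names `to_tokens` and `encode` are assumptions made for this example, not the repository's tokenizer.

```python
# Hypothetical token vocabulary: brackets, operators, constants and propositions.
VOCAB = ["(", ")", "not", "and", "or", "next", "until", "eventually", "always",
         "True", "False", "b", "r", "g"]
TOKEN_ID = {tok: i for i, tok in enumerate(VOCAB)}


def to_tokens(formula):
    """Flatten a nested prefix formula into tokens, using brackets for nesting."""
    if isinstance(formula, bool):
        return [str(formula)]
    if isinstance(formula, str):  # atomic proposition
        return [formula]
    op, *args = formula
    tokens = ["(", op]
    for arg in args:
        tokens += to_tokens(arg)
    tokens.append(")")
    return tokens


def encode(formula):
    """Map tokens to integer ids, e.g. as input to an embedding layer."""
    return [TOKEN_ID[t] for t in to_tokens(formula)]
```

For the task "eventually go to blue and then go to green", `to_tokens` yields `( eventually ( and b ( eventually g ) ) )`.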

or, for better compatibility, the following command can be used:

```bash
conda env create -f environment.yml
```

Training:

```bash
python train.py
```

Testing:

```bash
python test.py
```

- Vaezipoor, Pashootan, Li, Andrew, Icarte, Rodrigo Toro, and McIlraith, Sheila (2021). “LTL2Action: Generalizing LTL Instructions for Multi-Task RL”. In: International Conference on Machine Learning (ICML).

- Icarte, Rodrigo Toro, Klassen, Toryn Q., Valenzano, Richard Anthony, and McIlraith, Sheila A. (2018). “Teaching Multiple Tasks to an RL Agent using LTL”. In: International Conference on Autonomous Agents and Multiagent Systems (AAMAS), pp. 452–461.